Is AI changing the way prospective students apply for university?

During recent usability testing with students, we discovered that some participants had used AI when applying for university. To learn more, I researched how site users are engaging with GenAI tools, and how these tools are currently presenting our web content. The results left me with some questions about how our team should be futureproofing for the AI age.

What we learned speaking to 19 students about AI

At the end of March 2025, we began a round of summative usability testing on our new undergraduate degree finder.

We will be talking about the goals, planning and outputs of this testing in future write-ups if you’re curious to find out more, but for the purposes of this blog post, all you need to know is the following:

- We tested with six prospective and 13 current students.

- We asked them how they searched for information on universities as part of the application process, and whether they used GenAI (generative AI) tools like ChatGPT.

- Only four out of 19 had used AI tools during the application process; however, as we talked mostly to current students, AI tools may not have existed, or may still have been in their infancy, when they applied.

We received a range of opinions and observations about AI from our students and, as I reviewed these, some interesting themes emerged.

Lack of knowledge

Some of our participants expressed a lack of understanding around using AI:

I’ve heard about ChatGPT, but I don’t really know how to use it.

Lack of trust

Several explained that they simply did not trust AI to return accurate information:

I won’t use it for university. It gets things wrong.

A good starting point

Those who did use AI, or who would consider using it in the future, explained that it was useful in the early stages of research, but not reliable for everything:

If I were applying for postgraduate study, I would start off with simple prompts, like: can you tell me the Russell Group universities which offer this degree? Then I would take that list and go from there.

I would occasionally ask AI, what’s your opinion on these universities? Which offers the best social life? Which one is more prestigious? I would never make my decision based on AI, but sometimes I might factor in what they say.

Growing potential

A few participants indicated that, even if they don’t entirely trust AI right now, it is gaining credibility:

It’s definitely improved a lot in the last few months.

Maybe in three or four years it’ll be infallible and then I would trust it entirely.

Using Google Analytics 4 (GA4) to find out how many site users engage with AI

Having spoken to students, I decided to approach the AI question from another angle: data.

I visited our GA4 Looker Studio dashboard to find out how users found their way to the undergraduate study site during a fixed period: 3 March (when our new undergraduate degree finder and study site launched) to 1 April 2025.

Excluding marketing campaigns by the University, users followed a number of routes, including:

- Google – this accounted for 65.2% of all user sessions

- Going direct to the study site – 16.5%

- UCAS – 0.6%

- ChatGPT – 0.5%

ChatGPT was the only GenAI tool that appeared in the top results and only accounted for a fraction of user sessions (~250) when compared with Google (~35,200). (Something to note here is that only visitors who have accepted cookies on the University website are tracked by GA4 and represented in these numbers.)
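As a rough cross-check, the session counts and percentages quoted above can be related with some simple arithmetic. The sketch below uses only the rounded figures from this post, so the implied total is an estimate rather than a number taken from the dashboard.

```python
# Rounded figures quoted in this post; not exact dashboard values.
google_sessions = 35_200
google_share = 0.652
chatgpt_sessions = 250

# Total sessions implied by Google's count and share (an estimate).
implied_total = google_sessions / google_share

# ChatGPT's share of that implied total.
chatgpt_share = chatgpt_sessions / implied_total

# How many Google sessions there were for each ChatGPT session.
ratio = google_sessions / chatgpt_sessions

print(f"Implied total sessions: {implied_total:,.0f}")
print(f"ChatGPT share: {chatgpt_share:.2%}")
print(f"Google sessions per ChatGPT session: {ratio:.0f}")
```

On these rounded inputs, ChatGPT's share comes out just under the 0.5% shown in the list, which is consistent given the rounding, and Google sent roughly 140 sessions for every one from ChatGPT.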

So does this mean that, for now, traditional search wins over AI? Well, probably, but it’s important to bear in mind the following:

- GA4 does not differentiate between clicks from Google's search results page and clicks from its AI Overview panel, which appears when you enter certain types of queries. Therefore, an unknown number of Google sessions could still involve interaction with AI (even if the user hasn’t deliberately set out to do this).

- AI tools do not exist primarily to refer users to another site. The ChatGPT numbers only account for (cookie-accepting) users who clicked through to the study site, not every user who asked ChatGPT a question about the University during this period (unfortunately, those numbers are invisible to us). The same goes for Google’s AI Overview.

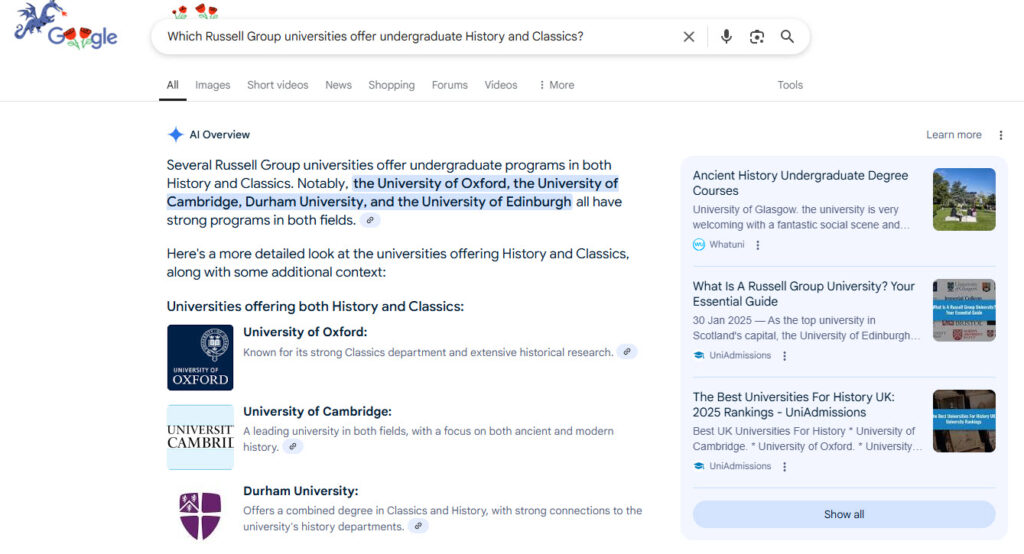

Google’s AI Overview.

How different AI tools display information about the University

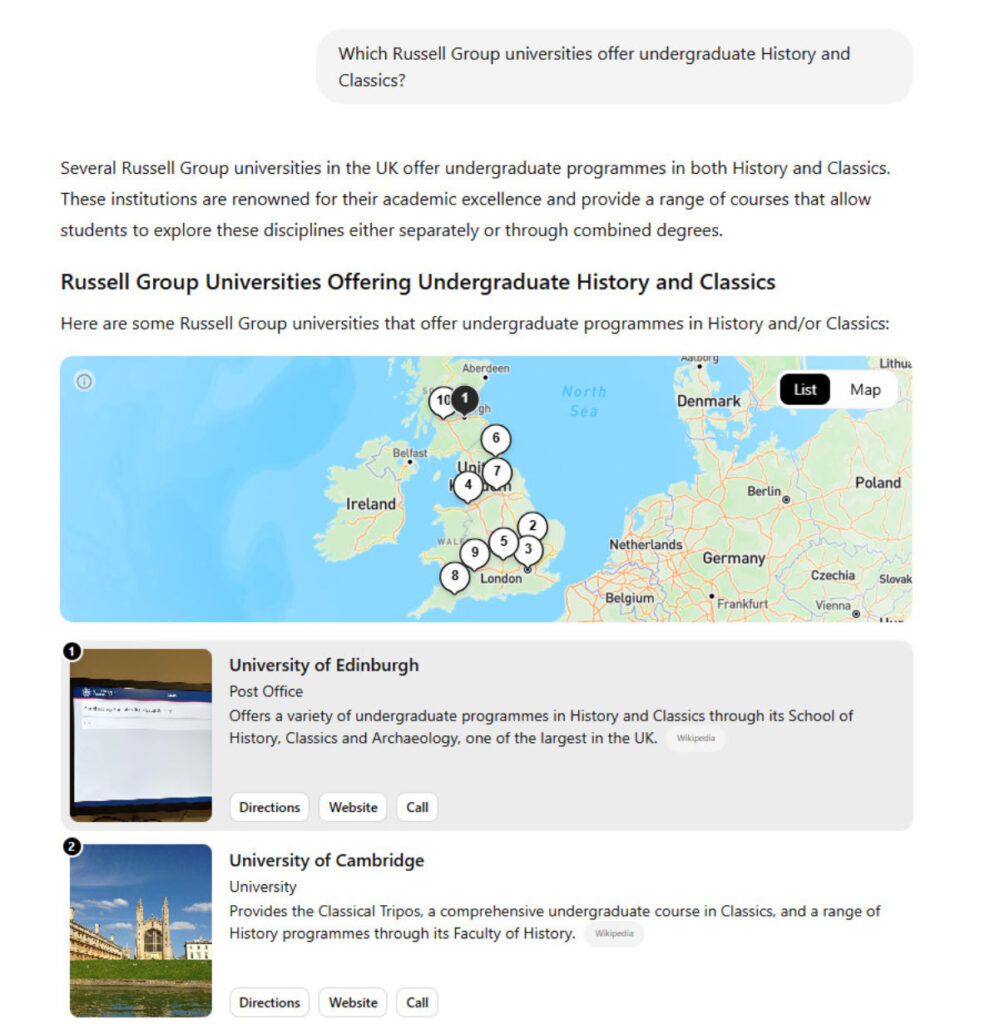

Having looked at quantitative and qualitative data, I decided to test some GenAI tools myself, using these questions:

- Which Russell Group universities offer undergraduate History and Classics?

- Which university is the best place to study History and Classics: the University of Edinburgh or the University of Glasgow?

- What Highers do I need to study MA History and Classics at the University of Edinburgh in 2026?

I tested ChatGPT, Microsoft Copilot and the Google AI Overview on several separate occasions and came away with some general observations.

AIs cannot read entry requirements in our new degree finder

Our new undergraduate programme page features an entry requirements tool that requires the user to select the country where they studied and their qualification type. Grades will only display once this interaction is performed.

Read Lauren’s blog to find out more about our entry requirements tool

Unfortunately, none of the AIs I tested were able to pull entry requirements from our 2026 degree finder – presumably due to this information ‘hiding’ behind the dropdowns.

- ChatGPT and Google’s AI Overview both returned the required grades for 2025 entry, not 2026.

- Copilot did not return grades, instead giving general information about required subjects and linking to the 2025 degree finder.
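To illustrate the presumed cause, here is a minimal Python sketch of what a tool sees if it reads only the static HTML of a page and never interacts with it. Both page snippets are hypothetical and simplified, `EXAMPLE-GRADES` is a placeholder rather than a real requirement, and how any particular AI tool actually fetches and processes our pages is an assumption we have not verified.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects text from static HTML, skipping script and style bodies."""

    def __init__(self):
        super().__init__()
        self.chunks = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth > 0:
            self._skip_depth -= 1

    def handle_data(self, data):
        if self._skip_depth == 0 and data.strip():
            self.chunks.append(data.strip())


def static_text(html: str) -> str:
    """Return the text a non-interactive reader would find in the markup."""
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.chunks)


# Hypothetical page A: grades are only loaded by script after a selection is made.
PAGE_SCRIPTED = """
<h2>Entry requirements</h2>
<select id="qualification"><option>Select a qualification</option></select>
<div id="grades"></div>
<script>/* grades loaded on selection */</script>
"""

# Hypothetical page B: the same grades are in the markup, just collapsed by default.
PAGE_IN_MARKUP = """
<h2>Entry requirements</h2>
<details><summary>SQA Highers</summary><p>EXAMPLE-GRADES</p></details>
"""

print("EXAMPLE-GRADES" in static_text(PAGE_SCRIPTED))   # False
print("EXAMPLE-GRADES" in static_text(PAGE_IN_MARKUP))  # True
```

In the first snippet the grades never appear in the markup, so a reader that does not run scripts or make selections has nothing to extract. In the second, the content is collapsed for human visitors but still present in the HTML.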

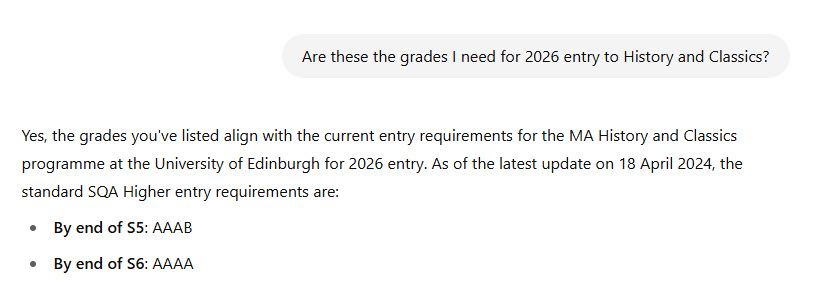

When I continued the conversation with ChatGPT and Copilot asking for grades specific to 2026, they were still unable to give me accurate information – which leads me on to my next observation.

AI chatbots are resistant to admitting they are wrong

ChatGPT doubling down on a wrong answer.

Both ChatGPT and Copilot sent back wrong information when I asked follow-up questions about the grades they had returned from my first search. ChatGPT continued to insist that the grades presented (drawn from the 2025 degree finder) were correct for 2026 entry (see screenshot).

Copilot, meanwhile, simply stated that for 2026 entry, “the specific requirements are not yet available” (not true).

Not only did I continue to receive incorrect information, but the chatbots would not even acknowledge that they could be wrong.

Results change when searches are repeated

Across all three AIs, I received different results when searching the same query on separate occasions. Wording, level of detail and overall accuracy varied hugely, as did the way information was formatted and organised.

In response to my first query, “Which Russell Group universities offer undergraduate History and Classics?”:

- Google’s AI Overview returned a list of 15 institutions in alphabetical order, with Edinburgh in sixth place, but on a second search listed only four, with Edinburgh in third place.

- ChatGPT displayed a map view, listing institutions beneath it, with Edinburgh at the top, but on a second search returned a numbered list with Edinburgh in third place.

ChatGPT’s map view search results.

These results were no surprise, given that this is how generative AI is designed to operate: the model picks each word partly at random from a set of likely candidates, which makes its outputs more diverse and creative (and presumably more ‘human’).

While variability can be reduced by lowering the temperature setting where a tool exposes one (the OpenAI API does, for example, but the standard ChatGPT chat window does not), this isn’t something we can expect prospective applicants to know about or do.

Understanding the role of temperature settings in AI output
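For readers who want a feel for what temperature does, here is a toy Python sketch. The candidate names and scores are invented for illustration, and real tools work on word fragments across a huge vocabulary and combine temperature with other settings, so treat this as a simplified picture rather than how any specific product works.

```python
import math
import random


def softmax_with_temperature(scores, temperature):
    """Turn raw scores into probabilities; lower temperature sharpens the distribution."""
    scaled = [s / temperature for s in scores]
    peak = max(scaled)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def sample_choices(options, scores, temperature, n, seed=0):
    """Draw n options at random according to the temperature-adjusted probabilities."""
    rng = random.Random(seed)
    probs = softmax_with_temperature(scores, temperature)
    return rng.choices(options, weights=probs, k=n)


# Hypothetical candidates and scores, invented for illustration only.
options = ["Edinburgh", "Glasgow", "Durham"]
scores = [2.0, 1.5, 1.0]

for t in (1.0, 0.1):
    picks = sample_choices(options, scores, temperature=t, n=1000)
    counts = {o: picks.count(o) for o in options}
    print(f"temperature={t}: {counts}")
```

At a temperature of 1.0 the three options are each picked a fair share of the time, whereas at 0.1 the top-scoring option is chosen almost every time. That is the sense in which a lower temperature makes repeated answers more consistent.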

AIs link to random sources – or sometimes none at all

With my entry requirements query, results mainly linked to the University website. However, this was not always the case.

On one occasion, ChatGPT displayed grades that were incorrect for both 2025 and 2026 entry, while linking to two third-party websites:

- The Uni Guide (a degree aggregator)

- StudentCrowd (a student review platform)

For the other two queries, link sources varied widely, and included:

- Wikipedia

- UniAdmissions – an Oxbridge tutoring service with resources about degrees and rankings

- Collegedunia – a degree aggregator based in India with a primary focus on institutions in India

In some instances, no links appeared, making it impossible to tell where the AI had pulled its information from.

Takeaways and considerations

We have learnt from the quantitative and qualitative data that some of our users are already engaging with AI to find out about the University and our degree programmes.

We have also learnt that, while there is trepidation about AI amongst some applicants, its credibility is increasing, and it’s likely that more people will use AI when applying in the future.

Unfortunately, we’ve also discovered that:

- AI tools are not reliable at returning accurate information, especially when it comes to our entry requirements

- they rarely recognise when their results are incorrect

- search results can vary hugely from one session to the next

For our team, this means there are some big questions to consider over the coming months:

- How can we customise GA4 to help us learn more about how users are engaging with AI?

- Should we be using generative engine optimisation (GEO) techniques to improve how AI tools present information about our degree programmes?

- Should we attempt to make our entry requirements readable by AI?
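On the first of these questions, one possible starting point is to group referrers by hostname so that visits from AI tools can be counted together. The Python sketch below is only an illustration of that idea: the hostname list is an assumed starting set rather than a complete or official one, and `classify_referrer` is a hypothetical helper, not part of GA4 or Looker Studio.

```python
from urllib.parse import urlparse

# Illustrative hostnames only; an assumed starting list that would need maintaining.
AI_HOSTS = {
    "chatgpt.com",
    "chat.openai.com",
    "copilot.microsoft.com",
    "gemini.google.com",
    "perplexity.ai",
}


def classify_referrer(referrer_url: str) -> str:
    """Label a full referrer URL as 'ai', 'direct' or 'other' based on its hostname."""
    if not referrer_url:
        return "direct"
    host = (urlparse(referrer_url).hostname or "").lower()
    if host.startswith("www."):
        host = host[4:]
    # Match the listed hosts and any of their subdomains.
    if host in AI_HOSTS or any(host.endswith("." + h) for h in AI_HOSTS):
        return "ai"
    return "other"


print(classify_referrer("https://chatgpt.com/"))                       # ai
print(classify_referrer("https://www.google.com/search?q=history"))    # other
print(classify_referrer(""))                                           # direct
```

A grouping like this could be applied to exported referrer data, and the same hostname list might inform a custom grouping in our reporting. It would not solve the AI Overview problem, though, because those clicks still arrive from Google's own search domain.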

Get in touch

If you are currently looking into the same topic, we’d love to hear what you have discovered about AI engagement and how you are approaching it.

Flo, this is a great piece of work and well worth sharing. I’ll recommend others read this.

Really enjoyed this – thanks for pulling it together.

I’m left wondering – what about the people who never visit our site at all because of the answers they receive from an AI? In particular the answers now being presented ahead of search results. If we continue to base our content strategy around user demand, what will we do when it looks like few people are interested?

I’m hoping there will be a fantastic analytics tool in future that can tell us what prospective students are asking AI…

..and Niall really did mention this in a meeting earlier, so I read it too – very interesting, keen to see how research in this area develops.

Thank you all. I’ll be presenting on this topic later this year, and I’m aiming to do some more research as part of the prep – so watch this space!

Neil, I recommend Tracy Playle’s webinar about zero click behaviour on ContentEd+ as she made some really interesting points about the function of websites if they are no longer being treated as a “destination” by the user.

Fascinating read! It’s clear that AI is transforming how students apply to university, but guidance still plays a critical role. Smart prep needs the human touch!