Usability testing: we’re testing the site, not you

In this post, I recap the experience of conducting online usability testing for our project with the Student Immigration Service (SIS) and share some lessons we learned about the importance of testing before a site goes live.

What is usability testing?

Usability testing lets us check whether our content designs have the desired effect on users.

In short, we need to make sure users can do what we intend them to do on a page, and testing shows us what works and what does not. To do this, we need to complete the following tasks:

- create prototype content on a website

- prepare a test script

- recruit participants

- conduct a pilot test

- run usability tests

- run a playback session

- revise the content based on the test findings

In this project, we tested our content with staff initially and then with current students who had been through the Student visa application process.

Test preparation

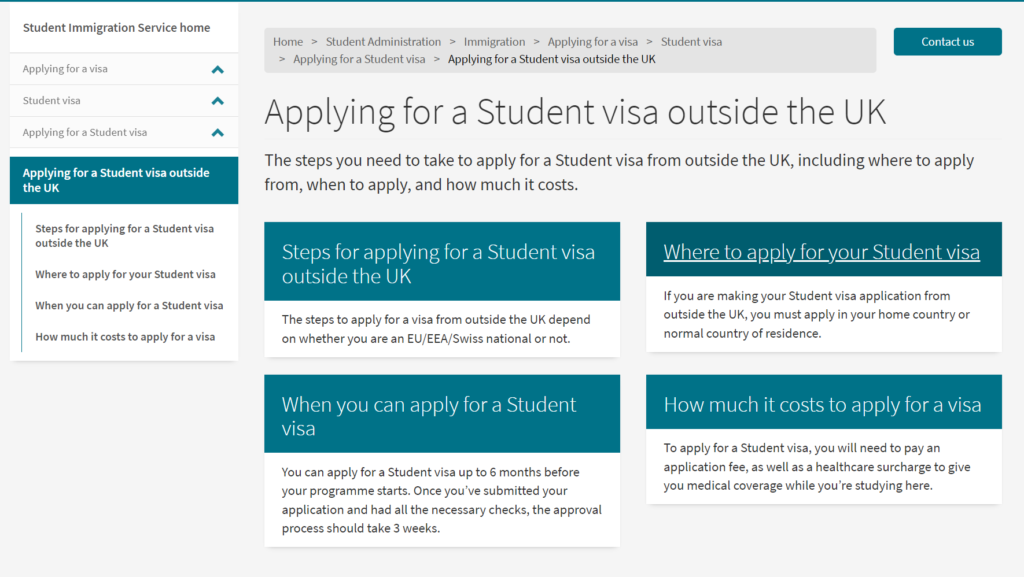

We didn’t have time to test all our content designs, so we prioritised the most important content areas. For example, we tested the journey of someone applying for a Student visa from outside the UK, as most students using the service will need this information before starting their visa application.

Screenshot of ‘Applying for a Student visa outside the UK’ page on the Student Immigration Service website

Writing a test script

We also wrote a script for the facilitator to use during each test, and devised a realistic scenario that would apply to most students using the service.

For example, in our final usability test with student participants, we went through the process of starting a visa application from outside the UK and asked participants what they would do, based on the information provided.

Overall, the script helped to improve the quality of the usability tests. We collaborated with the SIS team to make the scenarios we tested as realistic as possible.

The script also guided the facilitator throughout each test, so we didn’t have to memorise the whole scenario or the specific content we needed to test on each page.

It also reminded us what we expected users to do on each page, for example, correctly identify the timeline for receiving a CAS (Confirmation of Acceptance for Studies) number. Of course, we couldn’t anticipate every way users would respond to our content; nothing could prepare us for all the ways a user might behave when navigating the site.

Recruiting test participants

We aimed to test with at least 5 people, as this allows us to see trends and patterns in user behaviour. In other words, if several people in our sample struggled to do something, it was highly likely that other users would also struggle.

Reaching out to staff

We reached out to colleagues in other departments using our team’s mailing list, and circulated information about the project via internal Microsoft Teams channels. This let us tell the wider University community about the ongoing improvements to the Student Immigration Service and gave people who were interested in our work an opportunity to get involved.

A call-out for students

We sent a call-out for student participants via the Student Immigration Service and asked if anyone was interested in helping us improve the site.

We asked students to complete a form so we could contact them to arrange a test session. In the form, we gave a brief overview of the project and asked some basic questions about their Student visa experience. We also explained that students would receive a voucher payment for participating in a usability test.

The importance of a pilot test

We did a pilot test with a member of our team before testing with staff and students. This was an important part of the usability testing process as it served as a ‘dress rehearsal’ for the real event.

Firstly, it allowed us to review the script with a participant and check their comprehension of the tasks. If something was not clear enough, we could edit it before the ‘real’ tests began.

Secondly, it sometimes highlighted something that was not working. For example, a piece of text might not stand out enough because of its formatting, which we could fix with a minor tweak.

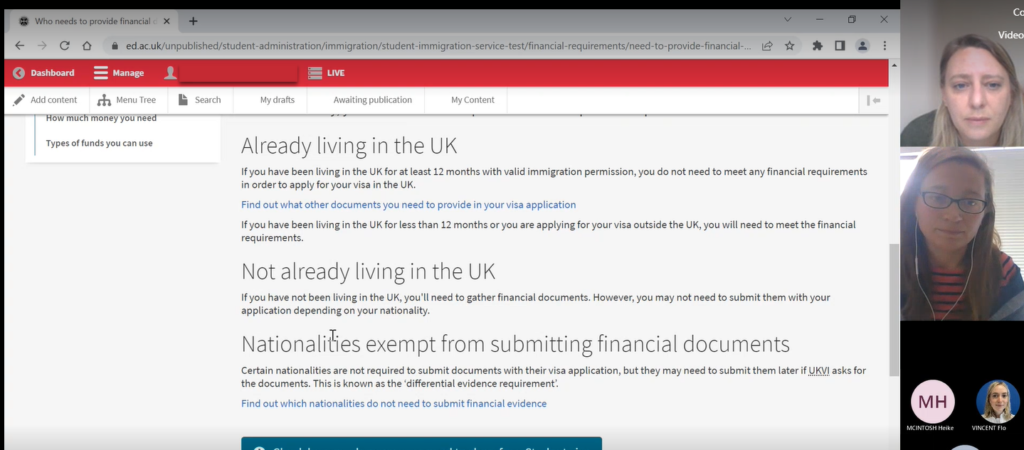

A screenshot from a pilot usability test we did online with a member of our team.

Running usability tests

Once we had refined the script and made any extra tweaks, such as formatting the text, we began testing our prototype content.

Test format

In each test, we followed the same format:

- one content designer would lead while another would take notes

- we ran these tests online using Microsoft Teams

- test participants would switch their cameras on so we could see their reactions

- we recorded the session with the participant’s consent

- each participant would share their screen once the test began

Giving instructions to the participant

At the beginning of each test, we clearly explained why we were running the test and reassured participants that it was not a test of their ability to use the site.

We always stressed: ‘we’re testing the site, not you’.

In our experience of running usability tests, we found that participants felt their performance was being assessed, so it was important to emphasise this point before each test began.

Working through user goals

For each test, we went through a series of ‘tasks’, but we tried to avoid using this word to make participants feel more comfortable. Instead, we asked participants how they would achieve a goal on the site.

We also asked them to think out loud as much as possible so we could understand what they were trying to do. In each test, we copied and pasted the scenario and task into the Teams chat so participants could refer to it throughout. This is a sample task we asked student participants to do:

‘Based on the information you see on this webpage, what is the first thing you would do to prepare for your visa application?’

Not all participants reacted in the same way to the task; some went a little off-piste, but this was okay.

These tests gave us insight into how real students interacted with our content and provided valuable lessons about what worked and what didn’t.

Finding gems to share with the SIS team

After we finished testing, we discussed what changes we needed to make to improve the content and selected the clips that best showcased a particular ‘pain point’, or issue, to share with the SIS team during our playback session.

We found sharing the recordings with the SIS team to be a powerful demonstration of what worked and what didn’t. The team could clearly see when a participant was confused or frustrated by the content. This evidence-based approach gave us extra confidence to support our content design decisions.

Making changes

After we finished our testing, we realised we needed to make some essential changes before we published the content.

You can read about some of the changes we made following our usability testing in Flo’s blog post.

Read Flo’s blog post on the approach to simplify complex content

What we learned for future testing

Recruiting students at the end of May

We found it most challenging to recruit participants towards the end of May as this was the end of the academic year and many students were less available.

We had to accept this as an unavoidable limitation of recruiting current students; it was beyond our control.

An opportunity to gain more skills

These usability tests were a great opportunity for us to develop as facilitators and continually improve our format for testing in the future. As a team, we have since reflected on our performance and you can read about what we learned in Lauren’s blog post.

Reviewing our usability facilitation skills blog post

The need to be reactive

We also learnt the importance of not following the script too closely. Personally, I have learnt that usability testing is like a live performance: you can prepare for it, but sometimes things will not go to plan and you need to be able to respond accordingly.

Test participants might not respond or behave in the way you want or expect them to. But this is okay. We can’t expect a user to glide from point A to point B.

Overall, every test taught us something new about our content and about our target audience.

So, if you’re invited to participate in a usability test, remember: ‘we’re testing the site, not you’.

Learn more about this project

My team and I have written a series of blog posts about our project with the Student Immigration Service.
