An automated Editorial Style Guide? Experimenting with Drupal AI Automators

As part of a day of AI experimentation with Drupal specialists, we learned how to use automated technology to evaluate content against the University’s guidance on house writing style.

Drupal is the open-source content management system powering EdWeb 2 (and its predecessor, EdWeb). Collective efforts of the Drupal community, in particular the work of Jamie Abrahams, Marcus Johansson, Andrew Belcher and others from Drupal AI agency Freely Give, have enabled AI capabilities to be integrated into Drupal.

Spending a day with the team at Freely Give, we were keen to explore options to apply Drupal AI to the context of web publishing at the University, to address some of the difficulties we face in publishing and managing content for University websites.

Read more about Drupal AI and our AI day in my separate blog posts:

Bringing AI innovation to University web content management – a day of Drupal AI exploration

Can AI help or hinder search? Trials with Drupal AI-boosted search and AI Assistants

Could AI help apply the rules of the Editorial Style Guide?

The University’s Editorial Style Guide, which sets out rules and guidance for writing on behalf of the University, is published online and is also available as a PDF download for staff to consult when preparing digital content. Previous research has revealed that people editing University websites often forget to consult the guide, or are unsure where to find it. Drupal AI Automator functionality offered a potential new way to address this difficulty, by assessing content against the style guide rules within the editorial interface. We took an experimental approach to try this out in practice.

Using an AI Automator to enable evaluation of the content on a page

The first step was to create a place where evaluation of content could happen within the editorial interface. To achieve this we added a new AI Automator field to the existing fields of a generic page content type. This field contained information about how the Automator would work, and a way to display the results of the evaluation on the page a person was working on.

Selecting rules from the Editorial Style Guide to test the concept

The Editorial Style Guide contains lots of guidance and rules, so in the spirit of controlled experimentation we decided to start small, to see whether we could set up an AI Automator to check content against a selected style guide rule. During the day we used a made-up example to test the proof of concept. Afterwards, I chose two rules from the numbers section of the style guide to try out:

- The rule on writing numbers from zero to ten in words

- The rule on using numerals for numbers greater than 10

Read the Editorial Style Guide rules on formatting numbers

Writing a prompt for the AI Automator

Once the AI Automator was enabled, we needed a prompt describing the evaluation task it would perform on the content. To get the best results, the prompt had to be clear and unambiguous, giving the AI full instructions on how to evaluate the content and how to report the results, with examples for context. The prompt read as follows:

“You are a numbers style evaluator. I’d like you to take this content and evaluate how much it fits with the rules on numbers style.

Please evaluate out of 10 and gives reasons.

If they have written all the numbers in the right style only give them 10/10

If they use numbers differently to what is in the guide give them 0/10

## Numbers Style Guide ##

For numbers up to and including the number 10 write them in words. For numbers greater than 10, write them as numerals

## Examples ##

There were five students on the research team.

We will delete the records after 11 years.”
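Because the prompt is built from distinct parts (a role, scoring instructions, the rule text and examples), it could in principle be generated from a structured list of rules, which would make it easier to extend with further rules later. The Python sketch below illustrates that idea under stated assumptions: `StyleRule` and `build_evaluation_prompt` are hypothetical names invented for this illustration, not part of Drupal AI, and the wording paraphrases the prompt above rather than reproducing it exactly.

```python
from dataclasses import dataclass, field


@dataclass
class StyleRule:
    """One style guide rule plus example sentences that follow it (hypothetical structure)."""
    topic: str                                          # e.g. "numbers"
    text: str                                           # the rule as written in the guide
    examples: list[str] = field(default_factory=list)   # sentences that apply the rule


def build_evaluation_prompt(rule: StyleRule) -> str:
    """Assemble an evaluation prompt with the same four parts as the prompt above:
    a role, scoring instructions, the rule text and worked examples."""
    parts = [
        f"You are a {rule.topic} style evaluator. Evaluate how well this content "
        f"follows the rules on {rule.topic} style.",
        "Score it out of 10 and give reasons.",
        "If everything follows the rule, give 10/10. If anything breaks the rule, give 0/10.",
        f"## {rule.topic.capitalize()} Style Guide ##",
        rule.text,
        "## Examples ##",
        *rule.examples,
    ]
    # Blank lines between parts, matching the layout of the original prompt.
    return "\n\n".join(parts)


if __name__ == "__main__":
    numbers_rule = StyleRule(
        topic="numbers",
        text=("For numbers up to and including the number 10 write them in words. "
              "For numbers greater than 10, write them as numerals."),
        examples=[
            "There were five students on the research team.",
            "We will delete the records after 11 years.",
        ],
    )
    print(build_evaluation_prompt(numbers_rule))
```

Adding another rule would then mean adding another `StyleRule` with its own examples, rather than editing one long block of prompt text by hand.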

Testing with correct content and content with mistakes

With the prompt set, I published a couple of pages of content, some with numbers written correctly and some with mistakes, to test whether the Automator could tell the difference. In the content I tested, I was pleased to see that the AI Automator identified content that followed the style guide rules on numbers (awarding it 10/10) and content that did not (awarding it 0/10). As well as giving a score, the Automator explained its reasons, highlighting both correct applications of the rules and errors, as shown in the screenshots below.
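For comparison, these two number rules are precise enough to express as a simple rule-based check. The Python sketch below is not how the AI Automator works; `check_numbers` is a hypothetical function that applies the same all-or-nothing scoring as the prompt. It assumes digits from 0 to 10 should be written as words and that written-out number words above ten should be numerals, and it ignores context such as dates, times or page references, which is exactly where human judgement is still needed.

```python
import re

# Written-out number words that only occur in numbers greater than 10.
# Under the rule these should be numerals. Words for 0 to 10
# ("one", "five", "ten") are allowed, so they need no check.
LARGE_NUMBER_WORDS = {
    "eleven", "twelve", "thirteen", "fourteen", "fifteen", "sixteen",
    "seventeen", "eighteen", "nineteen", "twenty", "thirty", "forty",
    "fifty", "sixty", "seventy", "eighty", "ninety",
    "hundred", "thousand", "million",
}

# A number with thousands separators (e.g. 1,000), a plain run of digits, or a word.
TOKEN = re.compile(r"\d{1,3}(?:,\d{3})+|\d+|[A-Za-z]+")


def check_numbers(text: str) -> tuple[int, list[str]]:
    """Score text against the numbers rule: (10, []) if nothing breaks the rule,
    otherwise (0, problems), mirroring the prompt's all-or-nothing scoring."""
    problems: list[str] = []
    for token in TOKEN.findall(text):
        if token[0].isdigit():
            # Digits are only allowed for numbers greater than 10.
            if int(token.replace(",", "")) <= 10:
                problems.append(f'"{token}" should be written in words')
        elif token.lower() in LARGE_NUMBER_WORDS:
            problems.append(f'"{token}" belongs to a number over 10 and should be a numeral')
    return (0 if problems else 10), problems


if __name__ == "__main__":
    score, problems = check_numbers("The School comprises 3 subject areas.")
    print(f"{score}/10")
    for problem in problems:
        print("-", problem)
```

A check like this is predictable but brittle: it would flag "5 March" or "page 3" just as readily as a genuine mistake, whereas the AI can, in principle, take context into account.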

Automator output when evaluating incorrect content

I published the following content:

“The School comprises 3 subject areas of History, Classics and Archaeology, together with the Centre for the Study of Modern and Contemporary History, the Edinburgh Centre for Global History and the Centre for Medieval and Renaissance Studies this makes 5. There are over one hundred and fifty staff and approximately six hundred graduate students”

The Automator showed the following:

“I would rate this content 0/10 based on the provided “Numbers Style Guide”. Here’s why:

- The number “3” is used in numerals, while according to the style guide, it should be written in words (“three”) because it is less than or equal to 10.

- The number “5” at the end should also be written in words (“five”) because it is less than or equal to 10.

- The content uses “one hundred and fifty” and “six hundred” correctly, as these numbers are greater than 10 and are written in words. However, the presence of errors in the other parts results in a score of 0.

Overall, the usage of “3” and “5” in numerals when they should be in words deviates from the style guide”

Screenshot showing AI Automator output assessing content not compliant with style guide rules

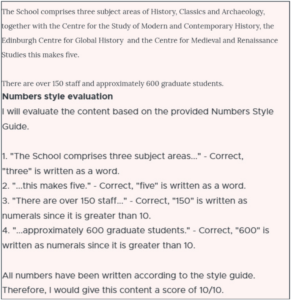

Automator output when evaluating correct content

I published the following:

“The School comprises three subject areas of History, Classics and Archaeology, together with the Centre for the Study of Modern and Contemporary History, the Edinburgh Centre for Global History and the Centre for Medieval and Renaissance Studies this makes five. There are over 150 staff and approximately 600 graduate students”

The Automator showed the following:

“I will evaluate the content based on the provided Numbers Style Guide.

- “The School comprises three subject areas … ” – Correct, “three” is written as a word

- “…this makes five” – Correct, “five” is written as a word.

- “There are over 150 staff…” – Correct, “150” is written as numerals since it is greater than 10.

All numbers have been written according to the style guide. Therefore, I would give this content a score of 10/10.”

Screenshot showing AI Automator output assessing content compliant with style guide rules

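I was pleased that the AI Automator had given both pieces of content the correct score, and that most of its reasoning was accurate and coherent. It was not flawless, however: in the first evaluation it described “one hundred and fifty” and “six hundred” as correct, although the rule requires numerals for numbers greater than 10.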

Reflections and thoughts about next steps

For a non-developer like myself, it was very gratifying to be able to experiment with AI technology and build something to address a difficulty in content publishing. I was aware, however, that as an author of the Editorial Style Guide I was very familiar with its rules, so my judgement of how useful the Automator’s evaluations were was a biased one. Whether they would be useful to web publishers could only be established by testing in context.

Furthermore, from a content design perspective, I was aware that in this short, controlled experiment I had made things relatively easy for the AI, by giving it precisely defined rules to apply, and that in reality, application of editorial guidance requires judgement and consideration according to the context. Fortified by the success I had experienced, however, I was keen to push the AI further, by incrementally building the prompt to include additional rules and contextual examples to see how it performed evaluating content against these.

Another learning point from the experiment related to the format of the Editorial Style Guide. Sifting through the different sections, it occurred to me that in some places the guidance was clear and easy to follow (and therefore easy to articulate as instructions in an AI prompt), but in others there was more scope for interpretation, which made it more difficult to communicate to the AI. This prompted me to revisit my previous research on how web publishers use the guide, to identify inconsistent or unclear areas to target for improvement.

Read my previous blog posts about testing the Editorial Style Guide with web publishers: