How to tackle a content audit

I recently audited our prospective student web estate, and learned a lot about the planning process as I went. Here’s some advice on how to tackle your own content audit. I’ve broken the process down into a few simple steps.

Auditing sounds scary. It’s one of those things that often gets overlooked because it just feels too hard or because we don’t have time for it. But it’s essential if you want to manage your content efficiently and minimise risk. You can’t manage what you can’t measure.

Here’s how to get started:

Define the problem

It’s important to know why you’re doing the audit in the first place, because that helps you frame the questions you’ll ask.

In my case, content aimed at prospective students was spread across multiple websites, and managed by multiple teams. We didn’t know enough about who owned it, how it was being managed, or how well it served user needs. That’s why we needed an audit.

Set the scope

It’s important to be clear about the initial scope of your audit, based on how much time you have to complete it, so that you can set realistic goals.

Think about how broad and how deep you want to go. Will it cover only certain websites, or focus on content managed by certain teams? Or will it concentrate on what a particular user needs to complete a task?

The scope might change as you move through the audit, depending on what you find. So it’s useful to have regular check-ins to review what you’re learning and to reconsider the parameters. You might discover that you need to go a little wider or that you need to narrow the focus because progress is too slow.

Decide what you want to find out

As with any research, you need to know what you’re aiming to find out before you can work out how to gather that information.

In my case, I wanted to answer seven key questions about each site:

- What is it?

- Where is it?

- Who owns it?

- What is its purpose?

- How big is it?

- How often is it updated?

- How good is the content?

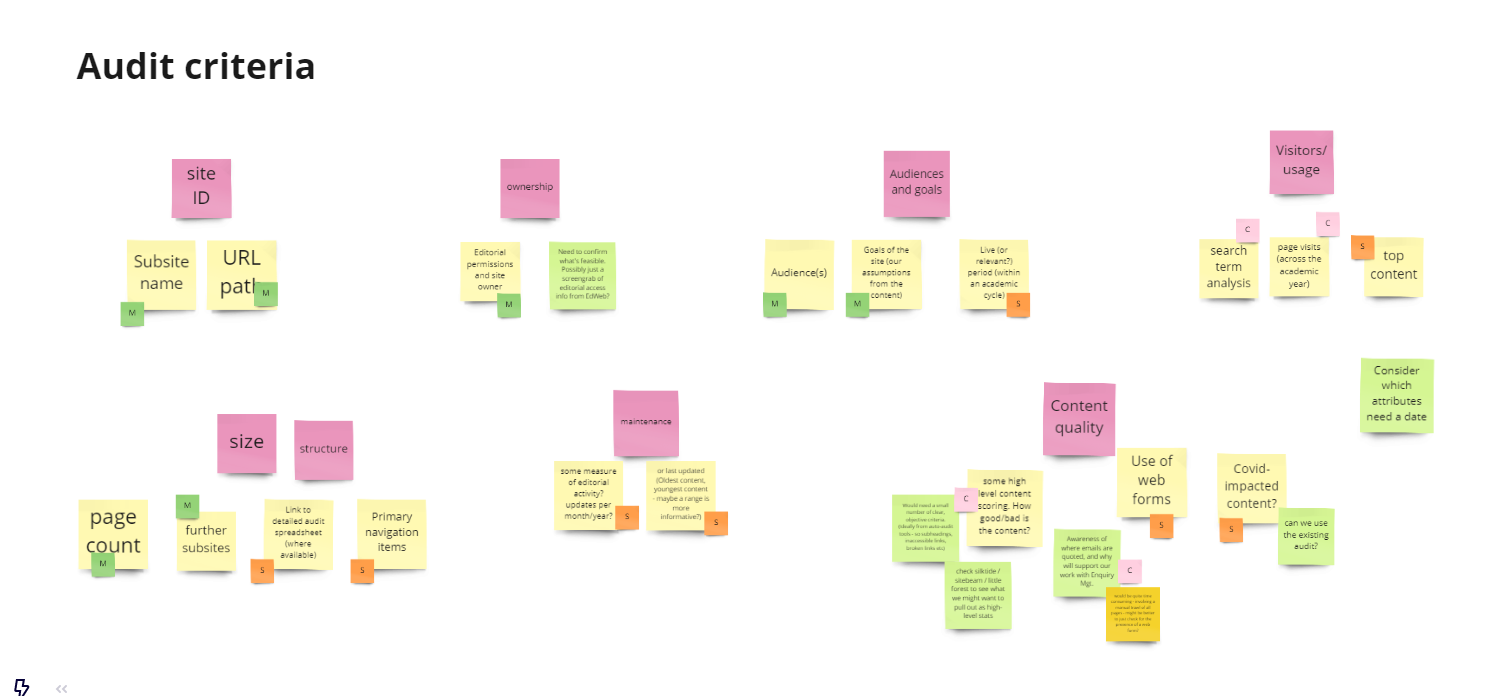
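To make the seven questions concrete, here is a minimal Python sketch of a site-level audit record with one field per question. The field names, the example URL and the `unanswered` helper are hypothetical, not the structure of our actual audit, and the last-updated date is only a proxy for update frequency. The point is that each question becomes a column you can check for gaps.

```python
from dataclasses import dataclass, field
from datetime import date
from typing import Optional


@dataclass
class SiteAuditRecord:
    """One row of a site-level audit (hypothetical schema): one field per key question."""
    name: str                              # What is it?
    url: str                               # Where is it?
    owner: str                             # Who owns it? ("" = unknown)
    purpose: str                           # What is its purpose? ("" = unknown)
    page_count: int                        # How big is it?
    last_updated: Optional[date] = None    # How often is it updated? (proxy; None = unknown)
    quality_notes: list[str] = field(default_factory=list)  # How good is the content?

    def unanswered(self) -> list[str]:
        """Return the key questions this record cannot answer yet."""
        gaps = []
        if not self.owner:
            gaps.append("Who owns it?")
        if not self.purpose:
            gaps.append("What is its purpose?")
        if self.last_updated is None:
            gaps.append("How often is it updated?")
        if not self.quality_notes:
            gaps.append("How good is the content?")
        return gaps


# Hypothetical example: owner, update date and quality notes not yet gathered.
record = SiteAuditRecord(
    name="Example admissions site",
    url="https://www.example.ac.uk/admissions",
    owner="",
    purpose="Explain how to apply",
    page_count=120,
)
print(record.unanswered())
```

Listing the gaps per site gives you a quick view of which questions still need data before the audit of that site is complete.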

Identify the audit criteria

Once you have those key questions, you can work out what criteria you’ll need to include in the audit to answer them.

After listing my criteria, I produced a quick affinity map to group them into themes.

Based on this, I decided to audit the content across six broad categories:

- audience and goals

- ownership and access

- size and shape

- visits and usage

- content quality

- Covid-19 impact

Prioritise the criteria

I then prioritised the criteria using the MoSCoW scale:

Must: data that was essential to capture.

Should: data that we really should gather if it was available.

Could: data that would be nice to have but not essential.

Won’t: data that was out of scope completely.

Read more about the MoSCoW scale (knowledgetrain.co.uk)
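If you keep your criteria in a spreadsheet or script, the MoSCoW ratings translate naturally into a sortable priority. The Python sketch below assumes a hypothetical list of criteria, each tagged with one of the categories above and a priority; it drops anything rated Won’t and sorts the rest so the Musts come first.

```python
from enum import IntEnum


class Priority(IntEnum):
    """MoSCoW priorities; a lower value means gather it sooner."""
    MUST = 1
    SHOULD = 2
    COULD = 3
    WONT = 4


# Hypothetical criteria: (criterion, category, priority).
criteria = [
    ("page count", "size and shape", Priority.MUST),
    ("site owner", "ownership and access", Priority.MUST),
    ("top search terms", "visits and usage", Priority.SHOULD),
    ("reading age", "content quality", Priority.COULD),
    ("full page-by-page copy review", "content quality", Priority.WONT),
]

# Remove out-of-scope criteria, then sort by priority.
# sorted() is stable, so criteria with equal priority keep their original order.
in_scope = sorted(
    (c for c in criteria if c[2] is not Priority.WONT),
    key=lambda c: c[2],
)

for name, category, priority in in_scope:
    print(f"{priority.name:<6} {category:<22} {name}")
```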

Affinity map

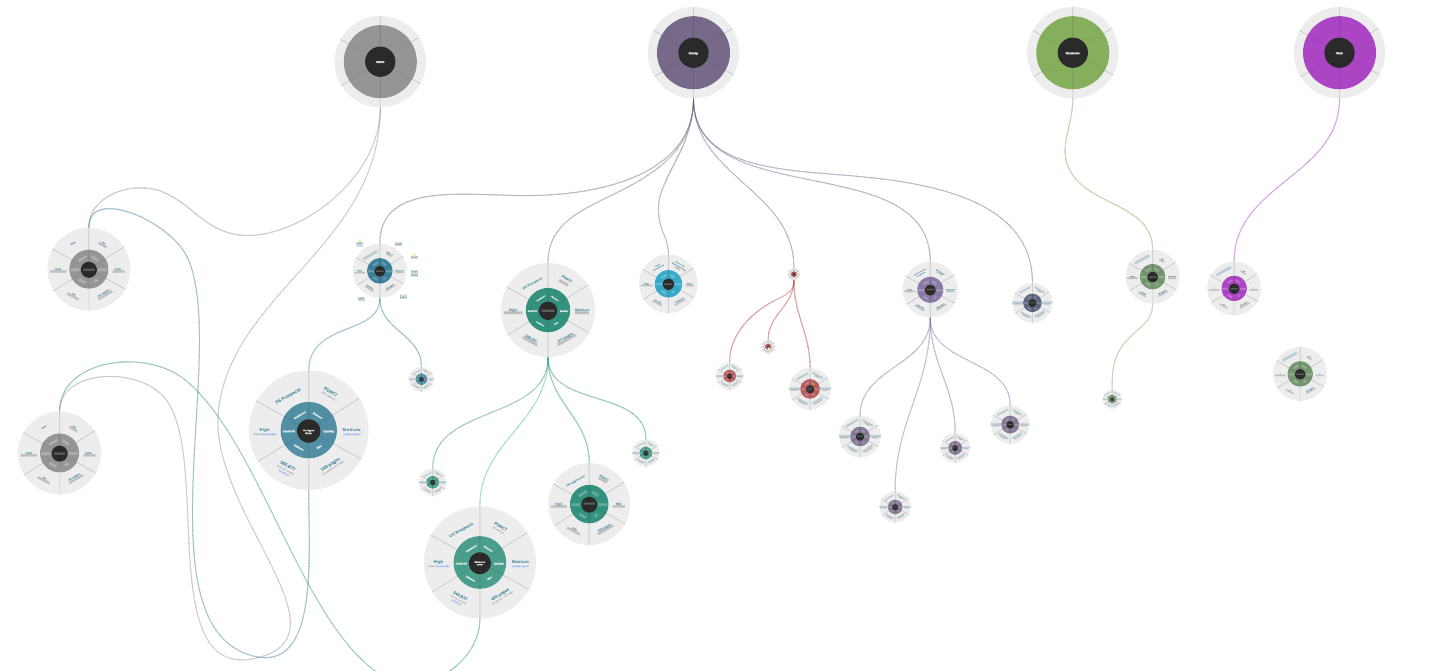

Map out the content

Now it’s time to find out where your content lives. If it’s all sitting within one website, that’s a fairly simple process. You can map your audit against the structure of that site, taking a page-by-page approach.

If it’s spread across multiple sites, as it was in my case, you might need to map it at a higher level. Rather than mapping out every page, you can map out each site. Try to understand how and where they connect, as part of the larger user journey.
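When you map at site level, it can help to record the connections as a simple link graph. The Python sketch below is a hypothetical example (the site names and links are invented). It uses a breadth-first search to list the sites a user cannot reach by following links from a chosen entry point, which is one way to spot gaps in the wider user journey.

```python
from collections import deque

# Hypothetical site-level map: each site lists the other sites it links to.
links = {
    "main university site": ["undergraduate study", "postgraduate study"],
    "undergraduate study": ["accommodation", "fees and funding"],
    "postgraduate study": ["fees and funding"],
    "accommodation": [],
    "fees and funding": [],
    "visa guidance": [],  # nothing links here from the entry point
}


def reachable_from(start: str, graph: dict[str, list[str]]) -> set[str]:
    """Breadth-first search: every site reachable by following links from start."""
    seen = {start}
    queue = deque([start])
    while queue:
        site = queue.popleft()
        for target in graph.get(site, []):
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


# Sites that exist in the map but cannot be reached from the entry point.
stranded = set(links) - reachable_from("main university site", links)
print(sorted(stranded))
```

Real journeys also start from search engines, so an unreachable site is a prompt for a closer look rather than proof of a problem.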

Access the data behind it

Once you know where your content is, find out what tools you can use to access the data sitting behind it. This will form the basis of your audit.

I mapped each of my original criteria against the tool that would allow me to gather that data. I was keen to make sure that we had a good spread of sources, rather than relying on one particular tool.

Tools

I used four key tools:

EdWeb: used to find basic ownership and access data.

Snapshot: an internal auditing tool, used to gather data on the size and shape of each site, including site maps.

Silktide: an automated testing tool, used to assess the quality and accessibility of the content.

Google Analytics: used to track how each site was being used, including top pages, search terms, and number of visitors.

Plan how to gather that data

Audits can be really time-consuming. It’s worth putting in some planning up front to make sure you gather the data as efficiently as possible.

I mapped my audit criteria by tool, to identify the best running order. I wanted to take all possible data from each tool in one sweep. I also wanted to make sure that we covered most of the Musts and Shoulds, before gathering data that was rated as Could.
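One way to turn that into a running order is sketched below in Python. The tool names come from the list above, but the mapping of criteria to tools is hypothetical, and the ordering rule is an assumption: each tool gets a single sweep, tools serving the most Musts (then Shoulds) go first, and within each sweep the Musts are gathered before the Shoulds and Coulds.

```python
from collections import defaultdict
from enum import IntEnum


class Priority(IntEnum):
    """MoSCoW priorities; a lower value means gather it sooner."""
    MUST = 1
    SHOULD = 2
    COULD = 3
    WONT = 4


# Hypothetical mapping: (criterion, tool that supplies it, priority).
criteria = [
    ("site owner", "EdWeb", Priority.MUST),
    ("editor list", "EdWeb", Priority.SHOULD),
    ("page count", "Snapshot", Priority.MUST),
    ("site map", "Snapshot", Priority.COULD),
    ("accessibility issues", "Silktide", Priority.SHOULD),
    ("monthly visitors", "Google Analytics", Priority.MUST),
    ("top search terms", "Google Analytics", Priority.COULD),
]


def running_order(criteria):
    """Return [(tool, [criteria in priority order]), ...], one sweep per tool."""
    by_tool = defaultdict(list)
    for name, tool, priority in criteria:
        if priority is not Priority.WONT:  # out of scope entirely
            by_tool[tool].append((priority, name))

    def tool_rank(tool):
        priorities = [p for p, _ in by_tool[tool]]
        # Negated counts: sorting ascending puts tools with more Musts/Shoulds first.
        return (-priorities.count(Priority.MUST), -priorities.count(Priority.SHOULD))

    plan = []
    for tool in sorted(by_tool, key=tool_rank):
        names = [name for _, name in sorted(by_tool[tool])]
        plan.append((tool, names))
    return plan


for tool, names in running_order(criteria):
    print(f"{tool}: {', '.join(names)}")
```

If time runs short, stopping partway through still leaves you with the tools that cover most of the Musts and Shoulds.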

Test it out

Once you have everything in place, choose one site (or one area of your site) and test the process out.

Find out what your audit outputs are likely to be.

Will it produce:

- spreadsheets

- charts and diagrams

- images and screenshots

- a combination of different formats

Think about how this will affect the presentation and storage of the data. Depending on the format of your outputs, you might design your audit space differently.

Build a space to store your data

Based on the outputs from your test run, you can now design a space to store your audit data.

The use case for the data might also affect how you design the space. In my case, I knew that we wanted to use it as a tool for our team, but also as a resource for colleagues and stakeholders, so that others could see the size and complexity of our estate.

So, I broke the potential outputs down into primary and secondary data:

- primary data would live in Miro, where colleagues could see it

- secondary data would live behind the scenes, in SharePoint, for our team to use

The Miro space

Start auditing

Once you have all the building blocks in place, you’re ready to start auditing. Try to carve out a few hours at a time and work through it systematically, on a site-by-site or page-by-page basis.

Share your findings

If you want your audit to have any impact, it’s important to share your findings.

In my case, I discovered that prospective student content is spread across 2972 pages, on 26 central websites. It’s managed by over 65 editors, from 12 different teams, across 6 different departments.

Because the content is so spread out, information is difficult to find, contact points are unclear, and students frequently fail to complete tasks. The spread also results in duplicate content and inefficient content management practices. All of this leads to Competition and Markets Authority (CMA) compliance risk and student dissatisfaction.
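If your audit records editors against each site, headline figures like the ones above can be rolled up with a few lines of code. This is a hedged Python sketch with invented sites and editors, not how our figures were produced. It assumes each editor has a team and a department, counts an editor who works on several sites only once, and treats a team as unique within its department.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Editor:
    name: str
    team: str
    department: str


@dataclass
class Site:
    name: str
    pages: int
    editors: list[Editor]


def summarise(sites: list[Site]) -> dict[str, int]:
    """Roll a site-level inventory up into headline figures."""
    all_editors = [e for s in sites for e in s.editors]
    return {
        "pages": sum(s.pages for s in sites),
        "sites": len(sites),
        "editors": len({e.name for e in all_editors}),                 # each person once
        "teams": len({(e.department, e.team) for e in all_editors}),   # team within department
        "departments": len({e.department for e in all_editors}),
    }


# Hypothetical inventory: bob edits both sites but is only counted once.
alice = Editor("alice", "Admissions web", "Registry")
bob = Editor("bob", "Admissions web", "Registry")
chen = Editor("chen", "Funding", "Student Services")
sites = [
    Site("Undergraduate study", 400, [alice, bob]),
    Site("Fees and funding", 150, [bob, chen]),
]
print(summarise(sites))
```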

We’ll now be working on measuring the usefulness, quality and accessibility of the content as we begin to look at each site in more detail.

Get in touch

If you’re interested in auditing or have further questions about our prospective student content audit, get in touch.

Email: cam-student-content@ed.ac.uk