Initial insights from UX testing our Drupal AI content assistant tool

This summer, Digital Content Style Guide Intern Mostafa Ebid integrated ELM into EdWeb2 as a Generative AI tool to help web publishers prepare content. He tested it with a sample of participants to make an initial assessment of the user experience it provided when assisting them with web publishing tasks.

After interviewing University web publishers and researching how they used the style guide to prepare web content, Mostafa had a clear view of the areas publishers found difficult. He combined these insights with his knowledge of the capabilities and strengths of large language models (LLMs) and Generative AI (Gen AI) to build and iterate on an AI-powered tool for publishers. Read more about his process in his blog post:

Integrating ELM with EdWeb – Building an AI tool for publishers

Keen to find out whether the AI tool helped solve the problems publishers experienced, Mostafa designed and ran an initial round of usability tests with a pilot sample of participants. This blog post brings together details of the tests conducted, the initial findings, and the next steps.

Testing Gen AI features favours a participant-led, responsive approach

An important aspect of responsible AI use and application is ‘human in the loop’ – ensuring that at all points, people can choose whether to use AI and are empowered to apply it as they wish. With this in mind, it was important to design a test scenario in which participants were free to use the AI however they saw fit to complete tasks in a way that felt natural to them, so that their authentic behaviour could be observed in context and insights drawn from it.

The test scenario therefore presented participants with a piece of content on a demonstration EdWeb2 site, along with tasks centred on improving that content. The AI tool was available to help them complete the tasks.

The AI assistant tool had various capabilities available for use

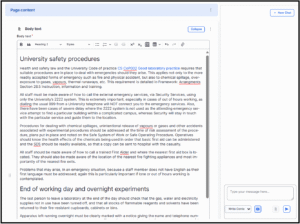

The tool presented in the right-hand side bar of the content editing interface and included the following features:

- A chat window to receive text to be edited along with a query or a prompt (for example: ‘Can you help me structure this text’ [pasted text]) or to receive a query or prompt without having text pasted (for example: ‘Can you give me some suggestions to structure my content?’)

- A drop-down menu with three pre-prompt options to choose from:

- Write Content (default)

- Design Content

- Proofread

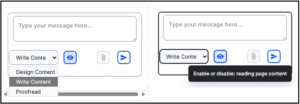

- The option to read page content (default is enabled, with the option to disable)

Screenshot showing the AI tool appearing in a sidebar of the EdWeb2 editorial interface

Close-up screenshot showing detail on the AI assistant tool chatbox

In the back end, the AI tool was provided with the Editorial Style Guide in a machine-readable format, along with details of the structural elements used on University webpages. ELM was the AI provider for the tool, which functioned as an orchestration of 12 AI agents, each with a particular capability. Examples included the following:

- Audience Profiler Agent (analyses and identifies target audience characteristics and needs; evaluates content for audience fit, suggests improvements for different audience segments and recommends audience-focused content strategies)

- Style Guide Agent (ensures content adheres to institutional style guidelines and editorial standards; checks content against style rules, suggests corrections, enforces brand voice and tone, and helps maintain consistency across the site, drawing on the content of the Editorial Style Guide)

- Content Specialist Agent (analyses, writes and improves website content; evaluates content quality, suggests improvements for clarity and effectiveness, provides rewrites and checks acronym usage)

- Web Content Orchestrator Agent (carries out initial filtering and routing of web publishers’ queries)

- Page Structure Agent (designs and optimises page structure; analyses existing page layouts, recommends component arrangements, improves content hierarchy and enhances overall information flow)

- Site Maintenance Agent (helps maintain site content, identifies outdated material and identifies content gaps)

Working together, these agents could handle publishers’ queries and respond to them using the relevant capabilities.
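To make the idea of an orchestrator routing queries more concrete, here is a minimal sketch of how a query might be passed to one or more specialist agents. It is a hypothetical illustration only: this post does not describe how the Web Content Orchestrator Agent actually routes queries (an LLM-based orchestrator would more likely classify a query with a model than with fixed keywords), and the `Agent` class, the `route` function, the keyword lists and the choice of fallback agent are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Agent:
    """A specialist agent and the (hypothetical) keywords that trigger it."""
    name: str
    keywords: tuple[str, ...]


# Hypothetical routing table: agent names come from this post,
# but the keywords are invented for illustration.
AGENTS = (
    Agent("Audience Profiler Agent", ("audience", "reader", "persona", "first aider")),
    Agent("Style Guide Agent", ("style guide", "link text", "tone", "style")),
    Agent("Page Structure Agent", ("structure", "subheading", "layout", "scan")),
    Agent("Site Maintenance Agent", ("outdated", "gap", "broken link")),
)

# Assumed default when no keyword matches.
FALLBACK = "Content Specialist Agent"


def route(query: str) -> list[str]:
    """Return the names of agents whose keywords appear in the query.

    Matching is case-insensitive substring search; if nothing matches,
    the query goes to the fallback agent.
    """
    text = query.lower()
    matches = [agent.name for agent in AGENTS
               if any(keyword in text for keyword in agent.keywords)]
    return matches or [FALLBACK]


if __name__ == "__main__":
    for q in ("How could I change the content on the page so it's easier "
              "for people to scan-read?",
              "Risk assessment procedures"):
        print(q, "->", route(q))
```

With these assumed rules, a query mentioning ‘scan-read’ would go to the Page Structure Agent, while a topic-only query such as ‘Risk assessment procedures’ matches nothing and falls back to the Content Specialist Agent. Plain substring matching is deliberately crude and can misfire on words that merely contain a keyword, which is one reason a real orchestrator is more likely to use a model for this step.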

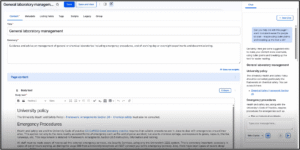

The test scenario required participants to familiarise themselves with a piece of content and then improve it

A scenario based on improving written content was chosen for the test, using text about safety procedures taken from the University web estate. This was presented to participants in the body field of the EdWeb2 editorial interface, with the AI tool positioned at the bottom of the pane to the right of the body text editor. Participants were first asked to familiarise themselves with the written content and were then asked to complete several tasks:

- You have been asked to work on the content on this page and asked to adapt it so it’s easier for people to scan-read. How would you do this with the help of the AI tool?

- Having reviewed the content, you realise it needs to be adapted for people who are newly signed-up first aiders so they know what to do in an emergency. How would you use the AI tool to help you do this?

- You recognise that the content on this page directs people to different webpages and you want to make sure this is as clear as possible. How would you use the AI tool to help you do this?

Participants were alerted to the presence of the AI tool but were not given specific instructions on how to use it; this was left to each individual to decide.

Results and findings required careful analysis

The four usability tests provided ample material from which to derive initial insights. While it was helpful to look for patterns across all participants, it was also important to consider each individual’s end-to-end experience, since every interaction with the AI tool depended heavily on that participant’s actions, perceptions and expectations.

Task 1 – about making the content more scannable – was approached differently by all four participants

Participants’ approaches to the first task varied, and the AI tool’s responses shaped their first impressions of it, setting the scene for how they interacted with it for the rest of the test.

Some participants pasted in text for the AI tool to work on, some asked it to refer to the body text

Of the four participants, one interacted with the drop-down menu of pre-prompt options, questioning the difference between ‘Write Content’ and ‘Design Content’. The others used the chatbox without changing the drop-down selection, so their queries used the default option, ‘Write Content’.

Two of the participants typed queries into the chatbox without pasting any text, assuming the AI tool would be able to access the text in the body text field. An example prompt was ‘How could I change the content on the page so it’s easier for people to scan-read?’

One copied chunks of text from the body field into the chatbox to give the AI tool something to work with, and added prompts about this content – for example: ‘Suggest subheadings to make the following text more readable’ [inserts text].

Another typed queries based on topics in the content to let the AI retrieve answers – for example using the prompt: ‘Risk assessment procedures’.

Depending on what the participant asked, the AI tool provided reworked text or pointers

Those who pasted content for the AI tool to work with received reworked text in response, structured into sections with headings and bullet points, and with some words in bold.

In response to queries without pasted content, the AI tool generated a numbered list of pointers for improving the content: for example, advice such as ‘Break up Long Paragraphs’ followed by excerpts from the current text with suggested rewrites underneath.

In response to the topic-based queries, the chatbot drew on the content in the body text field to provide answers.

Screenshot showing a participant’s prompt and the response with reworked text

Screenshot showing a participant’s prompt and the response with pointers

All four participants felt the AI tool’s responses were useful and some asked for more work

The participants had varying degrees of familiarity and experience with Gen AI and LLMs. However, once they had interacted with the tool, they noted its potential and reacted positively, with some commenting that it was better than they had expected. Some said they would adopt most of the suggested changes; others asked the AI tool for further work on the text, such as shortening sentences without losing meaning or restructuring specific sections.

Some participants had reservations about how the AI tool was working

Appraising the AI tool’s performance and responses, some participants were confident it was working specifically on the text in the body text editor (recognising the progress indicators displayed in the side pane). Others were curious about how it worked and anxious about where it was getting the content for its responses. They were also unsure whether the fact that it used ELM meant they could be confident it drew only on authoritative University sources, such as policy and guidance documents published by the University.

Reviewing the text it produced, and noting their unfamiliarity with the subject matter, some participants worried that important meaning or detail may have been lost when the text was restructured. They said they would want to check with a subject-matter expert before accepting the reworked content from the AI tool.

Task 2 – about shaping the content for a target audience – relied on participants being able to give the AI tool detail about that audience

This task sought to assess how participants might use the AI tool to support them in writing content for a specific audience. They were given a newly signed-up first aider as the persona to adapt the content for.

Since it wasn’t their content, participants struggled to get the AI tool to help them write for a specific audience

Participants began this task by reviewing the content the AI tool had previously worked on to see how it needed to change. This required them to imagine what a new first aider might need. In doing so, some participants felt that the AI tool’s earlier work to make the content more scannable was already sufficient for a new first aider, and they could not think of further adjustments. Some prompted the AI tool to rewrite the content on the page for new users, in some cases expecting it to apply preferences they had provided in the first task. Others spent time thinking about the subject matter and making their best assessment of which safety procedures would apply to first aiders, without enlisting the help of the AI tool. In all cases, because they had no authentic knowledge of the target audience, participants found it difficult to judge how successful the tool had been in helping them achieve this task.

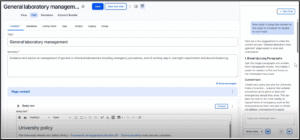

Screenshot showing a participant’s prompt for task 2 and the response

Task 3 – about improving the link text – relied on participants seeing a problem with the way links had been formatted in earlier tasks

This task sought to reveal whether the participants found a use for the AI tool to help them improve link text, and how useful they found it in supporting them to achieve that aim.

Reviewing the AI tool’s previous outputs, some participants felt the link text was good enough, while others prompted it with specific link text rules to apply

As in task 2, participants began this task by reviewing the work already done by the AI tool. Applying their knowledge of what good link text looks like, some participants judged that the AI tool’s earlier work had already produced satisfactory links, so they did not feel the need to prompt it further. Others prompted the tool to check that the links in the content pointed to the correct destinations. Others wanted to make sure the AI tool applied specific rules from the University’s Editorial Style Guide (in particular, the rule about not using in-line links), and pasted the relevant excerpts into the chatbox so that the tool would apply the rules on formatting link text. The tool’s outputs included reworked content with particular attention to improving the links, which most participants were pleased with.

Screenshot showing a participant’s prompt for task 3 and the response

Test findings prompted interface improvements and highlighted the need for more testing

The four usability tests provided a wealth of data about participants’ expectations, perceptions and requirements of an AI tool embedded in an editorial interface, both task by task and overall. Taken together, the results highlighted several ways the AI tool’s user interface could be improved, and pointed to directions for further tests.

Observations of participants’ interactions suggested improvements to the AI tool interface

When the AI chatbot produced reworked or restructured content, participants felt the need to compare it with the original content on the page to judge whether it was an improvement. The fixed width of the chatbot side pane made this comparison difficult. Furthermore, the responses, often around 50 words long, filled the small window occupied by the chatbox, and because the view defaulted to the bottom of the side pane, participants unexpectedly had to scroll back up to read the full response. These observations highlighted several ways the user interface could be improved to make it easier for people to read and act on the information ELM provided:

- The AI tool side pane could be expandable from right to left, so that responses are not displayed in a long, narrow window that forces users to scroll to read all the generated information.

- After a response is generated, the default position should be the top of the side pane (not the bottom), so that users do not have to scroll back up to read the full response.

Before implementing such improvements, however, it would be important to carry out accessibility testing of the AI tool to identify any wider interface changes needed.

We want to test the AI tool using content supplied by publishers themselves

Running the test with content about safety procedures provided useful learnings. However, it was limited: because participants were unfamiliar with the content and its audiences, they could not authentically review and critique the AI tool’s efforts. To address this, we plan to run further tests in which, instead of being given content, publishers will be asked to provide samples of their own content that they would like to improve, making the test scenario more natural.

We’re planning further tests on the AI tool to understand its potential to help publishers

With its underlying suite of 12 AI agents, the AI content assistant tool is capable of much more than could be tested in this initial round of research. We are therefore designing further tests, with scenarios and tasks tailored to try out its additional functionality, to see whether publishers find it useful and usable. Recognising that publishers’ appraisal of the tool depends on their prior knowledge, understanding and experience of Gen AI and LLMs, we also plan to gather information and insight into these areas to aid our interpretation of test results.