My first six weeks as a Digital Content Style Guide Intern

About me

Hi! I’m Mostafa, a second-year undergraduate student studying computer science at the University of Edinburgh. I am also the Digital Content Style Guide Intern, working in the UX team to:

- understand how publishers use the style guide when editing content

- propose improvements to the guide

- explore new ways of applying style guide rules.

It has been such an exciting learning experience for me so far, and I want to share a glimpse of my journey with you.

Weeks 1 to 2: Learning

This is my first-ever work experience, so week one was all about learning.

If you didn’t know already, content design is kind of a big field, but it’s all centred around users. This is why user research goes hand in hand with content design: understanding your audience, their needs, their emotions, and their pain points is crucial to ensure you write content that serves them while meeting business goals.

In the first two weeks, I went to workshops like Content Design for Web Publishers, did training courses like the new Effective Digital Content course, and did my own learning through ContentEd+ online material and reading Content Design by Sarah Winters and Rachel Edwards.

Content Design by Sarah Winters and Rachel Edwards

I also delved into the editorial style guide – the focus of my work – going through the website and PDF versions in detail. I noted down mistakes and ideas for improvement and collated them on a Miro board, next to a hierarchical snapshot diagram of the entire style guide site.

Weeks 2 to 3: Research and exploration

I did a lot of research on how other organisations present their style guides from both a user interface perspective and a content perspective.

All the organisations I researched had an A to Z guide webpage, but how it was laid out differed. Some you navigated using a horizontal A to Z navbar, while some, like GOV.UK, used accordions for each letter.

It was also interesting to see how these organisations were helping their publishers use the style guide in their content editing. The Guardian, for example, created Typerighter – a tool that checks content against a database of common mistakes they have collected over time.

How we made Typerighter, the Guardian’s style guide checker

Google’s style guide has an entire section titled “Break the rules” to tell their technical writer audience when to break their style guide rules.

Google developer documentation style guide

So, to understand the needs of our publishers at the University, I set up some interviews with those who had previously expressed interest in helping us with the style guide project.

Learning about what the Guardian developed prompted me to ponder: could I create a “style guide checker” like Typerighter, but for the University’s style guide? To explore whether this was possible, I took a closer look at each of the style guide’s rules to determine whether most of them could be checked programmatically. For language and tone, it was obvious that a more complex tool that understands language would be needed, but most rules (formatting, dates and times, abbreviations) can be detected programmatically.

So, I undertook this project. I started learning about Drupal (the backbone of EdWeb 2) and module development. I also got a copy of the backend of EdWeb 2, thanks to Jen and Billy, so I could start creating my module there.

One important piece of advice I got is that “if you want a feature, there’s probably a module for it.”

Extending Editoria11y

There are some contrib modules that do accessibility checks, but Editoria11y stood out for me for three reasons:

- Simpler to extend – its code repository has sections on how to extend the module, from changing its styling to creating custom tests.

- Provides report analysis of issues across the site – crucial for auditing sites more robustly.

- Live checking feature – publishers get immediate feedback on their content.

One custom feature that I wanted to add is the ability for users to accept or ignore suggestions. This would reduce their cognitive workload and allow them to focus on crafting high-quality content for their audiences.

Weeks 4 to 5: Forming project plans

I met with Caroline Jarrett from Effortmark at the start of week 4, and one of the key ideas of the meeting was to “join forces” with Zbigniew – the Green Digital Design intern.

Auditing the Careers Service site

The Careers Service are currently running a project to change the structure and content of their web estate. Zbigniew has been working on auditing the Careers Service site for sustainability.

It would also be useful to audit their content against the style guide, as it would allow them to measure the impact of their site-restructuring project across both content style and accessibility.

Sustainability in the style guide

The editorial style guide doesn’t refer to sustainable practices in content publishing. However, some practices, like reducing image size before publishing, have become more common and are important for reducing the net carbon impact of a webpage. This prompts the question: should they have a place in the style guide?

While these technical explorations were happening behind the scenes, I was also out meeting the people who’d use these tools. Most of week 4 was spent conducting interviews with publishers, understanding the process they go through when publishing content, and where the style guide fits within it.

During week 5, I focused on developing the style guide checker, working across each of the following areas.

- Improving the user interface

When a style guide check fails, a tip marker appears next to the failed paragraph, as shown here.

If you hover over the marker, a tip box appears.

The content of the tip box was originally far too long, so I redesigned the user interface for the tip.

- Extending Editoria11y with University style guide rules, using the custom tests feature within Editoria11y

- Adding accept/ignore buttons for each custom test

It was important to ensure that the accessibility/style checker was itself accessible. So, I made sure to use colours from the EdGel pattern library, check colour contrast, include title attributes for screen readers and allow users to navigate the new tool design using a keyboard. However, I also aim to check with others more experienced in accessibility to improve the accessibility of the tool.

Reimagining the possibilities

Weeks 4 and 5 were also filled with exciting events like the ELM town hall and the digital recruitment unconference. Both inspired me with new thoughts and ideas about:

- How can we help less-experienced publishers design their content better?

- Could I use AI in the style guide checker?

Insights about AI

The unconference allowed me to meet and talk to content editors and publishers from around the University and learn about the problems they are dealing with. It was enriching to hear different perspectives on ELM and how publishers use it to brainstorm and get feedback on their content. However, there was a consensus about the limitations of AI, especially regarding its hallucinations.

On the other hand, going to the ELM town hall and hearing about how different teams used ELM to create complex tools that process language meaningfully motivated me to enquire further about what I could do with ELM.

It was incredibly useful to learn about the EdHelp LLM chatbot and how the team restricted the LLM’s input and task to achieve very high accuracy and almost zero hallucinations. It made me think of different approaches to using ELM to check against the style guide. For example, instead of one prompt that checks against the entire guide, a series of prompts that each check an individual section may provide higher accuracy and reliability.
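To make the "one prompt per section" idea concrete, here is a minimal Python sketch. It only builds the prompts and does not call ELM. The section names, the rule wording and the `build_section_prompts` helper are all assumptions made up for illustration, not text from the University's style guide.

```python
def build_section_prompts(sections: dict[str, list[str]]) -> list[dict[str, str]]:
    """Build one narrowly scoped system prompt per style guide section."""
    prompts = []
    for name, rules in sections.items():
        rule_lines = "\n".join(f"- {rule}" for rule in rules)
        prompts.append({
            "section": name,
            "system_prompt": (
                f"Check the content only against the '{name}' rules below. "
                "Do not report anything outside these rules.\n" + rule_lines
            ),
        })
    return prompts


# Hypothetical sections and rules, for illustration only.
example_sections = {
    "Dates": ["Write dates without ordinal suffixes, for example 21 March."],
    "Abbreviations": ["Spell out an abbreviation the first time it is used."],
}
section_prompts = build_section_prompts(example_sections)
```

Each prompt could then be sent separately with the same content, so that a single response only ever has to reason about a small set of rules.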

Can ELM apply style guide rules?

Early on in my internship, I read some blogs that experimented with using ELM to check content against the style guide. While ELM showed some success in experiments with Drupal AI Automators, as explored by colleagues in the UX team, it also had its limitations, as explored by others in the UX team.

In both blogs, the response from the AI was textual feedback, but could I integrate ELM directly with the functionality of the style guide checker?

Week 6: Exploring Drupal AI

After requesting an API key earlier in week 5, I began exploring ELM and Drupal AI in week 6.

If you didn’t know, Drupal AI is kind of big: many contributors joined forces to develop one AI module for Drupal. The Drupal AI module has many features, from a simple API Chat Explorer to AI Agents and Automators.

To start learning about Drupal AI, I spent some time watching tutorials. I then delved into the documentation to learn about the different features that Drupal AI offers. I am yet to explore all the features, but I want to share my experiences experimenting with one of them.

Experimenting with JSON Output

From a high-level point of view, each custom test works like this.

- Search through the content text for relevant elements for the test.

- Check those elements against the test pattern (regex).

- Any matches? For each match, create a tip content box and position a marker next to the match.

- If there are no matches, wait until a change is made in the text editor and start again.
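The search, check and match steps above can be sketched roughly as follows. This is a language-agnostic illustration written in Python, not the Editoria11y custom tests API (which runs in the browser). The rule itself is hypothetical: it assumes the guide prefers "21 March" over "21st March". The `Tip` class and `run_test` function are names invented for this example.

```python
import re
from dataclasses import dataclass

# Hypothetical rule: flag ordinal suffixes in dates such as "21st March".
ORDINAL_DATE = re.compile(
    r"\b(\d{1,2})(st|nd|rd|th)\s+"
    r"(January|February|March|April|May|June|July|August|"
    r"September|October|November|December)\b"
)


@dataclass
class Tip:
    paragraph_index: int  # where the marker would be positioned
    matched_text: str     # the text that broke the rule
    suggestion: str       # content for the tip box


def run_test(paragraphs: list[str]) -> list[Tip]:
    """Check every paragraph against the pattern and build one tip per match."""
    tips = []
    for index, text in enumerate(paragraphs):
        for match in ORDINAL_DATE.finditer(text):
            day, _suffix, month = match.groups()
            tips.append(Tip(index, match.group(0), f"{day} {month}"))
    return tips


paragraphs = [
    "The event takes place on 21st March.",
    "Applications close on 3 April.",
]
tips = run_test(paragraphs)
```

In this sketch an empty `tips` list corresponds to the "no matches" case, where the checker would simply wait for the next change in the editor and run again.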

I pondered: could I use ELM to do the first two steps? In other words, could I give ELM the HTML content and ask it to output a list of matches?

ELM currently does not provide an API for the Llama (free) models, but it does for OpenAI’s GPT models, so I experimented with them.

Using Drupal AI’s Chat Generation Explorer, I started to do some prompt engineering. I designed a system prompt to check against a specific section of the style guide (dates and numbers). I also designed a JSON schema/structured output to pass with the prompt.

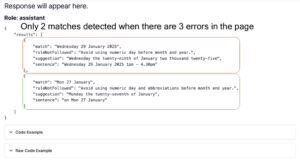
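For readers unfamiliar with structured output, the sketch below shows the general shape of the idea in Python: a JSON schema describing a list of matches, plus a small parser that rejects responses that do not fit it. The field names (`matches`, `text`, `rule`, `suggestion`) and the sample response are assumptions for illustration; they are not the schema or output used in the experiment described here.

```python
import json

# Hypothetical schema: the field names are assumptions for this example.
MATCH_SCHEMA = {
    "type": "object",
    "properties": {
        "matches": {
            "type": "array",
            "items": {
                "type": "object",
                "properties": {
                    "text": {"type": "string"},
                    "rule": {"type": "string"},
                    "suggestion": {"type": "string"},
                },
                "required": ["text", "rule", "suggestion"],
            },
        }
    },
    "required": ["matches"],
}


def parse_matches(raw_response: str) -> list[dict]:
    """Parse a model response and check it has the expected shape."""
    data = json.loads(raw_response)
    matches = data.get("matches") if isinstance(data, dict) else None
    if not isinstance(matches, list):
        raise ValueError("response has no 'matches' list")
    required = MATCH_SCHEMA["properties"]["matches"]["items"]["required"]
    for item in matches:
        if not isinstance(item, dict):
            raise ValueError("each match must be an object")
        missing = [key for key in required if not isinstance(item.get(key), str)]
        if missing:
            raise ValueError(f"match is missing string fields: {missing}")
    return matches


# Made-up response, used only to exercise the parser.
sample_response = (
    '{"matches": [{"text": "21st March", "rule": "dates", '
    '"suggestion": "21 March"}]}'
)
parsed = parse_matches(sample_response)
```

Because the output is machine-readable, each parsed match could in principle feed the same marker and tip box logic as a regex match.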

I entered a user prompt of a sample HTML text of a webpage that contains three date and time errors. I got the following output.

The LLM detects almost every error in the input text. However, after subsequent tests, I learnt that the outputs are not consistent or reliable. For example, after I sent the prompt a few more times, I got the following output.

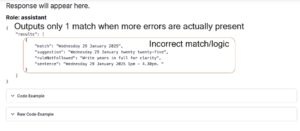

Sometimes, I only got one match.

To combat the issue of inconsistency, I tried tuning the model’s parameters – changing values such as temperature, which controls the randomness of the LLM’s output. I am still experimenting with these parameters and the system prompt to try to create a more reliable process. Another issue with this technique is that the model sometimes incorrectly matches a piece of text that does not break the rules. This is a disadvantage of the JSON method, but it can be lessened by improving the system prompt.
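One possible way to reduce both problems, separate from prompt and parameter tuning, is to post-process the results: send the same prompt several times, keep only matches that most runs agree on, and drop any match whose text does not literally appear in the source. The Python sketch below illustrates that idea on made-up data. It is an assumption about what could help, not something tested in this project, and `stable_matches` is a name invented for the example.

```python
from collections import Counter


def stable_matches(source_text: str, runs: list[list[str]], min_votes: int) -> list[str]:
    """Keep matches reported by at least min_votes runs that exist in the source."""
    votes = Counter()
    for run in runs:
        votes.update(set(run))  # each run votes at most once per match
    return sorted(
        text
        for text, count in votes.items()
        if count >= min_votes and text in source_text
    )


# Made-up source and model outputs, for illustration only.
source = "<p>Join us on 21st March at 2.30PM.</p>"
runs = [
    ["21st March", "2.30PM", "22nd March"],  # "22nd March" is not in the source
    ["21st March", "22nd March"],
    ["21st March", "2.30PM", "Join us"],     # "Join us" appears in one run only
]
kept = stable_matches(source, runs, min_votes=2)
```

The trade-off is cost: every extra run is another API call, and a rule break that the model only spots occasionally would be voted out.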

Next steps for ELM exploration

So far, this is early experimentation. There are many more parameters that I can change and try to see if some combination of them gives a reliable output. There are also many more features of Drupal AI that I aim to explore to see if they would be useful within the style guide checker.

However, I also need to consider that the issue might not be the LLM itself but where I am inserting it within the checking process, in which case, I would need to rethink the design of the checking stages.

Other solutions

So far, the main solution I have been exploring is the style guide checker. However, I’ve also been prototyping other solutions, including a SharePoint site for the editorial style guide that would allow publishers to provide feedback more easily.

I’ve also been experimenting with different ways of presenting the A to Z style guide. Currently, it is in PDF form, which needs to change for two reasons. First, PDFs are not inherently accessible. Second, they are less effective from a usability standpoint, as there is the extra step of downloading them before getting to the content.

Despite this, PDFs have one critical feature that many publishers use: Ctrl+F search. Therefore, when creating a new prototype, it’s important to ensure that this feature is kept or improved (for example, by adding a search bar to the A to Z guide).