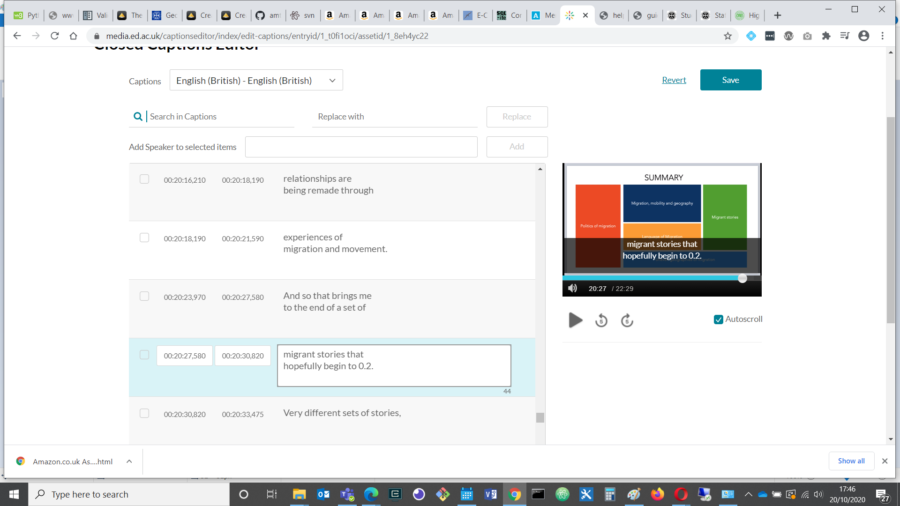

One of the unexpected but nice parts of my job lately has been editing the automated subtitles on some of our teaching videos.

This is a requirement for our course materials, to make our videos more accessible to, for example:

- Deaf students

- Students with other hearing difficulties, for whom subtitles can help with comprehension of fast or unclear speech, for example unfamiliar accents, mumbling, or background noise

- Foreign language speakers, for whom subtitles might help with translation and with following the spoken text

- Students with learning disabilities, attention deficits, or autism, who may find subtitles help them to maintain their concentration on the videos

- Students who need to study in a place where they can’t play sound

- Students who need to see the spelling of proper nouns, such as full names, brand names, or technical terms

- Students who just prefer to watch videos with subtitles as a habit

According to research by Ofcom in 2006, 7.5 million people in the UK (18% of the population) used closed captions, and of these, only 1.5 million were deaf or hard of hearing. In other words, 80% of the people who used subtitles did so for other reasons.

Since we moved to hybrid learning, we have had more and more teaching videos to work on. That has been an occasional silver lining to social distancing for me, as subtitling is a nice, quiet, absorbing task, and our videos are often very interesting 🙂