In this extra post, Elena Ioannidou and Kevin Hanley describe a new feedback initiative being used in the College of Science and Engineering to help students engage more with marking criteria. They used an online platform built for peer reviews and group work – Peerceptiv – to introduce peer feedback in ‘Engineering Principles 1’ – one of the School of Engineering’s biggest classes. This post explains the innovative exercise as well as presenting the students’ experience of Peerceptiv through qualitative and quantitative data collection. Elena is a Learning Design and Technology Officer, and Kevin is a Senior Lecturer, both in the School of Engineering.

The assessment and feedback conundrum

Giving helpful and effective feedback is…a trial. One of our biggest challenges is ensuring that the feedback we give is helpful and motivating for students’ future development. Helping students understand the marking criteria is another big challenge, particularly for those accustomed to receiving high grades in school: why does a 75 now indicate excellence? What’s the difference between a 55 and a 60? Combine this with ever-increasing class sizes and well-meaning but incredibly busy academic colleagues, and we end up with marking schemes that are not fully understood and feedback that is not seen as helpful.

In the College of Science and Engineering, we’re trying to address this gap in assessment and feedback literacy by strategically introducing more opportunities for peer feedback using an innovative platform called Peerceptiv. In semester 1 of the 25/26 academic year, Peerceptiv was used in the Engineering Principles 1 course for the first time.

Engineering Principles 1 in a nutshell

Engineering Principles 1 is one of our biggest courses, being part of all degree programmes in the School of Engineering; we’ve seen enrolments fluctuate between 450 and 600 students over the years. It is a pivotal course for first years, bridging the gap between secondary education and Higher Education, as well as welcoming a significant number of international students every year. The ins and outs of groupwork are at the heart of this course, with all the challenges and effort that come with coordinating hundreds of student groups.

The Reflective Engineer assignment is the first in this course and hence marks the students’ first contact with the University’s Common Marking Scheme. The seminars accompanying this assignment involve a number of group work activities designed to introduce students to the marking criteria and the practice of engaging with the feedback loop: giving, understanding and implementing helpful feedback.

In the Reflective Engineer assignment, students interview an established engineer from industry as part of a group of around five students. These groups are multi-disciplinary and contain students from highly varied backgrounds, meaning that students will take away different things from the same conversation. For assessment, each student submits an essay containing their own personal reflection on the interview and how the interview might inform their choices at the University. They also submit forms reviewing their group’s dynamics.

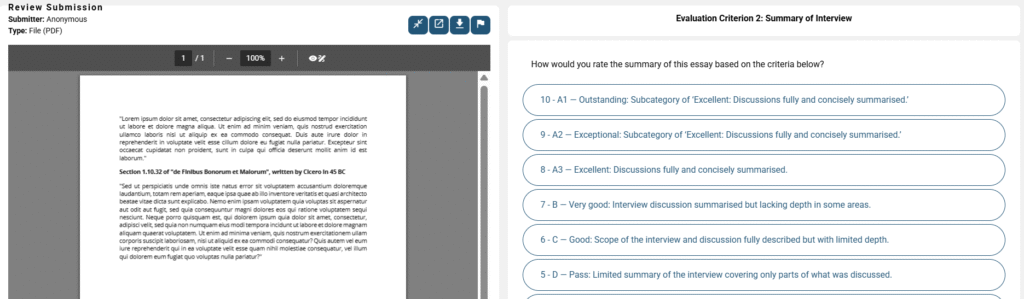

Before the final submission, each student must submit a draft essay for their group members to review and provide feedback on. The students are given a rubric with the same marking criteria as the final essay and scales that correspond to the University’s Common Marking Scheme.

Prior to the 25/26 academic year, the peer reviews were left to the students in each group to coordinate via whatever method they found best. This led to some very real challenges, the greatest of which was that we couldn’t know for sure whether peers actually received feedback in time to improve their essay, or whether they received it five minutes before the deadline.

Engaging with the marking criteria

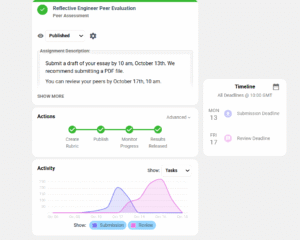

For this exercise, we used a platform built for peer reviews and group work: Peerceptiv. There is always trepidation when inserting new technology — it can all go wrong. However, trust between your teaching team, administrators and learning technologists goes a long way towards bridging the gap. Students submitted their work and then provided feedback on the essays of their group members. Coordinating groups was a simple push of a button and we could easily incorporate the required rubric in Peerceptiv. In cases where students needed to be moved around due to difficult circumstances or changes in the groups, we were able to intervene with little effort thanks to Peerceptiv’s flexible approach to group activities.

But what about the quality of the feedback? Student reviewers in educational settings are often inexperienced assessors. Besides technical knowledge, assessing requires understanding the marking criteria, as well as how those can be fulfilled. How can a student hope to assess when they are not an expert on the topic? This question makes many colleagues nervous of the prospect of a peer review activity.

That is why we took great care to provide appropriate scaffolding to ensure students have the right tools for the job. A robust rubric is one of the strongest weapons in your arsenal: a rubric that explains what ‘good’ looks like and helps students work towards achieving what is required of the assessment. With the help of the rubric, our students provided helpful feedback to each other and pointed out strengths in the essays they were reviewing, as well as areas for improvement. We were pleased with how helpful the feedback was and how many students engaged with the activity.

The Student Voice

But how did the students feel? Another reason why peer reviews are viewed with suspicion is that we assume students will hate them and see them as less valuable than instructor feedback. Sometimes we wonder: do they even read them? However, the students were clear in their appreciation of the activity:

“But I did think that what we did with Peerceptiv was a really good idea because many students improved their essay drastically through the comments they got from other group members. So I think that was a really good idea. Everyone found it really helpful.”

“You get a perspective from everyone which everyone introduces new ideas, new critiques. I think that was the most helpful thing, and so you get a rounded opinion.”

“Because the people that you’re reviewing the essay of attended the same interview as you, so it provides a new perspective on what they were able to glean from it.”

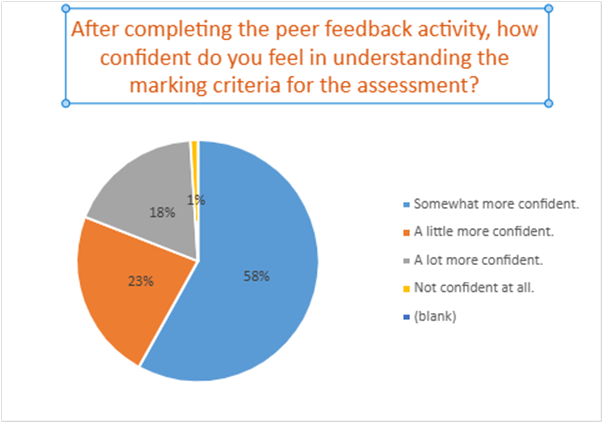

We received these comments unprompted. We also gathered quantitative data that indicates increased confidence in understanding the marking criteria:

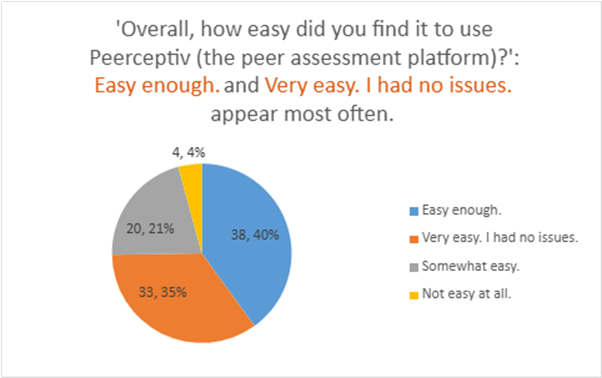

Conscious of the fact that we were asking them to use a new platform, we asked students whether they found Peerceptiv easy to use. They did.

What next?

This activity, adopting Peerceptiv for the intra-group peer reviews in Engineering Principles 1, was very successful. However, it raises further questions:

- We have seen the feedback students are able to give each other. Is that the kind of feedback they find helpful, and what can we learn from them?

- The students applied the marking criteria very generously, with A3 as the class average in most criteria. This confirms our suspicion that students’ expectations differ from UK HE marking norms and that they face disappointment when those expectations are not met. How do we ensure expectations are set appropriately?

Peerceptiv has helped us remove a barrier to students engaging with the marking criteria, and has ensured that a large cohort gets timely feedback that helps them develop. We continue to explore how we can leverage peer feedback to improve student learning, with (perhaps renewed) confidence.

References

Institute for Academic Development (2025) Assessment rubrics. Available at: https://institute-academic-development.ed.ac.uk/learning-teaching/staff/assessment/rubrics (Accessed: 15 Dec 2025).

The Peerceptiv Project (2025). Available at: https://uoe.sharepoint.com/sites/Peerceptiv/SitePages/Peerceptiv1.aspx (Accessed: 15 Dec 2025).

Elena Ioannidou

Elena Ioannidou is the Learning Design and Technology Officer for the School of Engineering. Her main area of activity is assessment and feedback, particularly game-based learning and student-led peer feedback.

Kevin Hanley

Kevin Hanley is a Senior Lecturer in the School of Engineering at the University of Edinburgh. He has taught on courses assessed by group work since 2015, both within the Chemical Engineering discipline and cross-School courses such as Engineering Principles 1. He has a strong interest in making the assessment of group work fairer.