In this post, Abby Anderson discusses the benefits of skills-based grading, and describes how the Technology Enhanced Learning (TEL) team worked with Dr Itamar Kastner, Richard Wilson and Aleksandra Sevastianova to design a skills-based grading block within a pre-Honours Linguistics course. Abby is a Learning Technologist in the TEL team in the School of Philosophy, Psychology and Language Sciences, and presented ‘Implementing skills-based grading in a pre-hons linguistics course’ at the Learning and Teaching Conference. This post is part of the ‘Transformative Assessment and Feedback’ Learning and Teaching Conference series.

Higher Education is at a pivotal point where changes and improvements to assessment and feedback are not just encouraged but may soon become unavoidable. As Generative AI dominates conversations around academic integrity, it is important to begin thinking about how students can engage with material effectively, in ways that benefit their learning and their future.

Technology is embedded in this shift and is being harnessed to create innovative and applicable assessment options. In addition, skills-based grading (SBG) steps away from tradition, placing less emphasis on numerical grades and more on the learning outcomes and skills a cohort achieves. Some of the reported benefits of SBG include:

- reduction of student stress

- equity in evaluating student work

- more flexibility for students to work at their own pace.[1]

Dr Itamar Kastner, Richard Wilson and Aleksandra Sevastianova have designed a skills-based grading block within a pre-Honours Linguistics course, working with us in Technology Enhanced Learning (TEL)[2] to successfully deliver the assessment.

How does TEL come into this? Our Virtual Learning Environment (Learn) is set up to encourage a traditional assessment and feedback approach, offering either tests or assignments, complete with a numerical grade and any written or oral feedback.

What we wanted to do was mould the virtual learning environment and test it to its limits to enable an SBG approach that was simple from both a student and a staff perspective. We also aimed to use the systems already available as much as possible, as this not only keeps delivery costs lower but also avoids the need to train tutors on systems that may be unfamiliar to them. It would also avoid adding workload for our Teaching Office, since it relied on systems they already use.

Our goal was to design and implement a simple but thorough approach, prioritising sustainability for future iterations and flexible scalability.

Experimentation and set-up

Dr Kastner approached the TEL team with the intention of setting up this SBG approach for the Syntax block of ‘Linguistics and English Language 2A’. We began experimenting within Learn to see what was and was not possible in practice. The proposal for the course was as follows [3]:

- 12 topics in the block, with on average two skills per topic.

- For each skill, students had three opportunities to prove proficiency.

- Students could opt to answer questions in a test or submit an answer to a tutorial question to demonstrate this.

- No numerical grade would be attached to each skill; instead, students would receive a status of “proficient” or the still-encouraging “not yet proficient”.

Screenshot of the course, LEL2A

Thus began our experimentation to see how Learn could be used to enable this innovative approach. In this phase, we tested multiple options both within and outside the VLE, to explore as many avenues as possible. Our testing included complex Gradebook set-ups that used calculations to determine which skills had been achieved, SharePoint lists to track the skills acquired, and digital badges and reporting. We consulted Dr Kastner throughout this process and, after presenting a number of options, decided that the priority was keeping the interface simple for both students and staff. Our final set-up was as follows:

Tests in Learn

- Each skill had a corresponding test in Learn.

- Each test contained a pool of three to five automatically marked questions, one of which was randomly selected for each student so that students did not all answer the same question.

- Each test allowed two attempts, so if a first attempt did not demonstrate proficiency, the student could take it again. (We intended the pool to ensure that the second question picked was different from the first but, due to limitations in Learn, this wasn’t possible.)

- No due date was set, so students could take the test at their own pace.

- The tests were linked to a Proficient/Not Yet Proficient schema in the Gradebook, so, upon completion, the student could see whether they had achieved the skill.

Tutorial assignment in Learn

- The second option to demonstrate mastery was through the completion of a tutorial assignment.

- A tutorial assignment would cover multiple topics/skills.

- Students had unlimited submission attempts; however, there was a due date, because tutors needed to review the submissions.

- Delegated marking was set up so that tutors only saw the relevant submissions.

If students struggled with the test questions, they could instead demonstrate mastery of the skill through the tutorial submission.
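The two routes can be combined into a single status per skill with a small calculation. The Python sketch below is a hypothetical illustration of that logic, not how the Learn Gradebook was actually configured: the function names and skill labels are made up. It assumes a skill is achieved if either of the two test attempts succeeded or a tutor marked a tutorial submission covering that skill as proficient, and that one tutorial review can update several skills at once.

```python
# Hypothetical sketch: combining the test and tutorial routes into one
# status per skill. Names are illustrative, not Learn's Gradebook API.
PROFICIENT = "Proficient"
NOT_YET = "Not Yet Proficient"


def skill_status(test_attempts, tutorial_result=None):
    """Return the status for one skill.

    test_attempts: marked test attempts (True = correct); at most two count.
    tutorial_result: True/False once a tutor has reviewed a tutorial
    submission covering this skill, or None if nothing has been reviewed.
    """
    passed_test = any(test_attempts[:2])
    passed_tutorial = tutorial_result is True
    return PROFICIENT if passed_test or passed_tutorial else NOT_YET


def apply_tutorial(tutorial_marks, skills):
    """Expand one tutorial review (which covers several skills) into
    per-skill results; skills the tutor did not mark stay None."""
    return {skill: tutorial_marks.get(skill) for skill in skills}


def progress(statuses):
    """Count skills achieved so far, e.g. for a student's overview."""
    achieved = sum(1 for s in statuses.values() if s == PROFICIENT)
    return achieved, len(statuses)


if __name__ == "__main__":
    skills = ["skill-1", "skill-2", "skill-3"]
    tutorial = apply_tutorial({"skill-2": True}, skills)
    tests = {"skill-1": [False, True], "skill-2": [False, False], "skill-3": [False]}
    statuses = {s: skill_status(tests[s], tutorial[s]) for s in skills}
    print(statuses)
    print(progress(statuses))
```

In this example, skill-1 is achieved on the second test attempt, skill-2 through the tutorial after two unsuccessful test attempts, and skill-3 remains “not yet proficient”, giving two of three skills achieved. Keeping the rule as a simple “any route succeeds” check mirrors the priority on simplicity described above.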

Results

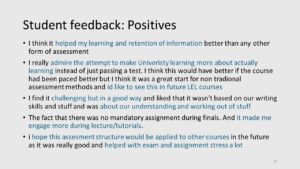

The semester came and went; students completed the syntax block and generally responded well to the new set-up. This year, further research is being conducted to determine how students’ attitudes towards assessment changed through taking the block. Some student feedback indicated that the set-up helped with retention of information and that it made “learning more about actually learning”. Other students noted that being able to work at their own pace helped with stress and anxiety around finals time. Tutors indicated that it helped them understand how their students were actually doing in the course.

From a TEL point of view, we avoided most hiccups, and the set-up worked as smoothly as possible given the limitations of the technology available. This is likely due to the simplicity of our approach, as well as the flexibility of the tutors on the course. The main issue was the lack of flexibility within the VLE, which meant we had to sacrifice some of the intended automation and rely on manual input instead.

Future plans

In future, it would be beneficial to use existing technologies to streamline this process further, so that manual input is minimal. This would reduce marking time and free up time for more deliberate work on the course. We would like to see virtual learning environments updated to accommodate this sort of innovative assessment in a way that is scalable and future-proofed. It will be interesting to see how this set-up can be scaled or implemented in a course or subject area where the skills to be achieved are less clearly defined. In these areas, SBG could be combined with other pedagogical techniques such as gamification, where repetition and low-stakes assessment could deepen learners’ knowledge. It will also be useful to see how students interact with the approach across multiple academic years; feedback and trends from this will shape how it is set up in the future.

Ultimately, SBG encourages both academics and tutors to look at their courses through a new lens. Alongside other educational practices such as flipped learning, SBG, when implemented well, can allow for more efficient use of time in a course. It can reduce stress and allow for greater transparency between tutor and student. Here, it’s possible to draw parallels with technology in education. When harnessed, technology can assist, enhance and revolutionise the way content is delivered. It can increase efficiency and free up time to deepen student skills. This process has demonstrated that technology is interwoven with modern-day pedagogy; it not only raises questions in the sphere of Higher Education, but answers them.

References

[1] O’Leary, M. (2021, May). Skills-Based Grading: An alternative approach to evaluation. Invited talk at the Workshop on Inclusive Teaching in Semantics presented by SALTED: the SALT Equity & Diversity committee. At Semantics and Linguistic Theory (SALT), virtually hosted by Brown University.

[2] Sylvia Parma, Mate Varadi and myself (Abby Anderson)

[3] Kastner, I. Skills Based Grading in LEL2A Syntax. University of Edinburgh Blogs. https://blogs.ed.ac.uk/itamar/sbg-lel2a/

Abby Anderson

Abby Anderson is a Learning Technologist in the School of Philosophy, Psychology and Language Sciences, with an interest in innovative assessment design and creating online spaces that are accessible to all.