In this extra post, Wesley Kerr describes the “Scoping AI Developments in EdTech at Edinburgh” (SADIE) project, set up to standardise an approach for service teams in Learning, Teaching and Web Services to test and evaluate the utility and suitability of the AI tools and features being made available in the centrally supported EdTech services. Wesley is a senior Learning Technology Advisor in Educational Design and Engagement, Learning, Teaching and Web Services Division (IS).

In the Learning, Teaching and Web Services (LTW) division of Information Services, we recently carried out a service improvement project entitled “Scoping AI Developments in EdTech at Edinburgh” (SADIE). The project was set up to standardise an approach for service teams to test and evaluate the utility and suitability of the AI tools and features being made available in existing centrally supported EdTech services.

Since the release of GPT-4 in March 2023, many technology companies have moved to add AI features to their products and services. Our Educational Technology vendors have not been immune to this trend, and AI features have been introduced to most services in the last 18 months. Some examples are:

- An AI detection feature was added to the similarity checking service Turnitin in April 2023.

- Various AI helper tools have been added to our virtual learning environment Learn since July 2023.

- Wooclap added an AI wizard to generate multiple choice or open questions in November 2023.

At present, every AI feature is either a paid-for extra to which we have not subscribed or, where the service team controls its release, disabled. Notably, Turnitin originally did not intend to give customers the option to disable the AI detection feature, but reversed its decision under pressure from UK institutions.

The approach developed by SADIE assesses the risks of adopting a particular feature and, in evaluating each feature, draws on the expertise of learning technologists and subject matter experts within the Schools as well as that of the service teams in Information Services.

The project team identified six primary risk categories, in line with the University Risk Policy and Appetite.

- Bias and Fairness – the data used to train the AI, on which its output is based, are largely unknown, so any bias in that data may be reproduced.

- Reliability and Accuracy – AI output is subject to error, for example misinterpreting the data or amplifying mistakes in it.

- Regulation and Compliance – data protection and privacy legislation; potential breach of copyright.

- Ethical and Social – the process by which AI produces its output is not transparent, and could be seen as unfair or out of line with University values.

- Business – conversely, there is a risk in not adopting AI: the University has been at the forefront of AI research, and it would seem strange if it did not allow colleagues to innovate with AI.

- Environmental – a Generative AI approach may require several times the energy of an equivalent non-Generative AI method.

It may never be possible to fully mitigate these risks when using AI tools, but service managers can remain vigilant about the risks posed by particular features and communicate them transparently to their users.

To minimise the burden on service teams, the SADIE process has been aligned with the existing processes for testing the non-AI features that are regularly introduced into our services.

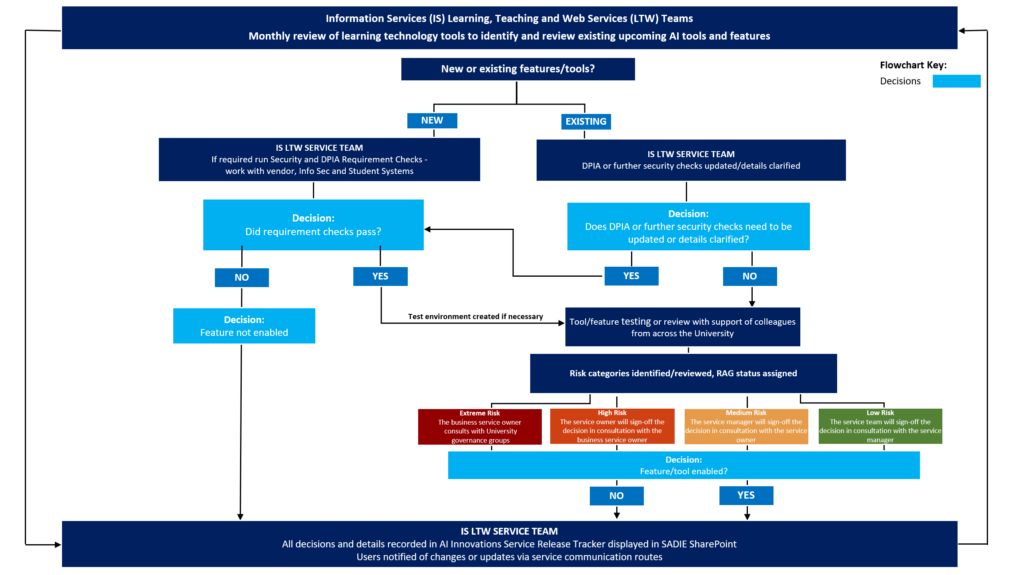

A brief outline of the process is given below; full details are available on the SADIE SharePoint site: Processes, Guidance and Support.

- Each service team is responsible for the identification and testing of AI features in their services.

- A check is made to ensure regulatory compliance with, for example, data protection and copyright.

- If the feature passes this check, testing begins, with input from the appropriate user community, to assign it a RAG (red, amber, green) status. This status determines whether escalation is required before a decision is made on enabling the feature.

- Once a decision has been reached, it is recorded on the AI Innovations Service Release Tracker on the SADIE SharePoint site.

- The decision will also be shared in the appropriate service’s user communication channels.

The following flow diagram captures the SADIE evaluation process:

Further information, including a full project report and more detail on the risk analysis, evaluation process and feature tracker, is available on the SADIE SharePoint site.

Wesley Kerr


Wesley is a senior Learning Technology Advisor. He joined the School of Chemistry in 1991 as a laboratory technician, later moving into computer support within the School. From there he quickly became responsible for supporting the School’s first moves into technology-enhanced learning. He joined IS in 2007, and his current role is supporting staff and students to get the most out of the learning technologies offered at Edinburgh, with a focus on student engagement and assessment.