In this post, Cristina Adriana Alexandru highlights MarkEd, an innovative online tool for marking in the School of Informatics. Cristina and her colleagues Andrius Girdzius, Chris Sun, Hamdani Azmi, Xiaofei Sun and Xisen Wang presented this work at the Learning and Teaching Conference 2022. This post is part of our Learning and Teaching Conference Hot Topic.

Marking and feedback are central parts of assessment, and offer considerable advantages to students, academics, the school and the university as a whole. High quality marking and feedback can help foster students’ learning and improve students’ confidence, while also showing academics where their course material and delivery could be improved. These processes make it possible for the school and university to maintain demand, abide by standards and achieve recognition. Moderation is closely related to the processes of marking and feedback, and involves taking steps towards ensuring their quality.

In the School of Informatics (SoI), at the University of Edinburgh, marking, feedback and moderation processes vary considerably between courses because of their subjects and learning outcomes (e.g. analytical vs reflective), types of assessment (e.g. programming vs essays), and preferences of the academics. While some courses have been using online marking tools since before the start of the pandemic, it is only in recent years that they have become prevalent for assessment purposes. Unfortunately, this has not led to consistency: while the school holds licences to numerous such tools, many of them do not cover the needs of our academics. Furthermore, they are not integrated with one another, or with the student record system, which leads to administrative overheads both for the academics and the course secretaries. As a result, many academics have developed their own tools that only work for their courses. This is unsustainable, and leads to inconsistency in marking and feedback quality for the students.

To address the above issues, since 2019, five students and their supervisor have been working on the development of MarkEd: an online tool for SoI academics, markers and students. This tool considers their needs, and includes pedagogic strategies for marking, feedback and moderation. We have taken the following steps in developing MarkEd:

Part 1 (2019-2020) – led by Andrius Girdzius

We collected requirements for MarkEd from SoI academic staff (9), administrative staff (7), markers (6) and students (20). We iteratively developed a low-fidelity (on paper) and then medium-fidelity prototype (in Figma) for the marker/moderator interface. The prototype was evaluated with academics (8) and markers (8), with positive results on its main functionality but views that its usability could be considerably improved.

Part 2 (2020-2021) – led by Chris Sun and Hamdani Azmi

We focused on three aspects of quality (marking fairness, efficiency and feedback quality) to design extensions to the original prototype, also in Figma. First, we approached SoI academics (4), markers (8) and students (12 – only for questions around feedback) to identify the features that could be added to MarkEd to support high quality marking and feedback. Then, these features were added to the design iteratively. Further evaluation with SoI academics (6), markers (8) and one learning technologist was very positive. It suggested that the features could help individual markers stay consistent with other markers and save time in writing feedback. Concerns expressed included being timed, using feedback templates for experienced markers, and markers’ progress being monitored by academics.

Part 3 (2021-2022) – led by Xisen Wang and Xiaofei Sun

We started the implementation of MarkEd, following the original design from part 1, extensions from part 2, and evaluation results from both. The technology used included Django as the development framework, Python as the back-end language, Bootstrap for the interface design, and MySQL for the database. We also conducted an evaluation of the platform’s usability and impact with SoI academics (5), markers (6) and students (6).

Interfaces

The currently implemented prototype includes interfaces for academics/markers and students, to which users are taken based on their login credentials.

In their interface, academics can:

- set up a new assessment, its structure and its marking guide,

- create submission boxes,

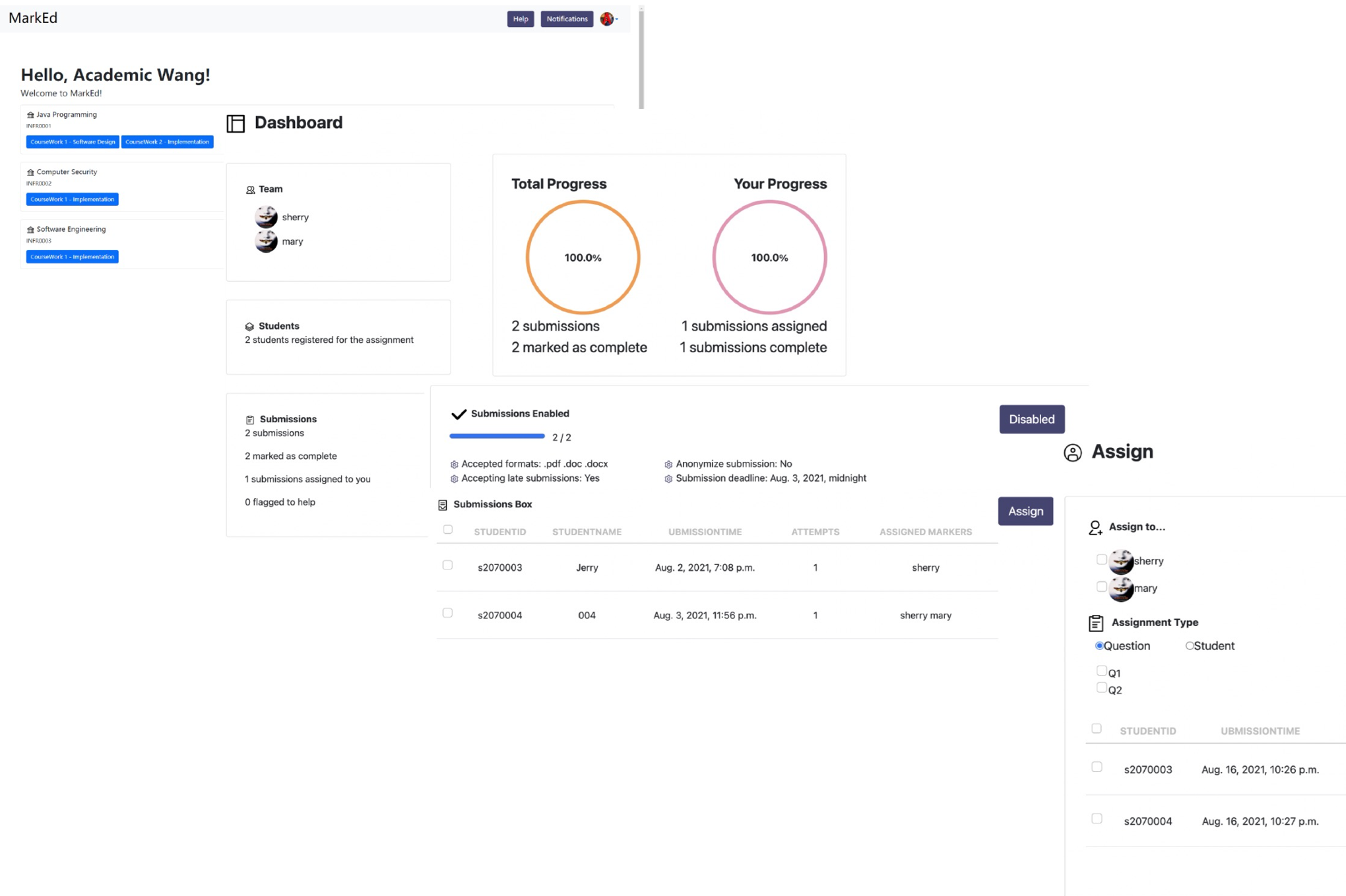

- add and allocate markers per question and/or student (Figure 1),

- change user rights on the system,

- get an overview of progress with marking,

- set up automated jobs (e.g. emailing them when all marking is finished).
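As a rough illustration of the allocation and progress features listed above, the following is a minimal sketch in plain Python. It is not MarkEd's actual code or data model: the `Allocation` class, the round-robin strategy and all function names are assumptions made purely for this example.

```python
from dataclasses import dataclass
from itertools import cycle
from typing import Optional


@dataclass
class Allocation:
    """Hypothetical unit of work: one marker marks one question for one student."""
    student: str
    question: str
    marker: str
    mark: Optional[float] = None  # stays None until a mark has been entered


def allocate_by_question(students, questions, markers):
    """Give each question to a single marker (round-robin), for every student."""
    marker_for_question = dict(zip(questions, cycle(markers)))
    return [
        Allocation(student, question, marker_for_question[question])
        for student in students
        for question in questions
    ]


def progress(allocations):
    """Return {marker: (marked, total)} as a simple progress overview."""
    summary = {}
    for allocation in allocations:
        marked, total = summary.get(allocation.marker, (0, 0))
        summary[allocation.marker] = (
            marked + (allocation.mark is not None),
            total + 1,
        )
    return summary


def all_marking_finished(allocations):
    """Condition an automated job could check before notifying the academic."""
    return all(allocation.mark is not None for allocation in allocations)
```

Allocating by question means each question is marked by the same person for every student, which is one common way of supporting consistency. Allocating per student would be the same idea with the roles of `students` and `questions` swapped.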

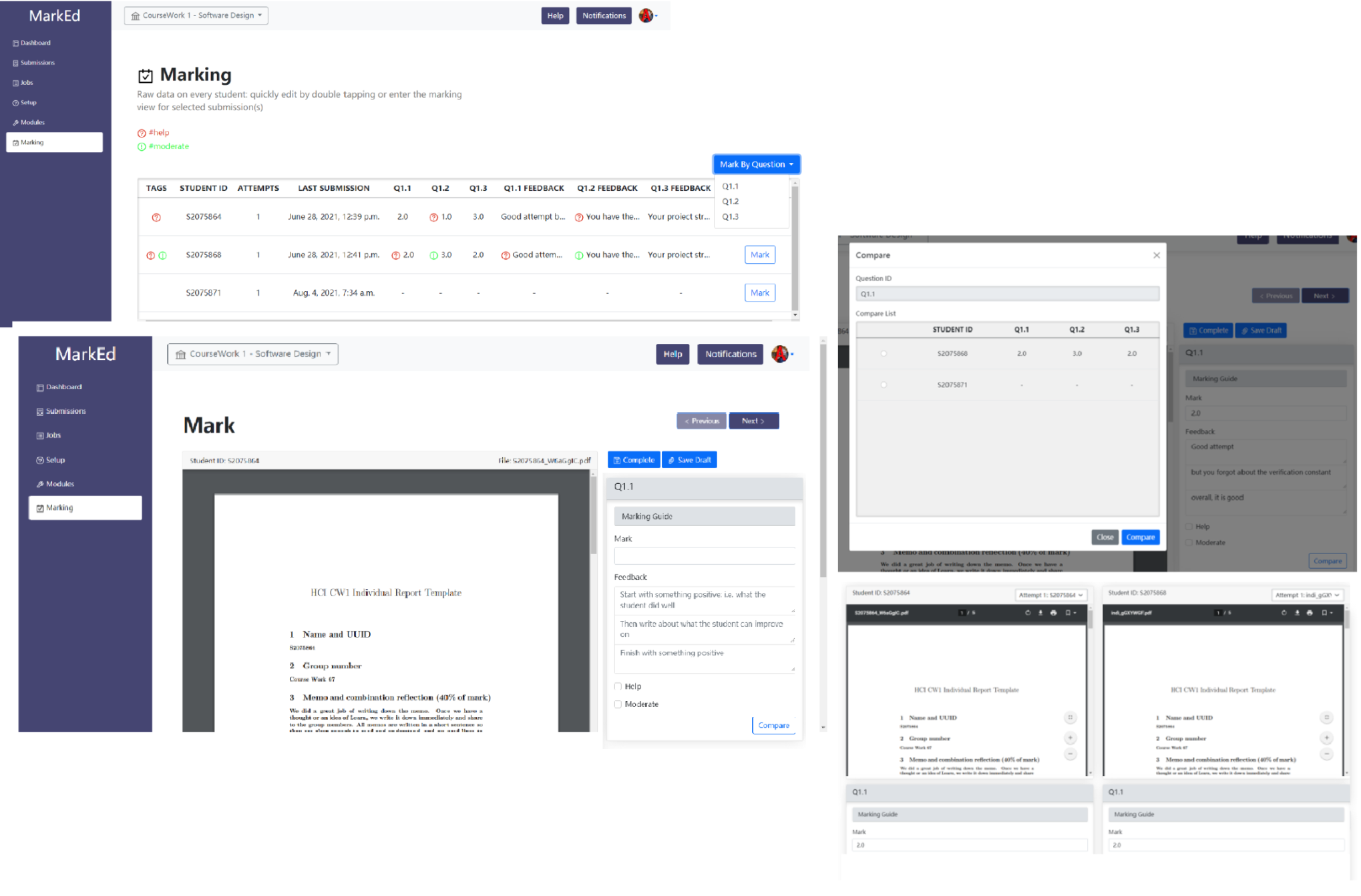

Markers (Figure 2) can:

- mark submissions wholly or by question, and in doing so tag any submission to request help from the academic or to propose it for moderation,

- be guided in the format of the feedback,

- compare with previous marks for fairness.
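The comparison with previous marks could work in many ways, and this post does not specify how MarkEd does it. As one hedged sketch, the hypothetical function below compares a new mark with the average of marks already given for the same question, and flags it when the gap exceeds a tolerance. The function name, the returned fields and the default tolerance of 10 points are all assumptions for illustration.

```python
from statistics import mean


def compare_with_previous(new_mark, previous_marks, tolerance=10.0):
    """Compare a new mark with the average of earlier marks for the same question.

    Hypothetical helper: returns the average, the signed difference, and a flag
    that is True when the new mark is more than `tolerance` points from the average.
    """
    if not previous_marks:
        # Nothing to compare against yet, so nothing is flagged.
        return {"average": None, "difference": None, "flag": False}
    average = mean(previous_marks)
    difference = new_mark - average
    return {
        "average": average,
        "difference": difference,
        "flag": abs(difference) > tolerance,
    }
```

A flag here would only prompt the marker to take a second look. It would not change the mark, since a large difference may simply reflect a genuinely strong or weak submission.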

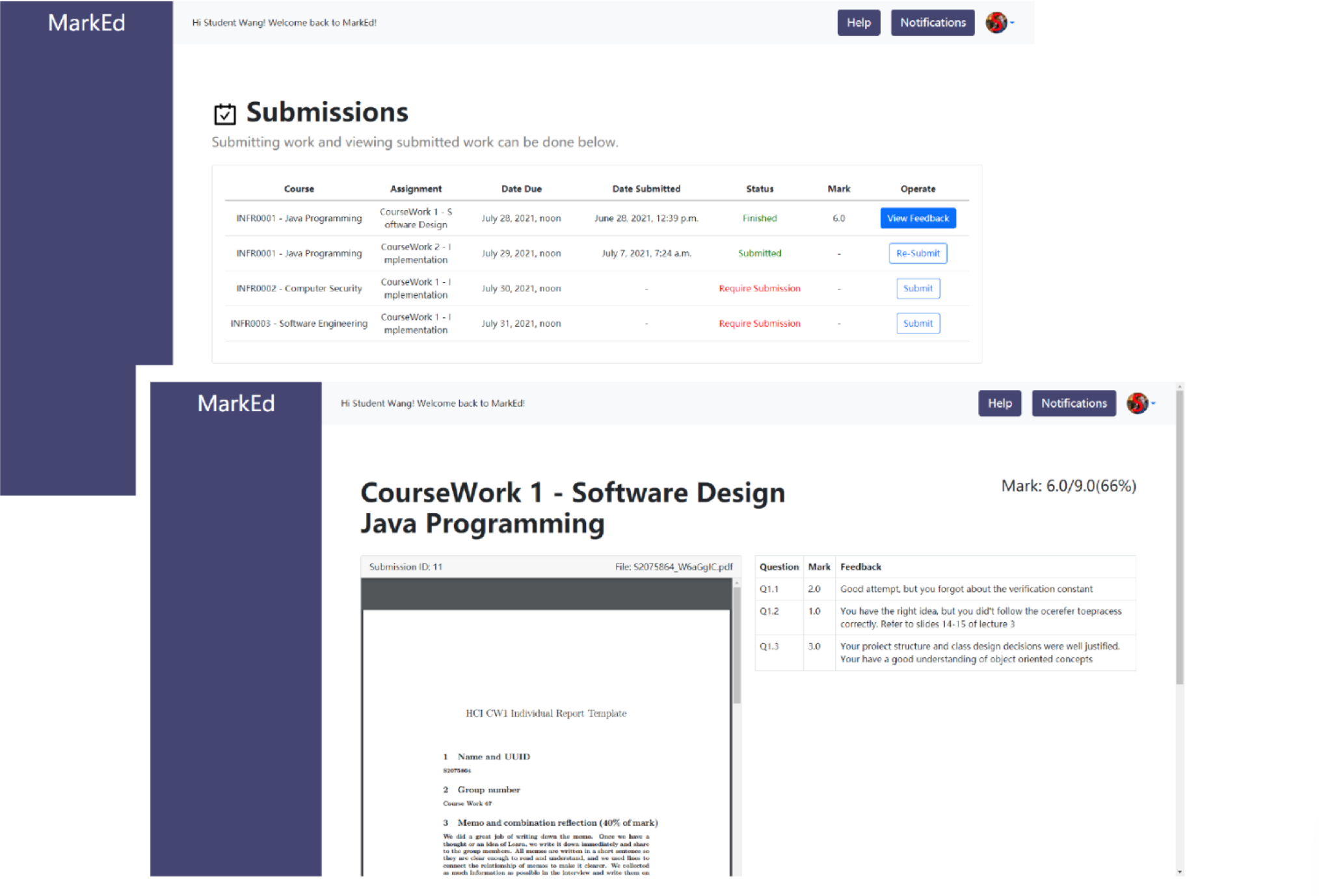

Students (Figure 3) can:

- submit/re-submit assignments,

- view their feedback.

During the latest evaluation, academics and markers rated the usability of both interfaces as ‘good’, while students rated that of their interface as ‘excellent’, and the GUI was praised as being clean and modern. The setup and automated jobs pages were seen as more difficult, and guidance for them was suggested.

Regarding potential impact, most academics and markers agreed that MarkEd would help them reduce marking errors, and that it is at least somewhat helpful in saving time, ensuring fairness, moderating marks, and markers getting help from academics. Students unanimously considered that the system would make it easier for them to submit assignments and to check marks and feedback. However, as the participant numbers were low, more evaluation is needed to confirm these results.

Figure 1: Checking progress on marking and assigning markers on the academic’s pages (from MSc dissertations by Xisen Wang and Xiaofei Sun)

Figure 2: The marker pages (from MSc dissertation by Xisen Wang)

Figure 3: The student pages (from MSc dissertation by Xisen Wang)

We plan to apply for a PTAS grant to secure funding for the future implementation of MarkEd. Immediate future work would include finalising the implementation, taking into consideration the latest evaluation suggestions as well as the suggestions received during our workshop at The University of Edinburgh Learning and Teaching Conference. We could also add a variety of possible automated jobs, including: automatic marking through integration with external systems; adding modules for standard functionality; command line interface access; reports generation; and public APIs for integration with other systems.

We would like to continue working in iterations, conducting an evaluation after each, and finally run a longitudinal study with several SoI course teams using MarkEd during a semester. Ultimately, our vision is that acceptance and adoption of MarkEd in SoI could be followed by its adaptation to the needs of other schools in our University.

Cristina Adriana Alexandru

Cristina Adriana Alexandru is a Lecturer in the School of Informatics and a Senior Fellow of the Higher Education Academy. She teaches a large-scale, second year undergraduate Software Engineering course, leads the training programme for teaching support members of staff, organises a summer programming course for incoming first year students, and line manages the team of University Teachers and University Tutors. She is passionate about teaching and building usable and useful technology for teaching and learning, which is why many of her undergraduate, Masters and research projects are in these areas. She hopes these projects will have a real impact on her school and community.