Some months ago, an “Angry Developer” posted their opinion on the negative effect of AI code tools on programmers’ core skills (Why Copilot is making programmers worse at programming).

This got me thinking about the effect of AI tools built on large language models (LLMs) on academic skills. In this post, I share some thoughts that will hopefully give a useful perspective on why, or when, not to turn to AI, beyond the perceived rule “because the uni said ‘No!’”

Erosion of academic skills

Just as AI can cause programmers to lose their deep understanding of coding principles, it can also cause any of us to lose essential academic skills. An important reason for this is that LLMs have no knowledge of their own! They literally make stuff up (Hicks, Humphries, and Slater 2024). Relying too heavily on AI to write reports will therefore hinder students’ development of critical reasoning and writing skills. If students mostly use AI to write their work, there will be a noticeable (negative) effect on how they learn to structure arguments and use evidence to support their narrative effectively. As a marker, one of the things that really stands out about AI-generated work is the “voice”. By relying on AI, students not only remove their voice from the content, but also lose the opportunity to ever develop their own voice in the first place.

Reduced practical learning

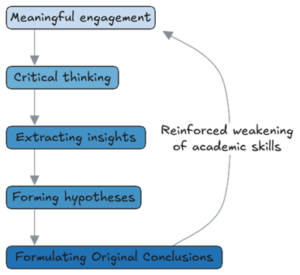

In programming, we never get our code right first time, and our feedback comes in the form of error messages; working through those errors is how we learn. The same is true in our own studies: engaging directly with material is essential for “deep learning” (pun not really intended, but I’ll take it). For example, if students routinely use AI to quickly summarise articles or generate lab reports, they will find it more difficult to develop the whole chain of skills those tasks are meant to build.

And most importantly, if the work is mostly AI-generated, the feedback the student receives does not apply to them personally. So it is worthless!

Figure 1: Over-reliance on AI tools is likely to weaken key academic skills, which, once weakened, will lead to a cycle of continued skills impoverishment. (Totally based on my opinion; I’ve got no refs for this!)

Dependence on AI for solutions

AI-generated code is often… just… a bit… odd! And that is assuming the code even works. So, when programmers use AI to generate code, they might not fully understand the solutions it has provided. There is a parallel here in academic settings, where students might use AI to complete assignments without fully engaging with the underlying concepts. I think it should be self-evident that copy-pasting a generated response does nothing to help you as an academic. But the problem extends beyond the academic context. Imagine being asked a question identical to one from a university exam… in a job interview…

Narrowed creative thinking

AI tools can definitely be an efficient way to complete a task, but they might limit creative thinking. In academic work, students might miss out on the creative process of developing unique (think “novel”) ideas and solutions. Yes, AI might sometimes suggest a promising idea, but it really does not have any clue about the topic. And, with an over-reliance on AI for our work, neither do we. For example, using AI to brainstorm essay questions or projects can prevent students from both conceiving and exploring their own interesting ideas. Again, if it helps, I often urge my students to think in terms of “novelty”, which is usually a requirement for publishing later in their careers. This is an important professional skill they should be developing during their studies, and one that is at risk if they over-rely on AI.

Promoting students’ lived experience, individuality and own voice

AI models are trained on vast datasets from the internet, reflecting the “most common” values and perspectives, which are often dominated by only a few societal groups. This can reinforce those groups’ viewpoints while under-representing diverse experiences, especially those of minority groups. Relying heavily on AI for ideas might dilute students’ unique lived experience, regardless of their background. Remember, AI lacks personal insight and creativity. Encouraging students to make sure their voices are evident in their work is a critical approach to navigating this new AI era in learning and teaching, because this is what distinguishes each student’s work from that of others. Students need to be shown how to critically assess AI-generated content, particularly when they have unique perspectives to share. We need to highlight to students that their individuality, lived experiences and perspectives are in danger of being lost in an AI-generated assignment. These are the moments when individuality really matters.

Balancing AI use

While AI can be a powerful tool to enhance learning and productivity, it’s important to emphasise to students that it should be used as a supplement rather than a replacement for their own efforts. Encouraging a balanced approach can help maintain and even enhance their critical thinking skills. For example, they could use AI to check their work or generate ideas, but they also need to engage deeply with the material and develop their own understanding and solutions.

In summary, while AI tools like Copilot can offer significant benefits, they also pose risks to the development of critical skills, our own ‘voice’ and individuality. It’s crucial for students to engage actively with their own work and use AI as a supportive tool rather than a crutch.

References

Hicks, Michael Townsen, James Humphries, and Joe Slater. 2024. “ChatGPT Is Bullshit.” Ethics and Information Technology 26 (2): 38. https://doi.org/10.1007/s10676-024-09775-5.

John Wilson

John is a Teaching Fellow in the Usher Institute Data-Driven Innovation Talent team where he teaches on the Data Science for Health and Social Care programme. He has a background in ecology and conservation science, and spent a couple of years teaching data science in a Scottish tech academy.