In this post, Nikhen Sanjaya Nyo, Cristina Adriana Alexandru and Aurora Constantin from the School of Informatics describe the work conducted as part of an undergraduate project to improve Gruepr [1], an open-source tool that helps instructors form student groups more efficiently. In particular, they highlight why such a tool matters and share insights gained during its development and evaluation. They presented a demonstration of Gruepr at the Learning and Teaching Conference 2025. This post is part of the Group Work series.

Why grouping tools matter

Group work is a common and essential component of university learning, providing students with opportunities to learn by doing, engage in real-world tasks, and build teamwork skills [2]. Well-designed groups promote deeper learning and social belonging. However, poorly organised or unevenly balanced groups can diminish these benefits and potentially disadvantage students, particularly those from underrepresented backgrounds [3]. For example, research suggests that in imbalanced groups with only one female student, conversation can be dominated by her peers [4]. Students whose nationality is a minority within their group may end up with lower grades [5] or become isolated by their peers [6].

To create fair and effective groups, instructors often rely on heuristics: rules or criteria that guide how students are grouped [1]. Manually forming groups with such heuristics is time-consuming and complex, especially in large classes [3]. Grouping tools help automate this process, saving time while promoting fairness and inclusion [1].

What is Gruepr?

Gruepr is an open-source, desktop-based tool that automates student group formation based on instructor-defined heuristics. Selected for this project after a review of existing tools, Gruepr is accessible, free, and privacy-conscious, keeping student data securely on the instructor’s local computer.

Functionality and improvements made

To understand how Gruepr could better support instructors, we conducted a study with five teaching staff and one Human-Computer Interaction (HCI) expert from the School of Informatics. The study comprised an interview exploring participants’ needs and challenges in forming groups, a think-aloud walk-through of Gruepr, a post-task interview on Gruepr’s usability and features, and a short questionnaire assessing usability (SUS [7]), workload (NASA-TLX [8]), and likelihood of adoption. This revealed how teaching staff allocated students to groups in practice, what they thought of Gruepr, and where improvements were most needed.
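The post does not say how the questionnaire responses were scored. As a point of reference, the sketch below assumes the standard SUS scoring described by Brooke [7] (ten items answered on a 1–5 scale) and the unweighted "raw TLX" variant of NASA-TLX, which simply averages the six subscale ratings. The original NASA-TLX procedure [8] also includes a pairwise weighting step that this sketch omits. The function and subscale names are illustrative.

```python
# Assumed names for the six NASA-TLX subscales (ratings on a 0-100 scale).
TLX_SUBSCALES = {"mental", "physical", "temporal", "performance", "effort", "frustration"}


def sus_score(responses: list[int]) -> float:
    """Standard SUS score (0-100) from ten responses on a 1-5 scale, in item order."""
    if len(responses) != 10 or not all(1 <= r <= 5 for r in responses):
        raise ValueError("SUS needs exactly ten responses on a 1-5 scale")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd-numbered items are positively worded and contribute r - 1;
        # even-numbered items are negatively worded and contribute 5 - r.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    # Each item contributes 0-4, so the total is 0-40; scale it to 0-100.
    return total * 2.5


def raw_tlx(ratings: dict[str, float]) -> float:
    """Unweighted ("raw") NASA-TLX workload: the mean of the six subscale ratings."""
    if set(ratings) != TLX_SUBSCALES:
        raise ValueError(f"expected ratings for {sorted(TLX_SUBSCALES)}")
    return sum(ratings.values()) / len(ratings)
```

For example, alternating responses of 4 and 2 (mild agreement with every positively worded item and mild disagreement with every negatively worded one) give a SUS score of 75.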

Initial functions and identified issues

When instructors first used Gruepr, they would either upload student data or collect it through a form, then assign a data type (such as gender, schedule, or multiple-choice response) to each field. These data types determined which grouping heuristics could be applied. However, our study participants found both the upload process and the data type assignment unintuitive.

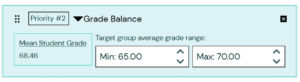

Once data types were set, instructors could define grouping heuristics based on group size, gender, minority status, schedule availability, teammate preferences, and class sections. While study participants appreciated the range of heuristics, they found them insufficiently flexible. For example, although Gruepr could ensure that no student was isolated by gender or minority status, instructors could not express more specific constraints, such as requiring at least three women in a seven-person group. Likewise, while the tool supported homogeneous and heterogeneous grouping for categorical data, it could not balance groups by their average numeric grade, a widely supported grouping heuristic [9].
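The post does not describe how Gruepr’s optimiser balances grades. A simple, widely used way to even out group averages, swapped in here purely for illustration, is a serpentine ("snake") draft: sort students by grade, then deal them into groups in alternating directions so each group receives a mix of higher and lower grades. The function names and the student-to-grade dictionary are hypothetical.

```python
def snake_draft(grades: dict[str, float], n_groups: int) -> list[list[str]]:
    """Deal students into n_groups in serpentine order of descending grade."""
    if n_groups < 1:
        raise ValueError("n_groups must be at least 1")
    # Highest grade first; sorted() is stable, so tied students keep input order.
    ranked = sorted(grades, key=lambda s: grades[s], reverse=True)
    groups: list[list[str]] = [[] for _ in range(n_groups)]
    for i, student in enumerate(ranked):
        round_no, pos = divmod(i, n_groups)
        # Even-numbered rounds deal left to right, odd-numbered rounds right to left.
        idx = pos if round_no % 2 == 0 else n_groups - 1 - pos
        groups[idx].append(student)
    return groups


def group_averages(groups: list[list[str]], grades: dict[str, float]) -> list[float]:
    """Mean grade of each non-empty group."""
    return [sum(grades[s] for s in g) / len(g) for g in groups if g]
```

With six students graded 90, 85, 80, 75, 70 and 65, two groups come out averaging about 78.3 and 76.7, while three groups each average exactly 77.5. A snake draft considers grades alone; combining grade balance with other heuristics, as Gruepr does, calls for a scoring or optimisation approach instead.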

Participants also reported usability challenges. The toggle for choosing homogeneity or heterogeneity for multiple-choice criteria was confusing, and assigning teammate preferences was slow without a student search function. The weighting system for setting the relative importance of heuristics was unclear, and heuristics such as schedule availability and teammate preferences could not be weighted at all. Finally, the system summarised how well each generated group met the heuristics as a single numeric score, which participants found difficult to interpret.

Improvements to initial functions

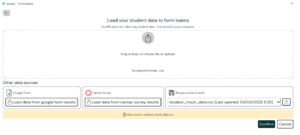

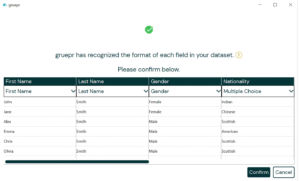

These insights guided the redesign of several interfaces. The upload process now uses a drag-and-drop interface (Fig.1). The data type assignment screen was given a spreadsheet-style layout with a built-in help button that explains each data type and its corresponding heuristics (Fig.2).

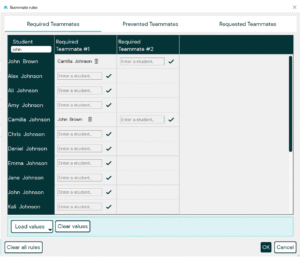

Several improvements were made to the heuristic-setting interface (Fig.3). The confusing toggle for choosing homogeneity or heterogeneity of multiple-choice heuristics was replaced with radio buttons and icons. To make setting heuristic importance more intuitive, the weights system was replaced with a drag-and-drop ranking, and buttons were added that let instructors easily add or remove heuristics. The teammate preference interface (Fig.4) now includes a student name search for faster setup.

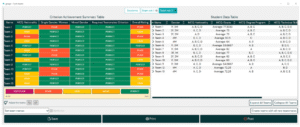

A major redesign was made to the results display (Fig.5): instead of a single numeric score, a traffic-light table now visually communicates how well each group satisfies each criterion, offering clearer and more actionable insights for instructors.
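The post does not specify how each cell of the traffic-light table is computed. Assuming a hypothetical per-group, per-criterion satisfaction score between 0 and 1, a minimal sketch of the colour mapping and the resulting table could look like this. The thresholds, function names and score representation are illustrative assumptions, not Gruepr’s.

```python
def traffic_light(score: float, amber_from: float = 0.5) -> str:
    """Map a satisfaction score in [0, 1] to a colour (illustrative thresholds)."""
    if not 0.0 <= score <= 1.0:
        raise ValueError("score must be in [0, 1]")
    if score == 1.0:
        return "green"  # criterion fully met
    if score >= amber_from:
        return "amber"  # criterion partly met
    return "red"        # criterion largely unmet


def results_table(scores: dict[str, dict[str, float]]) -> str:
    """One row per group, one column per criterion.

    Assumes every group has a score for every criterion.
    """
    criteria = sorted({c for row in scores.values() for c in row})
    lines = ["group".ljust(10) + "".join(c.ljust(12) for c in criteria)]
    for group, row in scores.items():
        cells = "".join(traffic_light(row[c]).ljust(12) for c in criteria)
        lines.append(group.ljust(10) + cells)
    return "\n".join(lines)
```

Showing a colour per criterion, rather than one combined number, lets an instructor see at a glance which specific heuristic a group fails.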

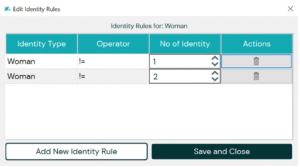

Finally, two new features were added to increase flexibility: a rule-based interface for gender and minority heuristics (Fig.6), which allows instructors to define constraints using logical operators, and a grade-balance heuristic (Fig.7), which lets instructors set target group grade averages to promote more balanced groups. The grade-balance heuristic was added after the final evaluation, which is described in the next section.
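To illustrate what a constraint such as "at least three women in a seven-person group" looks like once expressed with logical operators, the sketch below treats each rule as a yes/no test on a whole group, combines rules with AND/OR, and adds a check that a group’s mean grade lies near a target. The student fields (`gender`, `minority`, `grade`), the operator strings and all function names are assumptions for illustration; this is not Gruepr’s rule syntax or implementation.

```python
import operator
from statistics import mean
from typing import Callable

# A student is a record of attributes; a rule is a yes/no test on a whole group.
Student = dict[str, object]
Rule = Callable[[list[Student]], bool]

# Comparison operators a rule-based interface might offer (an assumption).
COMPARATORS: dict[str, Callable[[int, int], bool]] = {
    ">=": operator.ge, "<=": operator.le, "==": operator.eq,
    ">": operator.gt, "<": operator.lt, "!=": operator.ne,
}


def count_rule(attribute: str, value: object, op: str, threshold: int) -> Rule:
    """Rule: (number of members whose attribute equals value) <op> threshold."""
    compare = COMPARATORS[op]  # raises KeyError for an unsupported operator

    def rule(group: list[Student]) -> bool:
        count = sum(1 for s in group if s.get(attribute) == value)
        return compare(count, threshold)

    return rule


def all_of(*rules: Rule) -> Rule:
    """Logical AND: every rule must hold."""
    return lambda group: all(r(group) for r in rules)


def any_of(*rules: Rule) -> Rule:
    """Logical OR: at least one rule must hold."""
    return lambda group: any(r(group) for r in rules)


def grade_near_target(target: float, tolerance: float) -> Rule:
    """Rule: the group's mean grade lies within tolerance of target."""
    return lambda group: abs(mean(float(s["grade"]) for s in group) - target) <= tolerance
```

For instance, `all_of(count_rule("gender", "woman", ">=", 3), any_of(count_rule("minority", True, "==", 0), count_rule("minority", True, ">=", 2)))` requires at least three women and ensures that no minority student is the only one in the group, which generalises the original "no isolated student" behaviour.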

Evaluation

After these updates, we conducted a final evaluation with the same participants, using the same methods as before. The findings showed that the updates improved usability, reduced participants’ cognitive workload, and increased their likelihood of adopting the tool in their courses. The evaluation also identified valuable directions for further development: validating the correctness of the grouping algorithm, and further improving usability, for example by offering instructions and clearer guidance on how to make the most of Gruepr’s features. Participants also suggested additional functionality, such as updating student rosters and regrouping as classes evolve. Finally, persistent ambiguities in Gruepr’s data-type system indicated that it needs further redesign.

Conclusion

By automating group formation using instructor-defined heuristics, Gruepr saves time and supports the formation of fairer and more effective groups. This project demonstrates how integrating research, user feedback, design, and implementation can enhance educational tools and promote fairness in group-based learning.

References

[1] Hertz, J.L. (2022). “Gruepr: A software tool for optimally partitioning students onto teams”. Computers in Education Journal, 12.
[2] Davies, W.M. (2009). “Groupwork as a form of assessment: Common problems and recommended solutions”. Higher Education, 58, pp. 563–584.
[3] Layton, R.L. et al. (2001). “Design and validation of a web-based system for assigning members to teams using instructor-specified criteria”. Advances in Engineering Education, 2(1), n1.
[4] Carli, L.L. (2001). “Gender and social influence”. Journal of Social Issues, 57(4), pp. 725–741.
[5] Shaw, J.B. (2004). “A fair go for all? The impact of intragroup diversity and diversity management skills on student experiences and outcomes in team-based class projects”. Journal of Management Education, 28(2), pp. 139–169. https://doi.org/10.1177/1052562903252514
[6] Safipour, J., Wenneberg, S. and Hadziabdic, E. (2017). “Experience of Education in the International Classroom–A Systematic Literature Review”. Journal of International Students, 7(3), pp. 806–824.
[7] Brooke, J. (1996). “SUS: A quick and dirty usability scale”. In: Usability Evaluation in Industry. Accessed: April 1, 2025.
[8] Hart, S.G. and Staveland, L.E. (1988). “Development of NASA-TLX (Task Load Index): Results of empirical and theoretical research”. In: Human Mental Workload. Ed. by Peter A. Hancock and Najmedin Meshkati. North Holland Press, pp. 139–183. https://www.sciencedirect.com/science/article/pii/S0166411508623869
[9] Lyutskanova, G. (2024). “Systematic literature review on the team formation problem”. https://essay.utwente.nl/103550/

Nikhen Sanjaya Nyo

Nikhen is a recent graduate of Computer Science from the School of Informatics, University of Edinburgh. He is currently working as a Software Engineer and is interested in building technology to solve problems.

Cristina Adriana Alexandru

Cristina Adriana Alexandru is a Lecturer in the School of Informatics, BEng Software Engineering Programme Director and a Senior Fellow of the Higher Education Academy. She teaches large-scale undergraduate Software Engineering courses, leads the Computer Science Education Group, organises the school’s training programme for academic staff, and line manages its team of teaching-focused academics. She is passionate about teaching and building usable and useful technology for teaching and learning, which is why many of her undergraduate, Masters and research projects are in these areas. She hopes these projects will have a real impact on her school and community.

Aurora Constantin

Aurora Constantin is a Lecturer at the University of Edinburgh’s School of Informatics and a Senior Fellow of the Higher Education Academy. She leads the School’s teaching support staff training programme and co-organises the Computer Science Education Group. Her research encompasses Educational Technology and Participatory Design, including projects involving people with special needs. She is a passionate advocate for accessibility, with a particular interest in accessible and assistive (educational) technologies.
