In this Spotlight on LOUISA post, Course Organiser, Madeleine Campbell, and Learning Technologist, Jamie Auld Smith, share their experience of implementing an integrated Learn assignment rubric with the aim of enhancing the consistency of essay feedback provided to postgraduate students while ensuring a practical and transparent marking and moderation workflow. Jamie and Madeleine share this work on behalf of the Holyrood Learning Technology team and the ‘Language and the Learner’ course team, part of the MSc Language Education programme within the Institute for Language Education (ILE) at Moray House School of Education and Sport.

Context

Language and the Learner is a large course with 300+ students, 15 marking groups, and 9 markers. The students’ summative assessment consists of an essay with a reflective element.

In previous years, students submitted their essay to a Turnitin dropbox. Detailed assessment criteria were provided as a PDF within the Learn course. Markers gave written feedback as an overall comment in the Turnitin Feedback Studio.

In 2023/24, increased time constraints for marks and feedback turnaround, combined with the large student cohort, multiple markers, and relatively complex marking scheme, led to concerns around feedback consistency, as well as transparency in moderation and meta-moderation of this feedback.

Rubric options

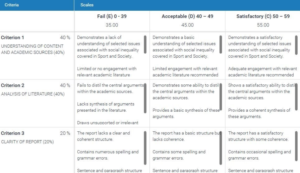

For 2024/25, to help more clearly align the feedback provided to students with the detailed assessment criteria, we explored various options for integrated rubrics within the Learn VLE.

The rubric tools within the Turnitin dropbox were considered but seen as limited.

- Turnitin rubric: markers can select a band for each criterion, and students will see the band awarded with the relevant descriptors as part of their feedback. However, only one point value for each band in the rubric is available, limiting the markers’ ability to award a more specific percentage mark for each criterion

- Turnitin grading form: markers can select a mark for each criterion from a full percentage range (0 – 100). However, the specific bands and descriptors awarded are not provided to students, requiring markers to manually copy relevant information from the detailed assessment criteria.

In contrast, the Learn rubric tool allows for criterion marks to be selected from a full point or percentage range for each band. This offers greater flexibility for markers to award specific percentage marks for each criterion while still displaying the relevant bands and descriptors as feedback to students.
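To make the difference concrete, the following is a minimal sketch of a percentage range rubric, not a description of how Learn itself calculates marks. The band names, band boundaries, descriptors, criterion names, and weights are all hypothetical and are not taken from the course's assessment criteria. The sketch shows the key idea: the marker picks any mark within a band's range, and the band and descriptor shown to the student follow from that mark.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Band:
    name: str
    low: int    # inclusive lower bound, in percent
    high: int   # inclusive upper bound, in percent
    descriptor: str


# Hypothetical bands and descriptors, for illustration only.
BANDS = [
    Band("Fail", 0, 49, "Does not meet the criterion."),
    Band("Pass", 50, 59, "Meets the criterion adequately."),
    Band("Merit", 60, 69, "Meets the criterion well."),
    Band("Distinction", 70, 100, "Meets the criterion excellently."),
]


def band_for(mark: int) -> Band:
    """Return the band whose percentage range contains the mark."""
    for band in BANDS:
        if band.low <= mark <= band.high:
            return band
    raise ValueError(f"Mark {mark} is outside the 0-100 range")


def overall_mark(marks: dict[str, int], weights: dict[str, int]) -> float:
    """Weighted average of criterion marks (weights are an assumption)."""
    total_weight = sum(weights.values())
    return sum(marks[c] * weights[c] for c in weights) / total_weight


def student_feedback(marks: dict[str, int]) -> dict[str, tuple[int, str, str]]:
    """Pair each criterion mark with the band and descriptor the student sees."""
    return {
        criterion: (mark, band_for(mark).name, band_for(mark).descriptor)
        for criterion, mark in marks.items()
    }


# Hypothetical criteria and weights (percent of the overall mark).
marks = {"Argument": 68, "Use of literature": 72, "Reflection": 55}
weights = {"Argument": 40, "Use of literature": 40, "Reflection": 20}

print(overall_mark(marks, weights))  # 67.0
for criterion, (mark, band, descriptor) in student_feedback(marks).items():
    print(f"{criterion}: {mark}% ({band}) {descriptor}")
```

With a single point value per band, a marker in this sketch could only award one fixed mark for "Merit"; with a range, 61 and 68 are both available while the student still sees the same band and descriptor.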

New integration

In previous years, using the Learn rubric tool was not an option, as written assignments needed to be submitted to a Turnitin dropbox to access the similarity checker. The Learn assignment dropbox is primarily used within the School for multimedia or group assessments which do not require the similarity checker.

This year, however, we had the opportunity to join the Turnitin Integration in Learn Assignments Early Adopter Programme. This new integration allows instructors to use Turnitin’s similarity checker within a Learn assignment, affording access to the integrated Learn rubric tool for essay assignments.

Revised approach

For 2024/25, we recreated the detailed assessment criteria as a Learn percentage range rubric. Guidance to markers for individualised feedback was also updated to specify that comments should be content-specific, with examples from the submitted assignment. Phrases from the criterion descriptors should not be duplicated in this feedback, as these are already included in the standard feedback provided by the rubric.

Guidance videos were created and shared with the marking team on how to use the new rubric tool and Learn feedback studio, as well as how to view different marking groups in the dropbox using the delegated marking feature. Support from a Learning Technologist was provided during the standardisation meeting and throughout the marking process.

Evaluation

“Because the students could consult the standard rubric descriptors for each criterion and band directly on the screen when they received their results, the role of the markers’ comments in the feedback window could be more focused on providing constructive, content-specific observations and suggestions for each criterion.” – Madeleine Campbell, Course Organiser

Learn Rubric

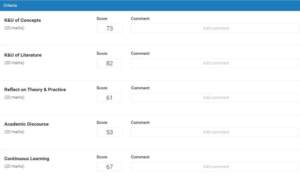

Our evaluation suggests that the Learn rubric tool, combined with the revised feedback guidance, has enhanced the quality and consistency of feedback.

- By embedding standard descriptors for each criterion within the rubric, markers can focus on content-specific comments that engage with learners’ understanding rather than reiterating the standard descriptors

- Individualised feedback is now more consistent in length, with all criteria systematically addressed

- The use of specific percentage marks for each criterion has improved the validity of marking and moderation, while making the process more transparent

- No concerns regarding feedback quality were raised by learners with the Course Organiser.

Learn Feedback Studio

Markers also highlighted some benefits of the Learn feedback studio compared to Turnitin:

- The improved interface is easier to read, allowing markers to efficiently check descriptors, assign marks, and provide feedback while keeping the script open

- Navigating between students within a marking group is smoother

- The ability to copy and paste from submissions enables markers to include specific examples in their feedback, enhancing clarity and engagement.

Learn Dropbox

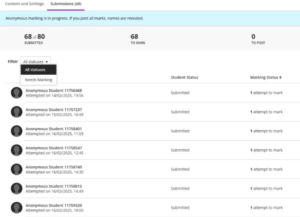

However, when anonymised, the Learn dropbox presents some challenges compared to Turnitin:

- Exam numbers are not visible in the submission list, only a submission ID

- The dropbox is not searchable, making it difficult to locate specific submissions

- There is no option to quickly filter the dropbox by workshop group

- Moderators and meta-moderators must manually assign themselves to a group using the delegated marking tool to view submissions

- Turnitin similarity scores can only be viewed within individual scripts, with no overview feature for batch review.

These factors make course administration, moderation, and meta-moderation more cumbersome, especially for large courses with multiple markers.

Conclusion

“Overall, as both a marker and a course organiser, I found the process of using the rubric more effective and efficient.” – Madeleine Campbell

While some reservations remain, particularly around delegated marking, the lack of an overview for Turnitin scores, and the absence of a search function when the dropbox is anonymised, the use of the Learn rubric was clear, effective, and more straightforward than the previous system. The new approach has led to more consistent and transparent marking and feedback, improving the validity and reliability of the assessment process while reducing the possibility of human error. The clearer and more navigable marking interface has further streamlined the process.

We understand that Anthology (Blackboard) is actively working to enhance both anonymous and delegated marking workflows, which is a promising development.

Following the evaluation, the decision was made to adopt this approach for an additional PGT course in Semester 2, Teaching Texts Across Borders.

Our experience of this pilot has also been presented to the Institute for Language Education (ILE) in order to evaluate whether other Course Organisers would be interested in adopting the new rubric tool in light of the University-wide recommendation to move towards assignment-specific rubrics and descriptors.

This blog was originally published as part of the LOUISA project. For the latest updates and project news on LOUISA, visit the project's SharePoint page, LOUISA – Home.

We would also like to thank Alan Hamilton, Learn Service Manager, for his continued support throughout the Turnitin Integration in Learn Assignments Early Adopter Programme which made this initiative possible.

Madeleine Campbell

Dr Madeleine Campbell is Lecturer in Language Education at the University of Edinburgh. Madeleine is Ethics Lead for the Language(s), Interculturality and Literacies Research Hub and has been Co-Investigator of the AHRC-funded Experiential Translation Network (https://experientialtranslation.net/research/publications/) since 2021. Her research explores embodied, multimodal translation across languages and media, and she has published translations of francophone and Occitan poetry in various journals and anthologies.

Jamie Auld Smith

Jamie Auld Smith is a Learning Technologist supporting the Centre for Open Learning and Moray House School of Education and Sport. He works with colleagues to integrate digital tools that enhance learning and teaching, with a particular focus on inclusive course and assessment design. Since 2005, Jamie has held roles in language teaching, curriculum development, and assessment management across the UK and internationally.