In this ‘Spotlight on Alternative Assessment Methods’ post, David Kluth, Director of Teaching MBChB, Colin Duncan, Year 5 Director, Karen Fairhurst, Year 5 Director and Alan Jaap, Assessment Lead MBChB, discuss the effects of remote exams on performance in applied knowledge assessments in the Bachelor of Medicine and Surgery (MBChB) degree…

Introduction

Applied knowledge tests form an important component of assessment in medicine across the programme. These questions typically present the candidate with a clinical vignette which they have to interpret and then determine the correct answer, such as most likely diagnosis, the next investigation or most appropriate management. We use a mixture of single best answer questions (SBA) and very short answer questions (VSAQ). The latter are designed to be automatically marked by computer with a check by two faculty members to ensure all correct answers are identified.

Year 4 is the first clinical year and the students have modules in general medicine and general practice. Year 5 has modules in obstetrics and gynaecology, psychiatry and paediatrics in one semester, and in a number of medical specialty areas (e.g. nephrology, dermatology, ophthalmology, ENT, breast, haematology and oncology) in the other semester. Both years are year-long courses in which we combine papers to make an overall assessment. All of these assessments are preceded by formative progress tests. We also have a sequential test strategy whereby those candidates who do not achieve a ‘clear pass’ score (the pass score plus one standard error of the measurement; see below) after the combined papers sit a further paper to determine if they have achieved the relevant level of proficiency. The planned assessments were as follows:

Year 4:

- Formative progress test 1 December 2019 (covering all year material)

- Formative progress test 2 February 2020

- Summative paper 1 May 2020 (80 items)

- Summative paper 2 June 2020 (80 items)

- Marks from paper 1 and 2 combined. Total of 160 items.

- Summative paper 3 July 2020 (for candidates who do not achieve the pass score plus 1 standard error of the measurement)

Year 5:

- Formative progress test 1 in either obstetrics & gynaecology, psychiatry and paediatrics (hereafter ‘O&G etc.’) or specialties November 2019

- Summative paper 1 in either O&G etc. or specialties December 2019

- Formative progress test 2 in either O&G etc. or specialties April 2020

- Summative paper 2 in either O&G etc. or specialties June 2020

- Marks combined after summative paper 2. Total of 170 items.

- Summative paper 3 covering all year material July 2020 (for candidates who do not achieve the pass score plus 1 standard error of the measurement)
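The sequential test rule used in both years (a further paper for anyone below the pass score plus 1 standard error of the measurement) can be sketched in a few lines of Python. This is an illustration only: the post does not say how the standard error is calculated, so the classical formula SEM = SD × √(1 − reliability) is an assumption here, and the function names and all numbers are hypothetical.

```python
import math


def standard_error_of_measurement(score_sd: float, reliability: float) -> float:
    """Classical test theory SEM (assumed formula, not confirmed by the post)."""
    return score_sd * math.sqrt(1.0 - reliability)


def needs_further_paper(combined_score: float, pass_score: float, sem: float) -> bool:
    """True if the candidate has not reached a 'clear pass' (pass score + 1 SEM)."""
    return combined_score < pass_score + sem


# Hypothetical values: scores in percent, SD of 8 and reliability of 0.75.
sem = standard_error_of_measurement(score_sd=8.0, reliability=0.75)  # 4.0
pass_score = 60.0  # hypothetical pass score from standard setting

for score in (58.0, 63.0, 64.0, 70.0):
    outcome = "sits paper 3" if needs_further_paper(score, pass_score, sem) else "clear pass"
    print(f"{score:.1f}% -> {outcome}")
```

With these made-up numbers the clear pass threshold is 64%, so a candidate on 63% has passed the nominal pass score but would still sit the further paper.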

These assessments are all delivered via our online platform, Practique, and are normally sat under invigilated conditions (including the progress tests).

Impact of Covid-19 on assessment

All assessments planned up to the end of February were completed as scheduled. The decision was made to continue with all other knowledge assessments as remote exams, using the time limits originally planned. All exams were constructed to a blueprint specifying the number of items per specialty area. Standard setting was performed using the modified Angoff method. All candidates sat the exams at the same time. We had candidates in time zones from 7 hours ahead of BST to 8 hours behind it, so chose a start time of 2pm for most exams. The software enabled the items to be delivered in a random order to reduce the opportunity for candidates to collaborate. Although candidates could look up information, we advised them to complete the exam as they would under normal invigilated conditions and to flag questions to check at the end, so as not to run out of time. All candidates were able to complete the exams as planned, mostly without any significant technical problems.
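To see why a 2pm start suits that spread of time zones, the arithmetic can be checked with a short Python sketch. It assumes the 2pm start is in BST (the post does not state this explicitly), uses a hypothetical exam date, and the helper name is invented for illustration.

```python
from datetime import datetime, timedelta


def local_start_times(start_bst: datetime, offsets_hours: list[int]) -> dict[int, datetime]:
    """Map each offset from BST (in hours) to the candidate's local start time."""
    return {offset: start_bst + timedelta(hours=offset) for offset in offsets_hours}


# Hypothetical exam date; the 14:00 start is assumed to be BST.
start = datetime(2020, 6, 1, 14, 0)
times = local_start_times(start, [7, 0, -8])

for offset, local in times.items():
    print(f"BST{offset:+d}h -> {local:%H:%M}")
# BST+7h -> 21:00
# BST+0h -> 14:00
# BST-8h -> 06:00
```

Under these assumptions the extremes are a 9pm start for the candidates furthest ahead and a 6am start for those furthest behind, both on the same calendar day.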

Results

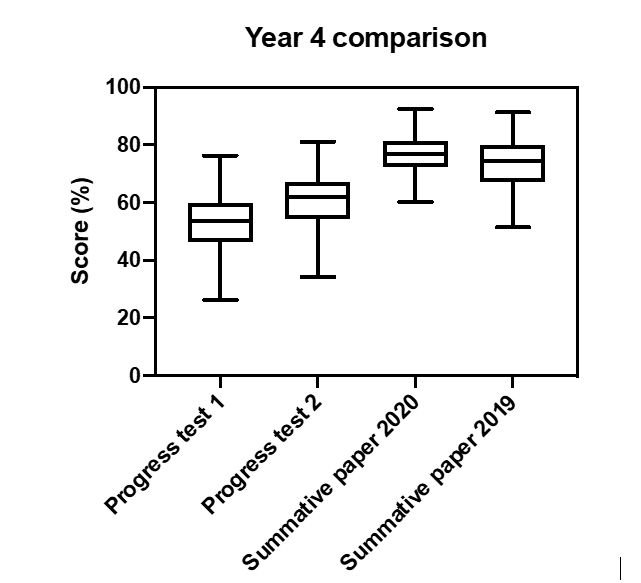

Year 4. The performance of the cohort is shown in the graph, which also provides a comparison with a summative paper completed by the 2019 year 4 cohort under standard invigilated conditions.

The results show a steady improvement by the cohort from progress test 1 to progress test 2 to the summative assessment. The median score in the 2020 summative paper was 76.6% vs 73.6% in 2019. The main improvement in performance was seen in those in the lower half of the year group.

Year 5. The results for year 5 are displayed differently as each half of the year group sits the exams in a different sequence. The results shown are for two cohorts of students. The first round of formative progress tests and summative assessments was taken under invigilated conditions by the first cohort, whilst the second cohort took the exams remotely. The performance at the end of year 5 in 2019 is shown for comparison. The pass scores for these assessments were similar.

Obstetrics & gynaecology, psychiatry and paediatrics

There is evidence of progress for each cohort between the formative and summative assessments and the performance is similar across all three summative exams.

Specialties

As with the O&G, psychiatry and paediatrics exam, the performance in the invigilated and remote summative exams is similar, and comparable to that seen in 2019, which was also invigilated. Unlike in year 4, there was no evidence of benefit for the weaker candidates.

Discussion

We have successfully delivered remote applied knowledge tests across two years of the Edinburgh MBChB. The performance is comparable to that seen in previous years. The assessments have enabled candidates to demonstrate they have achieved the programme outcomes, allowing appropriate progression decisions to be made. In year 4 there is evidence that the remote delivery helped the weaker candidates. This was not seen in year 5. One possible explanation for the effect in year 4 is that the assessment covers core and common medical conditions: good candidates will normally be able to work out the answer without any need to check, whilst weaker candidates may benefit from being able to check some answers they are less sure of. Also, students did not have to complete all of their clinical placements, so had more time to focus on their knowledge. It is also possible that the remote exam was less stressful. The year 4 data are also directly comparable as all candidates took all assessments, whilst in year 5 we are looking at two different cohorts for each half of the year. It was noticeable that a number of candidates made little improvement between the formative progress test and the summative exam; their approach to learning and to the exam will need to be explored.

Our change to delivery of the assessments was broadly supported by the students who recognised that it was a pragmatic approach. The main concerns that were raised were:

- Worries about internet problems during exams. The software we used stores answers on the candidate’s device even when the internet goes down and uploads them as soon as the internet connection is restored. In the end a few students had minor problems but these were managed without interrupting the exam.

- That having the opportunity to check information required more time, which should have been added to the exam.

- That because the exam was open book, “less good students” would do better than under invigilated conditions and this would affect their decile ranking (used in the application process for first clinical posts after qualification).

Overall, our opinion is that this method of delivery of assessment is effective and could be used in the future if onsite delivery is not possible, without detriment to students. The main concern will be candidate verification. As this was the first time assessments had been delivered this way on such a scale, and it occurred during the main lockdown, the opportunity for cheating or collusion was likely limited. If this method of delivery becomes more common then viable approaches to proctoring the exams remotely will be required. It is likely that advances in facial recognition and eye movement tracking technology will make this a realistic possibility in the near future.

Alan Jaap


Thank you SO much for sharing your experience. This is the first article that I’ve come across that actually addresses the issues of potential cheating and unauthorized collaboration during exams head on, AND details the ways you’ve dealt with them. Whilst blogs or other ‘creative’ formats of alternative assessment are all well and good, we must address the enormous amount of extra work those formats place on the markers, not to mention that those creative formats still won’t appropriately assess certain types of material.