Online knowledge assessments are a vital component of the MBChB (Bachelor of Medicine, Bachelor of Surgery) programme. Although we already had a system for delivering exams, it had limitations for both staff and students, so we set about assessing and implementing a new platform.

The system needed to deliver single best answer and short answer questions and to be usable for clinical examinations. We also wanted to provide better quality feedback to students following formative and summative exams. The first stage was procurement. This involved collaboration between academics, administrative staff and Information Services (IS) to determine all the requirements of the system, ranked from “must have” to “should have” to “could have”. Companies submitted bids showing how they would meet these requirements. Shortlisted companies were each invited to give a one-day presentation so that we could formally assess and score their system. Procurement is not something academics often get involved in, which added to the challenge and the time required. The key to success was having a project manager from IS. In the end, the system we chose was Practique.

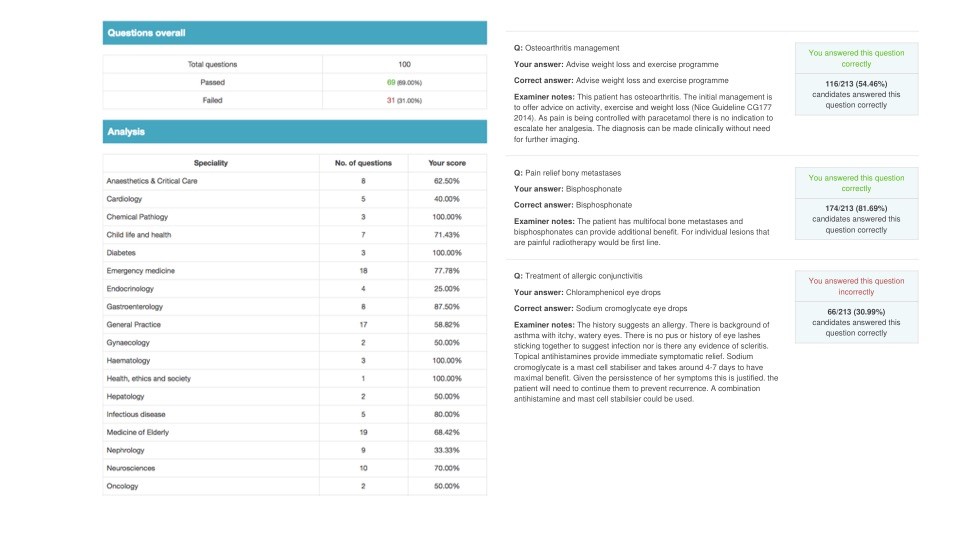

The second stage was importing and “cleansing” our existing bank of questions. Nearly 5000 questions were uploaded, but a significant number were duplicates and others did not conform to our agreed style. A small group of faculty worked hard checking and editing all the questions and linking each one to the relevant year of the MBChB programme. We also started usability testing with students, which produced a lot of positive feedback. We aimed to give a formative exam to all students in semester one and to ensure all summative assessments could be delivered. Our first deadline was to deliver a formative exam to Year 6 (final year) students at the end of October 2018. The exam worked well, was positively received and enabled higher quality feedback to be delivered (figure 1). We were also able to deliver formative exams that students could access on their own devices.

The third stage, which ran in parallel, was question tagging and exam blueprinting. We devised a system that tagged each question with a year of the programme, a specialty (e.g. cardiology, neurosciences) and a skill (e.g. diagnosis, management, physiology). Ongoing work will involve tagging each question to a specific presentation and condition. We used the specialty tag to blueprint and automatically generate each year’s exam.
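To make the blueprinting idea concrete, here is a minimal sketch of how an exam could be drawn from a tagged question bank. It is an illustration only and not how Practique works internally: the `Question` class, the `build_exam` function, the blueprint format (specialty mapped to number of questions) and the sample data are all hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass(frozen=True)
class Question:
    qid: str
    year: int        # year of the programme the question is linked to
    specialty: str   # e.g. "cardiology"
    skill: str       # e.g. "diagnosis"


def build_exam(bank, year, blueprint, seed=None):
    """Draw questions for one year; blueprint maps specialty -> question count."""
    rng = random.Random(seed)
    exam = []
    for specialty, count in blueprint.items():
        # Only questions tagged with this year and specialty are eligible.
        pool = [q for q in bank if q.year == year and q.specialty == specialty]
        if len(pool) < count:
            raise ValueError(
                f"only {len(pool)} year {year} questions tagged "
                f"{specialty!r}, need {count}"
            )
        exam.extend(rng.sample(pool, count))
    return exam


# Hypothetical six-question bank for Year 6 (three questions per specialty).
bank = [
    Question(f"{spec[:4]}-{i}", 6, spec, skill)
    for spec in ("cardiology", "neurosciences")
    for i, skill in enumerate(("diagnosis", "management", "physiology"))
]
blueprint = {"cardiology": 2, "neurosciences": 1}
exam = build_exam(bank, year=6, blueprint=blueprint, seed=1)
```

Raising an error when a specialty has too few questions, rather than silently returning a shorter exam, makes gaps in the bank visible at the point the exam is built.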

The final stage was to deliver and standard-set summative exams for every year. After these exams we gave students feedback on their performance, broken down by specialty and skill. These reports are automatically generated by the system. After Finals (Applied Clinical Knowledge Test) we additionally gave candidates a report of the key learning point for every question.
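The breakdown by specialty and skill amounts to grouping a student's answers by tag and reporting the percentage correct in each group. The sketch below shows that calculation under simple assumptions; the `performance_by_tag` function, the response format and the sample answers are hypothetical and do not represent the reports Practique produces.

```python
from collections import defaultdict


def performance_by_tag(responses, tag):
    """Return percentage correct per value of `tag` ("specialty" or "skill")."""
    totals = defaultdict(lambda: [0, 0])  # tag value -> [correct, attempted]
    for r in responses:
        totals[r[tag]][0] += 1 if r["correct"] else 0
        totals[r[tag]][1] += 1
    return {value: round(100 * c / n, 1) for value, (c, n) in totals.items()}


# Hypothetical answers from one student, each carrying the question's tags.
responses = [
    {"specialty": "cardiology", "skill": "diagnosis", "correct": True},
    {"specialty": "cardiology", "skill": "management", "correct": False},
    {"specialty": "neurosciences", "skill": "diagnosis", "correct": True},
    {"specialty": "neurosciences", "skill": "physiology", "correct": True},
]
by_specialty = performance_by_tag(responses, "specialty")
by_skill = performance_by_tag(responses, "skill")
```

In this example the student scores 50% in cardiology and 100% in neurosciences, which is the kind of signal that can point revision towards a weaker area.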

Future developments involve enhancing the precision of question tagging and refining student feedback. We will run most formative assessments remotely so that students can access them in their own time. We also aim to use the system to deliver clinical exams, which will enable more rapid feedback.