In this extra post, Ross Anderson, a fourth-year Astrophysics student, and Thomas Gant, a fourth-year Mathematics student, discuss their involvement as research assistants on the ‘Classroom Practices and Lecture Recording’ project, funded by the Principal’s Teaching Award Scheme…

Lecture recordings can be a godsend for students, allowing us to re-watch explanations of a tricky topic or to catch up on lectures we’ve missed due to illness or… otherwise. They can also be a useful resource for researchers looking to better understand the relationship between teaching practices and learning. Exactly how one might extract useful data from these recordings, however, isn’t immediately obvious. How do we convert the analogue/qualitative data provided by a lecture recording into something digital/quantitative that can be used for meaningful analysis? And once we have, is the result actually useful? These were questions we helped to answer over the summer as research assistants on the Classroom Practices and Lecture Recording project, which was funded by the Principal’s Teaching Award Scheme.

Characterising lectures

We helped to develop FILL+, a tool for characterising lectures by recording the activities that take place as seen from the student’s perspective. It builds on the Framework for Interactive Learning in Lectures (FILL) developed by Wood et al. (2016), which characterises lectures by recording the type of activity taking place and producing a second-by-second timeline of events. FILL was designed with a focus on ‘Peer Instruction’ (Mazur, 1999), a teaching technique commonly used in physics (Dancy and Henderson, 2010) and, at The University of Edinburgh, across STEM courses more generally. FILL defines 12 activities across two systems: one for recording the activity and the other for recording the associated stage of the ‘Peer Instruction Cycle’.
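To make the idea of a second-by-second timeline concrete, here is a minimal Python sketch of how a coder’s notes might be expanded into one. It assumes each coded event is stored as a start time in seconds plus an activity label, and that an event lasts until the next one begins. The `Event` class, the `to_timeline` function and the activity labels are hypothetical illustrations, not part of FILL or FILL+.

```python
from dataclasses import dataclass


@dataclass
class Event:
    """A hypothetical coded segment: when it starts and which activity it is."""
    start: int     # seconds from the start of the recording
    activity: str  # illustrative label, e.g. "lecturing" (not a real FILL+ code)


def to_timeline(events: list[Event], duration: int) -> list[str]:
    """Expand coded events into one activity label per second of the recording.

    Assumes the first event starts at 0 s and that each event lasts until the
    next one begins (the last event runs to the end of the recording).
    """
    ordered = sorted(events, key=lambda e: e.start)
    if not ordered or ordered[0].start != 0:
        raise ValueError("the first event must start at 0 s")
    timeline: list[str] = []
    for i, event in enumerate(ordered):
        end = ordered[i + 1].start if i + 1 < len(ordered) else duration
        if end < event.start:
            raise ValueError("events must lie within the recording")
        timeline.extend([event.activity] * (end - event.start))
    return timeline


events = [Event(0, "lecturing"), Event(5, "class question"), Event(8, "lecturing")]
print(to_timeline(events, duration=10))
```

Here a ten-second clip coded as lecturing from 0 s, a question to the class from 5 s and lecturing again from 8 s expands to five ‘lecturing’ seconds, three ‘class question’ seconds and two more ‘lecturing’ seconds. Storing one label per second makes timelines from different coders directly comparable.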

We wanted to be able to analyse lectures whether or not they used Peer Instruction, whilst also making the tool more efficient and better able to represent the student experience accurately, so we made some changes and created FILL+. FILL+ defines ten types of activity, grouped into three levels of interactivity:

- Non-interactive – where there is no active student participation, such as when the lecturer is speaking to the class or ‘lecturing’ in the traditional sense;

- Vicarious interactive – where there is some active participation carried out by a minority of students that engages the majority (e.g. a student asks a question and the lecturer responds);

- Interactive – where there is a clear expectation that the majority of students are engaged in actively thinking about the course material, e.g. using TopHat, discussing a Peer Instruction question, or when a lecturer asks a question to the whole class (“raise your hand if you think that …”).

Over the course of the summer, the tool was refined via a process whereby three coders would watch and code a set of lectures independently, compare the results and discuss disagreements until a consensus was reached. The definitions for each activity were then updated accordingly and the process repeated until percentage agreement between coders reached an acceptable level.

Results

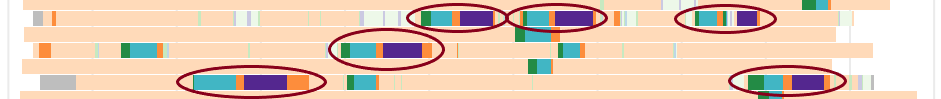

At the time of writing, a total of 183 lectures have been coded using the final version of FILL+, with an average percentage agreement of 95.6% between coders. This was calculated by comparing the timelines produced by different coders for 10% of the dataset. That is, 95.6% of the time FILL+ users agreed on both what is happening and exactly when it is happening. This figure increases to 97.1% when comparing only the degree of interactivity. The improved resolution of FILL+ also meant that we were able to preserve some key features of FILL; specifically, we could clearly identify Peer Instruction cycles as well as the duration of each stage (as shown in fig. 1).
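One plausible way to compute percentage agreement from two second-by-second timelines is to count the seconds on which both coders assigned the same label, then repeat the comparison after mapping each activity to its level of interactivity. The sketch below assumes both timelines cover the same recording at one-second resolution; the activity labels and the `LEVEL` mapping are hypothetical stand-ins rather than the actual FILL+ codebook, and the post does not specify exactly how per-lecture figures were averaged.

```python
# Hypothetical mapping from activity labels to FILL+ interactivity levels.
# These labels are illustrative only, not the real FILL+ activity codes.
LEVEL = {
    "lecturing": "non-interactive",
    "student question": "vicarious interactive",
    "class question": "interactive",
    "peer discussion": "interactive",
}


def percent_agreement(a: list[str], b: list[str]) -> float:
    """Percentage of seconds on which two coders' timelines carry the same label."""
    if not a or len(a) != len(b):
        raise ValueError("timelines must be non-empty and of equal length")
    matches = sum(x == y for x, y in zip(a, b))
    return 100.0 * matches / len(a)


def to_levels(timeline: list[str]) -> list[str]:
    """Replace each second's activity label with its interactivity level."""
    return [LEVEL[activity] for activity in timeline]


# Two hypothetical coders' timelines for the same ten seconds.
coder_a = ["lecturing"] * 6 + ["class question"] * 4
coder_b = ["lecturing"] * 5 + ["peer discussion"] * 5

print(percent_agreement(coder_a, coder_b))                        # 50.0
print(percent_agreement(to_levels(coder_a), to_levels(coder_b)))  # 90.0
```

Because several activities share a level, a disagreement such as ‘class question’ versus ‘peer discussion’ disappears when only interactivity is compared, so the interactivity-only agreement can never be lower than the activity-level agreement for the same pair of timelines.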

We have also been able to gain insight into the range of teaching practices that exist across STEM subjects at The University of Edinburgh. Within this dataset, the percentage of a lecture spent on lecturing in the traditional sense, i.e. speaking to the students without the expectation of student participation, ranges from 42% to 97%. We hope that this kind of data will help to investigate the effect that interactive learning and other teaching practices have on student performance.
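Given a second-by-second timeline, the share of a lecture spent on each activity is the fraction of seconds carrying that label. A minimal sketch, assuming a timeline stored as a Python list of labels; the labels and the ten-second example are hypothetical and are not data from the study.

```python
from collections import Counter


def time_shares(timeline: list[str]) -> dict[str, float]:
    """Percentage of a lecture's seconds spent on each activity label."""
    if not timeline:
        raise ValueError("timeline must not be empty")
    counts = Counter(timeline)
    return {activity: 100.0 * n / len(timeline) for activity, n in counts.items()}


# A hypothetical ten-second timeline with illustrative labels.
lecture = ["lecturing"] * 7 + ["peer discussion"] * 3
shares = time_shares(lecture)
print(shares["lecturing"])  # 70.0
```

Summing the shares of activities that belong to the same level would give the equivalent breakdown by interactivity.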

Personal experience

Undergraduate study is an anxious affair. Choosing which courses to take, stumbling through careers fairs, and desperately trying to figure out which of the many paths that lie ahead will take you to where you want to go. Without experience, however, knowing where you want to go can be quite challenging. We applied to be research assistants on this project with that in mind, and have found it to be an invaluable experience.

For Ross, gaining insight into the research process and the satisfaction that comes with asking interesting questions and taking the time to search for answers has made him more confident in his plans to continue on to postgraduate research – an outcome for which he is particularly grateful.

For Thomas, who is unsure whether research is the right path for him but is interested in the teaching and learning of mathematics, it was very rewarding to work on a tool that attempts to shed some light on what goes on in classes across several University departments.

The benefits of working on something so collaborative and disciplined, and the friends that were made in the process, have made this a more than worthwhile experience and one we would gladly recommend.

References

Dancy, M. and Henderson, C. (2010). Pedagogical practices and instructional change of physics faculty. American Journal of Physics, 78, 1056. https://doi.org/10.1119/1.3446763

Mazur, E. (1999). Peer Instruction: A User’s Manual. American Journal of Physics, 67, 359. https://doi.org/10.1119/1.19265

Wood, A. K., Galloway, R. K., Donnelly, R. and Hardy, J. (2016). Characterizing interactive engagement activities in a flipped introductory physics class. Physical Review Physics Education Research, 12, 010140.