“It just generates output that is likely or plausible, but not necessarily true” (Mirella Lapata, The University of Edinburgh) [1]

Since November 2022, many of us have been amazed by the potential of Generative Artificial Intelligence (Gen-AI) tools, that is, AI with generative powers. Many researchers and developers are working on new, specific implementations of Gen-AI technology, in particular exploiting the power of Generative Pre-trained Transformers (GPTs). I presume that most of us have already tried such tools to some extent, including their various types, the extensions built on top of them, and other AI-powered tools. What we have experienced in the last few months has opened up a wide discussion about how universities should adapt and what their role should be in the era of AI more generally.

“No one knows exactly what capacities consciousness necessarily goes along with, it is hard not to be impressed, and a little scared” (David Chalmers, New York University) [1]

Although AI tools have a long history, the potential impact of Gen-AI on various sectors may be huge. In their current form, these tools could genuinely transform the way we live and work, whether we like it or not. Indeed, we have started to hear about their potential use cases in diverse fields: the Mayo Clinic research hospital is testing Google’s medical AI chatbot, and Harvard University has announced that the new version of its online Computer Science course (CS50) will feature a teacher bot [2, 3]. Meanwhile, opinions on the rise of AI-powered tools are divided: some think this is the beginning of Artificial General Intelligence (AGI), while others think these tools are nowhere near as mind-blowing as claimed.

“They learn in such a fundamentally different way from people that it makes it very improbable that they ‘think’ the same way people do” (Tal Linzen, New York University) [1]

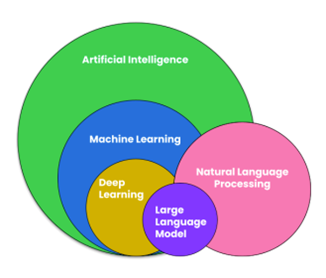

Without knowing the underlying algorithms, it is easy to fall into the trap of thinking these tools are magical. Starting with the DALL-E platform (good at creating images from text), we now see many types of content generation. The particular strength of ChatGPT lies in casual chat and in keeping track of the conversation history to a certain extent. However, the mechanism behind Gen-AI tools becomes clearer when you zoom in on their main components and the related abbreviations we keep encountering. To start with, everything they do relies heavily on a statistical model, namely a Large Language Model (LLM), which is used to predict words. More technically, LLMs, a specific subset of foundation models, are neural networks trained on a large corpus of text data [4].
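To make “a statistical model that makes word predictions” a little more concrete, here is a toy sketch, assuming a tiny hand-written corpus (the sentences and function names are hypothetical, for illustration only). It simply counts which word follows which and predicts the most frequent continuation. This is a bigram count model, not a neural network or a transformer: real LLMs learn such probabilities with neural networks over vastly larger corpora and much longer contexts. The core idea, picking a likely next word rather than a verified true one, is the same one Lapata’s quote points to.

```python
from collections import Counter, defaultdict


def train_bigram_model(corpus):
    """Count, for every word, how often each other word directly follows it."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        words = sentence.lower().split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def next_word_probabilities(model, word):
    """Turn raw follow-counts into relative frequencies, i.e. an estimate of P(next | word)."""
    followers = model.get(word.lower(), Counter())
    total = sum(followers.values())
    if total == 0:
        return {}  # the word was never followed by anything in the corpus
    return {w: c / total for w, c in followers.items()}


def predict_next(model, word):
    """Return the most likely next word, or None if the model has no data for `word`."""
    probs = next_word_probabilities(model, word)
    if not probs:
        return None
    return max(probs, key=probs.get)


# Hypothetical toy corpus, for illustration only.
corpus = [
    "the chatbot answers questions",
    "the chatbot answers fluently",
    "the chatbot can be wrong",
]
model = train_bigram_model(corpus)
print(next_word_probabilities(model, "chatbot"))  # {'answers': 0.6666666666666666, 'can': 0.3333333333333333}
print(predict_next(model, "chatbot"))  # answers
```

The model prefers “answers” only because it appeared more often after “chatbot”, not because it knows anything about chatbots.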

“While these chatbots are far from replicating human cognition, in some narrow areas, they may not be so different from us. Digging into these similarities could not only advance AI, but also sharpen our understanding of our own cognitive capabilities” (Raphael Milliere, Columbia University) [1]

Broadly, there seem to be two camps: the naysayers and the hype merchants. Over the last year, the initial reactions from the higher education community have been mixed, including intentions to ban these tools in several countries. One of the main concerns has been that students could use such tools to cheat and submit inauthentic work, with serious consequences for academic integrity, although this is hardly a new problem. A more critical concern is over-reliance on generated outputs, which can weaken the capacity to think independently or to detect incorrect output from Gen-AI tools. Furthermore, the use of such tools raises a number of ethical concerns, such as the propagation of bias in the generated content and the disclosure of personal information to the operating companies. As Gen-AI could have a profound impact, it is becoming increasingly vital for students and faculty to understand the capabilities and limitations of these systems.

In light of all this, the second part will cover personal experiences, opinions and concerns regarding the potential use cases of such tools in higher education.

REFERENCES:

[1] Living with AI, AI Special Report, New Scientist, 29 July 2023.

[2] Google’s medical AI chatbot is already being tested in hospitals. Available at: https://www.theverge.com/2023/7/8/23788265/google-med-palm-2-mayo-clinic-chatbot-bard-chatgpt

[3] Harvard’s New Computer Science Teacher Is a Chatbot. Available at: https://uk.pcmag.com/ai/147451/harvards-new-computer-science-teacher-is-a-chatbot

[4] Lewis Tunstall, Leandro von Werra, Thomas Wolf (2022). Natural Language Processing with Transformers: Building Language Applications with Hugging Face. O’Reilly Media.

Ozan Evkaya

Ozan Evkaya is a University Teacher in Statistics at the School of Mathematics, The University of Edinburgh. He previously held postdoctoral positions at Padova University (2021) and KU Leuven (2020), after completing his PhD at Middle East Technical University in 2018. His research interests lie in copulas, insurance, and environmental statistics. He is keen to improve his computational skills by leading, organising, or taking part in training workshops and events.

https://www.linkedin.com/in/ozanevkaya/

https://twitter.com/ozanevkaya

https://www.researchgate.net/profile/Ozan-Evkaya