In this episode, Dr. Michael Daw, Director of Quality in the Deanery of Biomedical Sciences, continues discussing his analysis of marking schemes and assessment styles, particularly addressing why using the full range of marks might be something to strive for. This episode accompanies our July-August ‘Hot Topic’: “Lessons from the Learning and Teaching Conference 2021”.

The fifth episode of our Learning and Teaching Conference series continues from our previous episode, “Does your assessment really discriminate learning attainment”. Michael Daw began analysing marking schemes and assessment styles to determine how we can better use the full range of marks. However, during his presentation, an interesting question came up: what’s wrong with using a narrow range of marks? Why should we strive to use the full range?

In today’s episode, Michael addresses this question, while also touching on marking culture and student motivation. Later in this post is Michael Daw’s original blog post, which accompanies his presentation and sets out his counterintuitive findings with helpful visuals. His post complements these podcast episodes, providing a more holistic compilation of Michael’s findings, their meanings, and the discussions they prompt. We hope these discussions can continue in your own space.

Michael’s original post:

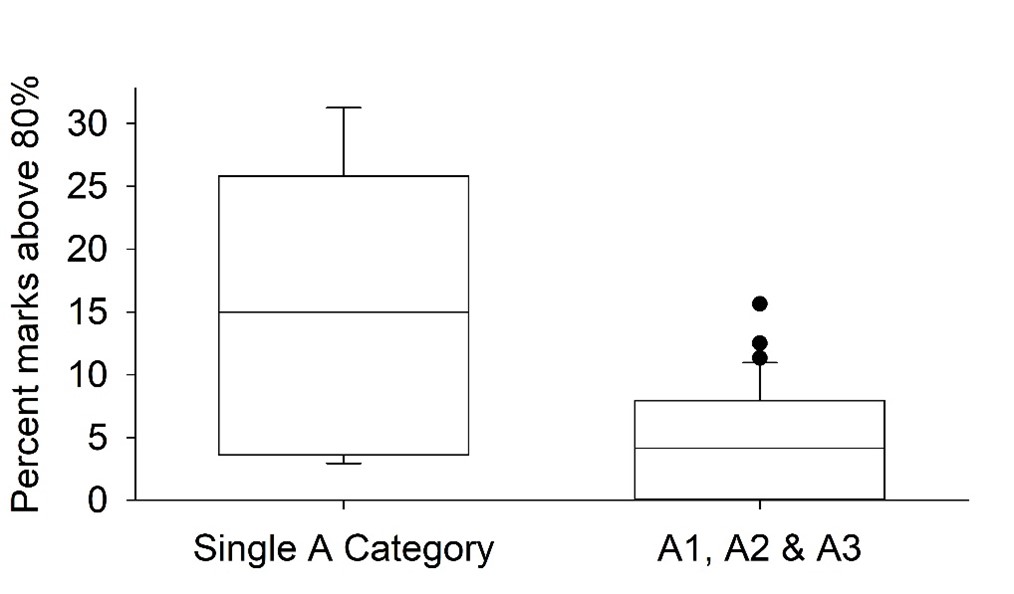

As Director of Quality in the Deanery of Biomedical Sciences, I have become aware that a number of our external examiners comment that we do not use the full range of marks. Most often this has referred to a lack of high marks (e.g. above 80%) but also to a generally narrow range of marks. As a marker in the Deanery, I am aware that a range of different marking schemes is used on different courses. Deanery-wide guidance on preparing and using marking schemes has long been proposed, but I felt that, before issuing this guidance, we needed to understand the impact of different marking schemes on mark distributions. For example, external examiners have pushed for the subdivision of A grade descriptors to give specific descriptors for A1, A2 and A3, in the belief that this will encourage high A marks. However, there is currently no evidence to suggest whether this is effective.

To address this, I analysed all the marking schemes for year 1-3 courses run by Biomedical Sciences in Edinburgh, along with all the 4th year courses that are required core courses for specific Biomedical Sciences honours programmes. I excluded programmes only available to intercalating medical students, as well as 4th year elective courses, which often have very small cohorts. I also analysed the mark distributions for each assignment. In total this covered 152 assessments with 13,745 submissions.

For brevity I will not describe all of the analysis or results here, but I am happy to share them, along with details of the statistical tests supporting the conclusions, with anyone who is interested. Analysis of the A grade example above shows that, surprisingly, assignments where A descriptors are subdivided resulted in a smaller proportion of marks of 80% or greater than those with a single A grade descriptor.

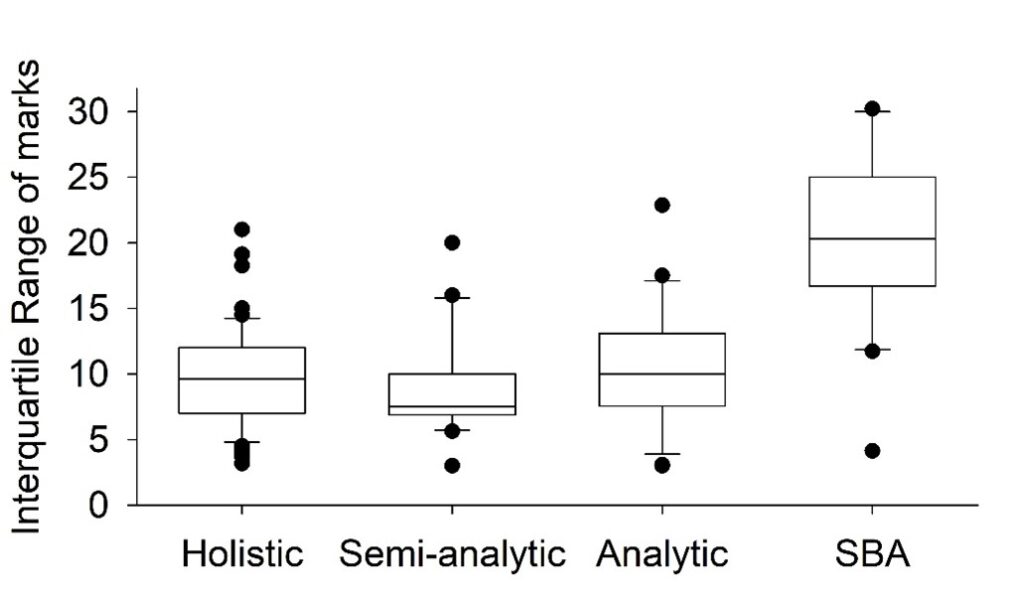

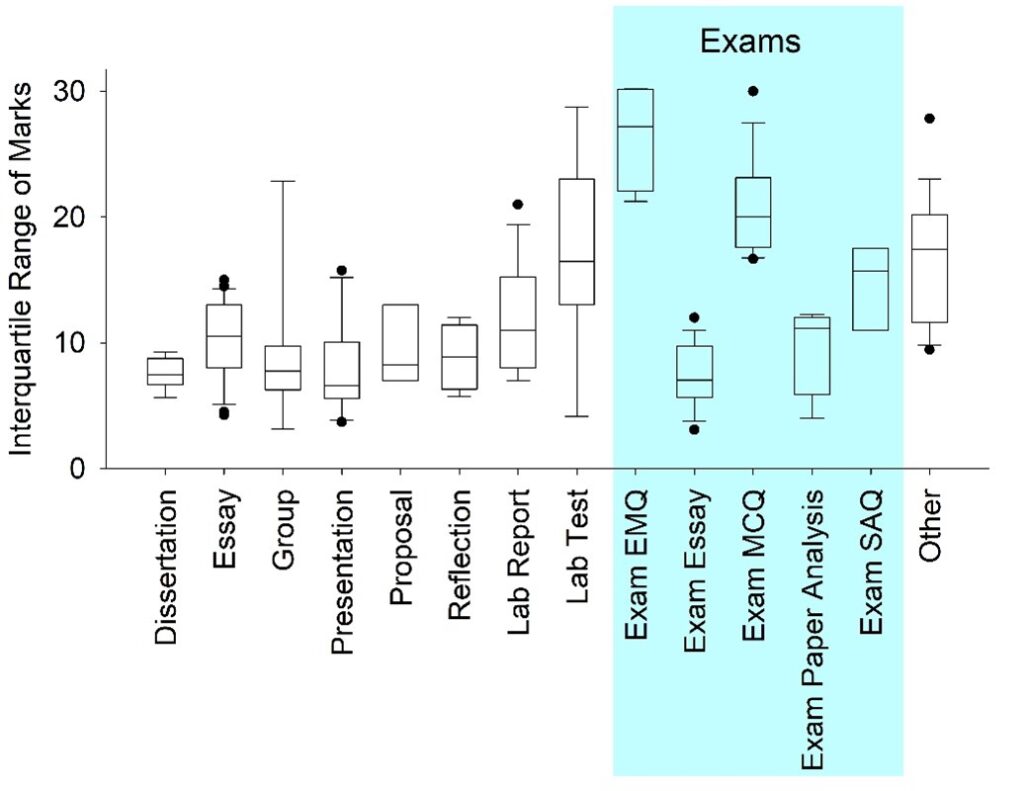

One major categorisation of marking schemes is whether they are holistic or analytic. A holistic marking scheme results in a single mark that represents the whole of the piece of work, whilst an analytic scheme results in a series of marks for individual components which are combined to produce a final mark. A significant proportion of our marking schemes lie between these categories: there is a single mark, but a grid is provided to give indicative grades for each category. I term these “semi-analytic”. Other assessments, such as multiple choice exams or calculations, have a single best answer (SBA) and so do not use either type of marking scheme. Proponents of analytic schemes argue that they provide greater reliability of marks and agreement between markers, whilst critics argue that they may result in narrow ranges of marks by failing to properly reward work that is brilliant in a single respect. I found no difference in the interquartile range of marks between holistic, semi-analytic and analytic schemes, whilst SBA assignments resulted in a much larger range. This argues against the restrictive effect of analytic approaches.

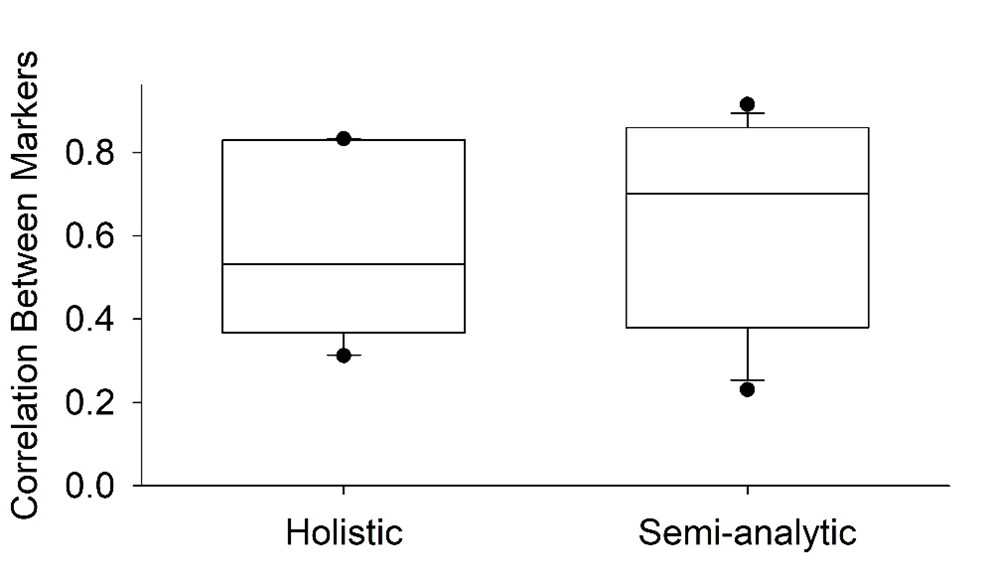

None of our analytic or SBA assignments were double marked, but there was no difference in correlation between markers for holistic and semi-analytic schemes, suggesting that neither approach is inherently more consistent.
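For readers who want to run a similar check on their own double-marked work, the agreement between two markers can be estimated with a correlation coefficient. The sketch below uses Pearson's correlation, which is an assumption: the post does not say which measure was used. The function name `pearson` and the marks are hypothetical and purely illustrative.

```python
from math import sqrt


def pearson(first_marks, second_marks):
    """Pearson correlation between two markers' marks for the same scripts.

    Assumption: Pearson's r is used here for illustration only; the
    original analysis may have used a different agreement measure.
    """
    n = len(first_marks)
    mean_a = sum(first_marks) / n
    mean_b = sum(second_marks) / n
    # Sum of co-deviations and of squared deviations from each mean.
    cov = sum((a - mean_a) * (b - mean_b)
              for a, b in zip(first_marks, second_marks))
    var_a = sum((a - mean_a) ** 2 for a in first_marks)
    var_b = sum((b - mean_b) ** 2 for b in second_marks)
    return cov / sqrt(var_a * var_b)


# Hypothetical marks (%) from a first and second marker on six scripts.
marker_1 = [58, 62, 65, 68, 72, 75]
marker_2 = [55, 64, 63, 70, 70, 78]
print(f"Correlation between markers: {pearson(marker_1, marker_2):.2f}")
```

Computing this separately for each marking scheme type gives one value per group to compare, although a formal comparison would also need an appropriate statistical test.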

Together with other findings not discussed here, the analysis does not preferentially support the use of either analytic or holistic schemes.

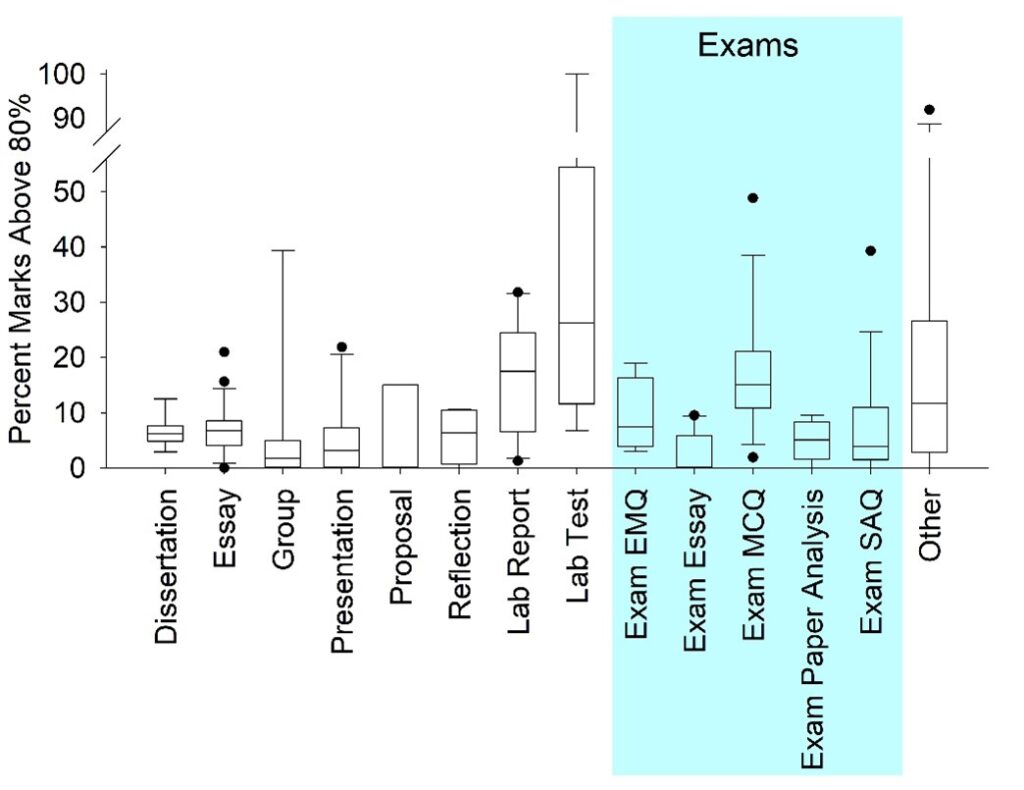

One of the main findings was that the style of marking scheme made little difference to mark distributions. However, in the course of the analysis, it became apparent that it is difficult to disentangle the effect of marking schemes from that of the type of assessment used. In fact, the type of assessment appears to have greater effect on both the interquartile range of marks and the proportion of marks of 80% and above.
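The two measures used throughout this analysis, the interquartile range and the proportion of marks of 80% and above, are straightforward to compute for any set of marks. The following is a minimal sketch using only the Python standard library. The function name `summarise_marks`, the choice of quartile method, and the marks themselves are hypothetical and are not taken from the Deanery data.

```python
from statistics import quantiles


def summarise_marks(marks, threshold=80):
    """Return (interquartile range, proportion of marks >= threshold)."""
    # Quartiles by linear interpolation over the observed marks.
    # Assumption: the original analysis may have used a different method.
    q1, _median, q3 = quantiles(marks, n=4, method="inclusive")
    proportion_high = sum(1 for m in marks if m >= threshold) / len(marks)
    return q3 - q1, proportion_high


# Hypothetical marks (%) for two assessment types, for illustration only.
marks_by_type = {
    "exam essay": [52, 55, 58, 60, 62, 64, 65, 68, 70],
    "single best answer": [35, 48, 55, 62, 70, 78, 84, 90, 95],
}

for assessment_type, marks in marks_by_type.items():
    iqr, proportion_high = summarise_marks(marks)
    print(f"{assessment_type}: IQR = {iqr:.1f}, "
          f"proportion >= 80% = {proportion_high:.2f}")
```

Grouping the same summary by marking scheme instead of assessment type is one simple way to see which of the two factors is associated with the larger difference.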

One especially striking example is that of exams with long-form or essay-style answers. These are often thought of as a great test of the ability of students to apply knowledge and, as such, an effective discriminator. Contrary to this, these assignments produced the smallest proportion of marks above 80% (8 out of 14 courses did not award any marks in this range) and only presentations had a smaller interquartile range. Whilst presentations represent an important graduate skill and an authentic assessment, the same cannot be said for exam essays. This finding calls into question the utility of this type of assessment or, at least, our expectations of student performance in it.

Overall, this analysis shows that our beliefs about what we are achieving when assessing are not always backed up by evidence. These sorts of counter-intuitive findings can be very difficult to identify at the level of a single course, suggesting that School-level analysis is important to guide our practice.

Preliminary analysis was carried out by Omolabake Fakunle.

MICHAEL DAW

Dr Michael Daw is a Senior Lecturer and Director of Quality in the Deanery of Biomedical Sciences. He was an Edinburgh graduate in 1998 and returned in 2010 as a Research Fellow before developing an increasing interest in teaching and learning.

Produced and Edited by:

Eric Berger

Eric is a Mathematics and Statistics student at The University of Edinburgh, and a podcasting intern for Teaching Matters. Eric is passionate about university student mental health, interviewing researchers for the Student Mental Health Research Network at King’s College London, leading the University of Edinburgh’s WellComm Kings Peer Support Scheme, and conducting research on stigma for People With Mental Illnesses (PWMI). In his free time, he enjoys watching and playing sports, over-analysing hip-hop songs, podcasts, and any sort of wholesome shenanigans.