Electronic Management of Assessment and Assessment Analytics

In early June, Abertay University in Dundee hosted a pre-conference workshop of the EUNIS (European University Information Systems Organisation) E-Learning Task Force on the current “hot topic” of Electronic Management of Assessment (EMA) and Assessment Analytics.

The event was delivered in partnership with JISC because of its current work on EMA, and provided a great opportunity to network with e-learning staff across Europe – over 60 people from 12 countries attended. The University of Edinburgh has been involved with several JISC assessment projects in the last few years, including Transforming Assessment and the current EMA work.

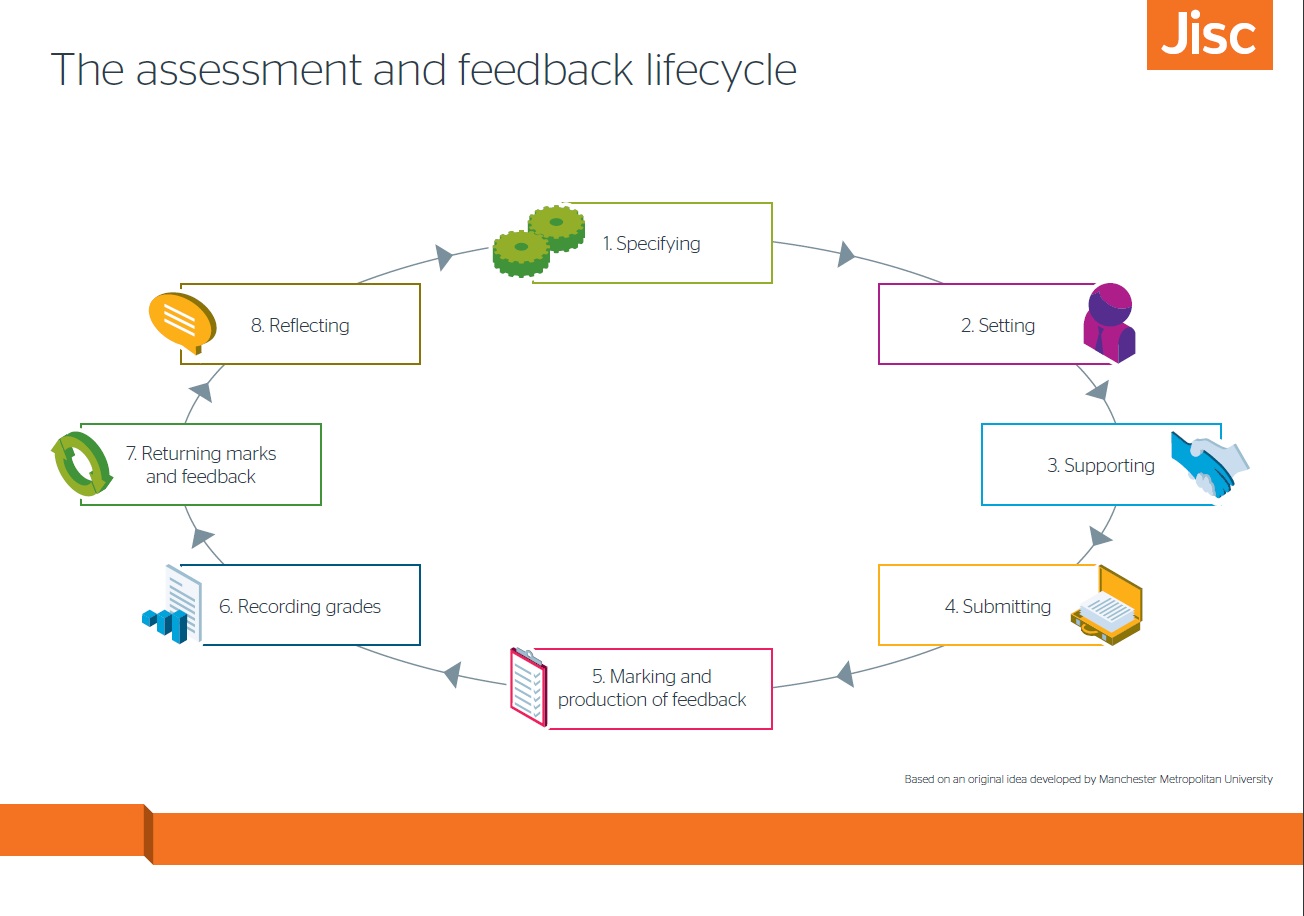

The morning was mainly given to presentations. Gill Ferrell outlined the background to the JISC EMA project and the consultation process that identified the priorities for action. Current work is looking at mapping the assessment lifecycle and capturing requirements for systems and processes. During the day delegates added their thoughts and suggestions to the lifecycle charts on the walls, and their comments gathered around the main “pain points” where current systems do not adequately support business processes. These were concentrated especially around items 5 to 7 in the lifecycle map.

I was particularly interested to hear that the work so far on modelling assessment workflows indicates that there are not as many different models as may at first appear. It looks as though the key variations around delegated marking and moderation still lead to only four different models. The discussion is ongoing, and you can participate through the EMA blog.

Freddy Barstad from UNINETT e-Campus in Norway described the government-funded project on digital assessment, which has focused more on finding suitable IT solutions but has had to address the same issues as the UK work. He illustrated some workflow models which look very similar to those produced here by colleagues in student systems and me when we looked at the workflow for managing grades. They are working to establish what the necessary infrastructure is, and then to create it.

Meanwhile they have compiled a number of technical documents on current best practice, which are being translated into English over the summer. This process has been informed by standards work carried out in the telecommunications field, as many of the same issues of communication, standardisation and interoperability apply. I was very impressed by the practical approach – identifying areas where coordination is preferable to unification, because the diversity of pedagogic practice must continue to be supported.

Tona Radobolja from the University of Zagreb introduced the TALOE Webtool, which aims to support staff thinking about assessment by matching the learning outcomes they select with suitable assessment methods. The intention is to help ensure alignment of assessment with learning outcomes. The tool is available to explore online, and the project team welcome suggestions for developments – suggestions at the session included widening the range of assessment methods and tools covered, and integration with an LMS so that staff can use it while they design their course. The SRCE centre in Zagreb already has strong links with the University of Edinburgh, and their webinar series features several contributions from University of Edinburgh staff.

A number of suppliers attended the event, and after short presentations there were opportunities to look more closely at each of their products. Three of them – PebblePad, Learn, and the DigitalAssess Adaptive Comparative Judgement system – are in use or being piloted here in Edinburgh. All of these, and Canvas LMS, are looking at developing their support for peer review and marking, mobile devices, and provision of feedback. D2L have a different sort of LMS, Brightspace, which aims to use a combination of text mining and data analytics to monitor and support student performance by personalising each student's pathway through learning. One can imagine this approach might appeal to managers with very large cohorts and a subject area which could be “programmed” suitably, but I have my doubts whether it can truly support improved learning or improved content transfer.

In the afternoon Gill Ferrell gave us much food for thought when reviewing the JISC-related work on encouraging institutional change in assessment practice and where the main barriers may lie. The issues around cultural change and the provision of appropriate staff support and development are clearly widespread beyond the UK. The scalability of some proposed innovations is only one of a number of issues.

Rola Ajjawi from the University of Dundee described the interACT Project within her programme, which aims to introduce a more dialogic feedback process at a scale that is workable for the very large online MSc in Medical Education on which she works. This involved re-working the assessment schedule and style, to assess over a longer period but to include feed-forward dialogue opportunities. An interactive cover sheet for the assignment allows students to highlight those aspects of the work they particularly want feedback on, against a rubric specific to that assignment. The tutor then provides this feedback, and the cycle is completed when the students create a self-evaluation entry outlining the action they plan to take to improve their submission next time. The whole cycle is tracked online so that a mapping of the interaction is available for students and staff. Staff training was essential to the success of this project, and it did take more time than the previous traditional marking process, but the evidence suggests such an improvement that both staff and students are pleased with the process.

Nora Mogey from the University of Edinburgh’s Institute for Academic Development (IAD) outlined some findings from pilot work that IAD and Information Services have been supporting, using the Adaptive Comparative Judgement (ACJ) tool from DigitalAssess. We have been especially interested in using this tool with students to provide them with a peer review opportunity. Findings from an early pilot suggest that the students who benefit most from this sort of exercise (i.e. using rubrics to review others’ work, and having multiple comments from others on their own work) are those in the middle range – improvements may be as much as a whole grade classification. There are further pilots being evaluated at present, but interim feedback from students suggests that this approach is encouraging a more reflective approach to creating assignments.

The final session of the day started with a stimulating presentation on Assessment Analytics from Adam Cooper from CETIS and the LACE Project (Learning Analytics Community Exchange). He emphasised the need to keep learning analytics focused on actionable information which supports student learning, and listed some areas he feels are currently neglected:

- What can we get from small data

- ‘Intermediate technology’ (e.g. Excel)

- Contextualisation of data

- Understanding without prediction

- Personalisation of actionable information.

He gave some interesting examples of ways in which “small data” can be used to help staff and students understand their actions and therefore identify what remediation would be helpful. One which stuck in my mind was a visualisation of student grades against rubric-based essay feedback which identified whether an introductory paragraph was good, inadequate, or absent. As the “absent” and “inadequate” feedback clustered around the lower grades, with a scattering around the median, this could be a useful tool to take into discussion with students around “Why a good essay structure is important”.
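To give a rough feel for how little is needed for this kind of “small data” view, here is a minimal sketch in Python that cross-tabulates grade bands against rubric feedback on the introduction. It is not the visualisation shown in the talk: the records, the band thresholds and the function names (`grade_band`, `crosstab`, `print_table`) are all invented for illustration.

```python
from collections import Counter

# Hypothetical records: (essay mark as a percentage, rubric feedback on the introduction).
# These values are invented purely for illustration; they are not data from the talk.
records = [
    (72, "good"), (68, "good"), (65, "good"), (61, "inadequate"),
    (58, "good"), (55, "inadequate"), (52, "inadequate"), (48, "absent"),
    (45, "inadequate"), (41, "absent"), (38, "absent"),
]

BANDS = ["60+", "50-59", "<50"]
CATEGORIES = ["good", "inadequate", "absent"]


def grade_band(mark):
    """Map a percentage mark to a coarse band (thresholds are assumptions)."""
    if mark >= 60:
        return "60+"
    if mark >= 50:
        return "50-59"
    return "<50"


def crosstab(rows):
    """Count essays for each (grade band, introduction feedback) pair."""
    return Counter((grade_band(mark), feedback) for mark, feedback in rows)


def print_table(counts):
    """Print the counts as a small text table, one row per grade band."""
    print(f"{'band':<8}" + "".join(f"{c:>12}" for c in CATEGORIES))
    for band in BANDS:
        row = "".join(f"{counts[(band, c)]:>12}" for c in CATEGORIES)
        print(f"{band:<8}{row}")


counts = crosstab(records)
print_table(counts)
```

With real marks and rubric codes exported from a marking tool, the same table (or a simple chart built from it) could be taken straight into a discussion with students; something equivalent could just as easily be done with a pivot table in Excel.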

This very practical but powerful use of learning analytics at a course and individual level appeals to me very much, as it chimes neatly with our current work on understanding how to present student activity data to students in a context that is meaningful and actionable. In the discussions that followed, it was clear that many of us are wary of the “big data” approach and claims made for analytics at institutional level, and that we should be working with teaching staff to identify key questions which would help them to improve courses as well as support student learning.

I left the day rather regretting that I could not stay for the rest of the conference and more discussions with such a diverse and experienced group, but with plenty to follow up on, including looking at assessment workflow within the University here and seeing how well the EMA lifecycle mappings would fit. I will also be watching developments in Learning Analytics with interest, and seeing how we can all be encouraged to consider how to make data analysis meaningful for teaching and learning.

The presentations from the day should be available from the JISC EMA site shortly.