The Moodle quiz offers a huge range of settings for timing, number of attempts, and the feedback offered to students. Deciding how best to use these options is tricky – and it actually forms the basis for some of the questions in the research agenda that I recently developed in collaboration with several colleagues:

Roles of e-assessment in course design

Q27. How can formative e-assessments improve students’ performance in later assessments?

Q28. How can regular summative e-assessments support learning?

Q29. What are suitable roles for e-assessment in formative and summative assessment?

Q30. To what extent does the timing and frequency of e-assessments during a course affect student learning?

Q31. What are the relations between the mode of course instruction and students’ performance and activity in e-assessment?

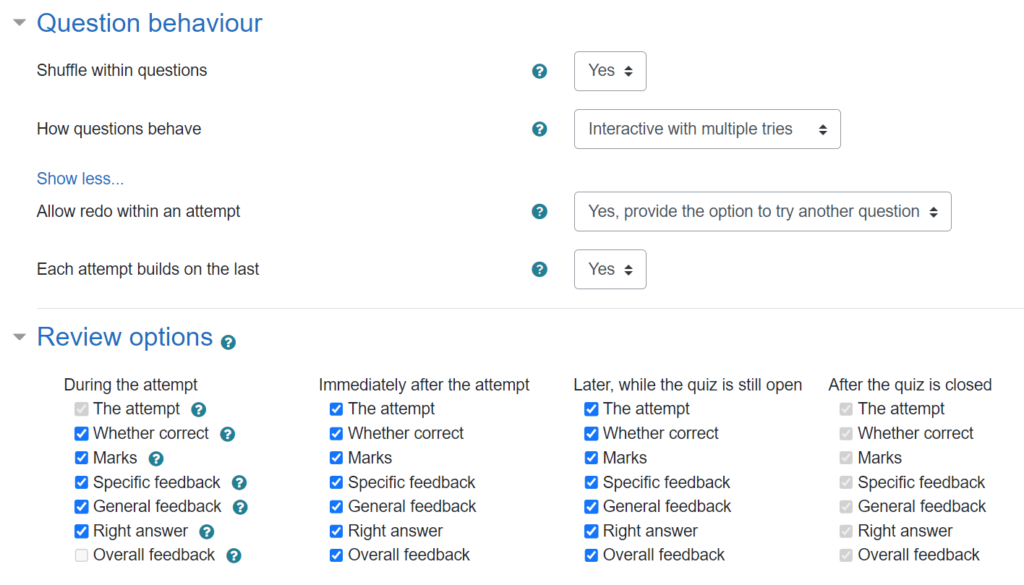

I’m sure that I’ve not explored the full potential of all the Moodle quiz options, but here are some examples of settings that I use in my Fundamentals of Algebra and Calculus course.

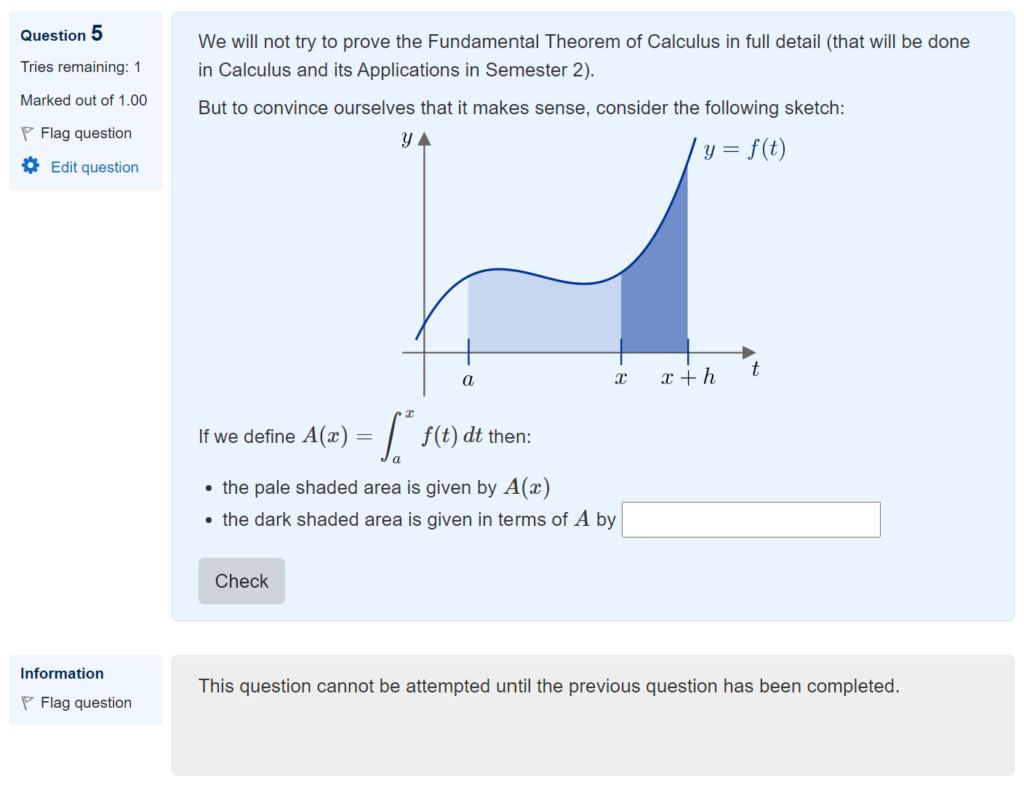

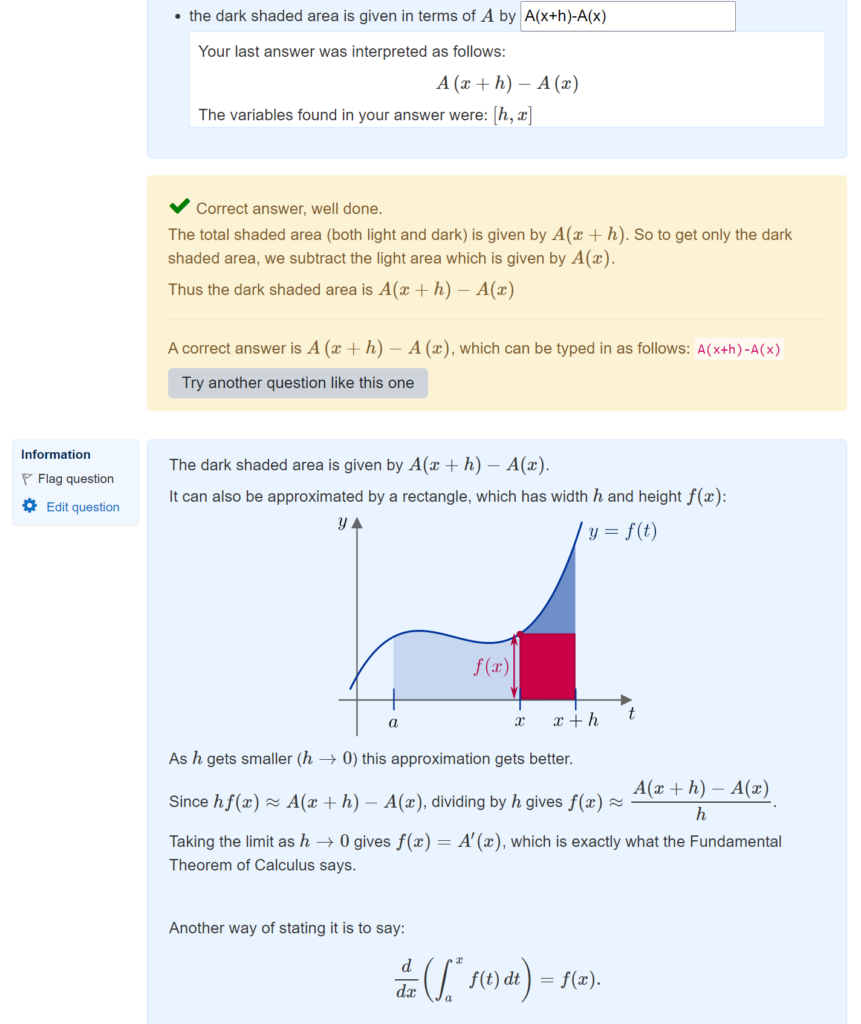

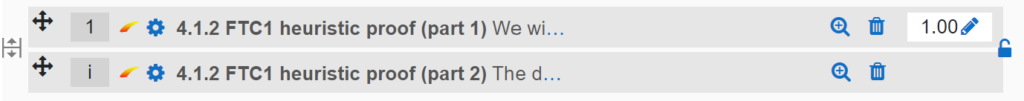

Course materials

For the course materials within FAC, I have put all the feedback settings to the max, including the option to redo individual questions:

The “scores” on those quizzes really don’t matter for anything, and actually most students never even submit the whole quiz to be graded, since they can see the results question-by-question as they go through.

Practice quizzes

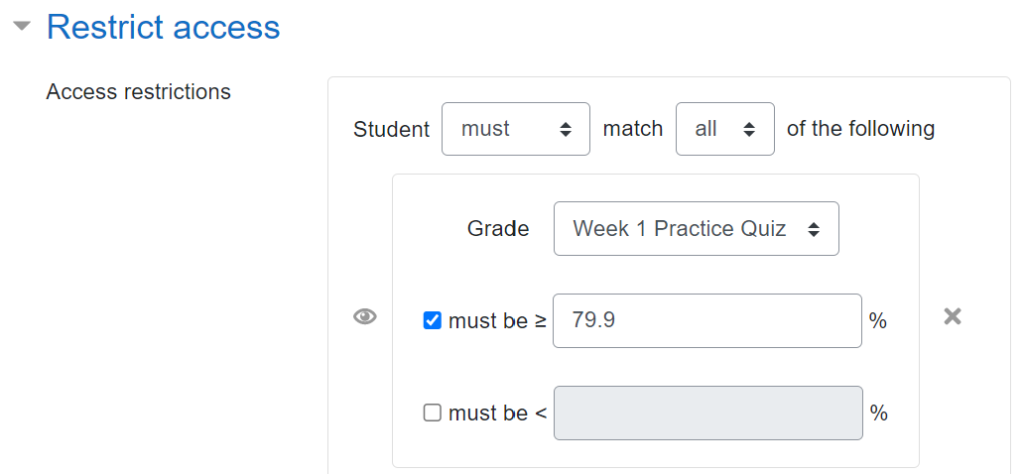

Each week there is an assessed quiz that contributes to the students’ grades (see below) – but before they can take that quiz, they need to score at least 80% on the week’s Practice Quiz.

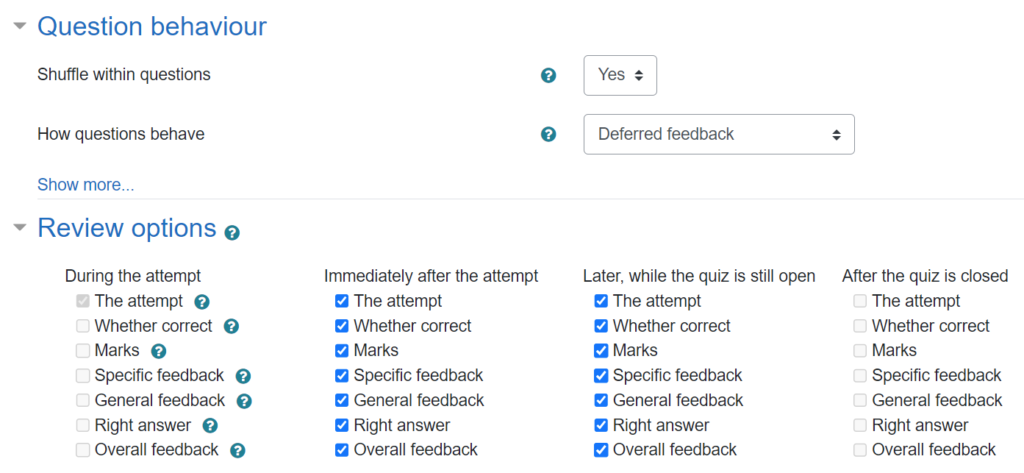

For the Practice Quiz, students can have an unlimited number of attempts – but there is no option to redo individual questions. Instead, students need to complete the whole quiz and submit it to get their score at the end (as preparation for the way it will work in the assessed quiz).

Assessed quizzes

Each week there is a “Final Test” quiz that contributes to the students’ grades. Scores of over 80% are a “Mastery” result, and students need to get at least 7 Mastery results across the 10 weeks to pass the course. The grading scheme is a bit more complicated than that; you can see the full details in my paper about the course design and my post about how to set it up in the Moodle gradebook.
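As a rough illustration of the headline rule only, here is a minimal Python sketch. It takes "over 80%" literally as a strict inequality, and it ignores the more complicated parts of the grading scheme mentioned above. The function and constant names are hypothetical, and this is not how the Moodle gradebook is actually configured.

```python
# Simplified model of the headline pass rule described in the post.
# Assumption: "over 80%" is read as strictly greater than 80.
MASTERY_THRESHOLD = 80.0  # percent
MASTERY_NEEDED = 7        # Mastery results required across the 10 weeks


def is_mastery(score_percent: float) -> bool:
    """Return True if a Final Test score counts as a Mastery result."""
    return score_percent > MASTERY_THRESHOLD


def passes_course(weekly_scores: list[float]) -> bool:
    """Return True if enough weekly Final Test scores are Mastery results."""
    mastery_count = sum(1 for score in weekly_scores if is_mastery(score))
    return mastery_count >= MASTERY_NEEDED


if __name__ == "__main__":
    scores = [85, 90, 70, 95, 81, 88, 60, 92, 99, 50]
    print(passes_course(scores))
```

In this example, seven of the ten scores are above the threshold, so the student would meet the headline requirement.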

Having the requirement to score at least 80% on the Practice Quiz (which typically has very similar tasks to the Final Test) means that students should be well-prepared to succeed.

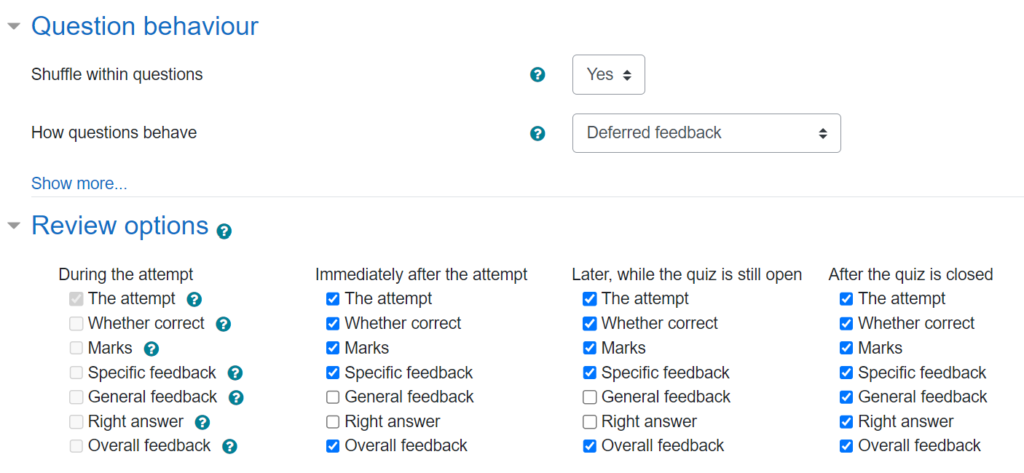

The Final Test itself uses more restrictive settings to control when feedback is available, since I wanted to avoid having worked solutions circulating while other students have yet to complete the quiz. In particular, the “general feedback” (i.e., worked solution) and “right answer” are only available after the quiz is closed:

There is only one attempt allowed at this quiz, with a time limit of 90 minutes from when the quiz is opened. Students need to complete each week’s quiz by a regular deadline. However, if students don’t meet the Mastery threshold, there is a resit version that becomes available the next day (like the Practice Quiz requirement, this is set up using the “restrict access” feature, so that the resit only appears for students who need it).
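The access rules described in this post can be summarised in a short Python sketch. This only models the logic; in Moodle itself the conditions are configured through the "restrict access" settings rather than written as code. The function names are hypothetical, and the treatment of a student with no Final Test attempt is an assumption that the post does not spell out.

```python
from typing import Optional

PRACTICE_THRESHOLD = 80.0  # "at least 80%" on the Practice Quiz
MASTERY_THRESHOLD = 80.0   # "over 80%" on the Final Test (read as strict)


def final_test_unlocked(best_practice_percent: Optional[float]) -> bool:
    """The Final Test appears once the best Practice Quiz score is at least 80%."""
    if best_practice_percent is None:  # no Practice Quiz attempt yet
        return False
    return best_practice_percent >= PRACTICE_THRESHOLD


def resit_unlocked(final_test_percent: Optional[float]) -> bool:
    """The resit appears only for students who did not reach Mastery."""
    # Assumption: a student with no Final Test score is treated as
    # not having met the Mastery threshold.
    if final_test_percent is None:
        return True
    return not (final_test_percent > MASTERY_THRESHOLD)
```

The timing aspects (the 90-minute limit, the weekly deadline, and the resit opening the next day) are left out here for brevity.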

Other approaches?

As I mentioned, I’ve only scratched the surface of what’s possible with the Moodle quiz settings. I know other colleagues have set up quizzes where students can make multiple attempts, and the grade is based on the average of the attempts (to incentivise trying hard on the first attempt, while still allowing students to improve if they’re not happy with it). It’s also possible to set penalties within questions when using the interactive quiz mode (as in the course materials example above): students can redo an individual question if they’re not happy with the score, but possibly with a penalty (again, to encourage them to take the first attempt seriously).
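To make the trade-off between these approaches concrete, here is a minimal Python sketch of averaging attempts versus taking the highest attempt, plus a simple per-try penalty. It is a simplified model under stated assumptions, not Moodle's actual implementation: the function names are hypothetical, and the penalty is assumed to be a fixed fraction of the question's mark deducted for each incorrect try.

```python
from statistics import mean


def quiz_grade(attempt_scores: list[float], method: str = "highest") -> float:
    """Combine the scores from several attempts into one quiz grade."""
    if not attempt_scores:
        raise ValueError("at least one attempt is required")
    if method == "highest":
        return max(attempt_scores)
    if method == "average":
        return mean(attempt_scores)
    raise ValueError(f"unknown grading method: {method}")


def mark_with_penalty(max_mark: float, penalty_fraction: float,
                      wrong_tries: int) -> float:
    """Mark for a question answered correctly after some incorrect tries.

    Assumption: each incorrect try deducts penalty_fraction of max_mark,
    and the mark never drops below zero.
    """
    return max(0.0, max_mark * (1 - penalty_fraction * wrong_tries))


if __name__ == "__main__":
    attempts = [40.0, 90.0]
    print(quiz_grade(attempts, "highest"))
    print(quiz_grade(attempts, "average"))
    print(mark_with_penalty(1.0, 0.25, 1))
```

With a weak first attempt of 40% followed by 90%, averaging gives 65% where taking the highest gives 90%, which is why averaging rewards taking the first attempt seriously.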