Don’t Forget Your Pleases and Thankyous

The home-assistance technology industry has exported its complex software and programmed human-isms into our homes. Apple’s ‘Siri’, Amazon’s ‘Alexa’ and Google’s ‘OK Google’ pose a new challenge to how my body tunes into space, as they blur the categories of subject and object in everyday life.

Attunement is widely understood as ‘tuning in’ to the body and environment (Brigstocke and Noorani, 2016; Stewart, 2011). Listening to the ‘Subservience’ episode of BBC Radio 4’s Digital Human podcast, I realised that my iPhone’s Siri is part of my ‘attunement’ to how I live and act in space.

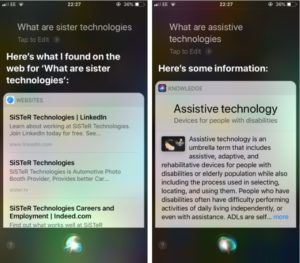

I rarely use Siri, Apple’s ‘intelligent assistant’ (Apple, 2019), finding it slow and rather incapable of understanding me (figures 1-4). On the few occasions I have used Siri, I have spoken calmly and slowly, though never saying please. I wonder whether my slow, broken English, coupled with over-pronunciation, is symptomatic of my attunement to Siri’s technology. An article by Rachel Withers (2018) suggests that how one speaks to these anthropomorphised technologies reflects how one values other humans. The fact that I talk to Siri not in a demanding and brash tone but slightly patronisingly showed me how attunement can act as an analytical tool for addressing my own relations to technologies in the private sphere.

Jeannette Pols (2015) uses attunement to explore smart technologies in elderly healthcare, while avoiding the pitfall of treating ‘attunement’ normatively, with being ‘in harmony with’ cast as good and being ‘out of tune’ as dangerous (Brigstocke and Noorani, 2016). Read through Pols, my engagement with Siri is instead a result of the activities and passivities of all partners. Siri, our environment and I are continually attuning to one another and creating a normativity of relation in my home.

Read through Pols’ research, my interactions with Siri reveal a sensorial aesthetic. The Kantian aesthetic theory of ‘imagination’ coupled with ‘understanding’ is something I only partially experience (Brigstocke and Noorani, 2016). I talk to Siri in a way which exercises my freedom of imagination, directing my body and voice (figures 5-6) with a tone that recognises its ‘humanness’ and therefore its possible (though not yet existent) emotional capacity. I believe, however, that assistive technologies work in many ways to pollute my understanding of my environment. I talk to my abiotic phone as though it were a person, yet remain unable to determine its place in a social hierarchy. I automatically slip into the anthropocentric tendency to assume all more-than-human agents are beneath me (Pols, 2015).

My attitude and tone in addressing these assistants (representative of the historically female/BME/Other servants/slaves) exhibit a deeper implicit reification of my perception of my position in a human hierarchy. I think I need to remember my pleases and thankyous if I am to consider these entities as part of a human hierarchy, whilst navigating the tricky business of not succumbing to the determinism which goes along with such a structure. I wonder how I might physically and verbally attune to new iterations of these assistants, as the advancement of home-assistive technology seems scarily limitless.

Word Count: 505

Cover Image: George Barr (source: http://70sscifiart.tumblr.com/post/183019701127/george-barr)

Bibliography

- Apple (2019) Siri. [online] Apple (United Kingdom). Available at: https://www.apple.com/uk/siri/ [Accessed 18 Mar. 2019].

- Brigstocke, J. and Noorani, T. (2016) Posthuman attunements: aesthetics, authority and the arts of creative listening. GeoHumanities, 2(1), pp. 1-7.

- Pols, J. (2017) How to make your relationship work? Aesthetic relations with technology. Foundations of Science, 22(2), pp. 421-424.

- Stewart, K. (2011) Atmospheric attunements. Environment and Planning D: Society and Space, 29(3), pp. 445-452.

- Withers, R. (2018) I Don’t Date Men Who Yell at Alexa. Slate. [online] Available at: https://slate.com/technology/2018/04/i-judge-men-based-on-how-they-talk-to-the-amazon-echos-alexa.html [Accessed 18 Mar. 2019].