Data and AI are increasingly central to public service media (PSM) ambitions and strategies for the future, but there are serious concerns about the development, use and societal impacts of AI.

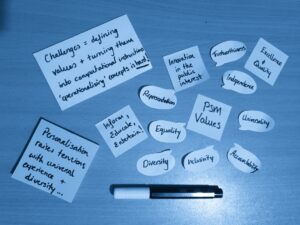

Ethical principles for AI now abound, but how such ethics are enacted in practice varies widely across contexts, and PSM must align such guidance with their own mission- and value-driven frameworks. This workshop focused on the related issues of value and values, asking how AI and data-driven systems can contribute to the creation of public value, and how public service values can be (re-)articulated, translated into computational form and embedded in socio-technical systems.

In this workshop, we explored:

- How might AI be used to help PSM deliver public value?

- How might this be achieved while upholding or strengthening public service values (e.g. impartiality, independence, objectivity, fairness, accuracy, universality, diversity, accountability)?

- Is there a need to re-imagine/re-articulate these values to ensure any benefits of AI are harnessed and shared, and risks minimised?

- What research and development is needed to answer these (and other) crucial questions?

We identified 5 themes:

- Difficulty of defining values & imperfect nature of approximation
  - Defining and articulating values such as impartiality, tolerance or inclusion is difficult, contested and context-dependent – and is itself a normative act.
    - Making these definitions suitable for use in building and deploying AI systems adds another level of complexity.
    - The inherently reductive nature of this translation should be recognised and discussed explicitly. One example is the impact of simplified, imperfect approximations. Another is the impact of tensions and trade-offs between competing or incommensurable values (or their proxies).
  - How might PSM turn their value frameworks, and the normative concepts that underpin them, into computable instructions within socio-technical systems in ways that are implementable and measurable? Is this even always possible?
    - Do PSM need to be more explicit about the criteria they use to judge a process as, for example, impartial? Should they explicate and concretise ‘gut feeling’?
    - This explication could be a very fruitful exercise, creating clarity around standards and enabling accountability to them.
    - But (how) can PSM deal with the inherently contextual dimensions of principles like impartiality when designing automated systems? And how can they ensure that values they are not impartial about, and actively want to promote, are effectively articulated and interpreted for computational processes?
  - Who gets to decide how to define and operationalise these concepts? Whose responsibility and task is it to define public values in an emerging space such as AI?
    - Operationalising algorithmic systems involves ranking the elements to optimise for, so decisions have to be made about priorities. These could come from explicit user choice, implicit inference from data, or both; more research is needed on these approaches.
    - Is there yet space for serious discussion with the public and other stakeholders? Are there mechanisms for contestation and resistance, or co-design approaches?
    - If PSM develop metrics to score how well value goals are met, might this incentivise types of measurement that end up distracting from citizen-driven valuation? What forms of valuation might be useful here?
  - Many different groups are approaching these questions and concerns from different perspectives (academia, the public sector and regulators, technology think tanks, and private-sector industry analysis). Who should PSM listen to, and how should they assess what is valuable?
    - Reflection on the epistemology of this knowledge is necessary here.

- Re-thinking public-interest-driven media
  - Is there also a need to revisit what public values are, and what public value means for PSM, in relation to data, AI, and autonomous and algorithmic systems?
    - Are PSM currently relying on old definitions without adequately reflecting on the contemporary environment? If so, could they benefit from reassessing what they are trying to accomplish and why?
    - Moving too quickly to adopt these tools without such reconfiguration may be detrimental; more investment in responsible use and development seems necessary.
  - There is a need to reflect on PSM’s obligation, and unique position, to ‘innovate in the public interest’. PSM should also focus on providing examples of good practice in this area that could bring broader positive externalities.
    - For example, PSM could recognise that the wider industry standard of optimising data-driven media systems for clicks and engagement is unsatisfactory in a PSM context. Other measures of value and impact need to take a more prominent place.
    - For example, PSM could experiment with different types of recommendation algorithms that optimise for different values or balance values against each other. Users could be given the option to choose between these systems. This would empower them and let them see the impact each choice might have on their information and content diet.
  - There is a case for building in positive goals, not just constraints (e.g. legal, regulatory), which could contribute to PSM’s distinctiveness.
    - For instance, what is missing from existing PSM value frameworks that is needed when dealing with (personal) data and autonomous systems? New forms of trustworthiness? Algorithmic accountability? Intelligibility?
    - Beyond legal responsibilities, what do PSM owe to citizens, audiences and end-users in this regard?
  - New questions arise about what is acceptable for PSM to do, e.g. applying behaviour-change techniques such as nudging. We need to question who gets to decide what is ethical.
  - Finally, there is the question of impacts: how might AI and automated systems change public institutions and shape the public interest, intentionally or accidentally?

- Tensions between universal experience, diversity and personalisation
  - One concrete case in which value tensions are clear and require urgent attention is personalisation in public service media. Personalisation creates opportunities to target certain groups in society. At the same time, it undermines the ‘shared experience’ traditionally valued by PSM.
    - Do we know enough about the benefits of personalisation across different domains to justify personalising PSM services as extensively as many PSM have proposed? Are assumptions being borrowed across domains such as news, entertainment and sport?
    - How, when and why do users benefit from, or become negatively affected by, personalisation? What should the balance be between meeting individual preferences and exposure to diverse content?
    - Personalisation operates at different levels, from within content, to content selection, to menu display.
  - How might the input of users (and of which users) be better incorporated into their data-driven ‘personalised’ experiences?
    - For example, how can we best provide users with agency and autonomy when engaging with news recommender systems? Might doing so allow them to reflect on the impact of optimising for certain values over others? Or might it help them understand how key values such as impartiality, trust and diversity filter into their recommendations?
  - Personalisation raises issues of power asymmetries and second-order effects in relation to third parties.
    - Much public service content is primarily accessed through links shared on other sites, which aggregate and recommend content in their own ways. This suggests a need to take into account how these separate systems might feed into and influence one another.
    - PSM often lack direct access to audience data because big tech companies mediate that access, which creates a challenge.

- Role of oversight & governance in maintaining PSM value alignment
  - A key challenge will be providing the kind of accountability, transparency and contestability needed to ensure that new technologies like AI do not steer the public service media ecosystem out of alignment with its fundamental tasks.
    - Automated systems require more investment in human oversight and judgement, not less – so what new systems of human oversight need to be added to preserve this alignment?
    - Forms of governance will have to be dynamic and very close to practice, which requires new bodies, approaches and skills.
    - There is a need to move from a guidance-based approach of principles and check-box exercises to culturally embedded approaches to governance.
  - Political, financial and societal (e.g. legitimacy) pressures are causing strain. A core question therefore becomes how to ensure that public media have the capacity (skills and expertise, will and licence, etc.) to deal with emerging questions around data and AI.
    - At an organisational level, the ‘will’ to ensure responsible AI is often there. The live challenge is ensuring that practical measures are prioritised and reflected in top-level metrics and in objectives and key results (OKR) frameworks.
    - Some applications of AI may be useful in providing oversight of, and insight into, the large swathes of content PSM produce. For example, they could help organisations monitor the representation of communities, diversity, political balance and due impartiality.
    - But PSM need to ensure that this bolsters more discerning human judgement. It should not, for example, produce quantified ‘scores’ that then come to be treated as ground truth.

- Dialogue, transparency & explanation in value-driven AI systems
  - PSM need to explore how to better engage end-users and intermediate users, such as journalists, in working through these value questions. They also need to ask how they might better incorporate the public’s views on data and AI.
    - But can there be a meaningful feedback loop with users without clear definition, explanation and implementation of a value framework?
    - We don’t yet know what the roles and responsibilities of end-users are or should be, as informed citizens, information consumers, and active nodes in the algorithmic feedback loop.
  - If PSM agree that user and practitioner agency is important here, they need to work out how agency can be defined and made practicable. They also need to ask which values and end goals motivate organisations and teams to work towards agency and autonomy in relation to data-driven systems.
    - Users and practitioners face additional challenges, including automation bias and competing demands on their attention.
  - For example, if PSM want to help users shape the recommendations they are shown, how can they best create a transparent dialogue between user, system and developer/designer?
    - Explainability will be important here, but there is a tendency to see it as a secondary feature that creates little value. Explanations could be made interactive, e.g. by enabling users to question or challenge the system or its predictions, personalise it, or provide feedback. This would involve users in the process of building and improving AI/ML systems.
    - Can engaging with metadata be a lever for users and practitioners to better influence algorithmic systems? If so, how?

-

With thanks to our participants

Dr Bronwyn Jones

Postdoctoral Research Associate in Intelligible AI, University of Edinburgh

Dr Shannon Vallor

Baillie Gifford Chair in the Ethics of Data and Artificial Intelligence at the Edinburgh Futures Institute (EFI), University of Edinburgh

Dr Caitlin McDonald

Postdoctoral Research Associate at Design Informatics, University of Edinburgh

Dr Oliver Escobar

Senior Lecturer in Public Policy, University of Edinburgh

Dr Ben Collier

Lecturer in Digital Methods, University of Edinburgh

Sarah Bennett

PhD Candidate, University of Edinburgh

Youngsil Lee

PhD Candidate, University of Edinburgh

Auste Simkute

PhD Candidate, University of Edinburgh

Aditi Surana

PhD Candidate, University of Edinburgh

Anna Rezk

PhD Candidate, University of Edinburgh

Prof Dr Natali Helberger

Professor of Information Law, University of Amsterdam

Sanne Vrijenhoek

Researcher in the Faculty of Law, University of Amsterdam

Dr Stephann Makri

Senior Lecturer in Human-Computer Interaction (HCI), City University of London

Dr Pieter Verdegem

Senior Lecturer in the School of Media and Communication, University of Westminster

Eleonora Mazzoli

PhD Candidate, LSE

James Fletcher

Responsible AI/ML Lead, BBC

Dr Rhia Jones

Research Lead, BBC

Léonard Bouchet

Co-chair of the European Broadcasting Union’s Artificial Intelligence and Data Initiative (AIDI) & Head of Data and Archives at Radio Télévision Suisse