The following is Part 5 of a five-part student blog series sharing the excellent work of Edinburgh Law School undergraduate and postgraduate students on the Contemporary Issues in Medical Jurisprudence course.

Applications of artificial intelligence (AI) in the COVID-19 crisis response have proven to be crucial supportive tools for governments and medical professionals alike. AI has been implemented in the contexts of detection and diagnosis, disease management and prevention of spread, and vaccine and drug development. While AI’s positive impact should be duly acknowledged, it has been noted that, under the pressure induced by the pandemic, demand for rapid-response technological interventions hindered responsible AI design and use, particularly in relation to privacy. This blog will consider both sides of a legal debate that has arisen in this area: in the face of a global health emergency, how should legal establishments balance the preservation of individual rights of privacy against the protection of collective interests?

Unregulated applications of AI give rise to privacy concerns

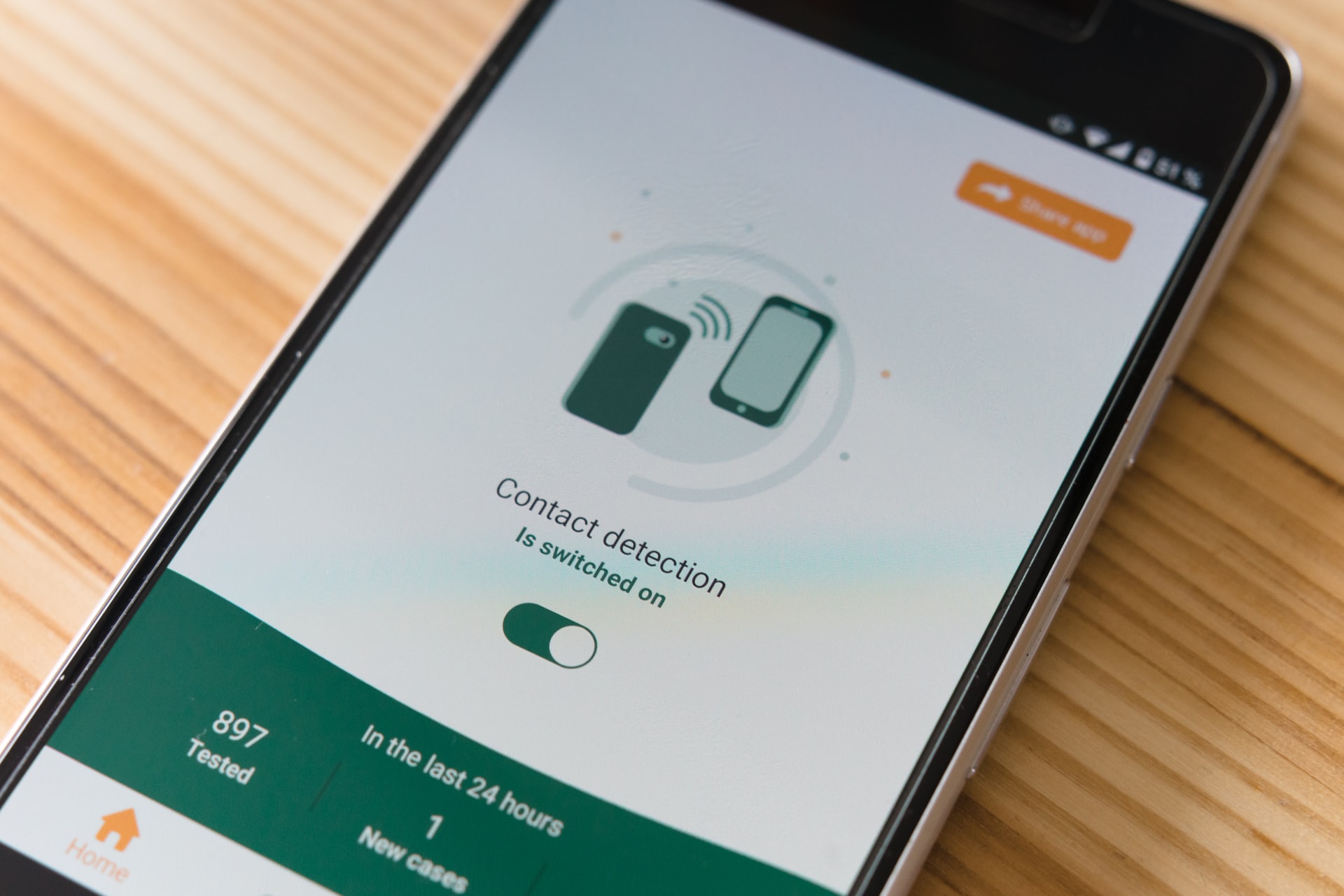

In an attempt to control pandemic risks, many countries have depended heavily on artificial intelligence as a tool for population control and contact tracing, with varying degrees of success. In order to adhere to established data protection laws, measures such as contact tracing applications must be “anonymised, secure, not easily re-identified and kept for no longer than necessary.” In practice, however, and perhaps due to pressures to quickly develop technological solutions, legal concerns about privacy were widely overlooked by developers and medical practitioners. As a result, the use of geolocalisation and facial recognition technologies has given rise to widespread concerns about health data protection breaches. These concerns are not entirely misplaced: an assessment of 40 COVID-19 contact tracing apps revealed that “72.5% of the apps use at least one insecure cryptographic algorithm” and that “three quarters of the apps contained at least one tracker that reports information to third parties such as Facebook Analytics or Google Firebase.”

Furthermore, it is important to note that these legal concerns have cross-jurisdictional relevance. Both the centralised and decentralised database models – the former of which is largely practised by countries in the Asia-Pacific region, while the West champions the latter – can give rise to privacy risks. Consider, for example, the UK’s Track and Trace application’s original ‘centralised’ database, which triggered legitimate worries about potential data misuse. However, even its current decentralised model gives rise to “endemic privacy risks that cannot be removed by technological means, and which may require legal or economic solutions.”

Overly-regulated applications of AI stunt more effective responses to a deadly pandemic

These legal missteps can arguably be excused when considering the unanticipated nature of the pandemic. Some have gone even further, however, positing that eventual regulation of AI tools came with trade-offs in AI’s efficiency and efficacy in responding to the pandemic, which in and of itself raises ethical concerns. For example, White and Van Basshuysen argue that despite legitimate privacy concerns, centralised database models are intrinsically better equipped to effectively and quickly trace positive COVID cases. Due to the blind adoption of decentralised database models that have been inaccurately depicted as “privacy preserving by design”, the debate about digital contact tracing has ultimately “ignored the fact that the failure of a system being effective involves ethical risks too.” In a companion blog, White specifically critiques the UK’s Track and Trace app, stating that the NHS’s original centralised strategy “could have allowed for faster tracing and thus more effective epidemic mitigation.”

The successes of ‘unregulated’ centralised database models are best exemplified in the Asia-Pacific region’s vastly superior performance in suppressing the pandemic in comparison to North Atlantic countries. Widespread and swift application and social acceptance of restrictive safety measures in East Asian countries such as Singapore, China, South Korea, Taiwan and Japan are cited as key factors for these early and sustained successes. Western detractors of China, for example, are quick to criticise these measures as aggressive mass surveillance “imposed by an authoritarian government.” Less sceptical analyses give more weight to the data available: the Asia-Pacific region’s consistent success in suppressing COVID can be directly linked back to these countries’ decision to prioritise efficiency over perceived privacy, and has resulted in a mortality rate (deaths per million) 42 times lower in the Asia-Pacific region than in the North Atlantic region. In light of such statistical evidence, is it possible that Western preoccupations with regulating AI out of concerns for privacy have caused the legal establishment to lose sight of what matters most: protecting human life?

How should the West’s legal establishment respond to these tensions?

Despite the West’s apparently problematic fixation on privacy, there has been little to no actual enforced legal regulation in response to the anxieties expressed by academics and civil society. In the US context, for example, it has been noted that “data regulation would likely have helped determine what types of emergencies should be subject to the collective interest over individual rights” but that “congress has made no progress in the last two years on such a law.” Similarly, European responses to “technological solutions that infringe on individual freedoms” seem to begin and end at re-emphasising the continued relevance of existing legal frameworks relating to data protection, such as the Council of Europe’s Convention 108(+).

A balance must be struck between dignitary concerns surrounding data protection breaches and the protection against imminent risks to public health. Ho et al. explicitly state that “weighing these considerations is a complex endeavour”, since “in circumstances as severe as a pandemic, failure to deploy effective AI can itself lend to dignitary harm by neglecting tools that could help control a pandemic more effectively.” Despite such complexities, proposals to institutionalise “robust evaluation frameworks” could become some of the first tangible steps that the law can take in assessing the legality of AI tools. Ultimately, there is much to learn from the legal establishment’s reactions (or lack thereof) to applications of AI in the pandemic – it would be irresponsible not to take advantage of the information we have gathered to be better prepared for the possibility of another global health crisis.

Image by Markus Winkler on Unsplash.