Creating the Seville 2009 ant habitat

First, the 10 m × 10 m experimental area was divided into a 1 m × 1 m grid using metal markers. This allowed the layout of grass tussocks and bushes to be mapped onto squared paper. The map gave the 2D position of all vegetation but not its height.
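To use the hand-drawn map in software, positions read off the squared paper have to be converted to metric coordinates. The sketch below assumes a hypothetical recording scheme: each point is noted as the zero-based (column, row) index of its 1 m grid square plus a fractional offset within that square. The name `map_to_metres` and the example outline are illustrative only and are not part of the original workflow.

```python
GRID_SPACING_M = 1.0  # 1 m x 1 m squares marked out in the field
AREA_SIZE_M = 10.0    # 10 m x 10 m experimental area

def map_to_metres(col, row, frac_x, frac_y):
    """Convert a hypothetical grid reference to metric (x, y) coordinates.

    col, row: zero-based index of the 1 m grid square on the paper map.
    frac_x, frac_y: position within that square, as fractions in [0, 1).
    """
    if not (0.0 <= frac_x < 1.0 and 0.0 <= frac_y < 1.0):
        raise ValueError("fractional offsets must lie in [0, 1)")
    x = (col + frac_x) * GRID_SPACING_M
    y = (row + frac_y) * GRID_SPACING_M
    if not (0.0 <= x < AREA_SIZE_M and 0.0 <= y < AREA_SIZE_M):
        raise ValueError("position lies outside the mapped area")
    return x, y

# A tussock outline traced on the paper map (made-up grid references).
outline = [map_to_metres(3, 4, 0.2, 0.1), map_to_metres(3, 4, 0.8, 0.3),
           map_to_metres(4, 4, 0.1, 0.7), map_to_metres(3, 5, 0.5, 0.2)]
print(outline)
```

Storing each tussock as a polygon in metres makes the later steps (placing triangles inside a tussock, measuring camera-to-tussock distances) straightforward geometry.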

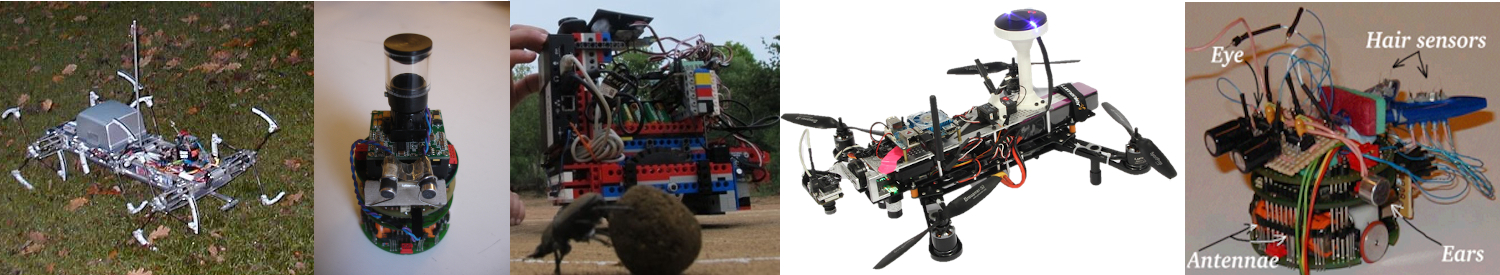

A database of panoramic images was therefore collected, from which the heights of the grass tussocks could be measured. A custom wireless panoramic camera system was built for this purpose (see image below). After the camera had been aligned and levelled, images were captured at the points of a 50 cm × 50 cm grid and sent wirelessly to a concealed laptop (Hauppauge WinTV-HVR system). In total, 424 images were sampled within the 10 m × 10 m test area. The images were then unwrapped (1° resolution, 360° azimuth, 0° to 45° elevation) using the OCamCalib Toolbox for MATLAB [1].

By referencing the 2D map described above, and knowing the alignment of the camera, all grass tussocks visible in the panoramic image database were labelled manually. Every tussock was identified at least once, and many were visible in several images. In each image, the highest point of each tussock was recorded manually, and a simple trigonometric transform (possible because the distance from the camera to the centre of the tussock and the angle of elevation are known) was used to calculate the height of the tussock (see [2] for more details). The outcome was a list of heights for each tussock, as viewed from different perspectives (image locations).
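The height calculation can be sketched as follows. Assuming the top of a tussock lies directly above its mapped centre, a tussock at horizontal distance d from the camera whose highest point appears at elevation angle θ has height h = h_cam + d·tan θ, where h_cam is the height of the camera's optical centre above the ground. The camera height is not given in the text, so it is a required, hypothetical parameter here; the function names and the plain averaging of views per tussock are likewise assumptions for illustration.

```python
import math
from collections import defaultdict
from statistics import mean

def tussock_height(cam_xy, tussock_xy, elevation_deg, cam_height):
    """Height of a tussock top from one panoramic view.

    Assumes the top lies above the mapped tussock centre, so the horizontal
    distance d is camera-to-centre and h = cam_height + d * tan(elevation).
    cam_height is hypothetical: the camera height is not stated in the text.
    """
    d = math.dist(cam_xy, tussock_xy)
    return cam_height + d * math.tan(math.radians(elevation_deg))

def heights_per_tussock(observations, cam_height):
    """Group per-view heights by tussock and average them.

    observations: iterable of (tussock_id, cam_xy, tussock_xy, elevation_deg),
    one entry per tussock per image in which it was labelled.
    Returns {tussock_id: (list_of_heights, mean_height)}.
    """
    per_tussock = defaultdict(list)
    for tid, cam_xy, t_xy, elev in observations:
        per_tussock[tid].append(tussock_height(cam_xy, t_xy, elev, cam_height))
    return {tid: (hs, mean(hs)) for tid, hs in per_tussock.items()}

# Example with made-up numbers: one tussock seen from two camera positions.
obs = [("T1", (0.0, 0.0), (1.0, 0.0), 20.0),
       ("T1", (0.5, 0.0), (1.0, 0.0), 35.0)]
print(heights_per_tussock(obs, cam_height=0.1))
```

Keeping the full list of per-view heights alongside the mean makes it easy to inspect how consistent the estimates from different image locations are.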

The data described above provided enough information to construct a geometrically accurate simulated environment. To this end, we used the MATLAB-based world-building methodology described in [3], which generates natural-looking tussocks from clusters of black triangles. In our world, triangles were constrained to emerge from within the mapped tussocks and to extend upwards to the tussock's mean measured height plus some random noise. Triangles were oriented vertically, and their angle of elevation was sampled from a normal distribution.
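A minimal sketch of these triangle-placement constraints follows, assuming each tussock footprint is stored as a polygon in metres. It is not the code from [3]. Base points are drawn by rejection sampling inside the footprint (an even-odd ray-casting test). Apex heights are the tussock's mean height plus Gaussian noise. Each triangle lies in a vertical plane with a random azimuth, and the blade's elevation angle from the ground is drawn from a normal distribution. All numeric defaults (`height_sd`, `elev_mean_deg`, `elev_sd_deg`, `base_width`) are hypothetical placeholders.

```python
import math
import random

def point_in_polygon(x, y, poly):
    """Even-odd ray-casting test; poly is a list of (x, y) vertices in metres."""
    inside = False
    n = len(poly)
    for i in range(n):
        x1, y1 = poly[i]
        x2, y2 = poly[(i + 1) % n]
        # Does this edge straddle the horizontal line through y?
        if (y1 > y) != (y2 > y):
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside

def sample_base_point(poly, rng):
    """Rejection-sample a point uniformly inside the tussock footprint."""
    xs = [p[0] for p in poly]
    ys = [p[1] for p in poly]
    while True:
        x = rng.uniform(min(xs), max(xs))
        y = rng.uniform(min(ys), max(ys))
        if point_in_polygon(x, y, poly):
            return x, y

def make_triangle(poly, mean_height, rng, height_sd=0.05,
                  elev_mean_deg=75.0, elev_sd_deg=10.0, base_width=0.02):
    """Return one triangle as three (x, y, z) vertices, z = 0 at ground level.

    All keyword defaults are hypothetical placeholders, not measured values.
    """
    bx, by = sample_base_point(poly, rng)
    # Apex height: tussock mean height plus Gaussian noise, kept positive.
    h = max(0.01, rng.gauss(mean_height, height_sd))
    # Blade elevation from the ground, normally distributed, clamped to [10, 90] deg.
    elev = math.radians(min(90.0, max(10.0, rng.gauss(elev_mean_deg, elev_sd_deg))))
    # Random azimuth of the vertical plane that contains the triangle.
    phi = rng.uniform(0.0, 2.0 * math.pi)
    ux, uy = math.cos(phi), math.sin(phi)
    half = base_width / 2.0
    lean = h / math.tan(elev)  # horizontal offset of the apex from the base point
    return [(bx - half * ux, by - half * uy, 0.0),
            (bx + half * ux, by + half * uy, 0.0),
            (bx + lean * ux, by + lean * uy, h)]

# Example: one triangle in a made-up 30 cm square footprint, mean height 0.25 m.
square = [(2.0, 3.0), (2.3, 3.0), (2.3, 3.3), (2.0, 3.3)]
print(make_triangle(square, 0.25, random.Random(42)))
```

Rejection sampling is simple and exact for any simple polygon, concave or not, at the cost of wasted draws when a thin footprint fills little of its bounding box.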

Finally, we added realistic colouring to the tussocks. The model simulates the spectral content of the world by modelling the visible-light photoreceptors of the ant eye [4], which make up the majority of the central and lower visual field. During our 2012 field study, a database of photographs of the field site was taken 5 cm above the ground using a specially adapted camera that could capture ultraviolet as well as visible wavelengths. Fitted with a bandpass filter (Schott BG18; pass band 415 nm to 575 nm, peak transmittance at 515 nm), the camera produces greyscale images that approximate the photoreceptor response. The distribution of greyscale values from a random selection of these images was then used to calculate a mean and standard deviation for the visible-light component of the scene. These values were in turn used to generate, for every polygon, a random value for the green channel in RGB space.
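The colouring step can be sketched as follows, assuming the filtered greyscale images are available as 2D arrays of 0-255 grey levels. Two details are assumptions rather than statements from the text: green values are drawn from a normal distribution with the measured mean and standard deviation (the text only says a random value is generated), and the red and blue channels are set to zero. Function names are illustrative.

```python
import random
from statistics import mean, pstdev

def grey_stats(images):
    """Pool the grey levels (0-255) of all sample images; return (mean, SD)."""
    values = [v for img in images for row in img for v in row]
    return mean(values), pstdev(values)

def polygon_colours(n_polygons, mu, sigma, rng):
    """One RGB colour per polygon with only the green channel varying.

    Assumptions: green values are drawn from a normal distribution N(mu, sigma),
    rounded and clipped to 0-255; red and blue are fixed at 0.
    """
    colours = []
    for _ in range(n_polygons):
        g = min(255, max(0, round(rng.gauss(mu, sigma))))
        colours.append((0, g, 0))
    return colours

# Example with two tiny made-up "images" standing in for filtered photographs.
sample_images = [[[90, 110], [120, 100]], [[80, 130], [105, 95]]]
mu, sigma = grey_stats(sample_images)
print(polygon_colours(5, mu, sigma, random.Random(7)))
```

Clipping keeps every sample a valid 8-bit channel value; with a mean well inside 0-255 and a moderate standard deviation, very few draws are affected.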

The complete world is generated from 5000 triangles randomly distributed across the 234 tussocks, a number we found to give the best trade-off between view authenticity and image-rendering speed. The final 3D environment and rendering code can be downloaded at AntNavigationChallenge.
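One way to spread a fixed triangle budget across the tussocks is to assign each triangle to a tussock independently at random. The sketch assumes uniform assignment by default, with optional per-tussock weights (for example footprint area, a hypothetical choice not stated in the text); `allocate_triangles` is an illustrative name.

```python
import random
from collections import Counter

def allocate_triangles(n_triangles, tussock_ids, rng, weights=None):
    """Assign each triangle to a tussock independently at random.

    weights=None gives uniform assignment; otherwise weights (e.g. footprint
    areas, a hypothetical choice) set the relative probability per tussock.
    Returns {tussock_id: triangle_count}, including tussocks that got 0.
    """
    picks = rng.choices(tussock_ids, weights=weights, k=n_triangles)
    counts = Counter(picks)
    return {tid: counts[tid] for tid in tussock_ids}

# The totals quoted in the text: 5000 triangles over 234 tussocks.
alloc = allocate_triangles(5000, list(range(234)), random.Random(0))
print(sum(alloc.values()), min(alloc.values()), max(alloc.values()))
```

Uniform assignment gives every tussock the same expected count (about 21 triangles for 5000 over 234); weighting by footprint area would instead keep triangle density roughly constant across tussocks of different sizes.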

References

[1] Scaramuzza, Davide, Agostino Martinelli, and Roland Siegwart. “A toolbox for easily calibrating omnidirectional cameras.” In IEEE/RSJ International Conference on Intelligent Robots and Systems. IEEE (2006).

[2] Mangan, Michael. “Visual homing in field crickets and desert ants: A comparative behavioural and modelling study.” Ph.D. Thesis, University of Edinburgh (2011).

[3] Baddeley, Bart, Paul Graham, Philip Husbands, and Andrew Philippides. “A model of ant route navigation driven by scene familiarity.” PLoS Comput Biol 8, no. 1 (2012): e1002336.

[4] Mote, Michael I., and Rüdiger Wehner. “Functional characteristics of photoreceptors in the compound eye and ocellus of the desert ant, Cataglyphis bicolor.” Journal of Comparative Physiology 137, no. 1 (1980): 63-71.