Our research

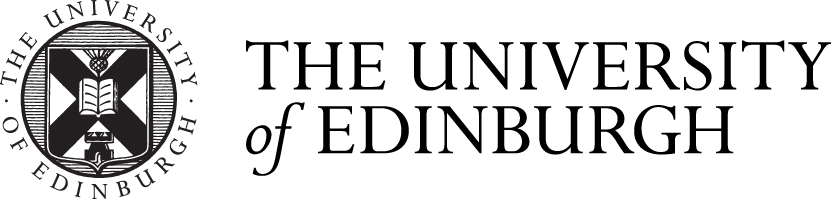

Building robots to understand insect behaviour

We research and model the sensorimotor capabilities of insects. This ranges from simple reflexive behaviours such as the phonotaxis of crickets, to more complex capabilities such as multimodal integration, navigation and learning. We carry out behavioural experiments on insects, but principally work on computational models of the underlying neural mechanisms, which are often embedded on robot hardware. To find out more, see this short video, look at some of our projects, our recent publications, the homepages of people in the lab, or contact us directly.

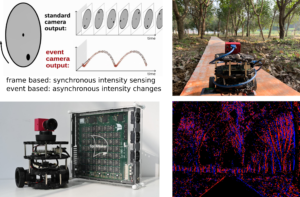

Insect-inspired neuromorphic nanophotonics

Insects are capable of amazing autonomous feats well beyond current computers, such as navigating across hundreds of kilometres. Here, we combine our expertise in nanotechnology to realise artificial neural networks inspired by neurobiology.

As a proof of concept, we target the complete pathway from polarised-light sensing in the insect eye to the internal compass and memory circuits that integrate this information into a continuous, accurate estimate of location. You can find more information about the project here.
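The last step of that pathway, integrating compass heading and speed into a running estimate of location, is known as path integration. The Python sketch below illustrates it under simplifying assumptions. A hypothetical ring of eight cosine-tuned "compass columns" stands in for the polarised-light compass. Heading is supplied directly rather than computed from polarised light. A per-column memory accumulates speed-weighted compass activity. The names `N_COLUMNS`, `compass_activity`, `integrate`, `decode` and `home_vector` are illustrative only and do not represent the project's models or code.

```python
import math

# Hypothetical compass population: N_COLUMNS units with evenly spaced
# preferred headings (radians). Any N >= 3 gives an exact decode.
N_COLUMNS = 8
PREFERRED = [2 * math.pi * i / N_COLUMNS for i in range(N_COLUMNS)]


def compass_activity(heading):
    """Cosine-tuned population response to the current heading (radians).
    Stands in for the compass signal derived from polarised light."""
    return [math.cos(heading - p) for p in PREFERRED]


def integrate(steps):
    """Accumulate speed-weighted compass activity into per-column memory.

    steps: iterable of (heading_rad, speed, dt) samples.
    """
    memory = [0.0] * N_COLUMNS
    for heading, speed, dt in steps:
        for i, a in enumerate(compass_activity(heading)):
            memory[i] += a * speed * dt
    return memory


def decode(memory):
    """Read out the outbound displacement (x, y) from the population memory.
    The 2/N factor undoes the sum of cos^2 over evenly spaced columns."""
    scale = 2 / N_COLUMNS
    x = scale * sum(m * math.cos(p) for m, p in zip(memory, PREFERRED))
    y = scale * sum(m * math.sin(p) for m, p in zip(memory, PREFERRED))
    return x, y


def home_vector(memory):
    """Distance and direction (radians) pointing back to the start."""
    x, y = decode(memory)
    return math.hypot(x, y), math.atan2(-y, -x)


# Usage: walk 10 units east, then 5 units north.
mem = integrate([(0.0, 1.0, 10.0), (math.pi / 2, 1.0, 5.0)])
print(decode(mem))
print(home_vector(mem))
```

With evenly spaced, cosine-tuned columns, the population memory is equivalent to storing the x and y components of the outbound displacement, so the decoded vector matches ordinary vector summation. The population form is shown only because it maps more naturally onto neural circuits. Real insect models also have to cope with noise, leaky memory and imperfect compass cues, which this sketch ignores.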

This is a collaboration of research groups at Lund University, the University of Copenhagen, the University of Edinburgh, and the University of Groningen; it is funded by the EC in the Horizon Europe programme (GA 101046790); and it started in April 2022.

Insect-inspired artificial intelligence

While contemporary artificial intelligence (AI) has become increasingly powerful, the most celebrated AI models are hungry for high-quality data and rely on expensive, advanced computing, which raises new concerns and challenges, such as the sustainability of AI. An efficient alternative could therefore play a complementary role, benefitting the field of AI and potentially all of us. We believe insect brains naturally offer inspiration for novel designs that are both efficient and robust, because insects, despite their tiny brains, are capable of learning, planning and problem-solving.

Our project draws insight mainly from the mushroom body (MB) neuropil in insect brains, which is believed to be the ‘computing centre’ for rapid associative learning from minimal data, guiding and coordinating robust behaviour in complex, dynamic environments. We aim to bring together our scientific understanding of the MB and to test insect-inspired AI designs in tasks such as visual recognition and robot navigation.
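Rapid associative learning of the kind attributed to the MB is often modelled as a sparse, high-dimensional expansion followed by plastic output synapses. The Python sketch below illustrates that idea under several assumptions. Inputs connect to Kenyon cells (KCs) through random, sparse wiring. A k-winners-take-all rule stands in for the inhibitory feedback that keeps KC activity sparse. A single output neuron (MBON) has its synapses from active KCs depressed when a pattern is learned, so familiar inputs produce low output. All sizes and names (`N_KC`, `MushroomBody`, `learn`, `novelty`) are hypothetical choices for illustration. They do not describe the project's own models.

```python
import random

# Hypothetical sizes, chosen only for illustration.
N_INPUT = 50     # projection-neuron inputs
N_KC = 2000      # Kenyon cells (expansion layer)
FAN_IN = 6       # random inputs sampled by each KC
SPARSITY = 0.05  # fraction of KCs allowed to be active


class MushroomBody:
    def __init__(self, seed=0):
        rng = random.Random(seed)
        # Each KC sums a small random subset of the inputs.
        self.connections = [rng.sample(range(N_INPUT), FAN_IN)
                            for _ in range(N_KC)]
        # KC -> MBON weights start at 1 and are depressed by learning.
        self.kc_to_mbon = [1.0] * N_KC

    def kc_code(self, pattern):
        """Return the set of active KCs: the top-k by summed input drive
        (k-winners-take-all as a stand-in for sparse inhibition)."""
        drive = [sum(pattern[j] for j in conn) for conn in self.connections]
        k = max(1, int(SPARSITY * N_KC))
        winners = sorted(range(N_KC), key=lambda i: drive[i], reverse=True)[:k]
        return set(winners)

    def learn(self, pattern):
        """One-shot learning: silence synapses from the KCs this pattern activates."""
        for i in self.kc_code(pattern):
            self.kc_to_mbon[i] = 0.0

    def novelty(self, pattern):
        """Mean MBON weight over active KCs: 1.0 = unseen, 0.0 = learned."""
        active = self.kc_code(pattern)
        return sum(self.kc_to_mbon[i] for i in active) / len(active)


# Usage: one presentation makes a pattern familiar.
rng = random.Random(1)
seen = [rng.random() for _ in range(N_INPUT)]
unseen = [rng.random() for _ in range(N_INPUT)]
mb = MushroomBody(seed=0)
mb.learn(seen)
print(mb.novelty(seen), mb.novelty(unseen))
```

A single presentation drops a pattern's novelty to zero. An unrelated pattern activates a mostly different set of KCs and so keeps a high score. This one-shot, low-data behaviour is what makes the MB an attractive template for efficient learning. Published MB models vary considerably in their wiring, sparsity mechanism and learning rule.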

GRASP Project

The current rather limited ability of robots to grasp diverse objects with efficiency and reliability severely limits their range of application. Agriculture, mining and environmental clean-up are just three examples where – unlike a factory – the items to be handled could have a huge variety of shapes and appearances, need to be identified amongst clutter, and need to be grasped firmly for transport while avoiding damage. Secure grasp of unknown objects amongst clutter remains an unsolved problem for robotics, despite improvements in 3D sensing and reconstruction, in manipulator sophistication and the recent use of large-scale machine learning.

Ants, however, with relatively simple, robot-like ‘grippers’ (their mandibles), limited sensing, and tiny brains, can pick up and carry a wide diversity of items. From seeds to larvae or other insect prey, these items vary enormously in shape, size, rigidity and manoeuvrability. Ants display remarkable abilities that often outperform the best robotic approaches. This project aims to understand how the ant brain solves such complex challenges, and to derive new control mechanisms for robotic grasping from this understanding.

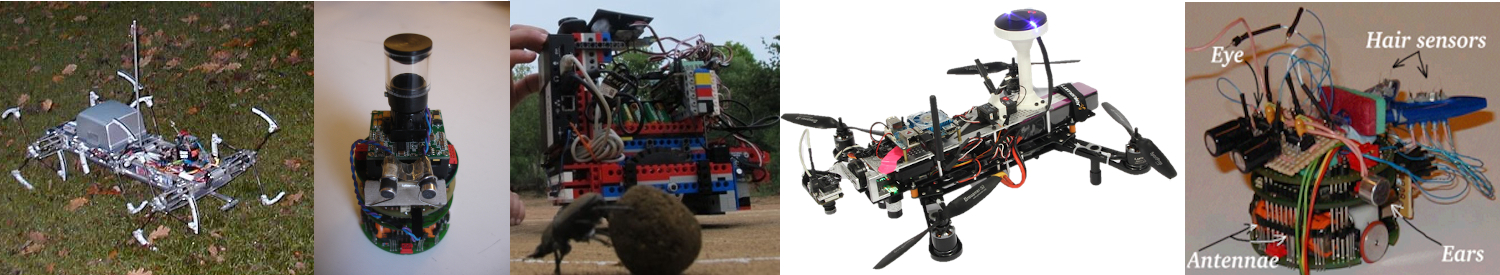

Insect-Inspired Depth Perception

Current depth-sensing technologies are still limited by high computational demands and a reliance on successful feature extraction. Similarly, alternative methods for extracting depth, such as light-field cameras and active-sensing approaches, often struggle with dynamic scenes and have high computational costs or high power consumption. Our project aims to develop novel depth-sensing solutions suited to these challenges by drawing inspiration from the insect eye.

Recent Drosophila research reveals that photoreceptors in the compound eye twitch in response to changes in light intensity. These microsaccades enable high resolution and provide stereo vision over a range previously assumed to be impossible for insect eyes.

Here, we seek to understand how the dynamic properties of the insect eye can be used to recover depth information, and to implement and test the same operating principles in multilevel models and robotic applications.

This project is a collaboration of the University of Edinburgh, the University of Sheffield and industrial project partners Opteran and Festo. It is funded by the EPSRC.