If you have watched Silicon Valley, the TV series following the struggles of a startup tech company called Pied Piper, you may recall the closing scene of the third season’s penultimate episode. Facing the risk of bankruptcy, Pied Piper’s business advisor decides to buy fake app users from a South Asian “click farm”, in the hope of attracting investors.

Click farms, large groups of workers paid to boost the visibility of websites or social media accounts, are just one example of the outsourced, underpaid human labor on which Western tech firms rely.

Astra Taylor has coined the term “fauxtomation” to describe the process that renders human labor invisible and reinforces the illusion that machines are smarter than they are. A typical example of fauxtomation is Amazon Mechanical Turk (MTurk), one of the many crowdsourcing platforms used to recruit human labor online.

The “Turkers”, anonymous workers who live mostly in India and in lower-income South Asian countries (Ross et al. 2010), perform “Human Intelligence Tasks” (“HITs”) for a minimum reward of $0.01 per assignment. HITs include transcribing audio, entering information into spreadsheets, tagging images, and looking up email addresses or other information on websites.

Before a machine is actually capable of understanding the connections between pieces of content (e.g. recognizing a certain object or face in a picture), a human has to mark what is salient in that content. MTurkers therefore manually label thousands of images, creating the large-scale datasets on which AI developers train machine learning algorithms.

Much of the automation of current AI technologies relies on this outsourced, low-paid workforce. As Taylor puts it, “Amazon’s cheeky slogan—‘artificial artificial intelligence’—acknowledges that there are still plenty of things egregiously underpaid people do better than robots” (Taylor 2018).

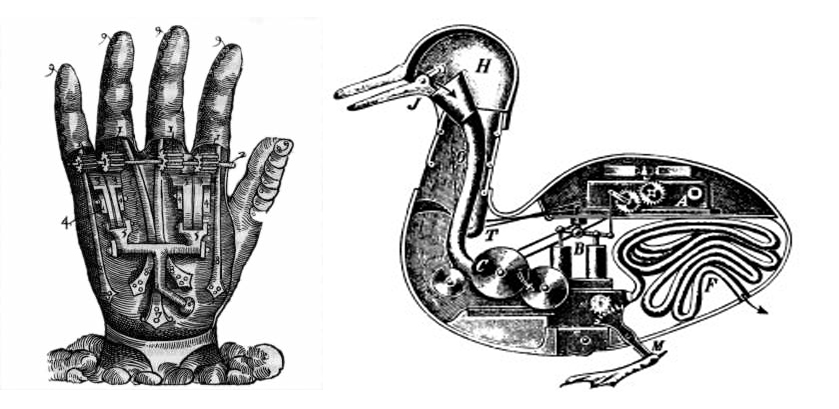

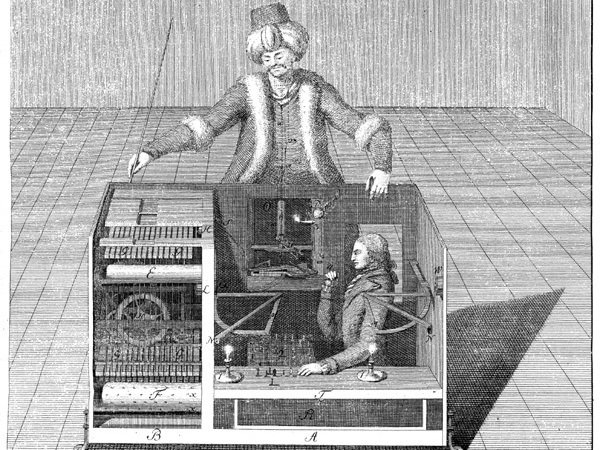

It is not surprising that Amazon’s platform is named after the famous Mechanical Turk, or Automaton Chess Player, the chess-playing machine constructed in the late eighteenth century by Wolfgang von Kempelen. Like other contemporary “automata” explored in the previous blog entry, Kempelen’s creature was a hoax: a human operator sat inside the machine and played the game through a series of levers that controlled the Turk’s arms.

This racialized android, according to Ayhan Aytes, embodies the shift of cognitive work from “the privileged labor of the Enlightened subject to unqualified crowds of the neoliberal cognitive capitalism” (Aytes 2013: 88). Crowdsourcing works here as a form of capitalist exploitation of the “collective mind”: MTurk is designed to “divide cognitive tasks into discrete pieces so that the completion of tasks is not dependent on the cooperation of the workers themselves” (Aytes 2013: 94). Strategies of collective resistance have nevertheless emerged; Turkopticon has served for many years as a platform for Turkers to share experiences and avoid unprofitable HITs.

Sensationalist claims about automation need to be carefully questioned, especially as the scenario they describe, in Taylor’s words, “has not come close to being true. If the automated day of judgment were actually nigh, they wouldn’t need to invent all these apps to fake it” (Taylor 2018).

***

References

Aytes, Ayhan, 2013, “Return of the Crowds: Mechanical Turk and Neoliberal States of Exception”, in Scholz, Trebor (ed.), Digital Labor: The Internet as Playground and Factory, Routledge, New York.

Ross, Joel, et al., 2010, “Who are the Crowdworkers? Shifting Demographics in Mechanical Turk”, CHI ’10 Extended Abstracts on Human Factors in Computing Systems, ACM.

Taylor, Astra, 2018, “The Automation Charade”, Logic Magazine (available online at: https://logicmag.io/05-the-automation-charade/).