In 2016, an Israeli startup launched Faception, facial recognition software allegedly able to identify personality traits from facial images. Facial recognition is not new: the use of biometrics for security purposes has increased rapidly since 9/11, and automated surveillance is now commonly employed in immigration control and predictive policing. However, Faception fits into a specific theoretical trend in recognition technology, which Luke Stark has called the “behavioral turn”: the integration of psychology and computation in the attempt to quantify human subjectivity (Stark 2018).

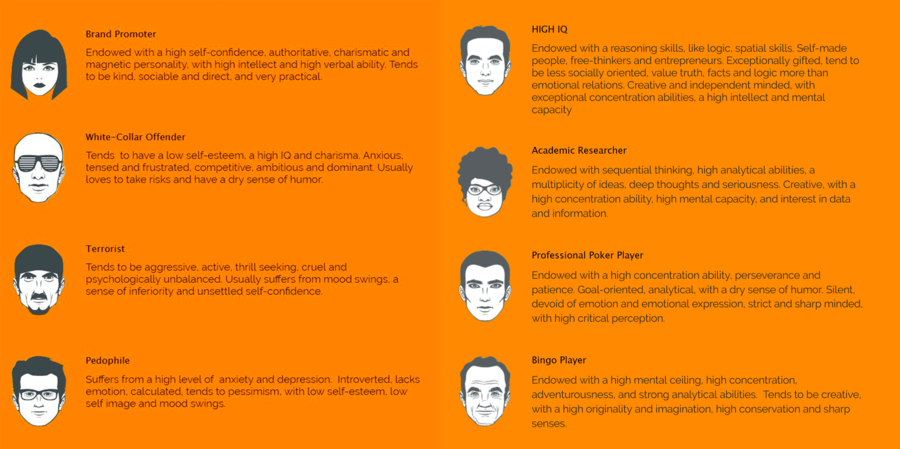

Indeed, the Israeli company claims to be able to successfully identify personality “types” such as “an Extrovert, a person with High IQ, Professional Poker Player or a Terrorist”. According to their website, an academic researcher, for instance, is “endowed with sequential thinking, high analytical abilities, a multiplicity of ideas, deep thoughts and seriousness. Creative, with a high concentration ability, high mental capacity, and interest in data and information”.

Leaving aside the problematic taxonomy (how is being an academic researcher a personality type at all?), a section of Faception’s website unveils the “theory behind the technology”.

Citing a study conducted at the University of Edinburgh on the role of genetics in personality development, together with unspecified research on the portions of DNA that influence the arrangement of facial features, the company concludes syllogistically and without further evidence that “the face can be used to predict a person’s personality and behavior”.

The idea that it is possible to reveal behavioral traits from facial features – the practice of “physiognomy” – became popular in the nineteenth century thanks to the Italian anthropologist Cesare Lombroso. He fueled the idea that phrenological diagnosis (the measurement of the skull) would make the identification of criminals possible. Lombroso believed criminality to be hereditary, and therefore visible in facial features, which would supposedly resemble those of savages or apes.

Despite having been discredited as a scientific theory for its racist and classist assumptions about human identity, physiognomy is not completely out of the picture today.

In a long interview with The Guardian, Stanford Professor Michal Kosinski – who in 2017 claimed that face recognition technology could distinguish sexual orientation more accurately than humans – declared that he could see patterns in people’s Facebook profile pictures. “It suddenly struck me, […] introverts and extroverts have completely different faces. I was like, ‘Wow, maybe there’s something there.’”

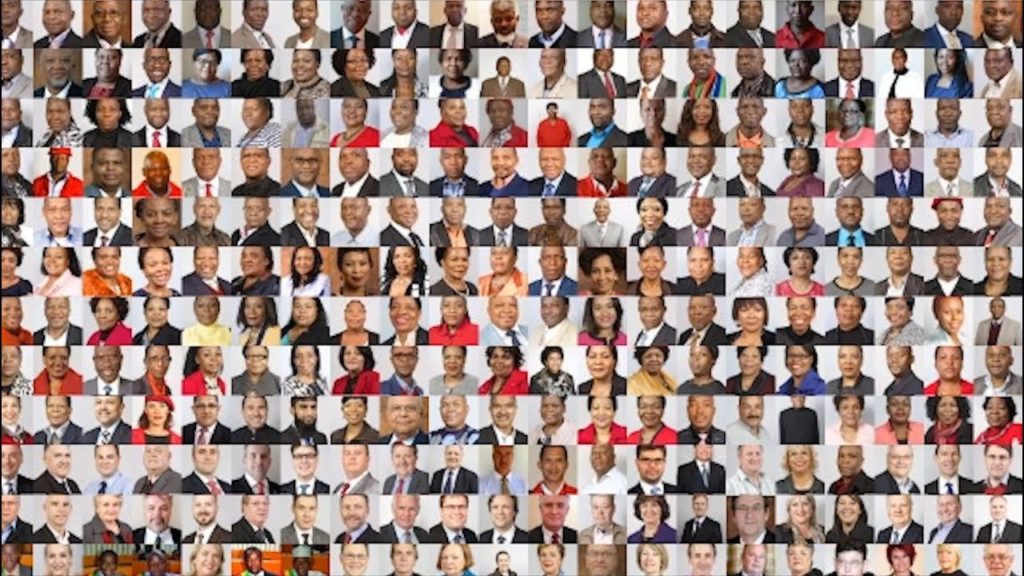

The use and development of such technologies raise issues of identity, policy and discrimination, especially when they are employed for surveillance purposes. Recent studies in computer science have shown that the design of facial recognition technology is still highly biased. Joy Buolamwini tested three commercial gender classification systems (from IBM, Microsoft and Face++), showing that all of them misclassify darker-skinned women far more often than any other group, with error rates of up to 34.7%, while their error rate for lighter-skinned males is close to 0% (at most 0.8%). This is attributed to the lack of diversity in the datasets (which at the moment include mostly images of white men) on which AI developers train their algorithms. Once encoded, such misclassification could propagate throughout the infrastructure (Buolamwini and Gebru 2018).

Finally, Faception’s website lists the advantages of its software: objectivity, accuracy, real-time evaluation and, ultimately, “no prior knowledge needed”. This technology doesn’t require any associated data or context to determine, with Lombrosian certainty, the essence and fate of its target, instantly assessing whether it is a pedophile or a bingo player.

***

References

Buolamwini, Joy, and Timnit Gebru, 2018, “Gender shades: Intersectional accuracy disparities in commercial gender classification”, Conference on Fairness, Accountability and Transparency, 77-91.

Stark, Luke, 2018, “Algorithmic psychometrics and the scalable subject”, Social Studies of Science, 48(2), 204-231.