A previous blog entry argued for including counter-narratives of the Internet in public discourse on digital technologies. Here I offer a very short account of alternative practices and theoretical frameworks stemming from feminist and queer politics.

In 1997, the American Internet provider MCI (later acquired by the telecommunications corporation Verizon) aired a dazzling TV advert that described the web in these terms: “People here communicate mind to mind. There is no race. There are no genders. There is no age. There are no infirmities. There are only minds. Utopia? No, the Internet”.

The commercial reflects the enthusiasm that people in the 1990s had for “cyberspace”, a “virgin territory which could be shaped and developed according to a different set of values than those which predominated in the physical spaces of our world” (Evans 2013: 82).

Unfortunately, as we have seen, the commercialization of ICTs soon crushed the cyber-dream.

However, hidden within the structured rigidity of the Internet lies an interstitial moment of freedom: the glitch, whether the baffling pixel fragmentation of an image, the interference of white noise, or the alteration of an audio file. Digital artists define the glitch as “a happy accident. […] a good place to be to find pleasure in things that are normally upsetting” (PBS 2012).

To address the revolutionary role of glitching, the writer and artist Legacy Russell coined the term “Glitch Feminism”, a feminism that “embraces the causality of ‘error’”. Just as queerness opposes bodily normativity, the glitch fragments representation: “Glitch Feminism is not gender-specific—it is for all bodies that exist somewhere before arrival upon a final concretized identity that can be easily digested, produced, packaged, and categorized by a voyeuristic mainstream public” (Russell 2012).

New digital strategies, such as the glitch, may be embraced to challenge the inequalities encoded in ICT infrastructure. Zach Blas’s artistic project “Queer Technologies” provocatively addresses “the heteronormative, capitalist, militarized underpinnings of technological architectures, design, and functionality. Queer Technologies includes transCoder, a queer programming anti-language” (Blas).

Since the early days of the web, feminist scholars and activists have explored the potential of digital practices, long asking themselves: could technology be used to dismantle inequality?

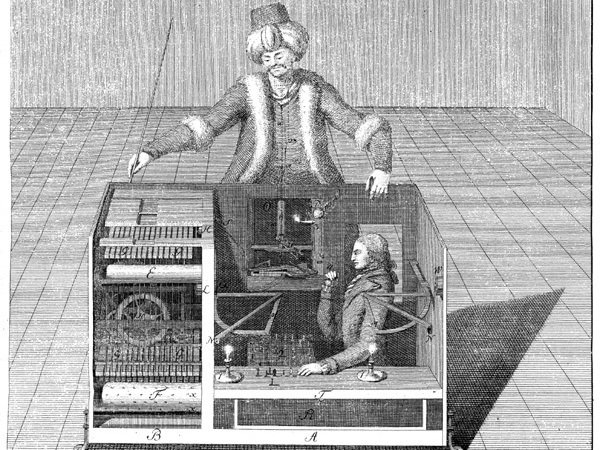

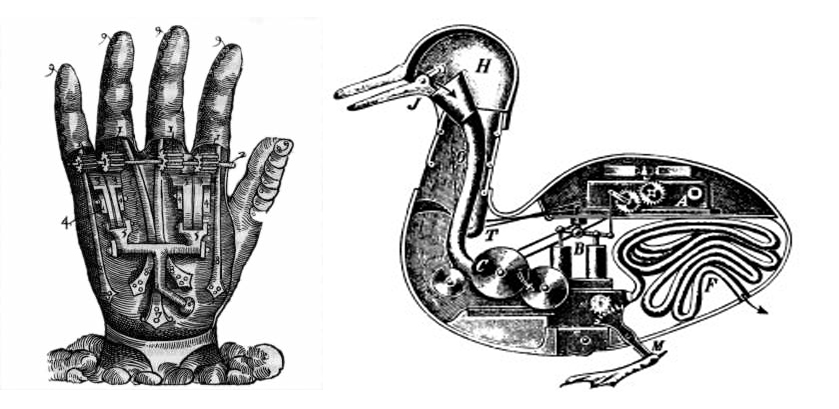

Following Donna Haraway’s “A Cyborg Manifesto”, which envisioned a liberating, chimeric union between humans, animals and machines (Haraway 1991), “cyberfeminists” were fierce advocates of ICTs as a means to eliminate gender divisions. However, soon after the First Cyberfeminist International (held in Germany in 1997), the artist Faith Wilding argued that “contrary to the dream of many Net utopians, the Net does not automatically obliterate hierarchies through free exchanges of information across boundaries. Also, the Net is not a utopia of nongender. It is already socially inscribed with regard to bodies, sex, age, economics, social class, and race” (Wilding 1998: 9).

Since the late 2000s, more critical versions of cyberfeminism have emerged. “Black Cyberfeminism”, for instance, recognizes how “categorical inequalities” (discrimination on the grounds of gender, ethnicity, religion, class, disability, etc.) are reproduced and even reinforced through digital infrastructure (McMillan Cottom 2016).

How should we reconfigure techno-scientific practices in the push for social justice? The Xenofeminist Manifesto, developed by the Laboria Cuboniks collective, addresses this question: “Technoscientific innovation must be linked to a collective theoretical and political thinking in which women, queers, and the gender non-conforming play an unparalleled role” (Laboria Cuboniks).

***

References

Blas, Zach, “Queer Technologies 2007-2012”, available online at http://www.zachblas.info/works/queer-technologies/.

Evans, Karen, 2013, “Rethinking Community in the Digital Age?”, in Orton-Johnson, Kate, and Prior, Nick (eds), Digital Sociology: Critical Perspectives, Springer.

Haraway, Donna, 1991, “A Cyborg Manifesto: Science, Technology, and Socialist-Feminism in the Late Twentieth Century”, in Simians, Cyborgs and Women: The Reinvention of Nature, New York, Routledge, 149-181.

Laboria Cuboniks, “Xenofeminism: A Politics for Alienation”, available online at http://www.laboriacuboniks.net/index.html#interrupt.

McMillan Cottom, Tressie, 2016, “Black Cyberfeminism: Ways Forward for Intersectionality”, in Daniels, Jessie, Gregory, Karen, and McMillan Cottom, Tressie (eds), Digital Sociologies, Bristol, Policy Press.

PBS Digital, 2012, “The Art of Glitch”, 9 August, available online at https://www.youtube.com/watch?v=gr0yiOyvas4.

Russell, Legacy, 2012, “Digital Dualism and the Glitch Feminism Manifesto”, Cyborgology, available online at https://thesocietypages.org/cyborgology/2012/12/10/digital-dualism-and-the-glitch-feminism-manifesto/.

Wilding, Faith, 1998, “Where is Feminism in Cyberfeminism?”, n.paradoxa, Vol. 2, 6-13.