a. An understanding of the constraints and benefits of different technologies.

Building a building block for Blackboard Learn

Description

Edinburgh College of Art (ECA) frequently assesses against multiple learning outcomes (LOs). This means that for each assignment, a student will receive multiple grades and multiple sections of feedback. In the past, ECA used a custom VLE (the Portal) to support this kind of assessment. Tutors would select a grade and write feedback for each learning outcome being assessed (often three). Students then had to write a self-evaluation and ‘submit’ it before they could access their tutor’s feedback. As we migrated from the Portal to the centrally supported VLE (Blackboard Learn), I set out to identify how best we could use the tools of the new system to support a workflow that was familiar to, and favoured by, teaching staff and students at ECA.

The challenge was as follows:

- Allow markers to leave multiple grades and feedback for each assignment

- Allow support staff to download these grades for upload to the central assessment and progression software with minimal manual intervention (and therefore minimal risk of mistakes)

- Allow students to receive their multiple grades and feedback with as few clicks as possible, and represented in a clear and consistent way.

I identified the rubric tool within Blackboard as the most suitable tool for replicating this workflow. While this addressed the first requirement, it did not address the second and third:

- as it stood, administrators could only download the aggregated grade from the Blackboard Learn Grade Centre.

- students would have to make several clicks (many of them unintuitive) to access their feedback. Because of the complexity of this task, and the many points at which a student could give up halfway through, the communication of grades and feedback (essential to the learning process) would be severely compromised.

I proposed that ECA use some of its Information Services (IS) Apps development budget to investigate ways of addressing these issues. I met with a project manager from IS and we blocked out the best part of a day to explore the current challenges, the risks of continuing with the current system, and the opportunities for developing something that could improve the experience.

I then took these initial findings to the IS development team at a subsequent meeting. After ruling out some options, we proposed building a new building block for Blackboard that would provide a different view into the Grade Centre. This would have to be accessed via a new tool, rather than through a different stylesheet for the existing tool (My Grades). This would introduce a potential source of confusion, but I weighed this against the confusion caused by the existing workflow and argued that the benefits would outweigh the drawbacks.

The project then moved into the development phase. Multiple modes of communication were employed throughout the project build. Face-to-face meetings were deemed desirable to keep everyone on track (it’s harder to explain lack of progress face-to-face). I was fortunate to be working with a very able project manager who was positive, proactive and enthusiastic.

I was tasked with testing the Beta tool. The new tool went live for ECA staff and students in September 2016. After a year of successful deployment, the tool was made available to the rest of the University in September 2017.

Evidence

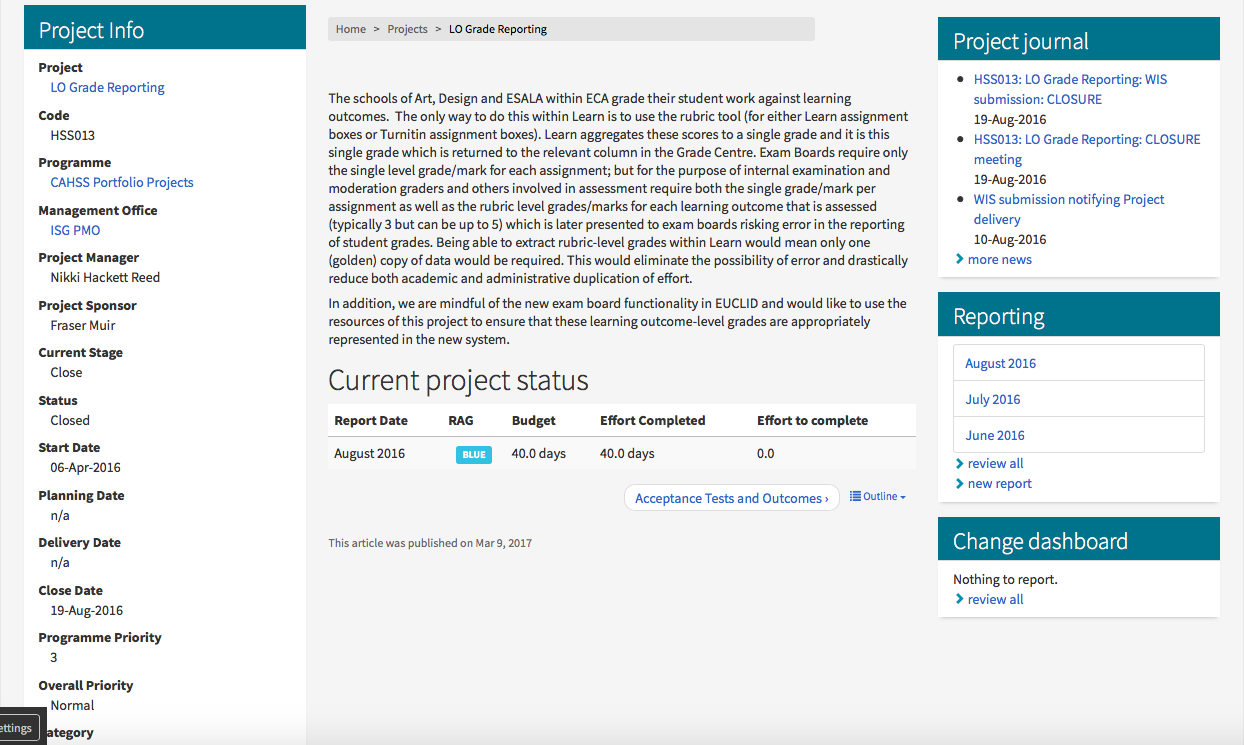

Project page (password protected): https://www.projects.ed.ac.uk/project/hss013.

NB: If accessing from outside the University of Edinburgh, the following screen grab shows an image of the project page.

Student User Experience (pre-building block) flowchart

Reflection

The project was a positive experience for me professionally as:

- It introduced me to how the University manages projects

- I gained experience as a project lead for an IT-related project (I later completed both ITIL and AGILE certificates and I was able to reflect on this experience during those courses)

- I got to work with IS Apps colleagues, in addition to the service support colleagues I was more familiar with

- I could take ownership of a problem and was given the resources to find and implement a solution.

More widely, the project was successful because:

- It came in on time and on budget

- There have been no technical issues or downtime

- I was able to condense the instructions for staff on how to leave grades and feedback onto a single page. I also developed a short video (under 2 minutes) showing staff how to do the same. This was included on the appropriate page in each Blackboard Learn course, so it was available at the point of need.

- I trained support staff in how to download the CSV output and prepare it for upload to the central assessment and progression tool (APT)

- We know anecdotally that students are using it to access their LO grades and feedback.

- Unfortunately, we haven’t yet been able to extract any meaningful data from Blackboard Learn about use of the building block. This is currently a request sitting with IS. With hindsight, we should have included this in the project brief (tracking the number of views and tracing these to individuals – similar to how Turnitin displays a simple icon when a student has clicked on the button to view their feedback).
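The CSV preparation step that support staff were trained in is the kind of task that could be partly scripted. The following is a minimal sketch in JavaScript (Node.js), assuming a hypothetical export with one row per student per learning outcome and columns named `studentId`, `lo` and `grade`; the building block's real output and APT's import format may well differ. It reshapes the rows into one line per student with one column per learning outcome, and leaves any missing grade blank so it can be checked before upload.

```javascript
// Minimal sketch: reshape a "long" CSV export (one row per student per
// learning outcome) into a "wide" CSV (one row per student, one column per LO).
// ASSUMPTION: the column names studentId, lo and grade are hypothetical, and
// the CSV is simple (no quoted fields or embedded commas).

function parseSimpleCsv(text) {
  const lines = text.trim().split(/\r?\n/);
  const headers = lines[0].split(",").map((h) => h.trim());
  return lines.slice(1).map((line) => {
    const cells = line.split(",").map((c) => c.trim());
    const row = {};
    headers.forEach((h, i) => {
      row[h] = cells[i] ?? "";
    });
    return row;
  });
}

function pivotLearningOutcomes(rows) {
  // Collect every learning outcome that appears, in a stable sorted order.
  const loNames = [...new Set(rows.map((r) => r.lo))].sort();

  // studentId -> Map(lo -> grade)
  const byStudent = new Map();
  for (const r of rows) {
    if (!byStudent.has(r.studentId)) byStudent.set(r.studentId, new Map());
    const grades = byStudent.get(r.studentId);
    // Refuse to silently overwrite: a duplicate usually signals an export problem.
    if (grades.has(r.lo)) {
      throw new Error(`Duplicate grade for ${r.studentId} / ${r.lo}`);
    }
    grades.set(r.lo, r.grade);
  }

  const header = ["studentId", ...loNames].join(",");
  const body = [...byStudent.keys()].sort().map((id) => {
    const grades = byStudent.get(id);
    // A missing LO grade becomes an empty cell so gaps are visible before upload.
    return [id, ...loNames.map((lo) => grades.get(lo) ?? "")].join(",");
  });
  return [header, ...body].join("\n");
}

// Usage with made-up data:
const exported = `studentId,lo,grade
s0000001,LO1,B
s0000001,LO2,A
s0000002,LO1,C`;

console.log(pivotLearningOutcomes(parseSimpleCsv(exported)));
```

Throwing on duplicates, rather than keeping the last value, reflects the aim of minimising manual intervention without hiding mistakes.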

I would also like to explore the possibility of uploading the code to GitHub or similar so we can share it with the wider Blackboard community.

And of course, the student experience is still not optimal. Because we had to develop a separate building block, rather than adapt an existing one, we are left with two possible tool links:

- My Grades – the student’s view of all aggregated grades for all Learn assignments within the course

- My Learning Outcome feedback – the student’s view of any rubric / learning outcome level grades and feedback within the course

The advice I gave course secretaries (face-to-face and on demand) was as follows:

- If your course doesn’t contain an assignment using a rubric, hide the ‘My Learning Outcome feedback’ tool link.

- If your course only contains assignments using a rubric, hide the ‘My Grades’ tool link.

This was to minimise possible confusion for students. However, there are still courses where both tool links are visible in the menu but only one is required.

This is part of a wider issue: a lack of ownership of Learn courses. Who is responsible for them? Is it the course organiser (academic) or the course secretary (support)? It is also, I think, a result of some colleagues not having a mental model of the tools. Unfortunately, I can’t influence the former. I am hoping to address the latter by creating further documentation to supplement face-to-face training, so that colleagues can form a clear picture of what each tool is for.

Because my memories of working on this project are happy ones, I am keen to work on something similar again. However, as building blocks can be troublesome to retest with each update of the VLE, we are likely to move away from this model and towards building integrations between tools via LTI or APIs. The challenge remains: how to build an integrated workflow for our students when equipped with an off-the-shelf VLE and a series of bolt-on tools.

b. Technical knowledge and ability in the use of learning technology

Description

I have used a wide variety of TEL-related tools over the last 12 years. Working for an organisation such as the University of Edinburgh (UoE) affords me the opportunity to work with many industry-standard tools and services, many of which would be outwith the budgets of smaller organisations. I shall focus below on those I have worked with since moving to the UoE.

Administrator for Blackboard Learn (VLE)

In both my previous and current roles at the UoE, I have been granted the Administrator role for all Blackboard Learn course instances that sit within my School in the Institutional Hierarchy.

This means I can self-enrol as an instructor on any course. This is necessary because I frequently receive calls from both academic and professional services colleagues with questions about how to add content, hide grades, grant instructor access, etc. When I receive a question that I believe to be more widely applicable, I write up instructions on my Service blog. Then, the next time someone asks the same question, I can point them to the documentation on the blog.

I am also responsible for designing, developing and iterating School-wide Learn templates (described further in section c).

Administrator for Panopto (now retired)

During my time at ECA, I was granted the Administrator role for the lecture recording tool Panopto. This allowed me to train colleagues to record their lectures, and gave me editing and publishing privileges for recordings within my School.

Panopto is a video platform that provides integrated video recording, live streaming, video management, and inside-video search. Possible uses include:

- lecture capture

- flipped classroom

- student presentations

- video introductions

- exam overview

- field recordings

- events and marketing.

I was particularly impressed by Panopto’s automatic transcription function, which made it possible to search for spoken words within a video. I tested this feature across a few videos and found the accuracy, particularly with native English speakers, to be excellent. We know that students primarily use lecture recordings for revision (usage peaks towards the end of semester), so the ability to search within a video is critical.

Administrator for Media Hopper Replay (Echo360)

When the UoE made a commitment to extend lecture recording facilities to all three Colleges, a procurement exercise began. This resulted in Echo360 winning the contract.

Echo360’s hardware solution differed from the previous Panopto software offering. Recording boxes would be installed in teaching rooms across campus, connected to the switcher outputs in the teaching desks. This meant that whatever was being projected to the screen in the room (eg slides from the teaching PC or presenter laptop, the visualiser etc) would be captured, along with audio from the room’s lapel mic. If a video camera was also connected, this too could be captured as an additional stream. Recordings could be scheduled centrally, and a button on the teaching desk would have a light showing recording status, following broadcast rather than traffic-light convention. All of which meant that lecturers only needed to tell local support in advance if they wanted their classes recorded; on the day, all they had to do was turn up to the venue and switch their mic on. The new service would be able to scale well.

Unfortunately, what we lost in this move was Panopto’s automatic transcription feature. What we gained in the ability to scale recording efficiently, the end user lost in functionality.

Assessment tools

When I moved to the School of Informatics, one of the first tasks I set myself was to audit current practice regarding content delivery and assignment workflow. I discovered that more than 80% of courses received coursework via a command-line tool called ‘submit’. This allowed students to submit their work to the course organiser and ran some basic checks on the submission (ie whether the files were packaged as expected, or anything was missing). However, it required students to be familiar with the command line (not a problem for most, but increasingly a problem for students from other Schools taking Informatics courses as an option). It also didn’t integrate with any marking or feedback mechanism, and the codebase was old and increasingly unreliable. I therefore surveyed colleagues on their assessment submission requirements, and used the results to assess whether, and how, current centrally supported tools and services might meet this need.
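The "basic checks" that `submit` performed are easy to picture in code. The sketch below is a hypothetical JavaScript illustration of that kind of check, not the School's actual `submit` implementation (whose code is not described here); the function name and file names are made up.

```javascript
// Hypothetical illustration of a basic pre-submission check: compare the
// files being submitted with the files the course organiser expects.
// This is NOT the School's actual 'submit' implementation.
function checkSubmission(submittedFiles, requiredFiles) {
  const submitted = new Set(submittedFiles);
  const required = new Set(requiredFiles);
  // Expected files the student has not included.
  const missing = requiredFiles.filter((f) => !submitted.has(f));
  // Files included that were not expected (reported, but not fatal here).
  const unexpected = submittedFiles.filter((f) => !required.has(f));
  return { ok: missing.length === 0, missing, unexpected };
}

// Usage with made-up file names:
const result = checkSubmission(
  ["report.pdf", "main.py", "notes.txt"],
  ["report.pdf", "main.py", "README.md"]
);
console.log(result);
```

With these example inputs the check reports `README.md` as missing and `notes.txt` as unexpected, so `ok` is `false`. Treating unexpected files as a warning rather than an error is a design choice for the sketch; a real tool might be stricter.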

Resource Lists (Leganto)

I work with the service lead for the centrally provisioned Resource List service to encourage colleagues to make use of this valuable service for students. Most Informatics courses recommend open access resources, and students rarely need to visit the on-campus library. It can therefore be a little harder to persuade colleagues of the benefits of using the service.

Evidence

Submit Requirements Survey Responses with comments

Informatics Learning Technology Service blog site.

Reflection

It’s easy to forget, or take for granted, how much I’ve learned since starting work at the University of Edinburgh. Some skills have also faded a little. For example, when I worked at ICAS, a large part of my role was creating multimedia assets using eLearning software such as Articulate Storyline. Since joining the UoE, I have undertaken very little asset creation. Instead, I see my role more as onboarding colleagues with various learning technology tools and services. This onboarding can take the form of training sessions (both face-to-face and online), drop-in clinics, and more informal chats at workshops and events. To do this with any confidence, I have to be completely familiar with these tools and services, and such confidence can only come from getting my hands dirty with the tools themselves. To this end, I always ensure I have playground / sandbox instances of Learn, Echo360, Turnitin etc where I can quickly test a particular workflow before advising colleagues.

Writing and maintaining simple how-to guides is essential to this process. I am enjoying my current workflow of responding to questions from colleagues by writing up my response as a blog post. I am therefore building a nice repository of guides I can share when a similar question is asked in the future.

In addition to supporting colleagues with the learning technology available now, a key part of my role is horizon scanning to anticipate where future trends may lead, and working out how to meet School requirements that are not currently met by centrally provisioned tools. I am lucky in that, being based in the School of Informatics, I get to work with highly experienced computer scientists and computer science students. I am looking forward to acting as co-supervisor on a couple of MSc projects this coming year. One is looking at developing a more integrated assessment and feedback workflow that will meet the needs of our colleagues and students. The other is looking at using voice commands in a VLE such as Blackboard Learn.

Regarding horizon scanning, one of the biggest learning technology challenges I see facing the University in the coming years relates to the Equality Act 2010 and its implications for subtitling video assets. The University is currently running a subtitling pilot; however, it’s hard to see how this could scale to the size of our lecture recording output. Automatic transcription of speech is essential to make subtitling of long, unscripted videos feasible. I am therefore encouraged to see Automatic Speech Recognition included in the Echo360 roadmap.

c. Supporting the deployment of learning technologies

Migration to Learn as principal platform for content delivery

Description

When I moved to the School of Informatics in February 2018, the post of Learning Technologist was a new one. Informatics is something of an outlier in the University, with extensive in-house IT support. The School was looking to leverage the affordances of some of the centrally provisioned services, and so the first task I set myself on taking up the post was to audit current practice regarding content delivery and assessment workflows. As can be seen from the next steps suggested in the audit paper, I set myself the following goals:

- Contribute to the University-wide consultation on VLE standards, and develop an Informatics template

When considering course sites we must ask ourselves: who is the ‘owner’? Is it the course organiser? The course secretary? The tutor(s) teaching on the course? Is there a hierarchy of ownership? Or do different actors have ownership over different areas? It must be clear to all actors which areas they are responsible for. This should feed into the University-wide consultation on developing a standard Learn template.

- Build better integration with third-party tools

Continue to talk to, and work with, local teaching and support staff and colleagues in IS to develop better integration with third-party tools, and with locally written programs where these offer better value. These integrations may be LTI plugins, building blocks, or REST APIs.

- Migrate content from the various course sites currently used to the one centrally supported VLE, Learn

There is currently little to no drive from course organisers themselves to use Learn as the primary platform for delivering course content. If we want to persuade them, we need to clearly outline the benefits of doing so and ensure there is no disincentive. The act of migrating the content therefore cannot result in more work for the course organiser. Options include:

- It would be simple enough (if inefficient) for me to copy and paste content from the current course page into a Learn course site. However, this raises the question of *when* to perform the action. Content for a course can grow and change over the semester, and if we wait until the semester ends, we may not have enough time to copy it over.

- For users who keep content on their local drive, Blackboard Drive can offer some efficiencies. However, it is worth noting that Drive does not support Linux.

- Alternative options, such as data scraping and wrangling, may also be explored.

I summarised the rationale for an Informatics Learn template in a paper for our Teaching Committee. This was approved, and I spent the summer migrating content from existing web pages to Learn (aka a *lot* of copying and pasting).

I was also able to contribute to the University-wide consultation on VLE standards in early 2019, by sharing our experience of the two iterations of our template in 2018/19 and by conducting a usability testing exercise in collaboration with the UX Design team.

Regarding integrating content from local systems, I have embedded a locally developed coursework planner into the Informatics Learn template using <iframe> wrappers and some JavaScript. Using the same approach, I have integrated course staffing and course summary information. This prevents duplication of information across systems.
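As a rough illustration of the <iframe> approach described above, the sketch below builds wrapper markup for an embedded page and, optionally, resizes the frame when the embedded page reports its height via `postMessage`. It is a hypothetical sketch, not the code actually used in the Informatics template: the URL, the function names and the `{ type: "resize", height }` message format are all assumptions, and the embedded page would need to send that message for resizing to work.

```javascript
// Hypothetical sketch of an <iframe> wrapper for embedding a page from a
// local system into a Learn content item. URL, function names and the
// postMessage format are assumptions, not the code used in the template.

// Escape characters that are special inside a double-quoted HTML attribute.
function escapeAttr(value) {
  return String(value)
    .replace(/&/g, "&amp;")
    .replace(/"/g, "&quot;")
    .replace(/</g, "&lt;")
    .replace(/>/g, "&gt;");
}

// Build wrapper markup that could be pasted into a content item's HTML.
function buildIframeHtml(src, title, initialHeightPx = 600) {
  const height = Number.isFinite(initialHeightPx) ? initialHeightPx : 600;
  return (
    `<iframe src="${escapeAttr(src)}" title="${escapeAttr(title)}" ` +
    `style="width:100%;border:0;height:${height}px" loading="lazy"></iframe>`
  );
}

// Validate a message event and return the requested height in px, or null.
// ASSUMED protocol: the embedded page posts { type: "resize", height: <number> }.
function resizeHeightFromMessage(event, allowedOrigin) {
  if (event.origin !== allowedOrigin) return null; // ignore other origins
  const data = event.data;
  if (!data || data.type !== "resize") return null;
  const h = Number(data.height);
  return Number.isFinite(h) && h > 0 ? Math.ceil(h) : null;
}

// Browser-only wiring (not run under Node): resize the frame when the
// embedded page reports its content height.
function attachAutoResize(iframe, allowedOrigin) {
  window.addEventListener("message", (event) => {
    if (event.source !== iframe.contentWindow) return;
    const h = resizeHeightFromMessage(event, allowedOrigin);
    if (h !== null) iframe.style.height = `${h}px`;
  });
}

// Usage with a placeholder URL:
const html = buildIframeHtml(
  "https://planner.example.org/course?id=INF1&view=all",
  "Coursework planner"
);
console.log(html);
```

Checking the message origin before acting on it keeps other pages from resizing the frame. Whether scripts are accepted in a given Learn content item depends on local configuration, so the resize step is optional; the embedded service must also allow itself to be framed (for example via the `frame-ancestors` directive of its Content-Security-Policy header).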

Evidence

Informatics Learn template rationale

Improving student experiences in Learn: usability testing showcase

<iframe> wrapper and JavaScript

Course staffing embedded output

Course summary embedded output

Reflection

Looking back on the audit, one year later, provokes mixed feelings. The UX testing I conducted was encouraging. The student testers were able to tackle most of the tasks we set them with relative ease. This suggests that the move to Learn, and the rationalisation it brought, has been successful in addressing inconsistency in the presentation of course material.

As expected, there was some resistance to the move. Course lecturers were used to having the freedom to create their own websites and present their material without restriction or guidance. Some cling to this as closely as they cling to the concept of academic freedom. I addressed this concern by reassuring colleagues that nothing prevented them from directing students back out to their own sites, and by simply stating the benefits of Learn in leveraging integration with other services such as lecture recording – which we know is highly valued by our students.

In the end, only a minority of course organisers chose to manage two websites (the Learn site plus another). Most have, somewhat reluctantly, used Learn to present their course content. I don’t believe this would have happened had I not already copied most of the existing content over. I learnt to trust my judgement in proposing the move, and to build allies amongst academic colleagues to ensure it wasn’t seen as a project being forced on academics by management.

I am keen to continue with this approach, and have convened a working group consisting of myself, my line manager, and two academic colleagues – one acting in the role of champion, the other as a critical friend. I am also keen to explore the affordances of the Learn to the Cloud project. I am delighted that the School now has a rep in the Learning, Teaching, Web Division, within Information Services, who can help advise in these matters.

There is no doubt that our students are requesting consistency, to ensure they can find information about their course quickly and easily. Consistency across Schools should also be addressed, as we encourage our students to take courses outwith their ‘home’ School. However, this is not to deny the limitations of the Higher Education VLE. Whether it be Learn, Moodle, Canvas or any other, a VLE should be customisable, and should support all stakeholders – academic colleagues, professional services colleagues, and students – by allowing them to carry out their tasks with minimal duplication of effort. To this end, we need to start seeing our VLEs as just one part of a wider learning ecosystem. It should be easier than it currently is to build better integration with third-party tools. And we need to have honest and open debate about the role of VLEs in data analytics and surveillance capitalism.