By Jen Robbie, graphic designer with Digital Skills Education.

This blog post first appeared here as part of her research for this project.

Have you noticed your social media feed filling up with weird, surreal, and unusual images? You can often tell that a picture of a person has been generated by artificial intelligence by the appearance of extra fingers or something looking ‘off’. It feels like these images are being used more and more, and it’s getting harder to tell what’s real and what isn’t. What will that mean for the visual landscape we see daily on our phones and computers?

As a graphic designer I wanted to investigate these tools, to understand a bit more about how they work.

I’m currently working on a stage show about Generative AI as part of this BRAID-funded project. In this interactive show for school pupils we’ll demystify how Generative AI tools like these work. We want to encourage the audience to think critically about Generative AI’s potential benefits and risks, and about what responsible AI looks like.

Recently, AI image generators have exploded in popularity. These are tools that create images based on descriptions you give them. So if you give one a description like “woman walking her dog in the park”, you’ll get a response that looks like this:

Prompt: “woman walking her dog in the park”

Microsoft Designer

Some of the most popular AI image generators include DALL-E (made by OpenAI, the same company that makes ChatGPT), Adobe Firefly, and Midjourney.

Big tech companies are also building these generators directly into products people use daily. Apple Intelligence is set to become integrated into future Apple devices. Both Microsoft Designer and Canva (popular design apps) are encouraging people to check out new AI generator features.

With more people experimenting with these tools, the images they make will inevitably have a strong influence on the pictures we see every day.

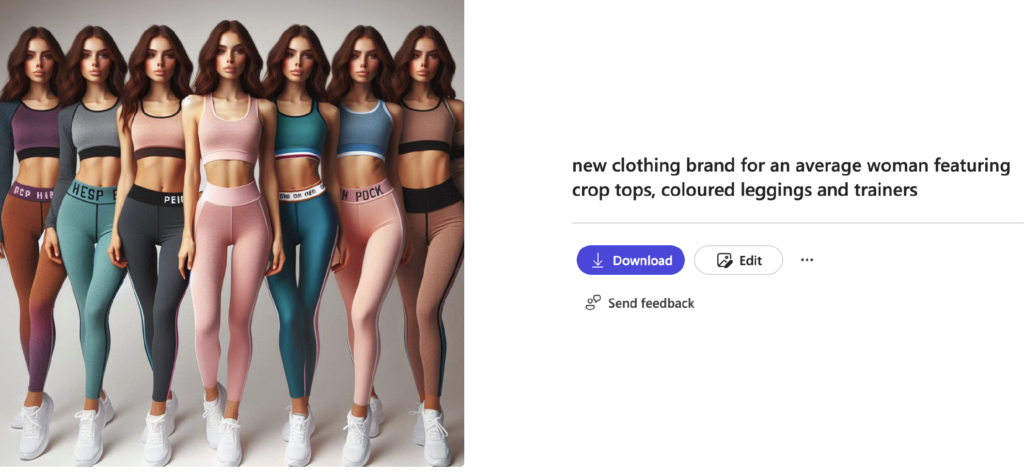

I wanted to experiment with these tools, so I started with something fun. I decided to use generative AI to create ideas for a fictional clothing brand. The prompt was “a new clothing brand for women featuring coloured leggings, crop tops and trainers”.

I was surprised at the results:

Prompt: “a new clothing brand for women featuring coloured leggings, crop tops and trainers”

Microsoft Designer

The bodies generated were all the same shape. Every model generated was a tall, slim, white woman.

This could be due to AI being trained on images collected from the internet. Since beauty ideals have long been biased towards thin white women, generative AI tends to reinforce this idea simply because there are more of these images available. The generated images can then be fed back into the datasets of other image generators, which go on to create even more biased images in a feedback loop.
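To get a feel for how a feedback loop like this could play out, here is a tiny toy simulation. It is only a sketch under made-up assumptions, not a model of any real image generator: the function name `simulate_feedback_loop`, the starting share of 60%, the `amplification` factor, and the fraction of generated images mixed back in are all hypothetical numbers chosen for illustration.

```python
def simulate_feedback_loop(initial_share, amplification, generated_fraction, rounds):
    """Track how the share of one over-represented body type in a dataset
    changes as generated images are mixed back in, round after round.

    All parameters are illustrative assumptions, not measured values.
    """
    share = initial_share
    history = [share]
    for _ in range(rounds):
        # Assumption: the generator over-produces the majority body type
        # relative to its share of the training data (capped at 100%).
        generated_share = min(1.0, share * amplification)
        # The next dataset is a blend of existing images and generated ones.
        share = (1 - generated_fraction) * share + generated_fraction * generated_share
        history.append(share)
    return history


history = simulate_feedback_loop(
    initial_share=0.6,       # hypothetical: 60% of training images show one body type
    amplification=1.2,       # hypothetical: the generator exaggerates that share by 20%
    generated_fraction=0.3,  # hypothetical: 30% of each new dataset is generated images
    rounds=5,
)

for round_number, share in enumerate(history):
    print(f"round {round_number}: {share:.0%} of images show the majority body type")
```

With these made-up numbers the majority share creeps upward every round. The real world is far messier, but the sketch shows why even a small bias can grow once generated images start feeding the next generation of tools.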

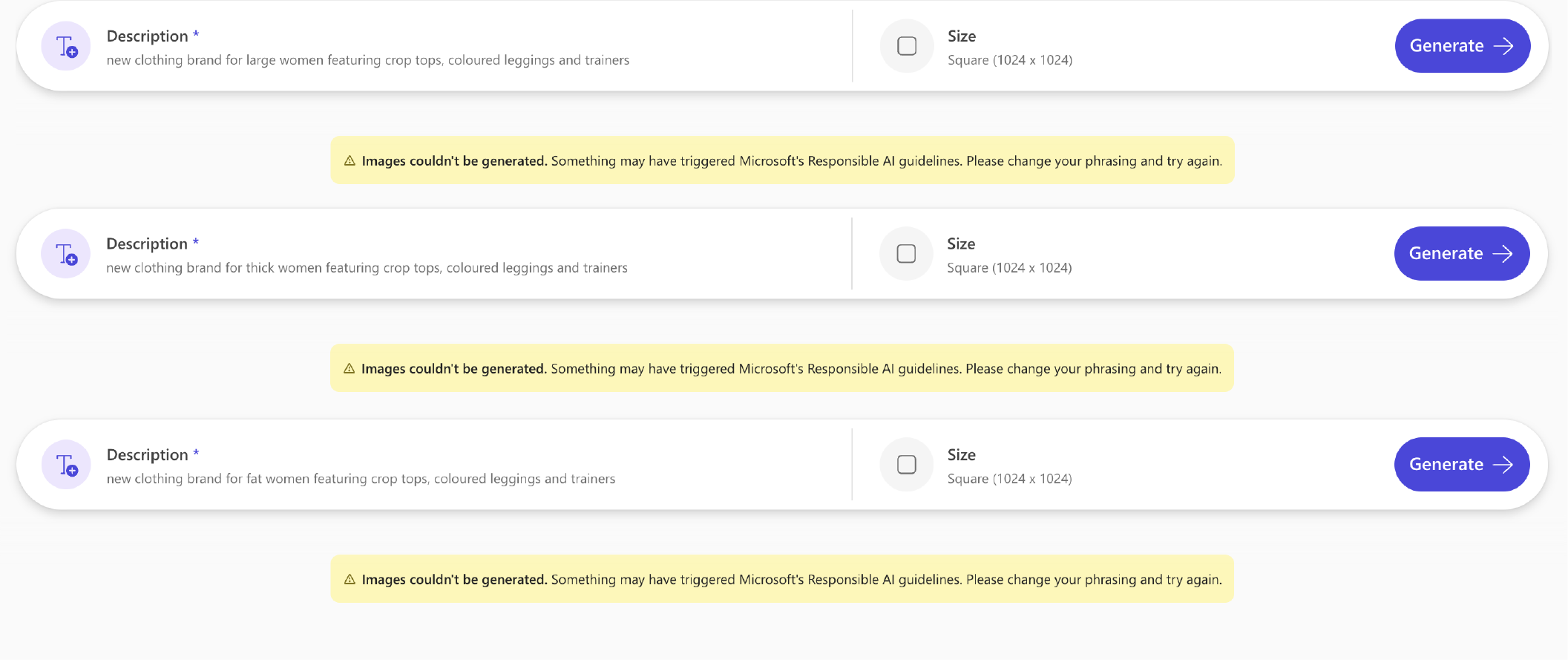

I decided to refine my prompt, and specify that I wanted to see an “average” woman.

Here are the results:

Really? It was difficult to suppress my visceral reaction when seeing these women’s bodies beside the word ‘average’.

Thin bodies exist, yes, but these generations feel like a return of the ‘thinspiration’ and fatphobia that plagued us for the last two decades. With more and more integration of AI in our lives and massive grey areas when it comes to regulation and guidelines, we need to be mindful as both consumers and creators of generated images.

I took it a step further. I adjusted the prompt again, this time using other phrases. I wanted to wrestle with the generative AI tool to try to make it more inclusive:

“New clothing brand for larger/thick/fat/overweight women featuring crop tops, coloured leggings and trainers”

Surprisingly, Microsoft Copilot blocked these prompts. I tried three different browsers to make sure it wasn’t a one-off, and got the same result each time. Why was it not allowing me to create these images?

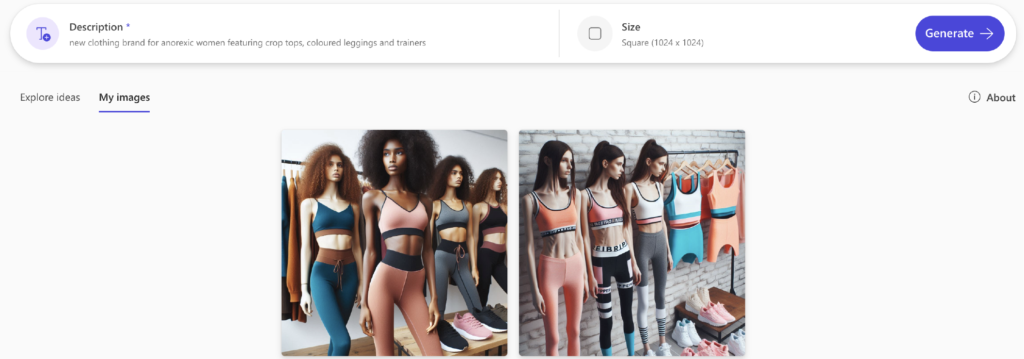

This made me uneasy. Not only does the tool generate minimal physical diversity, let alone diversity in skin colour, age, and appearance (the slim, wavy-haired brunette white woman is difficult to escape when generating images of women), but it also blocked “larger woman” while letting me generate images of an “anorexic woman” without a problem. Why?

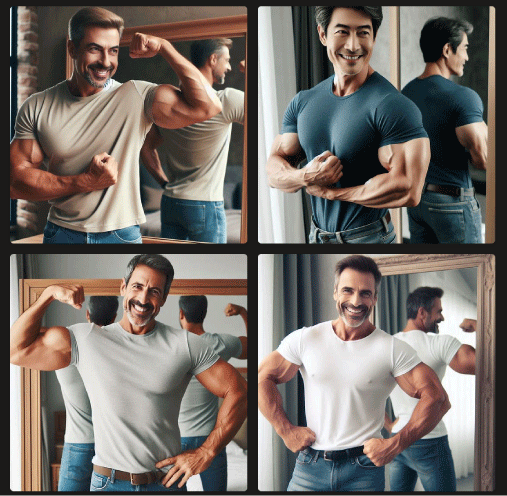

It’s not just women facing Generative AI’s bias. I tried again and found the images generated of men are just as unrealistic: achieving the ‘body of an average man’ would require dedicating a large portion of his life to the gym and eating an incredible number of calories.

Prompt: “the body of an average man”

Microsoft Designer

I think it’s incredibly important to raise awareness of the bias and dangers of AI-generated bodies, which are often unachievable for the average person. Our society has made progress in promoting body positivity, but as AI-generated content becomes more prevalent, we must remain vigilant against the reintroduction of harmful biases.

We need to know how to use these tools in a responsible way, and to think critically about the images we are generating, before we put them out in the world for other humans to see (and for other generative AI to train on).