Site re-development – Filtering Witchcraft

Hi, this is Josep again! We are currently in Week 10 of our internship, and time is starting to become a limiting factor. Since my last blog post in Week 4, a lot has happened, and in this post I am going to explain how the plans I had back then have turned out, what I have managed to accomplish, and what I still have left to do in the two remaining weeks.

In Week 5, I implemented the new filtering algorithm, and everything went rather smoothly, although I had to change the whole data structure. I think this was a significant improvement: things ran a bit faster, we only had to keep one copy of the array we use to plot, and the code was neater.
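To make the single-array idea a bit more concrete, here is a minimal TypeScript sketch of this kind of filtering. It is not the project's actual code: it assumes each record is a flat object (the `CaseRecord` shape and the `gender` field are hypothetical) and that the active filters are stored as a set of allowed values per field. The master array is never mutated; whenever the filters change, the plot would simply redraw from the result of `visibleRecords`.

```typescript
// Hypothetical record shape: the real field names in the project's data are not shown here.
interface CaseRecord {
  id: string;
  lat: number;
  lng: number;
  [field: string]: string | number;
}

// Active filters: for each field, the set of values the user has ticked.
// An empty set means "no restriction on this field".
type ActiveFilters = Record<string, Set<string>>;

// A record is shown only if it passes every active filter.
function matches(record: CaseRecord, filters: ActiveFilters): boolean {
  return Object.keys(filters).every((field) => {
    const allowed = filters[field];
    return allowed.size === 0 || allowed.has(String(record[field]));
  });
}

// The master array is read but never mutated; the result holds references
// to the same record objects rather than copies of them.
function visibleRecords(
  all: readonly CaseRecord[],
  filters: ActiveFilters,
): CaseRecord[] {
  return all.filter((record) => matches(record, filters));
}

// Example usage with made-up data: only record "a" passes the filter.
const records: CaseRecord[] = [
  { id: "a", lat: 55.95, lng: -3.19, gender: "female" },
  { id: "b", lat: 56.12, lng: -3.94, gender: "male" },
];
const shown = visibleRecords(records, { gender: new Set<string>(["female"]) });
```

Treating an empty set as "no restriction" means that adding a new filter field does not hide every point until the user ticks something.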

After that, I spent quite a few days implementing a way to share filter status data across pages, so that when the user changed pages they would have the same filters on as on the previous page. However, to do this without a backend, I had to hardcode the filters array into something called Vue Store (so that its state could be accessed from all pages).

Because this array had to change whenever the data in the database changed, I also needed a dynamic check. I thought this was fine, since the data is unlikely to change and I had implemented the dynamic check just in case it did. But when I pushed the code for review, the rest of the team felt it was not ideal to have something that was both static and dynamic at the same time, which in hindsight I agree with.

I then thought I could build the list dynamically and put it into the Vue Store, but once I had implemented that, I realised it didn’t work because of the caching we were doing (which is quite important because of loading-time issues). So in the end we decided not to share filters across pages until we had a backend. At the time this was a bit difficult, as I felt I had wasted quite a lot of time, but it was also a valuable learning experience!
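For comparison, the dynamic version of the filter list, deriving the options from whatever the query returned rather than hardcoding them, could look roughly like the TypeScript sketch below. The function name and record shape are hypothetical, and the sketch deliberately ignores the caching problem, which is what stopped this approach from working in practice.

```typescript
// Hypothetical flat record: field name -> value.
type FlatRecord = Record<string, string | number | undefined>;

// Collect the distinct values of each filterable field from the data itself,
// so that new values added to the database show up without code changes.
function buildFilterOptions(
  records: readonly FlatRecord[],
  fields: readonly string[],
): Record<string, string[]> {
  const options: Record<string, string[]> = {};
  for (const field of fields) {
    const values = new Set<string>();
    for (const record of records) {
      const value = record[field];
      if (value !== undefined) values.add(String(value));
    }
    // Sort so the checkboxes appear in a stable order.
    options[field] = Array.from(values).sort();
  }
  return options;
}

// Made-up example: duplicate values collapse into one option each.
const options = buildFilterOptions(
  [{ meetingPlace: "kirkyard" }, { meetingPlace: "house" }, { meetingPlace: "kirkyard" }],
  ["meetingPlace"],
);
```

Here `options.meetingPlace` ends up as `["house", "kirkyard"]`, so a value that appears in the data for the first time would get its own checkbox automatically.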

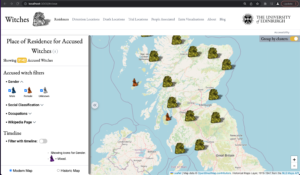

With the new filtering functionality done, I then started changing the filtering user interface to move it to the left of the page, and with the help of the rest of the team I think we managed to get a pretty neat, professional looking design. This is what it is looking like now:

This was the last thing I did before leaving for a week’s holiday in Spain. I have now been back for a little under two weeks, and in that time I have been developing a responsive timeline for the Leaflet Map pages and refactoring the code so that more map pages with more filters can be added very easily once Maggie has finished adding all the new data to Wikidata, which should be any time now.
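One way a timeline control like this could drive the map is sketched below in TypeScript. It assumes each record carries an optional numeric `year` (a hypothetical field; the post doesn't say how dates are stored): `yearBounds` gives the limits for the timeline slider, and `inYearRange` returns the records to draw for the selected span.

```typescript
// Hypothetical record with an optional year (e.g. the year an investigation started).
interface DatedRecord {
  id: string;
  year?: number;
}

// Earliest and latest years in the data, used as the slider's limits.
// Returns null when no record has a year.
function yearBounds(records: readonly DatedRecord[]): [number, number] | null {
  let min = Infinity;
  let max = -Infinity;
  for (const record of records) {
    if (record.year === undefined) continue;
    min = Math.min(min, record.year);
    max = Math.max(max, record.year);
  }
  return min === Infinity ? null : [min, max];
}

// Records whose year falls inside the selected range (inclusive).
// Undated records are left out while a range is selected.
function inYearRange(
  records: readonly DatedRecord[],
  from: number,
  to: number,
): DatedRecord[] {
  return records.filter(
    (record) => record.year !== undefined && record.year >= from && record.year <= to,
  );
}
```

Whether undated records should be hidden or always shown is a design choice; this sketch hides them.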

Beyond these two tasks, I was hoping to have time to add a Python backend to process all the data after querying Wikidata (at the moment there is no backend, and we do this processing in the front end’s JavaScript), since we will have quite a lot of new data to process and we are worried the loading time will increase significantly. However, in the end it was decided that I shouldn’t write the backend in Python, because the team works in PHP and would not be able to maintain a Python backend in the long term once I’m gone. I will try to find time to write the backend in PHP, but I would have to learn it from scratch, and there are a lot of other bits and pieces that need doing!
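Whichever language the backend ends up in, much of the processing starts the same way: the Wikidata Query Service returns results in the standard SPARQL JSON results format (`head.vars` plus `results.bindings`, where each bound variable is an object with a `type` and a `value`). A minimal TypeScript sketch of flattening that into plain rows, assuming entity URIs should be shortened to bare Q-IDs, might look like this; the function names are hypothetical.

```typescript
// Shape of the SPARQL 1.1 JSON results format returned by the query service.
interface SparqlTerm {
  type: string; // "uri", "literal" or "bnode"
  value: string;
  datatype?: string;
  "xml:lang"?: string;
}

interface SparqlResults {
  head: { vars: string[] };
  results: { bindings: Array<Record<string, SparqlTerm>> };
}

const ENTITY_PREFIX = "http://www.wikidata.org/entity/";

// Shorten Wikidata entity URIs (".../entity/Q42") to bare IDs ("Q42").
function shortenValue(term: SparqlTerm): string {
  if (term.type === "uri" && term.value.startsWith(ENTITY_PREFIX)) {
    return term.value.slice(ENTITY_PREFIX.length);
  }
  return term.value;
}

// One plain object per result row; variables left unbound (e.g. by OPTIONAL) become undefined.
function flattenBindings(json: SparqlResults): Array<Record<string, string | undefined>> {
  return json.results.bindings.map((binding) => {
    const row: Record<string, string | undefined> = {};
    for (const name of json.head.vars) {
      const term = binding[name];
      row[name] = term === undefined ? undefined : shortenValue(term);
    }
    return row;
  });
}
```

Doing this kind of flattening once, on a server, rather than in every visitor's browser is the sort of work a backend would take off the front end.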

The Data Side of Things

I was off for most of last week as I was in Turkey holidaying for a couple of days, but was back on Thursday for more data work! I finished processing the witches’ meeting places and what went down in these meetings, as well as the calendar customs that were mentioned in the witchcraft investigations. Looking at Agnes Sampson’s investigation page, you can see all the juicy new details we’ve fitted into the Wikidata data model from the Survey and processed using OpenRefine.

Speaking of OpenRefine, I am currently planning and scripting a short tutorial for users of the software, or even future interns, as I have really enjoyed how intuitive and helpful it has been for this project. It should be recorded within the next week, so keep your eyes peeled…

This week, I started off with doing some data cleaning. Previously, before we created case items for each witchcraft investigation, the shapeshifting and ritual objects data was added to the accused witches’ items. Some helpful Wikidatans saw this duplication and started deleting statements, so we decided we needed to clean this up to not cause confusion.

Using the same Google sheet that was used to add the shapeshifting information, I used the handy “Export to QuickStatements” option to get all the edits into QuickStatements syntax. I did this as with QuickStatements, it’s easy to remove specific statements by prefixing a line with a minus sign.
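As an illustration of the minus-sign trick, the TypeScript sketch below takes QuickStatements (V1, tab-separated) commands and turns simple `item<TAB>property<TAB>value` lines into removal lines. It is a hypothetical helper, not part of the Google Sheet export, and it assumes every line to be removed starts with an existing item ID; `CREATE`/`LAST` lines and anything else are simply dropped.

```typescript
// Convert QuickStatements V1 addition commands into removal commands.
// A removal is the same line with "-" in front of the item ID.
function toRemovalCommands(commands: string): string {
  return commands
    .split(/\r?\n/)
    // Keep only lines that start with an existing item ID followed by a tab;
    // CREATE/LAST lines (which refer to new items) and blank lines are dropped.
    .filter((line) => /^Q\d+\t/.test(line))
    .map((line) => "-" + line)
    .join("\n");
}

// Made-up item and property IDs, for illustration only.
const exported = "Q1\tP31\tQ5\nQ2\tP31\tQ5\n";
const removals = toRemovalCommands(exported);
```

Lines with qualifiers or sources are passed through unchanged apart from the prefix, so it is worth checking on a couple of test edits how QuickStatements handles those before running a whole batch.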

After this, I started to think about extracting the data added to Wikidata using the Wikidata Query Service. We have been in contact with Navino Evans, one of the co-founders of Histropedia, to help us with a complex SPARQL query. This was the working example he sent us, and I used it to try my hand at adding calendar customs and witches’ meeting places (the data I added recently) to the query; this is the query I ended up with.
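For anyone wanting to run a query like this from code rather than the web interface, here is a small TypeScript sketch of sending it to the Wikidata Query Service's public SPARQL endpoint (`https://query.wikidata.org/sparql`) and asking for JSON results. The helper names are hypothetical, the example query is a trivial placeholder rather than the witchcraft query, and the sketch assumes a runtime with a global `fetch` (a modern browser or Node 18+).

```typescript
const WDQS_ENDPOINT = "https://query.wikidata.org/sparql";

// Build a GET URL for the query service; the query text must be URL-encoded.
function buildQueryUrl(sparql: string): string {
  return `${WDQS_ENDPOINT}?format=json&query=${encodeURIComponent(sparql)}`;
}

// Send the query and return the parsed JSON (SPARQL results format).
async function runQuery(sparql: string): Promise<unknown> {
  const response = await fetch(buildQueryUrl(sparql), {
    headers: { Accept: "application/sparql-results+json" },
  });
  if (!response.ok) {
    throw new Error(`Query service returned HTTP ${response.status}`);
  }
  return response.json();
}

// Placeholder query: five items that are instances of "human" (Q5).
const exampleQuery = "SELECT ?item WHERE { ?item wdt:P31 wd:Q5 } LIMIT 5";
const exampleUrl = buildQueryUrl(exampleQuery);
```

Calling `runQuery(exampleQuery)` resolves to the parsed results object. For scripts run outside the browser, Wikimedia's User-Agent policy asks for a descriptive `User-Agent` header to be sent as well.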
