Let me take a moment to share my thoughts on one of our newer services with Learning, Teaching and Web: Anthology Ally. Accessibility is fast becoming one of my favourite topics working within DLAM. I am a Humanities graduate myself, so perhaps the link between Hermeneutics (interpretation theory) and digital transformations in accessible design plays a part. Whilst I used to be primarily concerned with the meaning behind the words, I now find myself deeply fascinated by the (digital) transactions that take place to transmit and present information, and by the tools it takes to make this happen!

Digital accessibility means that digital content has been reviewed and tested so that it is accessible to the widest possible audience and free from any avoidable access restrictions when used with assistive technologies. In the broad landscape of software, programmes and platforms, readability and interoperability are crucial if we are to enable our users to interact with our content in an output format suited to their needs. For accessibility to carry over into (content-focussed) educational design for digital media, then, interoperability, user navigation and software availability all play a part. To achieve this, we need to be able to review and revise core information, such as file properties, logical input and design elements, and this data must be clearly readable by a variety of software before the output can be guaranteed. Who else remembers the wonders of opening a foreign file extension in Word and being faced with code rendered in Webdings?

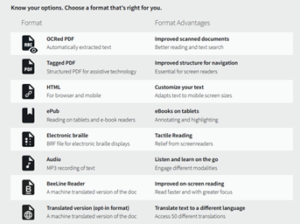

Our ability to alter and edit our content, and to render it accessible at all levels, is critical. Anthology Ally offers us a window into the system-level dialogue between our digital learning environment and the digital media we populate our classrooms with. Ally is a software integration in our primary VLE that scans content for digital accessibility, including the meta text, settings and requirements hidden behind the visible output. It allows users to identify accessibility issues at software level, supports some measures for fixing them, and enables the conversion of files into alternative formats. Alternative formats can make teaching resources more adaptable to the diverse needs and wants of our students. Changing file type may help a file adapt better to screen size; opting for a different display mode can support reading; access to audio lets you listen back to materials whilst working out, doing chores or on the commute… With a diverse student body, we have the opportunity to make all our courses fit a more flexible learning style. An improved digital experience supports all our learners, and a better sense of what poses a hindrance to assistive technology will make an impact on our users!

Here at DLAM, testing our service solutions to ensure that they work as intended and integrate seamlessly (we can but try!) into our existing service environment is a wonderful part of the job. The Web Content Accessibility Guidelines (WCAG) 2.1 (version 2.2 was published in October 2023) offer a detailed list of criteria for reviewing all our websites and applications for legal compliance under the Equality Act 2010 and The Equality Act 2010 (Specific Duties) (Scotland) Regulations 2012 (https://www.ed.ac.uk/about/website/accessibility/guidelines-policy-legislation). One of the core requirements is that organisations must lay bare how they identified and thought about accessibility for their products and services and, where possible, how they mitigated any avoidable shortcomings. In broad terms, our applications are challenged on three aspects, the touch points of our digital environment: audio, visual, and navigation/workflow. It is a “dimension of interoperability” to assess whether “assistive technologies [are] working predictably with different combinations of browsers, mobile operating systems, and devices” (The Next Frontier – Expanding the Definition of Accessibility, SpringerLink).

When testing Ally, a core challenge for me was using assistive technology, often for the first time, and interpreting its behaviour. Whilst I was reasonably familiar with the Ease of Access settings for my PC and browser, I found other, more specialist tools hard to judge. What if my unfamiliarity with an assistive technology produces a poor test result because of difficulties with the assistive tool rather than with the target interface? It is these hard-to-judge areas that lead us to use tools that scan and evaluate what meets compliance criteria, and that help us correlate our own experience with the data we interact with.

To guide instructors on digital accessibility, Ally produces a score. It is meant as a guide to how clean a file is in digital terms, i.e. how successfully it will convert to an alternative file format or be handled by common assistive technology tools. So taking a moment to demystify the scoring should give us a clearer view of how Ally supports our wider mission to produce accessible learning and teaching materials.

Low (0-33%): Needs help! There are severe accessibility issues.

Medium (34-66%): A little better. The file is somewhat accessible and needs improvement.

High (67-99%): Almost there. The file is accessible but more improvements are possible.

Perfect (100%): Perfect! Ally didn’t identify any accessibility issues but further improvements may still be possible.
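To make the bands concrete, here is a minimal Python sketch of the thresholds listed above. The function name `ally_score_band` is hypothetical: Ally does not expose such a function, and treating a fractional score between 33 and 34 as Low is my own assumption rather than documented behaviour.

```python
def ally_score_band(score: float) -> str:
    """Return the band name for an accessibility score given as a percentage.

    Hypothetical helper, not part of Ally. Thresholds follow the bands listed
    in this post; scores between 33 and 34 are assumed to count as Low.
    """
    if not 0 <= score <= 100:
        raise ValueError("score must be a percentage between 0 and 100")
    if score == 100:
        return "Perfect"  # no issues identified, though improvements may remain
    if score >= 67:
        return "High"     # 67-99%: accessible, more improvements possible
    if score >= 34:
        return "Medium"   # 34-66%: somewhat accessible, needs improvement
    return "Low"          # 0-33%: severe accessibility issues


for example in (12, 50, 80, 100):
    print(f"{example}% -> {ally_score_band(example)}")
```

Note that a band is only a summary: a file sitting at 100% has simply passed the checks Ally runs, which is why the human review discussed next still matters.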

Now, a scan for alternative text, despite the potential for AI to play a part here in future, cannot infer the context of a teaching resource, or the focus an image might have in the lesson plan, when assessing whether its ALT text is appropriate. Ally is first and foremost an editing tool for the instructor and a convenient conversion tool for students. Accessibility needs still have to be assessed at the human level. So what do we do to ensure we keep the service on track?

- Training: we must foster a keen awareness of what constitutes accessible design in our digital service landscape

- Testing: we must test applications and websites to identify and mitigate accessibility challenges

- Research: we must continue to learn about digital trends, possible new solutions, developments in assistive technology, and accessible design

- Feedback: we must actively listen to user feedback to satisfy ourselves that we are offering not merely a legally compliant service but a practical and usable one, and that users understand the benefits and limits of the tools we provide; evaluating our service data can support this conversation (and I hope to go into more detail about this in my next blog post).
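To illustrate the earlier point about alternative text, here is a minimal sketch using Python's standard `html.parser` module. It is not how Ally works; the `AltTextAudit` class is hypothetical and simply sorts `<img>` tags by whether their alt attribute is missing, empty or present. That is roughly the limit of what a purely automated check can establish.

```python
from html.parser import HTMLParser


class AltTextAudit(HTMLParser):
    """Hypothetical checker: sorts <img> tags by the state of their alt text.

    It can tell whether alt text exists, but not whether it suits the image's
    purpose in the lesson; that judgement still needs a human.
    """

    def __init__(self):
        super().__init__()
        self.missing = []  # src of images with no alt attribute at all
        self.empty = []    # src of images with empty alt (fine only if decorative)
        self.present = []  # (src, alt) pairs that still need human review

    def handle_starttag(self, tag, attrs):
        if tag != "img":
            return
        attributes = dict(attrs)
        src = attributes.get("src") or ""
        if "alt" not in attributes:
            self.missing.append(src)
            return
        alt = attributes["alt"] or ""  # a bare `alt` attribute parses as None
        if alt.strip():
            self.present.append((src, alt))
        else:
            self.empty.append(src)


audit = AltTextAudit()
audit.feed('<img src="chart.png">'
           '<img src="divider.png" alt="">'
           '<img src="diagram.png" alt="image">')
print("Missing alt:", audit.missing)
print("Empty alt:", audit.empty)
print("Needs human review:", audit.present)
```

The third image passes the presence check, yet `alt="image"` tells a screen reader user nothing about the diagram's role in the lesson. Only a person who knows the teaching context can catch that.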

What I have found in the course of launching Ally is that users (academics and technologists alike) need to know not only what barriers student users may face and what assistive technologies they might employ, but also how to bridge the gap between the informational content, the educational experience or activities, and the technical requirements that let the content work with other software. It is important to point out that accessibility needs are as diverse as the subjects we teach, each focused on honing different skills and abilities, and the same goes for assistive technologies. There will never be a one-size-fits-all solution. And that is a positive thing: it curbs our tendency to reduce accessibility to a tick-box exercise. Checking for accessibility remains, at all times, an iterative process. With Ally, we have one more tool to help us orientate ourselves amidst this ever-evolving digital landscape.

(https://help.blackboard.com/Ally/Ally_for_LMS/Instructor/Accessibility_Scores)

(https://help.blackboard.com/Ally/Ally_for_LMS/Instructor/Alternative_Formats)