I keep turning a line from Alasdair MacIntyre over in my head:

“You cannot hope to reinvent morality on the scale of a whole nation … when the very idiom of morality which you seek to re‑invent is alien in one way to the vast mass of ordinary people and in another to the intellectual elite” (MacIntyre, 2007, p. 238).

I find myself going back to his work, and every time I read it I feel the floor shift a little under the tidy diagrams we draw in ethics classes. It reminds me that before we talk about frameworks or audits or shiny governance models, we have to talk about belief: the personal kind that lives behind our eyes and tugs at the choices we make when no rubric is watching.

The course set us a clean-looking question: how can we work with others to construct more ethical data futures? I remember thinking we were probably expected to land our writing somewhere between participatory workshops (something I was actively doing in my personal and professional life) and better legislation or policy.

Yet the longer I looked over all our discussions and all our reading, the more the ground kept crumbling, because of two main problems.

The first is that technology, for all its novelty, mostly amplifies whatever rot or solidity is already there in the hands and hearts of the people running it. This came up rather plainly in one of our last group discussions:

“The fundamental ethical challenge isn’t about the technology … it’s about the people making decisions, the tech merely amplifies existing problems” (LEARN, 12 Mar 2025).

In all of our discussions we were using some form of “reason” or dialogue, an “ethical tool set”, to navigate the challenges placed in front of us. Yet the entire time, through every discussion and talk, I had this nagging feeling that we were only skimming the surface and never really getting at the crux of the problems in front of us.

This brings us to the second “problem”: what “ethics” or “ethical” even means, and whether our reason can reach a consensus on acting “ethically” in the first place. Much of the course content, and some of my peers, seemed to assume this had already been solved. The focus was on the “guidelines” or “checklists” needed to operate morally and ethically, built on a consensus that does not exist in our modern society. This created a huge friction in my head, and it still does.

Before we can even answer the question of working together toward an “ethical data future”, I think we need to address what it even means to be ethical within a future like the one we are rushing toward: a future where our own reasoning about what is ethical may be at odds with understanding what an ethical or moral future even looks like.

This question is nothing new:

Hume had already warned that reason is “utterly impotent” when it comes to morals because it is passions that move us to act (Hume, 1739/1978, p. 457),

…and I feel that every time a compliance checklist gets signed by someone motivated by something OTHER than their own passions, the audit, policy, or “ethical guide” becomes a box tick. The harm rolls on under a veneer of due diligence, and somewhere a dashboard lights green while a community power-cycles its trust into the red.

Even our own course reading highlighted this very issue. Danaher's work circles the same problem from the procedural side, insisting that any decision system worth following must leave room for human participation and comprehension, and that legitimacy erodes when outcomes arrive wrapped in code nobody can read (Danaher, 2016, p. 253).

That same idea keeps bumping into my memories of my time in the Marine Corps, when I watched “ethical projects” being built whose metrics dazzled generals, yet the enlisted Marines most affected shrugged, because the reasoning behind those metrics stayed locked in jargon-filled documents or opaque algorithms.

Funnily enough, the phrase “who benefits and who pays the price” cropped up in the same LEARN thread mentioned a moment ago, one about surveillance tech. It is a subtle tug back to MacIntyre's complaint about alien idioms: the moment a system serves some distant stakeholder's notion of good while the local community shoulders the risk, language fractures, trust bleeds, and we lose the shared map of ends that lets the word “contribution” mean something bigger than obedience (LEARN, 12 Mar 2025; MacIntyre, 2007, p. 232).

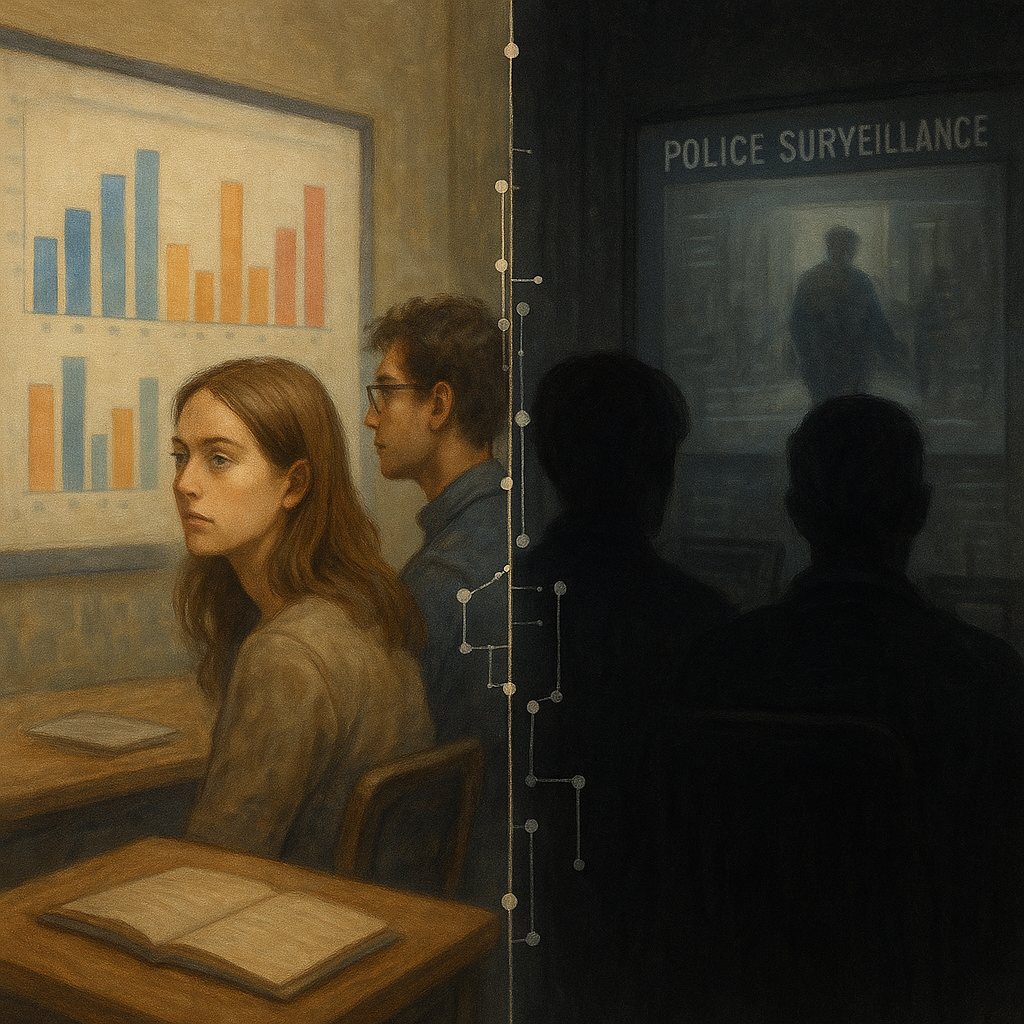

Honestly, the ShotSpotter debate is probably where I will spend most of my time, reflecting on these fractures of “perception” and of “obedience” to a narrative of reason rather than to the ethics behind that reason.

In our discussion on ShotSpotter, many of my peers framed the issue in terms of implementation, whether the system worked, whether community members were consulted, whether it reinforced systemic biases.

I am in no way dismissing these as valid concerns, but the discourse often stayed at the surface, on how to address them, and seemed more focused on generic outcomes than on the roots of the ethical failure.

There was a tendency to treat the technology as the problem. That said, several people, myself included, pointed out that the issue was not the software itself but the intentions and incentives of the decision-makers behind it.

Still, the broader class discussion leaned heavily on utilitarian critiques: ShotSpotter is bad because it's inaccurate, it causes harm, it doesn't do what it claims. But even if it were accurate, even if the harm could be minimized, I think the conversation missed the deeper problem:

what gives one group the right to define ethical use for another?

This gets to the fragility of imposed ethics. When moral legitimacy is assumed rather than earned, when systems of surveillance or data governance are introduced without true co-creation or consent, or when co-creation is claimed but not backed by input deeper than a “checklist”, those systems don't just fail technically; they fail philosophically.

We were often too quick, in class, to speak of “ethical frameworks” as if they were fixed structures that could be applied top-down. But the ShotSpotter debate, along with many of the other papers and talks, showed me something else: without shared belief or deep alignment, even well-intentioned ethical systems can become instruments of control, or unintentional instruments of waste and saviorism. I think many can agree, at the very least, that what is called ethical can still feel coercive if it is not rooted in mutual understanding, and that understanding comes from something deeper than a framework or a consensus of “defined words”.

C.S. Lewis saw this danger long before predictive policing, warning that the worst tyranny is the one “sincerely exercised for the good of its victims”, because it never tires of saving you for your own sake (Lewis, 1949/1970, p. 292). Danaher shows how such zeal can slip into what he calls algocracy, a regime where decisions are cloaked in technical necessity and handed down by a fresh epistemic elite (Danaher, 2016, pp. 252, 259).

Those who decide the ethics will also decide who, and what, needs ethical attention, and when “enough” has been done.

This is an inherent issue.

We bumped into this issue in February, when the class argued over mandatory telematics in cars, a policy pitched as pure safety. Right away, two things split the room. First, there was the sense that nobody had really asked drivers whether constant data logging felt like safety or like surveillance. Second, there was the deeper worry that once the data started to flow, no one downstream could guarantee what ends it would serve. One classmate wrote that we have no control over our own driving data: where it goes, who sees it, or how it is used. Another followed that the privacy harm and the fairness skew still outweigh the promised drop in crashes, because safer roads can be built with better design and slower speeds rather than black-box scoring systems (LEARN, 5 Feb 2025).

Right here we see the same facts and the same ethical deliberation leading to very real, and arguably equally valid, yet divergent outcomes. Listening to that back-and-forth, I thought again about Kate Crawford and Trevor Paglen's work on ImageNet, which we read at the very beginning of the class. Not because I feel haunted by their examples, but because they stand in the same bind. They show how a dataset freezes messy life into neat labels and how those labels reflect the prejudices of their makers (Crawford & Paglen, 2019, p. 23), yet the essay leans on the hope that unmasking bias is a step toward fixing it. That keeps the focus on the technology, as if the real struggle sits inside the dataset rather than inside the reasons we keep reaching for datasets at all.

I read their line about the violence of naming, the way categories reify what they describe (p. 21), and I truly agree, but I also see that every human system of knowledge, from tax law to a grocery list, does the same thing:

bias is not an artificial intelligence glitch, it is a side effect of perception filtered through goals, power, and fear.

That realization steers me away from framing the problem as bad data needing better curation and pushes me toward the older question Hume kept hammering: reason alone never moves us; only passions do. So the central task is not to engineer a neutral dataset, an impossible standard anyway, but to cultivate the moral temperament of the people wielding it, to make sure their passions tilt toward care rather than conquest (Hume, 1739/1978, p. 457).

Danaher helps here, not because he offers a technical patch but because he notices that authority evaporates when procedures grow opaque. He writes that if the reasons behind an algorithm are inaccessible, the system forfeits legitimacy; people need to see why a decision landed on them, or at least trust someone who can explain it in common words (Danaher, 2016, pp. 251–254). That loops back to our telematics dispute, because the real friction was not the raw data flow; it was the silent handoff of power to insurers and city planners who speak a dialect most drivers never learn.

These critiques and questions could go on for hundreds of pages and hundreds of years (they already have), but I would be falling into the same fallacy I seem to be arguing against if I only criticized without reaching toward some sort of solution, a move forward. Three moves, really: none of them novel, but each demanding patience that tech culture rarely grants.

First, it's as simple as talking early and in person: not as checkbox consultation, not as a class or speech to be delivered, but as a slow discovery of language we can all inhabit. If the words for harm or benefit belong to everyone in the room, the later “math” will rest on soil instead of concrete.

Second, talk is great, but at the end of the day the only thing we can truly control is ourselves. So keep turning the mirror inward, with each designer, policymaker, and activist acknowledging the blend of fear, pride, and hope that drives their preferences, because unexamined motive leaks into code or policy faster than any bias-audit script can catch it.

Third, we must design feedback loops that make revision normal, because there is no such thing as a finished ethical framework. We must publish models, publish reasoning, invite challenge, reward revision, and let systems breathe so they change when the people they govern change. This answers Danaher's call for accessibility and Burke's older claim that the freer a society wishes to be, the more its citizens must first bind their own appetites with conscience (Burke, 1791/1999, p. 69).

As hesitant as I am to side with Burke, I chose him and this quote deliberately. It is precisely the understanding that truth comes in packages we may not always like that this aspect of feedback reflects. Burke was focused on control and authority, an authority this paper actively writes against, yet to disregard his ethics and his thoughts is to disregard the ethics of millions.

None of this guarantees perfection; perfection is a story we tell to postpone responsibility. But these moves at least line up the human heart with the levers of data power, so that when bias shows, we see it as our own shadow rather than a ghost in the machine.

I end where I began, with MacIntyre's warning that moral projects fail when they speak a language the community does not share. After a semester of readings, arguments, stalled breakouts, and one too many polished frameworks, I believe him more than before, because every ethical future worth living in will have to sound like the people asked to live there, not just like a perspective that only a few intellectual elites understand,

otherwise the smartest model in the world will feel like someone else’s rulebook, and we will keep burning trust to fuel new engines that never quite take us home.

References

Burke, E. (1999). A letter to a member of the National Assembly. In F. Canavan (Ed.), Select works of Edmund Burke (Vol. 3, pp. 67–70). Liberty Fund. (Original work published 1791)

Crawford, K., & Paglen, T. (2019). Excavating AI: The politics of images in machine learning training sets. AI Now Institute. https://www.excavating.ai/

Danaher, J. (2016). The threat of algocracy: Reality, resistance and accommodation. Philosophy & Technology, 29(3), 245–268. https://doi.org/10.1007/s13347-015-0211-1

Hume, D. (1978). A treatise of human nature (L. A. Selby-Bigge & P. H. Nidditch, Eds.). Clarendon Press. (Original work published 1739–1740)

Lewis, C. S. (1970). The humanitarian theory of punishment. In W. Hooper (Ed.), God in the Dock: Essays on theology and ethics (pp. 287–293). Eerdmans. (Original essay published 1949)

MacIntyre, A. (2007). After virtue: A study in moral theory (3rd ed.). University of Notre Dame Press.

LEARN Group Discussions

- LEARN. (5 February 2025). Group discussion on telematics and driving data.
- LEARN. (12 March 2025). Group discussion on surveillance technologies and ethical foundations.
- LEARN. (17 March 2025). Group discussion on harm and affected communities.
- LEARN. (5 February 2025). Group discussion on personal responsibility and fairness in data systems.

Meera

29 April 2025 — 20:53

A lot of reflection would go into discussing ethics/ethical standards/processes. And I agree, fundamentally this reflection should be on issues of power hierarchies, identifying inherent bias in structures, questions of inclusion/exclusion etc, isn’t it? It is a start.