Week 7 – Developing the Final Idea into a Process

This week I discussed my ‘Final Idea’ with fellow students at UofE and with family members. As a result, I was able to bounce several ideas around and refine the ‘first-draft’ idea I’d originally cooked up. Last night, whilst working on a separate project for GHN, the journey ahead of me finally gained clarity, and I was able to divide the process into three clear steps. Firstly, however, it is worth noting that the original idea, to have AI create a series of texts/images from a prompt and then compare them to those I created in response to the same prompt(s), has changed somewhat.

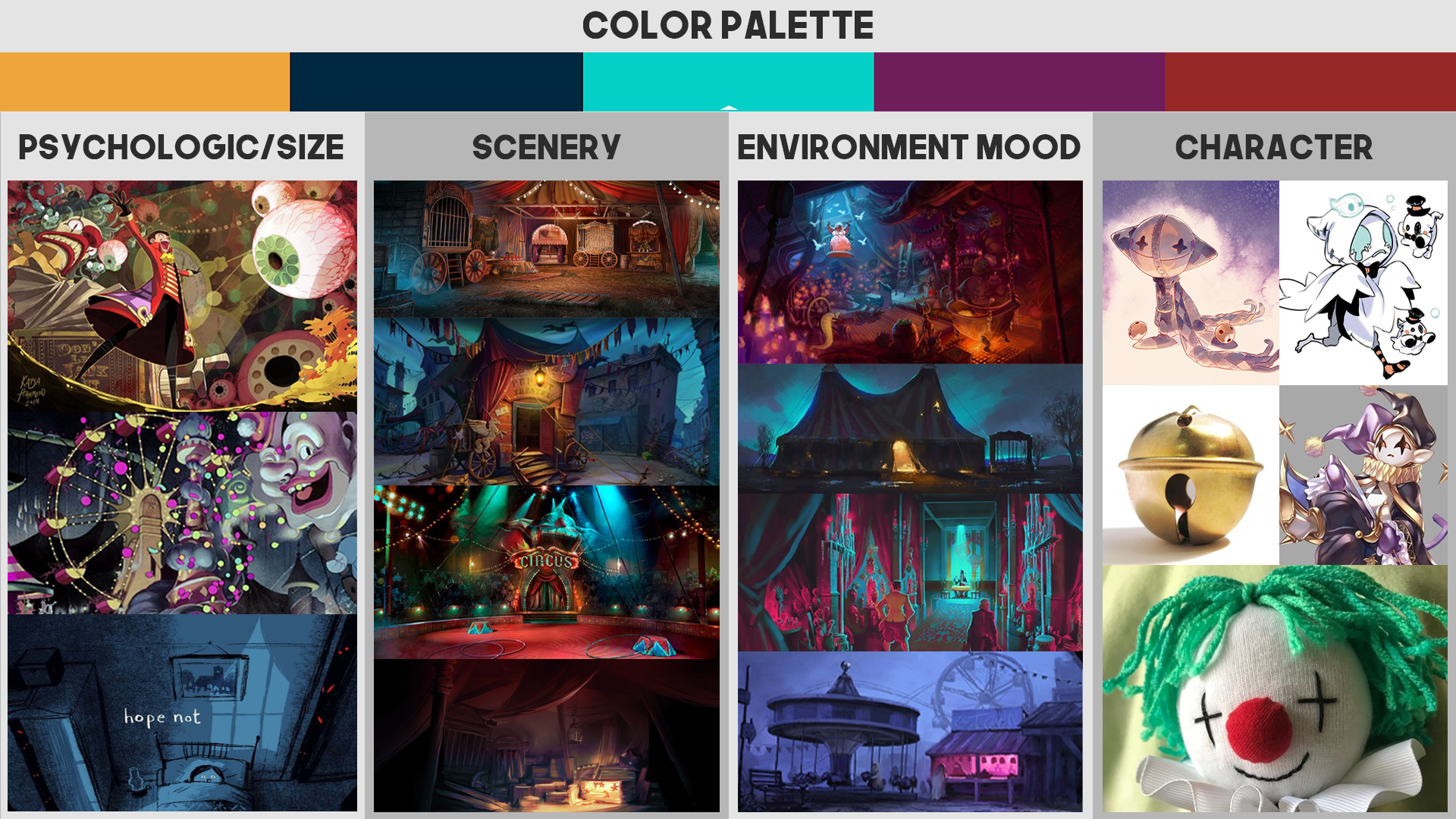

Now, instead of feeding the AI a written prompt, I’ve decided to offer the AI a visual board/storyboard/mood board that shows a clear sequence of events in which a product is being pitched/advertised. The AI’s task would then be to deduce a) what product is being advertised and b) the components of said product (as shown on the visual board). This same ‘prompt’ [the mood board/storyboard] will be presented to two human participants (one an expert, and one a layman), and their reactions/deductions will be recorded. The final product will be a reflective essay discussing the differences and similarities between the three participants’ responses, and analysing how ‘useful’ or ‘accurate’ the AI is in comparison to a human response.

Thus, the three aforementioned steps are as follows:

- Create a visual storyboard that presents a product through a clear sequence of steps. I am currently considering using something similar to my ‘game-pitch’ from Gamifying Historical Narratives. Ensure that the product, its components, and the overall narrative are clear. It might also be interesting to include a historical element here, to see whether the AI can accurately identify it.

- Present the storyboard to the AI, and ask it to respond to the image by defining what is being advertised, to whom, and what its key features are. The results will then be recorded. During this process, questions might be rephrased or re-asked if the AI initially fails to understand what is being asked of it.

- Ask two human participants to respond to the same piece: one with expertise in the subject matter (historical gaming/games/visual storyboards) and one with little-to-no experience in the field. Present them both with the same series of questions as the AI, and record their responses.

The final task would then be to collect all the results and carefully analyse them to see where the responses differ and where they align. The overall intent of the experiment is to see how well AI can read a visual prompt and deduce a game(?) from the steps shown, and how its response stacks up against those of two human participants at opposite ends of the experience spectrum.

The questions going forward are:

- Are two human responses sufficient to draw conclusions about what a typical human response looks like?

- Should one type of AI be used, or two, with their responses also compared and contrasted?

- Should a control be used to demonstrate all three participants’ ability to recognise and react?