BUBBLE WHISPER: TAKE REMOTE AND ONSITE VISITORS TO AN EXPLORATION GAME OF THE EFI BUILDING

Jin MU, Tongye LIU, Feijia SUN, Fan YU, Jenny TANG

PROJECT PREVIEW

Bubble Whisper is an interaction design that lets users explore the EFI building together, whether they are online or onsite. The pandemic has changed how we interact, isolated us, and altered our spatial habits and the way we occupy urban space. The EFI building was once the much-loved Old Royal Infirmary of Edinburgh on Lauriston Place, and it carries much of Edinburgh's history and many of its memories.

The main purpose of our project is to help our audience get to know the building's history and current uses through an interactive game, and to strengthen social contact between remote and onsite users.

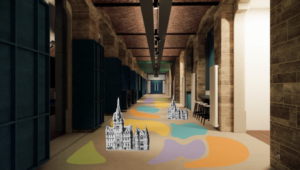

EFI BUILDING

https://efi.ed.ac.uk/online-tour/

The corridor of the EFI building is one of its busiest public spaces, where numerous conversations and activities take place. We hope to bring together students and staff members, whether on campus or online, by giving this space more possibilities through Virtual Reality technology.

PROJECT DEVELOPING

Inspiration

Inspired by the bubbles used in many places around the world to help people maintain social distancing, we imagined bubbles as the trigger for an interactive game that connects visitors from both the virtual and the real world.

Initial idea

After several rounds of brainstorming, we settled on the idea that users join the game by stepping into a circle projected on the ground. When two people stand on bubbles of the same colour, they can watch a video related to that bubble's theme together.

Case study

Case 1: Working remotely in VR using Vive Sync. https://www.youtube.com/watch?v=IA90a6ymLlc

Case 2: Augmented reality street art https://artivive.com/augmented-reality-and-street-art-social-and-cultural-engagement-in-the-city/

By reviewing similar VR-based cases, we tried to summarize the advantages and disadvantages of each product so as to better develop our own project.

Case 1 is a virtual reality remote conferencing application on Vive, which provides an immersive experience. However, everyone needs to wear a VR headset. In our project, we want to preserve the onsite users' positive experience as much as we can, so VR headsets are not suitable for visitors who enter the building in person, and we looked for alternatives for them.

Case 2 is the creative project Artivive, which uses augmented reality technology to enable collaborative creation and a timely interactive user experience. It keeps the real-world experience while adding a surprising virtual view. This inspired us to use AR technology to provide a better experience for onsite visitors.

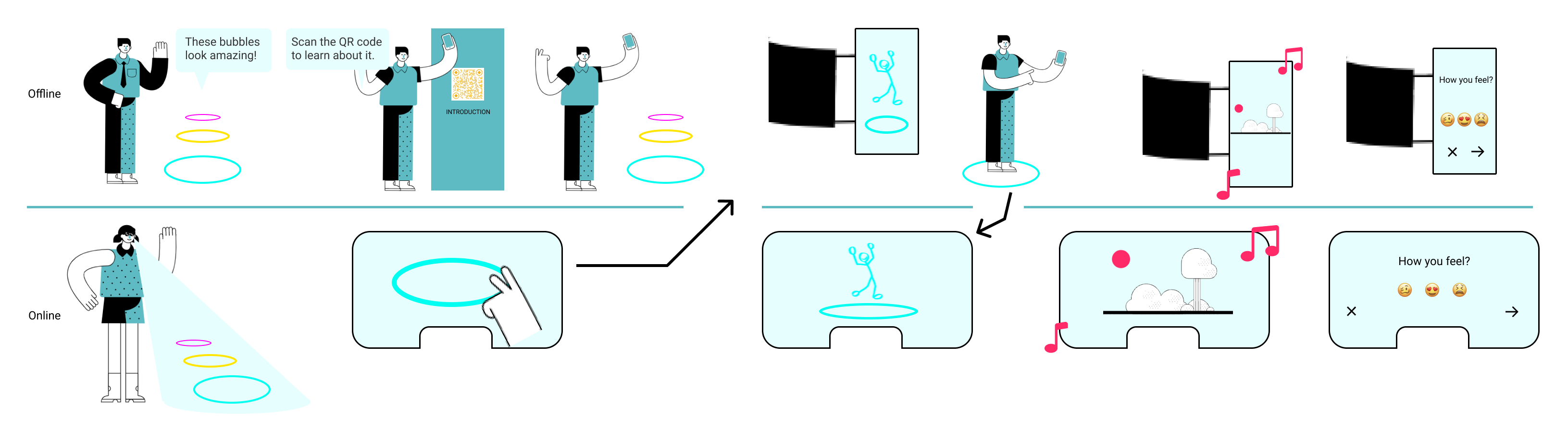

Idea iteration and User flow

After a couple of iterations, we arrived at an integrated user flow.

We encountered some issues that affected the interaction process, so we adjusted the original design to improve the user experience. Online visitors join the interaction through VR headsets, while onsite users use their phones to enter the AR view. Building on the initial idea above, we then defined the colours of the bubbles to represent different themes. Specifically, the blue bubble represents the history and culture of the EFI building, the green one represents information about the campus, and the pink one represents the uses of the EFI building.
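The colour-to-theme mapping and the pairing rule can be sketched in a few lines of Python. This is only an illustration under our own assumptions, not the project's implementation: the names `BUBBLE_THEMES`, `Visitor` and `find_pairs` are hypothetical, and pairing visitors in list order is one possible choice among many.

```python
from dataclasses import dataclass
from typing import Optional

# Colour-to-theme mapping as described in the text; the dictionary is illustrative.
BUBBLE_THEMES = {
    "blue": "history and culture of the EFI building",
    "green": "campus information",
    "pink": "uses of the EFI building",
}


@dataclass(frozen=True)
class Visitor:
    name: str
    mode: str              # "online" (VR headset) or "onsite" (phone AR)
    bubble: Optional[str]  # colour the visitor is standing on, or None


def find_pairs(visitors):
    """Return (online_name, onsite_name, theme) for visitors sharing a colour."""
    pairs = []
    for colour, theme in BUBBLE_THEMES.items():
        online = [v for v in visitors if v.mode == "online" and v.bubble == colour]
        onsite = [v for v in visitors if v.mode == "onsite" and v.bubble == colour]
        # Pair in list order (an assumption); unmatched visitors keep waiting.
        for a, b in zip(online, onsite):
            pairs.append((a.name, b.name, theme))
    return pairs
```

A visitor standing alone on a colour produces no pair, which matches the idea that the video only plays once someone from the other space joins the same bubble.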

Technology

The most challenging technical issue is how to connect two different spaces: the virtual model in the VR headset and the physical space in the building.

Depth Sensor:

https://jahya.net/blog/how-depth-sensor-works-in-5-minutes/

A depth sensor consists of three parts: an infrared projector, a low-resolution RGB camera and a low-resolution infrared camera. Depth sensors are a form of three-dimensional (3D) range finder that can recognize and capture people's movements.

We position the sensors on the walls beside the corridor, capturing the onsite users' movements to tell the system whether they are participating in the game.

https://github.com/IntelRealSense/librealsense/issues/2944
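Once the depth sensor has produced a floor position for a tracked person, deciding whether that person is inside a bubble is a point-in-circle test. The sketch below assumes positions are already converted to metres in the corridor's floor plane; the bubble centres, the radius and the function name `bubble_at` are hypothetical values chosen for illustration, and a real setup would need calibration against the projector.

```python
import math

# Hypothetical floor layout: bubble centre (x, y) and radius, in metres.
# Real values would come from calibrating the sensor against the projection.
BUBBLES = {
    "blue": ((1.0, 1.0), 0.5),
    "green": ((3.0, 1.0), 0.5),
    "pink": ((5.0, 1.0), 0.5),
}


def bubble_at(x, y):
    """Return the colour of the bubble containing floor point (x, y), or None."""
    for colour, ((cx, cy), radius) in BUBBLES.items():
        # Inside the circle when the distance to the centre is within the radius.
        if math.hypot(x - cx, y - cy) <= radius:
            return colour
    return None
```

For example, `bubble_at(1.2, 1.1)` falls inside the blue circle, while a point midway between two bubbles returns `None`, so passers-by walking between the circles are not counted as players.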

VR: The goal of VR is to provide a virtual environment in which we can interact with computers as we would in the real world, i.e. by speaking, writing and drawing. In a humanised virtual environment, we can interact with the computer without difficulty or obstacles. When VR technology generates a virtual world, we can enter it as if it were a real landscape: it provides not only a 3D image of the landscape but also sounds that help us enjoy it. In our project, remote users wear VR headsets to enter the game, visit the building and experience the video of each bubble.

AR: Augmented reality is a technology that expands our physical world by adding layers of digital information onto it. Unlike Virtual Reality, AR does not create a whole artificial environment to replace the real one. Instead, it appears in direct view of an existing environment and adds sounds, videos and graphics to it. We chose AR so that onsite users can enter the game view and experience the contents of each bubble.

FINAL OUTCOME

Storyboard

Online users

When an online user wearing a VR headset enters the corridor, an introductory pop-up window appears in the headset to explain how to interact with other visitors, both online and onsite. Then the bubbles appear on the ground. When an online user and an onsite user stand on the same colour, they can play the video and audio of that bubble, along with some interaction options.

Onsite users

The onsite user scans the QR code with their phone to view the introduction to the interaction, then chooses a bubble projected on the ground to stand on. After that, they wave a hand in front of the depth sensor to confirm that they are taking part. Finally, when someone online is standing on the same colour, the two can play the video and audio of the bubble, along with some interaction options.

The interaction is also shown in the user flow.
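The onsite steps in the storyboard can be read as a small state machine. The following sketch is one possible way to model them; the step and event names (`Step`, `TRANSITIONS`, `advance`, `"qr_scanned"` and so on) are hypothetical and only mirror the sequence described above.

```python
from enum import Enum, auto


class Step(Enum):
    SCAN_QR = auto()
    CHOOSE_BUBBLE = auto()
    WAVE_TO_CONFIRM = auto()
    WAIT_FOR_PARTNER = auto()
    PLAYING = auto()


# Event that moves the onsite visitor from each step to the next one.
TRANSITIONS = {
    (Step.SCAN_QR, "qr_scanned"): Step.CHOOSE_BUBBLE,
    (Step.CHOOSE_BUBBLE, "entered_bubble"): Step.WAVE_TO_CONFIRM,
    (Step.WAVE_TO_CONFIRM, "hand_waved"): Step.WAIT_FOR_PARTNER,
    (Step.WAIT_FOR_PARTNER, "online_partner_on_same_colour"): Step.PLAYING,
}


def advance(step, event):
    """Return the next step, or stay on the current one if the event does not apply."""
    return TRANSITIONS.get((step, event), step)
```

Ignoring events that do not apply to the current step means that, for instance, a wave from someone who has not yet stepped into a bubble has no effect.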

REFLECTION AND FUTURE WORK

The most challenging part of our design was the technical implementation. The project did not go smoothly, and we encountered many technical problems. For example, the software version was not suitable and the VR file we were given could not be opened; the computer model did not match and the software could not be run; and the pictures we drew could not be imported smoothly into the software. In the end, with the help of Mark, we successfully produced a preview of the renderings. We thank Mark Kobine from uCreate for his selfless help!

Due to the tight schedule, many details of the plan have not been implemented. In the future, to create a more complete immersive environment, we need to give users avatars in the VR environment instead of a single line image. In addition, our project currently revolves only around the freshman welcome week, so we should consider whether the scheme can be used in other scenarios to ensure the sustainability of the design.

In practice, more tests are needed to determine the actual physical technology. How can onsite users participate without affecting other passers-by in the corridor? Are there better ways for onsite users to communicate with online users? How can we ensure the participation of online users? This project is for users around the world, so how can people in different time zones communicate effectively together?

REFERENCES

Skalski, A. and Machura, B. (2015) 'Metrological Analysis Of Microsoft Kinect In The Context Of Object Localization'. Metrology and Measurement Systems, 22(4), pp. 469-478.

Schäfer, A., Reis, G. and Stricker, D. (2021) 'A Survey on Synchronous Augmented, Virtual and Mixed Reality Remote Collaboration Systems'. arXiv preprint arXiv:2102.05998.

Tarr, B., Slater, M. and Cohen, E. (2018) 'Synchrony and social connection in immersive Virtual Reality'. Scientific Reports, 8, 3693. https://doi.org/10.1038/s41598-018-21765-4