School of GeoSciences Ceph Architecture

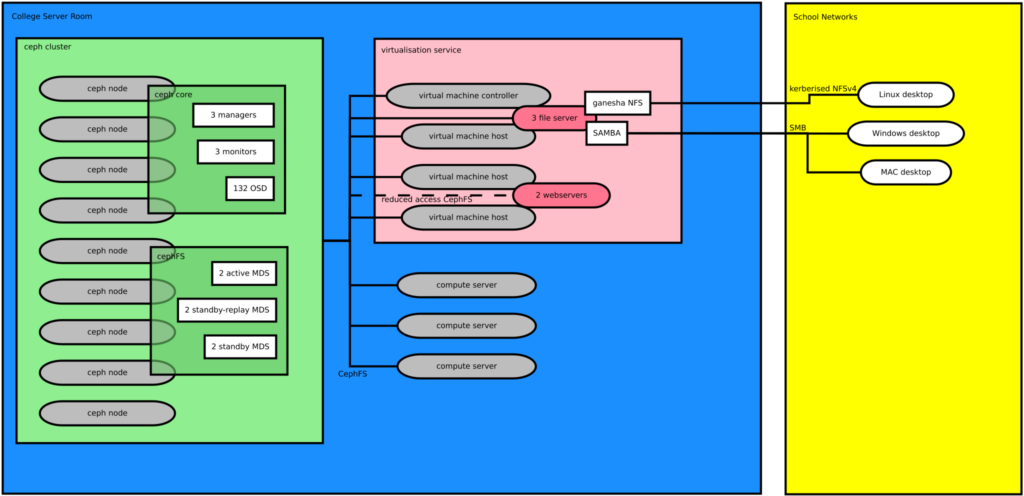

Schematic overview of the School of GeoSciences ceph-based storage cluster and virtualisation service. Grey rounded boxes indicate physical computers and pink rounded boxes indicate virtual machines. Square boxes are processes running on some of the machines.

In one of my previous posts I outlined the ceph setup used in the School of GeoSciences. In this post, I would like to describe how the various bits fit together in some more detail.

The diagram above shows a simplified version of our computing setup. Broadly speaking, we distinguish between computers in the college server room (CSR, blue) and computers outside the CSR (yellow). The main difference is trust: we trust clients inside the server room, but we do not trust the network outside it because people have physical access to that network. All access to services inside the college server room from outside is authenticated (ssh, kerberised NFS and SMB). Inside the server room access is unauthenticated. All machines inside the CSR are connected to a stacked 10GE switch via bonded network interfaces.

Inside the server room we run two major multi-host services: the ceph cluster (green) and the virtualisation service (red).

The ceph cluster currently consists of 9 physical nodes. Each node has a number of disks ranging from 5.5 to 11TB. One OSD (object storage daemon) is run for each disk, 132 OSDs in total. The OSDs communicate directly with each other and with the ceph clients. The other fundamental part of a ceph cluster is the monitors. We run three monitors, each on a different node in the cluster. They form their own cluster and keep track of the state of the storage system. We also run three manager daemons on three different cluster nodes. These are used to query the state of the cluster.
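To make the numbers above concrete, here is a small sketch of the arithmetic behind them. It assumes that ceph monitors need a strict majority to form a quorum, and it only gives bounds on raw capacity, before any replication or erasure coding overhead. The function names are made up for this illustration.

```python
from typing import Tuple


def quorum_size(monitors: int) -> int:
    """Smallest number of monitors that forms a strict majority."""
    return monitors // 2 + 1


def tolerated_failures(monitors: int) -> int:
    """Number of monitors that can be lost while a majority remains."""
    return monitors - quorum_size(monitors)


def raw_capacity_range_tb(osds: int, min_disk_tb: float, max_disk_tb: float) -> Tuple[float, float]:
    """Bounds on raw capacity if every disk had the smallest or the largest size."""
    return osds * min_disk_tb, osds * max_disk_tb


if __name__ == "__main__":
    # Three monitors: two are needed for quorum, so one may fail.
    print(quorum_size(3), tolerated_failures(3))
    # 132 OSDs on disks between 5.5 and 11TB.
    print(raw_capacity_range_tb(132, 5.5, 11.0))
```

With three monitors the cluster state stays available as long as any two of them are up. The raw capacity lies somewhere between 726 and 1452TB; the usable capacity is lower and depends on the pool configuration, which is not covered here.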

The ceph filesystem uses the basic ceph storage cluster outlined above. We run two active MDS (metadata server) daemons. Each active MDS has an associated standby-replay MDS that can take over should its active MDS fail. We also have another two standby MDSs. These warm up when a standby-replay MDS becomes the active MDS. So, in total we have six MDSs, each running on a different node in the cluster.
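The failover behaviour described above can be pictured with a toy model. This is only a sketch of the bookkeeping, not how ceph implements it: the daemon names are hypothetical, and it assumes that a plain standby starts following a rank once that rank's standby-replay daemon has been promoted.

```python
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class MdsCluster:
    # rank -> name of the active daemon holding that rank
    active: Dict[int, str]
    # rank -> name of the standby-replay daemon following that rank
    standby_replay: Dict[int, str]
    # daemons that are not attached to any rank yet
    standby: List[str] = field(default_factory=list)

    def fail_active(self, rank: int) -> None:
        """Model the failure of the active daemon holding `rank`."""
        if rank in self.standby_replay:
            # The standby-replay daemon takes over the rank.
            self.active[rank] = self.standby_replay.pop(rank)
        elif self.standby:
            # No follower available: fall back to a plain standby.
            self.active[rank] = self.standby.pop(0)
        else:
            # Nothing left to take over; the rank stays failed.
            del self.active[rank]
            return
        # Assumption: a remaining standby warms up as the new follower.
        if self.standby:
            self.standby_replay[rank] = self.standby.pop(0)


if __name__ == "__main__":
    cluster = MdsCluster(
        active={0: "mds-a", 1: "mds-b"},
        standby_replay={0: "mds-c", 1: "mds-d"},
        standby=["mds-e", "mds-f"],
    )
    cluster.fail_active(0)
    print(cluster)
```

In this model the six daemons can absorb several failures in a row before a rank is left without an active MDS.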

The iptables-based firewall on the ceph nodes allows ceph traffic from any machine on the CSR network but blocks traffic from outside the CSR network. General purpose compute machines and the file server frontends use the same ceph key to access the CephFS. The key is delivered via our secrets mechanism (using ssh and subversion).
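The firewall policy boils down to a simple decision, sketched below in Python rather than as iptables rules. The subnet is a placeholder from a documentation address range, not our real CSR network, and the ports are the ceph defaults (3300 and 6789 for monitors, 6800 to 7300 for the other daemons); a site may well configure these differently.

```python
import ipaddress

# Hypothetical CSR subnet (documentation range, not the real network).
CSR_NETWORK = ipaddress.ip_network("192.0.2.0/24")

# Ceph default ports: monitors on 3300 and 6789, other daemons on 6800-7300.
CEPH_PORTS = {3300, 6789} | set(range(6800, 7301))


def allow_ceph_traffic(source: str, dest_port: int) -> bool:
    """Accept traffic to a ceph port only if it originates inside the CSR network."""
    is_ceph_port = dest_port in CEPH_PORTS
    is_inside_csr = ipaddress.ip_address(source) in CSR_NETWORK
    return is_ceph_port and is_inside_csr


if __name__ == "__main__":
    print(allow_ceph_traffic("192.0.2.17", 6789))    # client inside the CSR
    print(allow_ceph_traffic("198.51.100.4", 6789))  # client outside the CSR
```

Note that the function only answers the question for ceph traffic. Other services such as ssh are governed by separate rules that are not modelled here.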

The OpenNebula virtualisation service consists of three virtualisation hosts and a manager. The virtualisation service uses a directory in the CephFS to store virtual disk images. We run about 50 virtual machines for various services. Among these are three file servers and two web servers. We did gain some resilience by using virtual machines: should there be a hardware fault, the VMs automatically migrate to one of the other VM hosts.

Each file server runs SAMBA on top of the file system mounted with the kernel cephfs module. CephFS gets mounted using the same automounter maps as on all our Linux machines. This ensures a consistent view of the filesystem across different platforms. The ganesha NFS server talks directly to the ceph cluster and exports the filesystem to networks outside the CSR via kerberos authenticated NFSv4. The file servers are now based on LCFG managed Ubuntu systems. Each file server sees the same underlying storage. We attempt to balance the load by directing staff to one server, students to another and group storage users to the third.

The web servers work in a similar way. One of them serves students’ files and the other serves files owned by staff. They differ from the file servers in that they use a special ceph key that restricts access to certain directories: only some directories are read/write and the web directories are read-only. The web servers cannot access the users’ home directories. This arrangement is due to security considerations as we allow users to run CGI scripts.
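A restricted key of this kind can be created with `ceph fs authorize`, which takes pairs of path and permission. The helper below is a minimal sketch that only assembles the argument list; the filesystem name, client name and paths in the example are invented for illustration and are not the ones we use. For simplicity it accepts only the `r` and `rw` permissions.

```python
from typing import Dict, List


def fs_authorize_command(fs_name: str, client_id: str, path_caps: Dict[str, str]) -> List[str]:
    """Build a `ceph fs authorize` argument list with one path/permission pair per directory."""
    command = ["ceph", "fs", "authorize", fs_name, f"client.{client_id}"]
    for path, perms in path_caps.items():
        # This sketch deliberately supports read-only and read/write only.
        if perms not in ("r", "rw"):
            raise ValueError(f"unsupported permission {perms!r} for {path}")
        command += [path, perms]
    return command


if __name__ == "__main__":
    # Hypothetical names: web directories read-only, a scratch area read/write.
    caps = {"/web/staff": "r", "/scratch/web": "rw"}
    print(" ".join(fs_authorize_command("cephfs", "webstaff", caps)))
```

Because the home directories are not listed at all, a client holding such a key has no way to reach them, even if a CGI script on the web server is compromised.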

(CC-ShareAlike Magnus Hagdorn)