Group members:

Xinxuan Zheng (s2506313)

Han Zou (s2505422)

Yixuan Yang (s2503597)

Jiayi Sun (s2506655)

I. Initial Concept Generation

Brainstorming

When brainstorming, we initially branched out around two keywords, nature and memory, generating some rough themes.

Nature Theme: Jellyfish Crisis / Awakening Flower Device / Cyber Garden

Memory Theme: Alzheimer’s disease / Cyberworld / Travelling memories

Secondly, each team member independently researched two potential project topics in greater depth: Alzheimer’s disease and the jellyfish crisis. After reviewing the references individually, the team voted to deepen the Alzheimer’s disease-centred idea during the first group discussion meeting.

Concept Expansion

In the course of our research, we watched a number of Chinese and Western documentaries on Alzheimer’s disease, including A Marriage to Remember [1] and Please Remember Me [2], as well as the high-profile film The Father [3]. These showed us that the disease is a global phenomenon that deserves to be brought to the forefront. We were all deeply moved, and this solidified the idea for the project.

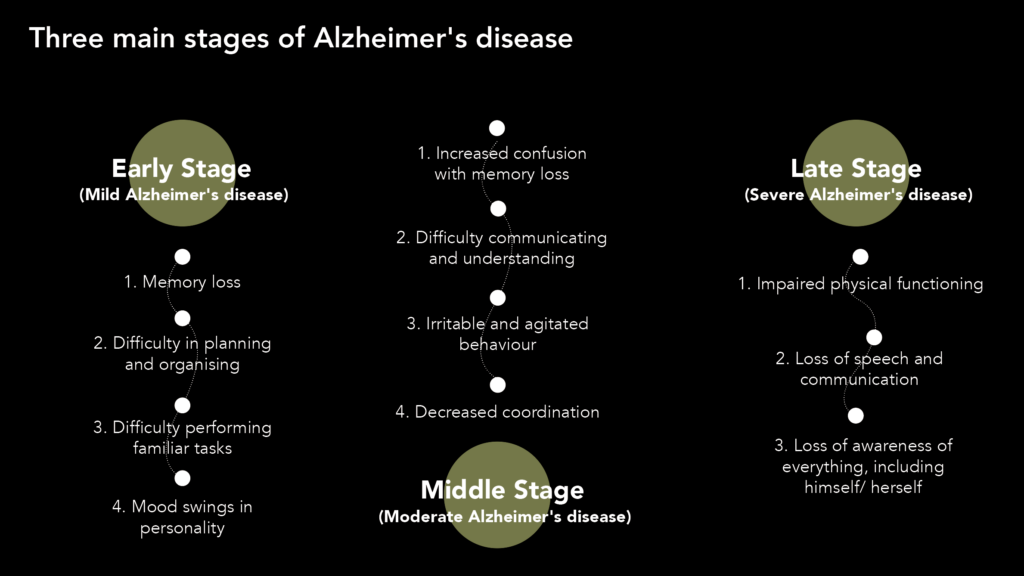

Alzheimer’s disease is a progressive neurological disorder whose symptoms evolve over time and include memory loss, language deficits, and decreased physical functioning. We agreed that memory loss is the most brutal and helpless part of the disease. Therefore, we focused on how memory changes over time to design an immersive experience.

Research

During the initial research, we mainly adopted the desktop research method to understand the basic characteristics of Alzheimer’s disease as well as the pathological features. After our discussion, we chose to develop the project based on the different stages of memory changes of Alzheimer’s patients. Meanwhile, we also critically analysed relevant art cases as well as technical feasibility.

Alzheimer

- Fundamental characteristics and data of Alzheimer’s disease

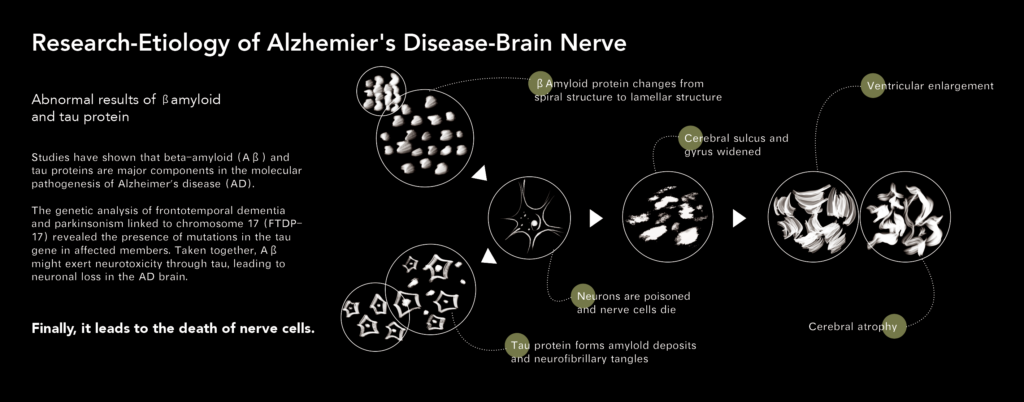

- Causes and principles

- The progression of the illness

Related Works Analysis

Case 1: ROBOTIC VOICE ACTIVATED WORD KICKING MACHINE [4]

Advantages: Sound is visualised literally as text, and the loudspeaker installation generates interaction, enriching the interactive experience.

Weaknesses: The metaphors of some of the installations are too subtle.

Benefits: The connection between text and music can be borrowed, and the effect of text scattering and stacking can be used in the prologue of this project.

Case 2: Remember me [5]

Advantages: Jigsaw puzzles are used to express the memory disorientation of Alzheimer’s patients, and the series is visually striking.

Weaknesses: The work is confined to the graphic realm of the poster, with no interaction.

Benefits: Similar objects can be borrowed as a metaphor for memory change and applied to the memory disorientation part of this project.

Case 3: NMIXX DASH MV [6]

Advantages: The effect of text flowing backwards and accumulating gives the audience a good visual experience and is very creative.

Weaknesses: The form is too neat and cannot express confusion and chaos well.

Benefits: We can learn from the effect of text accumulation, and then change the way the text appears so that it flies in from all directions.

Possible Technologies

- TouchDesigner: visual effects involving image and model processing in projects

- Runway: AI-generated videos of projects

- AE: video editing

- Max: real-time interactive audio and video processing

- Speechify: AI vocal generator

- Ableton Live: Sound effects and music production

II. Project Brief

Definition of Process

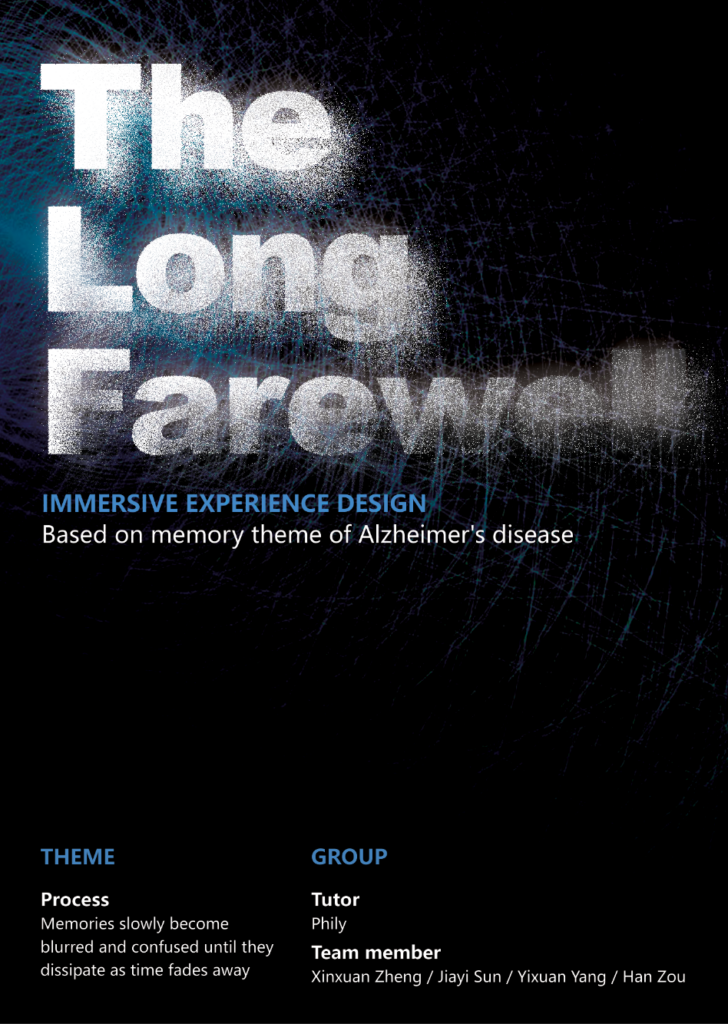

In the context of this project, process refers to the way memories slowly become blurred and confused over time until they finally dissipate.

Purpose:

The purpose of this project is to use an immersive experience to demonstrate to the public the challenges faced by people with Alzheimer’s disease by combining art and technology, thereby arousing social attention and understanding of this group.

Project Name

The Long Farewell

Project Content

This immersive installation guides viewers through an experiential journey depicting the stages of memory decline in Alzheimer’s patients. Divided into four stages — Prologue, Blur, Fade, and Vanish — the installation sequentially leads participants through each phase using one integrated device.

Stage 1: Prologue

In the initial stage, visitors will physically walk into the centre of the black screen. In terms of sound, voices will come from all directions and, as time passes, the speech will become faster and faster, accompanied by overlapping layers of different human voices. On the visual side, textual visualisations will float into the projected picture and pile higher and higher. This stage presents a tense atmosphere in which participants have no time to react. Visitors can experience, from a first-person perspective, the overload of memory information in the brain of an Alzheimer’s patient during the disease.

Stage 2: Blur

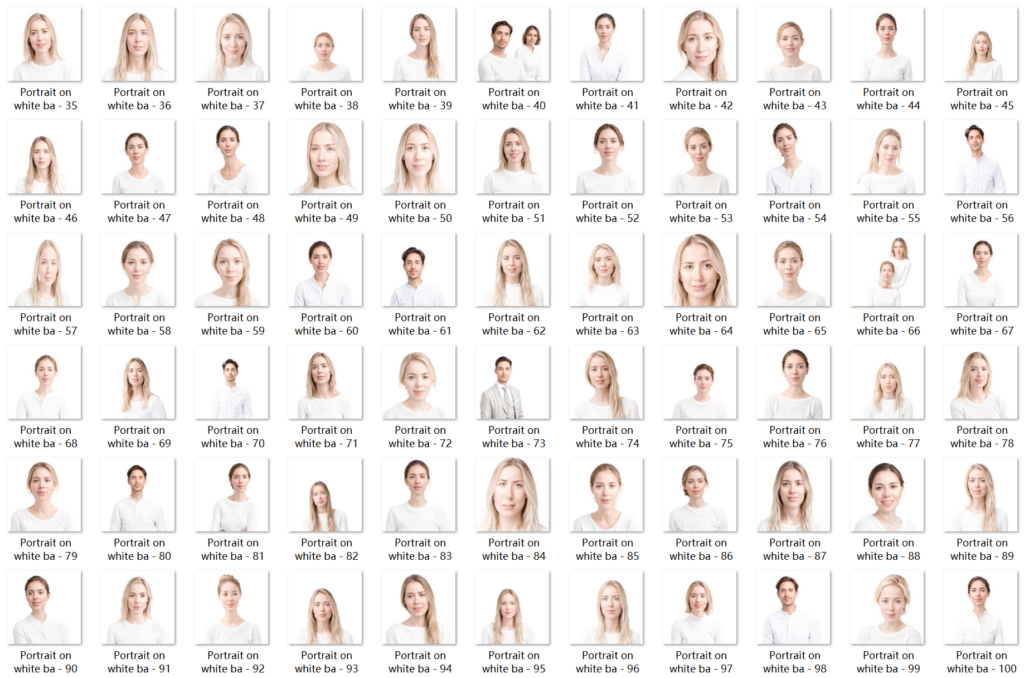

The blur stage involves confusion in recognizing faces and memorizing daily objects. Regarding face recognition, the project will select portrait pictures depicting individuals from four different races and age groups and use AI to generate more similar faces. Visual processing will then be conducted in TouchDesigner. For object recognition, the project will choose close-up images of family scenes from various countries, applying visual effects such as blurring to objects within the scenes using TouchDesigner. This stage aims to deliver a striking visual impact to participants based on the global prevalence of Alzheimer’s disease.

Stage 3: Fade

In the Fade stage, participants will be prompted to take a photograph of themselves in front of a camera, maintaining the visual impact of the previous phase’s abundance of facial images. Afterward, participants can say their own names. Depending on the volume of their voice, their image on the screen will change in various ways, such as blurring. When participants stop speaking, the faces on the screen will gradually fade away.

Here, we simulate the perspective of individuals around Alzheimer’s patients by calling out their names, prompting the patients to recall their own appearance. The fading face on the screen mirrors the cognitive response of the patient to external stimuli. This interactive experience is implemented via Max/MSP/Jitter or Vizzie.

Stage 4: Vanish

In the Vanish stage, visitors will take a third-person perspective on the final stage of the disease, in which Alzheimer’s patients confront the dissolution of self-awareness. The screen will present a 3D-scanned digital model dissipating like particles blown away by the wind, synchronised with music and sound. Participants will activate this effect by pressing a button. Finally, the screen will fade to black, accompanied by a line of text reflecting on life: “Perhaps memories will fade away with time, but love is eternal.”

Significance:

- Improve public awareness of Alzheimer’s disease: Through immersive experiences, visitors can more intuitively feel the difficulties experienced by Alzheimer’s patients and increase their knowledge and understanding of this disease.

- Reverse society’s prejudice and stigma against Alzheimer’s disease: Use art and technology to show the lives of Alzheimer’s patients in a warmer and more humane way, reduce discrimination against and rejection of them, and promote society’s understanding of, love for and support of people with Alzheimer’s disease.

- Arouse society’s attention and respect for the elderly: By paying attention to the lives of Alzheimer’s patients and their families, we can arouse public attention and respect for the elderly and promote society to build a more friendly and inclusive environment for the elderly.

- Explore the application of art and technology in addressing social problems: This project combines art and technology, using AI animation, TouchDesigner, Max and other technical means to provide new ways of presenting social problems, and explores innovative applications of art and technology in communicating social issues.

III. Prototype

Prologue (Jiayi)

Initial Idea

This part provides the background for the entire project. Its purpose is to introduce the user to the protagonist of the project: an old woman suffering from Alzheimer’s disease. Tormented by the disease every day, her life often becomes chaotic; she is unable to recognise her relatives, forgets important things in her life, and so on.

Practices

Initially, we planned to show this part in the form of AI-generated animation. Here are a few attempts I made.

Problems and Improvements

However, there are some drawbacks to AI-generated animations:

In terms of emotional expression, AI cannot fully simulate real human emotions.

At the technical level, although AI technology is constantly developing, there are still some problems with the smoothness of movements.

After the group discussion, we set out to create a new way of telling the story of everyday life with Alzheimer’s disease.

We plan to make a video in which conversational sentences from the daily life of Alzheimer’s patients float in from all directions on the screen, such as “Mom, have you eaten?”, “I am your daughter!” and “Why did you soil the bed again?”. When each piece of text appears, it will be accompanied by the corresponding spoken audio. Finally, the text piles up little by little at the bottom of the screen, as shown in the figure below:

In this part, we plan to use projections on the wall. The experiencer will stand in the middle and feel the words from all directions. At the same time, various sounds will linger in the ears, allowing the experiencer to experience the helplessness and confusion of Alzheimer’s patients.
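The fly-in and pile-up behaviour described above can be sketched in a few lines of Python. This is only an illustrative sketch, not the production method, which is a rendered video. The names `spawn_position`, `resting_y` and `LINE_HEIGHT`, and the idea of stacking phrases in fixed columns, are assumptions made for the example.

```python
import random

LINE_HEIGHT = 40  # hypothetical height of one line of text, in pixels


def spawn_position(width, height, rng):
    """Return a start point on a random screen edge so text enters from all directions."""
    edge = rng.choice(["top", "bottom", "left", "right"])
    if edge == "top":
        return (rng.uniform(0, width), 0.0)
    if edge == "bottom":
        return (rng.uniform(0, width), float(height))
    if edge == "left":
        return (0.0, rng.uniform(0, height))
    return (float(width), rng.uniform(0, height))


def resting_y(pile_heights, column, height):
    """Stack one phrase on top of its column and return the y coordinate where it rests.

    y grows downwards, so a taller pile gives a smaller resting y.
    """
    pile_heights[column] += LINE_HEIGHT
    return height - pile_heights[column]


if __name__ == "__main__":
    rng = random.Random(0)
    piles = [0, 0, 0]  # three hypothetical columns along the bottom of the screen
    for phrase in ["Mom, have you eaten?", "I am your daughter!"]:
        start = spawn_position(1920, 1080, rng)
        target_y = resting_y(piles, rng.randrange(len(piles)), 1080)
        print(phrase, "starts at", start, "and rests at y =", target_y)
```

Each phrase would then be animated from its start point to its resting position while its audio plays.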

Blur (Xinxuan / Yixuan)

Xinxuan

Initial Idea:

This part shows the character’s memories of people getting confused and not being able to tell who the people they are facing are. We propose to collect real portrait images from open source websites[7], train them into models through AI, and then continuously generate similar faces to simulate the confusing memories in the minds of characters.

Practices

In the beginning stage, I started by collecting 30 portraits of different ages and applying the white background process to them.

Secondly, I trained a model on this image set using Runway’s AI and generated similar faces. Then I used cutout video software to make a fusion effect, blurring real people into the converging faces generated by the AI. Finally, the effect intensifies, so that the confusion grows from difficulty recognising one person to confusion among multiple people.

Problems and Improvements

- Suggest a broader globalised view of the disease, e.g. include more ethnicities

- Make image effects more abstract

Yixuan

Initial Idea

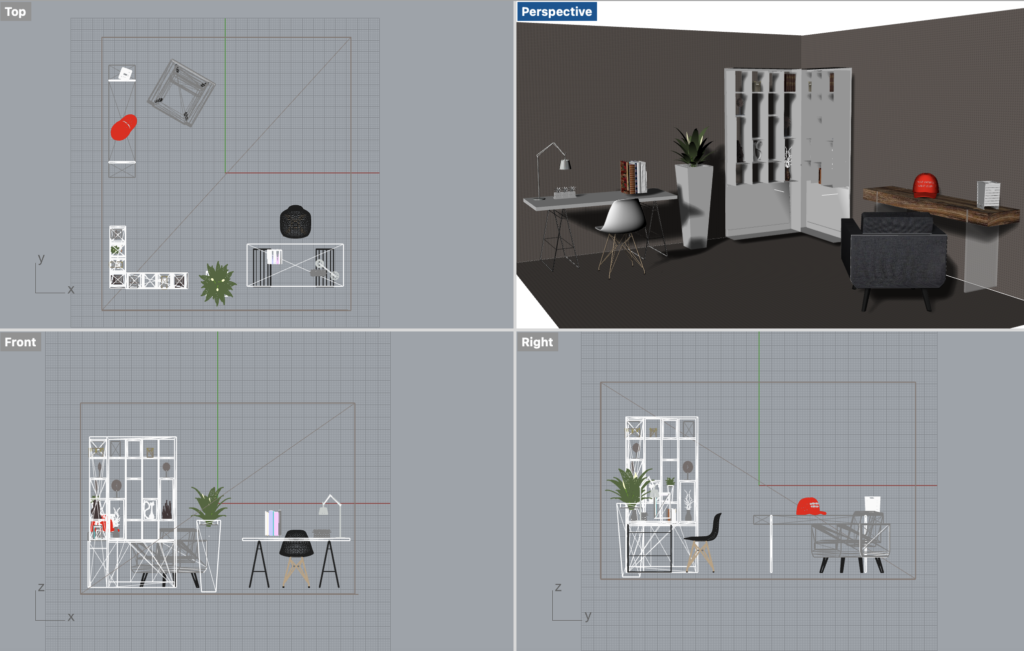

In this section, I selected close-up images of family scenes from different countries and processed the objects in the scenes with visual effects such as blurring in TouchDesigner, aiming to simulate how patients remember and perceive objects while searching for them.

Practices

In the beginning stage, I focused on the patient’s gradually blurring memory of everyday life, especially everyday objects. I used TouchDesigner to create fluid or mosaic effects on old everyday objects to simulate the process of fading memories. For this, I chose four objects typical of an elderly person’s home: a teapot, an old telephone, a loudspeaker and a pair of old glasses.

Problems and Improvements

It was suggested that we look at the disease from a broader, globalised perspective, for example by including footage of Oriental families and objects. I also should not focus on single objects in isolation, because they then fail to connect with the condition or our subject matter. After the group discussion, we set out to demonstrate the audio-visual interaction between the audience and the camera by modelling a physical scene with props.

Firstly, I built a small home scene through Rhinoceros modelling software and added a little colour to the chair, book, hat and plant respectively to make it easier for the patient to find the items.

Next, I switched the camera over to a single object and used TouchDesigner to generate dot, line, blur and distortion motion effects to help the experiencer feel the emotional ups and downs of finding the object.

Interaction 1: Give me the hat! Where’s the hat?

Interaction 2: Could you pass me the yellow book, please?

Interaction 3: Have you seen the white desktop bins?

Interaction 4: Pick me a yellow petal, please. Thank you!

Finally, I edited the four video files into one in PR and added screen motion effects (warping and distorting as well as cross-cutting, etc) to create the feeling of scrambling to find items and increase the immersion of the experience.

Fade (Han)

Initial Idea

Compared to other sections, this part was designed in the fourth week after considering Jules’s feedback on the project, primarily based on the following two suggestions:

Complex interaction methods like Leap Motion are unnecessary; we can instead opt for a standard webcam and use Max’s Vizzie for the interaction design.

Incorporating bystander perspectives is essential, as Alzheimer’s disease significantly impacts the people surrounding the patient, which is an indispensable aspect.

Therefore, we plan to bring visitors into the perspective of the patient’s relatives and friends as they call out to the patient. The images presented on the screen simulate the changes in the patient’s memory of their faces as the patient hears them speak.

Technically, we first capture participants’ photos using a camera. Then, voices are picked up via the microphone, and the sound data is passed to a Max patcher, where it drives the visual processing. The visual changes depend on the voice level. When visitors call out loudly, the image becomes relatively clear, and as the sound diminishes, the image blurs. If the calling voice dies away, the screen gradually fades to blank.
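The mapping from voice level to image clarity can be sketched outside Max as follows. This is a minimal Python illustration of the logic only, not the Max patcher itself. The constants `SILENCE_THRESHOLD`, `MAX_BLUR_RADIUS` and `FADE_PER_FRAME` are hypothetical values, and using the RMS level as the measure of loudness is an assumption.

```python
import math

SILENCE_THRESHOLD = 0.02  # hypothetical RMS level below which the voice counts as gone
MAX_BLUR_RADIUS = 20.0    # hypothetical blur radius (pixels) at the quietest level
FADE_PER_FRAME = 0.05     # hypothetical opacity lost per frame during silence


def rms(samples):
    """Root-mean-square level of a block of audio samples in the range [-1, 1]."""
    if not samples:
        return 0.0
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def blur_radius(level):
    """Louder voice gives a sharper image; quieter voice gives more blur."""
    clamped = min(max(level, 0.0), 1.0)
    return MAX_BLUR_RADIUS * (1.0 - clamped)


def next_opacity(opacity, level):
    """Fade towards blank while silent; restore full opacity when a voice is heard."""
    if level < SILENCE_THRESHOLD:
        return max(0.0, opacity - FADE_PER_FRAME)
    return 1.0


if __name__ == "__main__":
    opacity = 1.0
    # Three hypothetical audio blocks: a loud call, a quiet call, then silence.
    for block in ([0.8, -0.8, 0.8], [0.1, -0.1, 0.1], [0.0, 0.0, 0.0]):
        level = rms(block)
        opacity = next_opacity(opacity, level)
        print(f"level={level:.2f} blur={blur_radius(level):.1f} opacity={opacity:.2f}")
```

In practice the level would probably need smoothing over time so that the image does not flicker between syllables; tuning that response is part of the parameter mapping work still to be done.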

Practices

Screen recording of testing video effects in Max. The audio sample in this test demo was generated with Speechify [9].

Problems and Improvements

At present, we have only experimented with the feasibility of image processing in Max. However, the detailed adjustment of picture processing effects still needs to be completed, and mapping the modulation parameters of visual effects to sound data will require extensive adjustments.

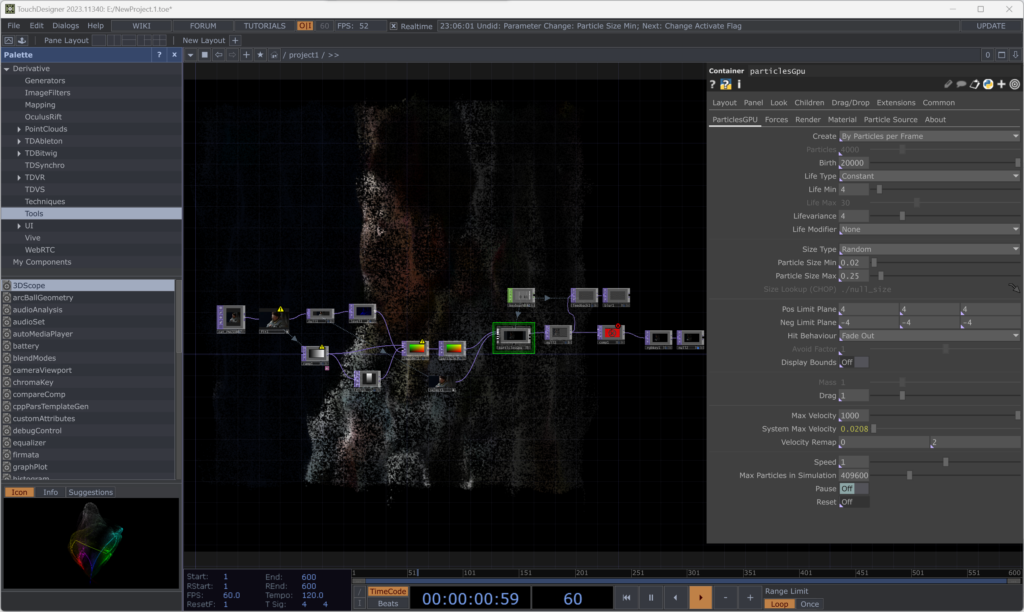

Vanish (Xinxuan)

Initial Idea

This section is essentially a dialogue between the character, their sense of self and their life, and it touches on existence. We want to convey the message “Perhaps memories will fade away with time, but love is eternal.” To do so, we scan a scene of an old man sitting on a chair and import the model into TouchDesigner for particle effects. We will then design a hand-waving gesture interaction using Leap Motion or Kinect. When the visitor walks in front of the screen and waves a hand, the particles on the screen will dissipate with the gesture and a line of text reflecting on life will appear.

Practices

First, I tried a mobile app called Scaniverse to scan the scene, but found that the model accuracy was limited. Then, due to limited time, I switched to using pictures to try out the more difficult particle dissipation effect in TouchDesigner.
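The idea behind the particle dissipation effect can be illustrated with a small Python sketch. It is not the TouchDesigner network used in the prototype; the `Particle` class, the `step` function and the wind, jitter and fade values are all hypothetical and chosen only to show the principle of particles drifting with the wind while fading out.

```python
import random
from dataclasses import dataclass


@dataclass
class Particle:
    x: float
    y: float
    alpha: float = 1.0  # 1.0 is fully visible, 0.0 is fully dissipated


def step(particles, wind=(2.0, -0.5), jitter=1.0, fade=0.02, rng=random):
    """Advance all particles by one frame and return those still visible.

    Each particle drifts with the wind, is nudged by a small random jitter,
    and loses a little opacity.
    """
    for p in particles:
        p.x += wind[0] + rng.uniform(-jitter, jitter)
        p.y += wind[1] + rng.uniform(-jitter, jitter)
        p.alpha = max(0.0, p.alpha - fade)
    return [p for p in particles if p.alpha > 0.0]


if __name__ == "__main__":
    # One particle per pixel of a tiny hypothetical 4x4 image.
    particles = [Particle(float(x), float(y)) for x in range(4) for y in range(4)]
    frames = 0
    while particles:
        particles = step(particles)
        frames += 1
    print("all particles dissipated after", frames, "frames")
```

Starting the loop on a button press would correspond to the trigger described for the Vanish stage.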

Problems and Improvements

- Remove unnecessary hand interactions.

- The last part can be interacted with by the visitor, e.g. allowing the visitor to experience the feeling of dissipation from the first point of view.

Audio (Han)

Music

During the initial meeting, the team proposed a concept in which the installation is divided into four areas. As visitors move through the different areas of the installation, they wear headphones to experience the corresponding sound feedback along with the visual effects. However, recognising the continuity of the memory-loss theme, we abandoned this idea and decided to merge the four parts into one whole.

Therefore, there will be a total of four pieces of music in this project. These four pieces will be based on the same motif and have a similar style. They will be distinguished by different instrumentation, and the mood will gradually build to match the narrative thread of the worsening disease.

Here is a demo illustrating the desired music style, which is primarily piano. Further refinement will involve adding more instruments and matching the music more closely to the visual style of each part.

Vocal

To evoke participants’ sense of empathy, we have decided to incorporate more human voice materials to improve the effect of story-telling. Moreover, the treatment of human voice effects varies in each section. In the initial Prologue section, human voices will be played in dry sound, with some simple adjustments such as volume and panning. In the second and third sections, the human voices will add some distortion effects, while in the final stage, the voices will be processed to the point of being completely unrecognizable.

The audio below shows some attempts at special effects with vocal samples.

Regarding the source of the human voice materials, we originally planned to find actors who fit the vocal characteristics of Alzheimer’s patients and record them in the studio. However, due to time constraints and the difficulty of finding suitable people, we decided to use an AI voice model to produce the raw vocal materials instead.

Here are some AI-generated voice samples [9].

Other Sounds

In addition to the music and vocals as the main body, to match the visual effects, some special sound effects will be added to those generated videos, which mainly appear in the second and fourth stages.

This project will also pick up some live sounds. Details of this part are given in the description of the third stage, Fade.

IV. Other Preparations

Materials

- Projector

- Speakers

- Arduino button

Project Mood Board

Timetable

Week 1: Lecture

Week 2: Brainstorming, Group Discussions

Week 3: In-depth Research

Week 4: Revision and Improvement of Project based on Jules and Philly’s Suggestions

Weeks 5-6: Concurrent Practice and Sound Design for Various Parts of the Project

Week 7: Indoor Testing

Weeks 8-10: Completion of Testing

Weeks 11-12: Modifications

Week 13: Final Exhibition Preparation, including Venue and Equipment Rental

Week 14: Video Shooting and Editing

V. Appendix

[1] A Marriage to Remember | Alzheimer’s Disease Documentary | Op-Docs | The New York Times

[2] Alzheimer’s documentary in China: Please remember me

[4] ROBOTIC VOICE ACTIVATED WORD KICKING MACHINE

[7] Open source Character materials; Portrait pictures sources

[8] Image sources:

https://www.pinterest.co.uk/pin/91901648638671628/

https://www.pinterest.co.uk/pin/1618549862356870/

https://www.pinterest.co.uk/pin/1109785533161637939/

https://www.pinterest.co.uk/pin/73465037663450281/

https://www.pinterest.co.uk/pin/391179917643670352/

https://www.pinterest.co.uk/pin/823806956845791472/

[9] Speechify: AI text-to-speech tool
