In this project, music shapes the atmosphere of the scene. I designed a continuously playing generative piece, built primarily in Ableton Live with Max for Live plugins, and used Max/MSP to control the transitions between musical sections.

Initial Idea

During the initial background research for the project, I found that stories of Alzheimer’s disease evoked a persistent, underlying pain in me. Like the gradual distortion of a patient’s memory, the disease worsens slowly, affecting patients and causing deep-seated pain in their loved ones. I therefore wanted to create an ambient piece that is slow, steady, and subtly sad, with the feeling of a story being told slowly. While looking for inspiration, I found that a slightly detuned piano was perfect for conveying this mood. Its sound evokes the image of an elderly person sitting at an old piano at home, gently recounting memories by playing. Based on this, I composed a four-bar chord progression as the foundation of the piece and created the following demo to establish the overall mood of the music.

Further Arrangement

Initially, we planned to create a separate piece of music for each of the four sections of the project, with each piece advancing on the previous one. However, for better coherence and more efficient production, we decided to develop a single piece of music that runs throughout.

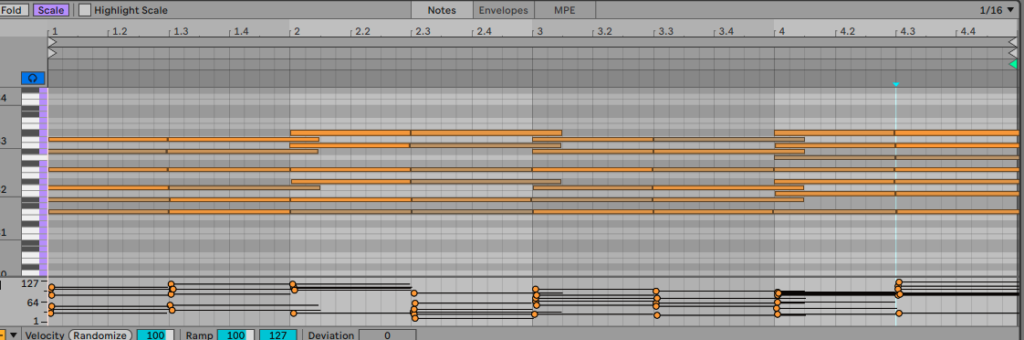

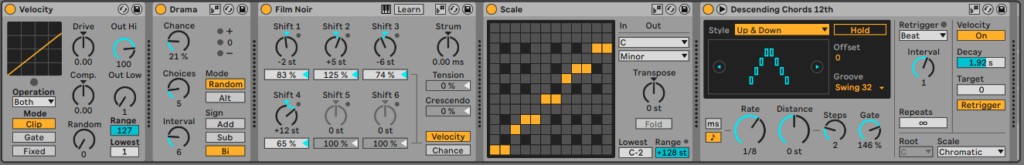

Creating a track that plays continuously for at least eight minutes requires constant development and variation. I therefore expanded the initial demo by adding more instruments and making extensive use of Max for Live MIDI Effects on each part. As a result, the music rests on a stable chord progression loop while melodies, rhythms, and effects appear at random.

For the random melodies, I used an AI text-to-music model to generate musical fragments[1], selected those that fit our project’s atmosphere, and converted the audio files into MIDI files, which the Max for Live plugins then used to generate further melodies.

Using an AI-Generated Voice

This project aims to evoke emotion in its audience, and while creating the audio I found that the human voice is particularly effective at conveying emotion. Voice is also a crucial element in topics related to memory[2]. I therefore decided to incorporate it into the music. The process of generating the voice is described in more detail in this blog[3].

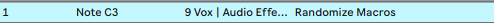

During production, I first arranged these audio clips in the Session View of Live.

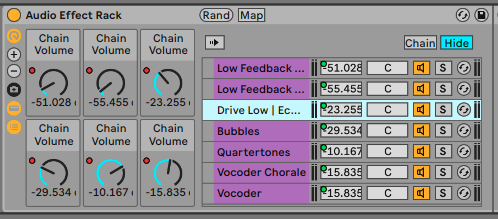

Afterwards, I created several effect chains and controlled the volume of each chain using the Macro knobs in Live.

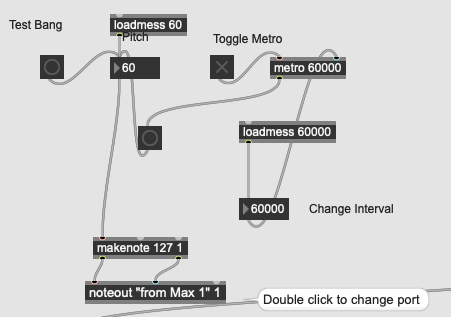

Live has a “Randomize” button that randomly changes the value of every Macro knob in a rack, which here alters the volume of each effect chain. The chains combine to add rich variation to the voice. To keep this section varying, I built a Max patcher that uses metro and noteout to send timed MIDI notes from Max. These notes trigger the “Randomize” button in Live at regular intervals.
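The timing logic of a metro feeding noteout can be sketched outside Max as a schedule of raw MIDI note messages. This is only an illustration, not the actual patch: the interval, note number, velocity, and channel below are assumed values, and actually sending the bytes to Live would need a MIDI output library, which is left out here.

```python
from typing import List, Tuple

NOTE_ON = 0x90   # MIDI status byte for note-on (channel goes in the low 4 bits)
NOTE_OFF = 0x80  # MIDI status byte for note-off


def randomize_trigger_schedule(
    interval_s: float = 10.0,  # assumed metro interval; not stated in the post
    count: int = 4,            # number of triggers to schedule
    note: int = 60,            # hypothetical note mapped to "Randomize"
    velocity: int = 100,       # assumed velocity
    channel: int = 0,          # 0-based MIDI channel, assumed
    gate_s: float = 0.1,       # how long each note is held before note-off
) -> List[Tuple[float, bytes]]:
    """Return (time in seconds, raw MIDI message) pairs, one note per tick."""
    events: List[Tuple[float, bytes]] = []
    for i in range(count):
        start = i * interval_s
        events.append((start, bytes([NOTE_ON | channel, note, velocity])))
        events.append((start + gate_s, bytes([NOTE_OFF | channel, note, 0])))
    return events


if __name__ == "__main__":
    for time_s, message in randomize_trigger_schedule(count=2):
        print(f"{time_s:6.1f}s  {message.hex(' ')}")
```

Each tick produces a note-on followed shortly by a note-off, which is the pair of messages a mapped button in Live would receive.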

The Music at the Previous Stage

Transition Control

After completing all the musical content, I noticed an issue: although every part was continuously changing, the piece as a whole sounded too uniform because all the parts played simultaneously. I therefore restructured the music into the sections below, so that the instruments take turns coming to the fore.

1. +vibe +vox
2. +pad -vibe
3. +organ
4. +harp +DB
5. +saw +lead
6. -harp
7. -lead
8. -pad -DB -saw +harp
9. -vox
10. +pad
11. -harp
12. -pad -organ +vibe +vox
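To check which parts sound in each section, the plan above can be read cumulatively: "+" brings a part in, "-" takes it out, and everything else carries over from the previous section. That reading is my assumption about the notation, and the sketch below is only a way to verify the plan, not part of the Live or Max setup.

```python
from typing import List

# The twelve steps from the plan above, using the same part abbreviations.
SECTION_PLAN = [
    "+vibe +vox",
    "+pad -vibe",
    "+organ",
    "+harp +DB",
    "+saw +lead",
    "-harp",
    "-lead",
    "-pad -DB -saw +harp",
    "-vox",
    "+pad",
    "-harp",
    "-pad -organ +vibe +vox",
]


def active_parts_per_section(plan: List[str]) -> List[List[str]]:
    """Apply each step's +/- changes in order and record the active parts."""
    active = set()
    states = []
    for step in plan:
        for token in step.split():
            sign, name = token[0], token[1:]
            if sign == "+":
                active.add(name)
            elif sign == "-":
                active.discard(name)
            else:
                raise ValueError(f"unexpected token: {token!r}")
        states.append(sorted(active))
    return states


if __name__ == "__main__":
    for number, parts in enumerate(active_parts_per_section(SECTION_PLAN), start=1):
        print(f"{number:2d}: {', '.join(parts)}")
```

Under this reading, section 12 ends with the same two parts as section 1 (vibe and vox), so the sequence can loop back to the start without a jump.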

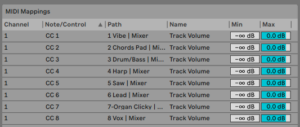

I controlled the fader for each part in Live via MIDI CC messages driven by fader movements in Max. I also set Max to change the fader values every 8 bars, so that the music transitions smoothly into the next section.
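As a rough illustration of this mechanism, the sketch below computes how long an 8-bar section lasts and builds a linear fader ramp as MIDI CC messages. The tempo, time signature, controller number, and ramp shape are all assumptions for the example; the post does not state them, and the Max patch may move the faders differently.

```python
from typing import List

CONTROL_CHANGE = 0xB0  # MIDI status byte for control change (channel in low 4 bits)


def section_seconds(bars: int = 8, beats_per_bar: int = 4, bpm: float = 60.0) -> float:
    """Length of one section in seconds; 4/4 time and 60 bpm are assumed."""
    return bars * beats_per_bar * 60.0 / bpm


def fader_ramp(start: int, end: int, steps: int) -> List[int]:
    """Linear ramp of steps + 1 CC values from start to end, clamped to 0..127."""
    if steps < 1:
        raise ValueError("steps must be at least 1")
    values = []
    for i in range(steps + 1):
        value = start + (end - start) * i / steps
        values.append(max(0, min(127, round(value))))
    return values


def cc_message(controller: int, value: int, channel: int = 0) -> bytes:
    """Raw three-byte control change message."""
    return bytes([CONTROL_CHANGE | channel, controller, value])


if __name__ == "__main__":
    # Hypothetical mapping: controller 20 drives the fader of one part.
    print(f"section length: {section_seconds():.1f} s")
    for value in fader_ramp(0, 100, 4):
        print(cc_message(20, value).hex(" "))
```

Spreading the ramp over several small CC steps, rather than jumping straight to the target value, is one way to make a part fade in or out across a section boundary.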