Project Concept Outline

Team members: Dominik Morc, Weichen Li, Yingxin Wu, Boya Chen, Jiaojiao Liang

Formulating the project idea:

Our objective is to present a collection of artworks that resemble video loops, in which moving images are played with background music and reactive sound effects. The work aims to showcase a series of illustrations of biomorphosis and hybridity that blend nature and digital entities, through interactive media presented in a screen-based exhibition. In other words, this is a digital exhibition that combines visual, audio, and interactive techniques.

Our main idea is to create art that imagines and depicts the evolution of plants, and of the wider ecological environment, under the impact of a changing climate. Through it we want to show the audience our perspective on environmental issues and emphasize the importance of the relationship between humans, other living things, and nature, in today’s context of interdisciplinary practice combining technology and creative art, which is popular in environmentalist discourse.

Ideas, inspirations and case studies:

Our pilot study involves the following aspects:

1. Exploration of arts, projects and practices with themes related to environment and human–nature relationships

Some scholars and artists argue that we need to be aware that AI art generators can hold back our creativity, because rather than supporting our imagination, AI replaces it. In some circumstances, human creative expression comes from a spiritual place, and the act of creation can arise from a flow state. This inspired us to focus our project on nature and the spirits of the living world.

https://thedruidsgarden.com/2022/10/16/ai-generated-arts-and-creative-expression/

A paper in the journal AI Magazine argued that a super-intelligent AI may one day destroy humanity.

https://greekreporter.com/2022/09/16/artificial-intelligence-annihilate-humankind/

This gave us the idea of exploring stories of environmental upheaval, and made us consider the prospect that one day more intelligent beings will change the world we live in. The depiction of nature and ecosystems in Hayao Miyazaki’s films particularly appealed to us: his stories weave environmentalism, nature and human well-being together with environmental degradation and the evolution of ecosystems.

https://hashchand.wordpress.com/2021/06/29/nausicaa-exploring-environmental-themes/

In Nausicaä of the Valley of the Wind (1984), surviving humans must coexist with the Toxic Jungle in a post-apocalyptic world. The story illustrates a wonderful natural environment: the mutated flora and fauna of the Toxic Jungle, which have taken over the world, are at the same time breaking down the contamination that humans caused to the earth.

Living Digital Forest https://www.designboom.com/art/teamlab-flower-forest-pace-gallery-beijing-10-05-2017/

A Forest Where Gods Live https://www.teamlab.art/e/mifuneyamarakuen/

Impermanent Flowers Floating in an Eternal Sea https://www.teamlab.art/e/farolsantander/

The three exhibitions above are by teamLab.

Vertigo plants an experiential urban forest at Centre Point: the installation mainly works with light bars, while speakers play natural sounds.

A project combining art and technology – ecological world building https://www.serpentinegalleries.org/art-and-ideas/ecological-world-building-from-science-fiction-to-virtual-reality/

2. Case studies of AI-assisted design, creative and production practices,

including concepts such as Generative Adversarial Networks (GANs, a class of machine learning frameworks in which a generator and a discriminator are trained against each other, so that the model learns to generate new examples resembling the original dataset);

Neural style transfer (NST, techniques for manipulating images and videos to create new art by adopting the appearance, or blending the styles, of other images; see http://vrart.wauwel.nl/);

Procedural content generation (PCG, the use of algorithms, including AI techniques, to generate game content such as levels and environments in a dynamic and unpredictable way);

Reinforcement learning (RL, a type of machine learning in which an agent learns by trial and error from rewards, for example to play complex games), and so on. One example is the adventure survival game No Man’s Sky, in which the player explores and survives on procedurally generated planets with generated atmospheres, flora and fauna. What inspired me in this game is the diversity of flora and fauna in the ecology of its wondrous worlds, all of whose configurations are generated by algorithms.
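Procedural generation of plant forms is classically illustrated with L-systems (Lindenmayer systems), a string-rewriting technique. The sketch below is a minimal stochastic L-system written under our own assumptions: the rules, the axiom and the function name `grow` are hypothetical, and we are not claiming this is how No Man’s Sky generates its flora. The point is that a seeded random generator makes each plant reproducible, while different seeds give different plants.

```python
import random

# Hypothetical stochastic rules: each symbol maps to (replacement, weight) options.
# F = grow a segment, + / - = turn, [ ] = start / end a branch.
# Symbols without a rule are copied unchanged.
RULES = {
    "F": [("F[+F]F[-F]F", 0.5), ("F[+F]F", 0.25), ("F[-F]F", 0.25)],
}


def grow(axiom: str, generations: int, seed: int) -> str:
    """Rewrite `axiom` for a number of generations using RULES.

    The same seed always produces the same plant; a different seed
    usually produces a different one.
    """
    rng = random.Random(seed)
    state = axiom
    for _ in range(generations):
        out = []
        for symbol in state:
            options = RULES.get(symbol)
            if options is None:
                out.append(symbol)  # constants such as + - [ ] are kept as-is
                continue
            replacements = [r for r, _ in options]
            weights = [w for _, w in options]
            out.append(rng.choices(replacements, weights=weights, k=1)[0])
        state = "".join(out)
    return state


if __name__ == "__main__":
    for seed in (1, 2):
        print(seed, grow("F", generations=2, seed=seed))
```

Drawing the resulting string with turtle graphics (for example in Processing, p5.js or Python’s `turtle` module) turns it into a branching plant; extra rules could stand for mutations under heat or radiation.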

3. Review of previous studies on generative art and computational creativity

It has been argued that generative art and AI-assisted creative practice face problems such as “the choice and specification of the underlying systems used to generate the artistic work (evaluating fitness according to aesthetic criteria);” and “how to assign fitness to individuals in an evolutionary population based on aesthetic or creative criteria.” McCormack (2008) describes ‘strange ontologies’, whereby the artist forgoes conventional ontological mappings between simulation and reality in artistic applications. In other words, in this mode of art-making we are no longer attempting to model reality, but rather using human creativity to establish new relationships and interactions between components. He also notes that in interactive evolutionary systems, aesthetic fitness evaluation is performed by users, who select objects on the basis of their subjective aesthetic preferences.

McCormack, J. (2008) “Evolutionary design in art,” Design by Evolution, pp. 97–99. Available at: https://doi.org/10.1007/978-3-540-74111-4_6.
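The interactive evolutionary loop McCormack describes, in which a person rather than a coded fitness function judges each candidate, can be sketched as follows. This is our own minimal illustration, not McCormack’s system: a genome is a hypothetical list of plant parameters in [0, 1], and the `choose` callback stands in for a viewer picking a favourite on screen.

```python
import random
from typing import Callable, List

Genome = List[float]  # hypothetical plant parameters in [0, 1], e.g. spikiness, hue, height


def mutate(genome: Genome, rng: random.Random, amount: float = 0.1) -> Genome:
    """Return a copy with each gene nudged by Gaussian noise, clamped to [0, 1]."""
    return [min(1.0, max(0.0, g + rng.gauss(0.0, amount))) for g in genome]


def evolve(
    parent: Genome,
    choose: Callable[[List[Genome]], int],
    generations: int,
    brood: int = 4,
    seed: int = 0,
) -> Genome:
    """Interactive evolution: the user's choice replaces a fitness function.

    Each generation shows `brood` mutated offspring; `choose` returns the
    index of the one the viewer prefers, which becomes the next parent.
    """
    rng = random.Random(seed)
    for _ in range(generations):
        offspring = [mutate(parent, rng) for _ in range(brood)]
        parent = offspring[choose(offspring)]
    return parent


if __name__ == "__main__":
    # Stand-in for a human viewer: always pick the "spikiest" offspring (gene 0).
    def prefer_spiky(candidates: List[Genome]) -> int:
        return max(range(len(candidates)), key=lambda i: candidates[i][0])

    print(evolve([0.2, 0.5, 0.5], prefer_spiky, generations=10))
```

In the exhibition, `choose` would be replaced by a click or touch on one of the displayed variants, so the viewer’s taste drives the plant’s evolution.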

The following elements can characterise the aesthetics of generative art:

- Mathematical models & computational processes (employing mathematical models to generate visual patterns and forms and to transform visual elements). Case: Mitjanit’s (2017) work blending art and mathematics. “Creating artworks with basic geometry and fractals…using randomness, physics, autonomous systems, data or interaction…to create autonomous systems that make the essence of nature’s beauty emerge…”

- Randomness (unexpected results and variability in the output): for example, a conventionally animated snowfall plays out the same way every time it is shown. When developed by a generative process, however, it might take on a distinct shape each time it is run (Ferraro, 2021).

- Collaboration between artist and algorithm

This video suggests that, in art and creative work, AI is more likely to emerge as a collaborator than as a competitor to people (see https://youtu.be/cgYpMYMhzXI).

A study of AI assistance in design fiction creation examines cases of generating text from “prompts” (prompt programming). The authors do not propose making the AI perfect as a direct solution to the incoherence of AI-generated text. Their results show that the AI-assisted tool had little impact on the quality of the fiction, which depended mostly on the users’ experience of the creative process, especially its divergent phase: “If AI-generated texts are coherent and flawless, human writers might be directly quoting rather than mobilizing their own reflectiveness and creativity to build further upon the inspiration from the AI-generated texts” (Wu, Yu & An, 2022).

Wu, Y., Yu, Y. and An, P. (2022) Dancing with the unexpected and beyond: The use of AI assistance in Design Fiction Creation, arXiv.org. Computer Science. Available at: https://arxiv.org/abs/2210.00829 (Accessed: February 11, 2023).
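The snowfall example under ‘Randomness’ above can be made concrete. A minimal sketch, assuming hypothetical `snowfall` and `step` functions: a fixed seed reproduces exactly one version, like a conventional animation, while omitting the seed gives a new variation on every run.

```python
import random
from typing import List, Optional, Tuple

Flake = Tuple[float, float, float]  # (x, y, radius) in pixels


def snowfall(n: int, width: float, height: float, seed: Optional[int] = None) -> List[Flake]:
    """Scatter n snowflakes over a width x height canvas.

    With seed=None the generator is seeded from the operating system, so every
    run differs; an integer seed makes the layout repeatable.
    """
    rng = random.Random(seed)
    return [
        (rng.uniform(0, width), rng.uniform(0, height), rng.uniform(1.0, 4.0))
        for _ in range(n)
    ]


def step(flakes: List[Flake], width: float, height: float, wind: float = 0.5) -> List[Flake]:
    """Advance one frame: larger flakes fall faster; flakes wrap around the canvas."""
    return [((x + wind) % width, (y + r) % height, r) for x, y, r in flakes]
```

Calling `step` once per frame animates the snow; the variability lives entirely in how the initial layout is seeded.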

4. Survey of openly available AI systems and tools that could help during the development of the project

DALL·E 2 – OpenAI (image)

ChatGPT (searching, text-based prompts)

Midjourney (stylised picture-making)

Prezi (can be used to build a web/interactive interface)

SuperCollider, Pure Data, Max/MSP (programming environments for audio synthesis and algorithmic composition)

X Degrees of Separation (https://artsexperiments.withgoogle.com/xdegrees) uses machine learning algorithms and the Google Arts & Culture database to find a chain of visually related works between any two artefacts or paintings. What I find interesting about this tool is that if we prefer a certain visual style, or want to draw on a certain type of painting, it can help us find artworks that fall somewhere between two chosen visual styles.

More can be seen at: https://experiments.withgoogle.com/collection/arts-culture
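The idea of finding a work ‘in between’ two styles can be illustrated with feature vectors. This is a simplified sketch under our own assumptions, not how X Degrees of Separation is implemented: each artwork is described by a hypothetical 2-D feature vector (a real system would use image embeddings from a neural network), and we return the work nearest the midpoint of the two chosen ones.

```python
import math
from typing import Dict, Sequence

# Hypothetical 2-D style features (e.g. "softness", "saturation") for a few works.
FEATURES: Dict[str, Sequence[float]] = {
    "ink_landscape": (0.0, 0.0),
    "neon_poster": (1.0, 1.0),
    "botanical_print": (0.4, 0.6),
    "glitch_collage": (0.9, 0.2),
}


def closest_between(a: str, b: str, features: Dict[str, Sequence[float]]) -> str:
    """Return the work (other than a and b) nearest the midpoint of a and b."""
    mid = [(x + y) / 2 for x, y in zip(features[a], features[b])]
    candidates = [name for name in features if name not in (a, b)]
    if not candidates:
        raise ValueError("need at least one other artwork")
    return min(candidates, key=lambda name: math.dist(features[name], mid))


if __name__ == "__main__":
    print(closest_between("ink_landscape", "neon_poster", FEATURES))  # botanical_print
```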

Amaravati: the British Museum’s collaboration with Google’s Creative Lab, an augmented-environment project in a museum exhibition. In the introduction video, visitors use their phones like remote controls to hover over and interact with elements of the exhibition.

This is an example of displaying multimedia work and enabling interaction if we are going to create something with installations, screens and projectors.

AI art that uses machine intelligence and algorithms to create visualizations: How This Guy Uses AI to Create Art | Obsessed | WIRED

https://www.youtube.com/watch?v=I-EIVlHvHRM

Initial plan & development process design:

- Designing the concept, background & storyboard of the plants → selecting the plants to be designed → investigating the plants’ geographical, biological and cultural background

- Collecting materials → capturing photos, footage and audio → sorting original materials

- Formulating the visual style → image algorithms/AI image-blending tools/style transfer/working by hand → outlining the concept images by hand → rendering images with AI → iteration…

- Designing the landscape & environment → geographical/geological conditions → climate conditions → ecological conditions

- Designing the UI & UX → creating a flow chart or tree diagram in the interface to show the plant’s appearance at each stage, and enabling the viewer to control the playback of the exhibition

- Exhibition planning → projector & speaker
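The tree diagram in the UI & UX step could be backed by a small data structure in which each node is a stage of the plant and each branch is an environmental outcome. A minimal sketch with hypothetical stage names and clip files: `playback_path` returns the clips to play, in order, when the viewer picks an end state.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Stage:
    """One node in a hypothetical plant-evolution tree shown in the interface."""
    name: str
    clip: str  # hypothetical video or image file for this stage
    children: List["Stage"] = field(default_factory=list)


def playback_path(root: Stage, target: str) -> Optional[List[Stage]]:
    """Return the stages from root down to `target` (depth-first), or None.

    Playing these clips in order shows one branch of the plant's evolution,
    from its original form to the outcome the viewer chose.
    """
    if root.name == target:
        return [root]
    for child in root.children:
        path = playback_path(child, target)
        if path is not None:
            return [root] + path
    return None


if __name__ == "__main__":
    moss = Stage("moss", "moss.mp4", [
        Stage("radiation", "moss_radiation.mp4", [Stage("neon", "moss_neon.mp4")]),
        Stage("heat", "moss_heat.mp4", [Stage("thick_cuticle", "moss_cuticle.mp4")]),
    ])
    print([s.clip for s in playback_path(moss, "thick_cuticle")])
```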

Prototype interactive touchscreen/desktop/tablet/phone application

https://prezi.com/view/qvtX0GwjkgblZsC7aMt6/ + video to showcase

Equipment needed:

Budget use:

Prototype:

Jiaojiao:

First, I collected information about bryophytes, learned about the different forms they take in nature, and chose moss flowering as the initial form. Then I used ChatGPT to ask what appearance bryophytes might evolve in different environments after the ecological environment had been destroyed. According to the background the AI provided, mosses could evolve to withstand the extreme conditions of a post-apocalyptic world, and mutated moss plants might have dark, eerie appearances, with leaves that glow like neon lights.

For example, plants exposed to nuclear radiation may develop genetic mutations that change their appearance and growth patterns: they may be stunted, or develop differently shaped leaves or other structures. Overall, the evolution of nuclear-contaminated bryophytes is likely to be a complex and ongoing process, as bryophytes continue to adapt to changing environments.

In the context of global heating, for example, some bryophytes may have adapted to extreme heat by developing a thick cuticle, which reduces water loss and helps prevent damage to plant tissues. However, more research is needed to fully understand the evolutionary adaptations of bryophytes to extreme heat.

In the sketch below, I chose a representative bryophyte and hand-drew its stages of development in an extreme environment.

Second, I used C4D (Cinema 4D) to experiment with how the appearance of moss plants changes across different stages.

Boya:

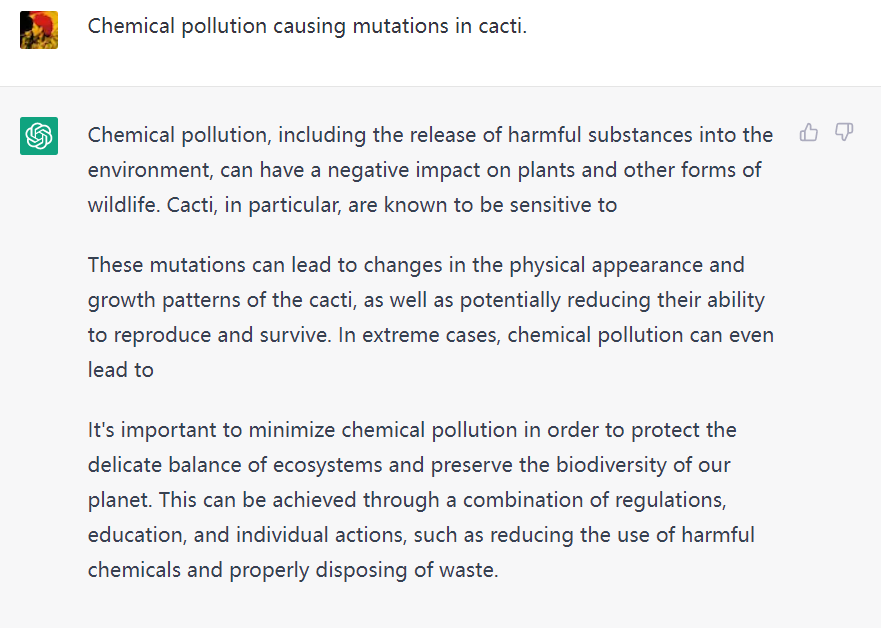

Design iterations and attempts to use artificial intelligence

I tried to use artificial intelligence to analyse the possible effects of environmental degradation on plants. I chose a cactus as the design object and set some basic directions for environmental deterioration such as lack of water, heat, chemical pollution, radiation etc. I used the AI to try and analyse how these environmental factors could affect the cactus and based on this I generated more design keywords.

I then tried putting the AI-generated descriptive keywords into different AI image-generation tools to test whether the resulting images would meet the design requirements.
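To compare tools fairly, the same keyword prompts can be generated systematically and pasted into each one. A minimal sketch, assuming a hypothetical prompt template and hypothetical keywords (in practice these would come from the AI analysis of each environmental stressor):

```python
from typing import Dict, List


def build_prompts(subject: str, stressors: Dict[str, List[str]], style: str) -> List[str]:
    """Combine a plant, each stressor's keywords and a style into text prompts.

    One prompt per (stressor, keyword) pair, so every image tool can be
    tested with exactly the same wording.
    """
    prompts = []
    for stressor, keywords in stressors.items():
        for keyword in keywords:
            prompts.append(f"{subject} mutated by {stressor}, {keyword}, {style}")
    return prompts


if __name__ == "__main__":
    stressors = {
        "drought": ["shrivelled ribs", "pale waxy skin"],
        "radiation": ["glowing spines"],
    }
    for prompt in build_prompts("a cactus", stressors, "botanical illustration"):
        print(prompt)
```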

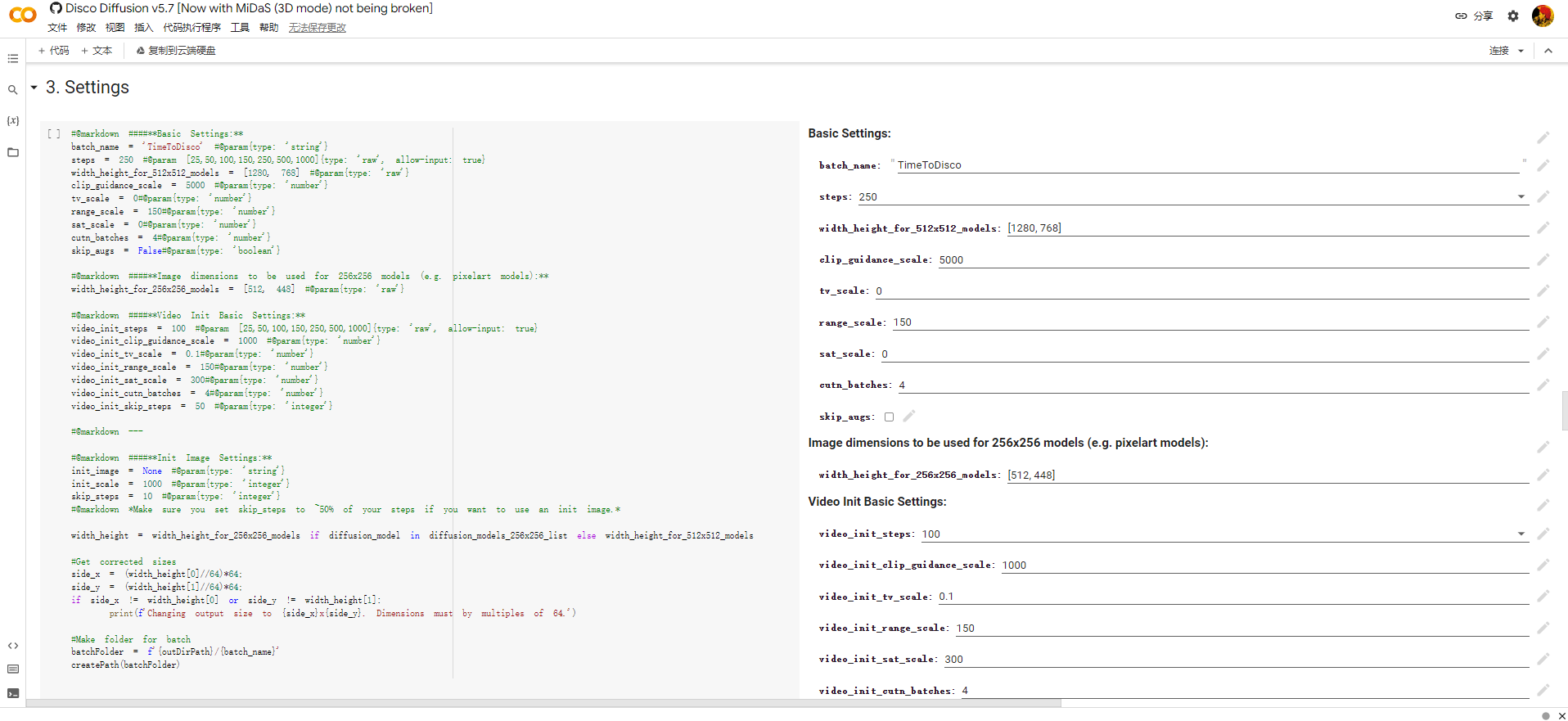

I tried generating images with Midjourney, Disco Diffusion, DALL-E and other AI tools.

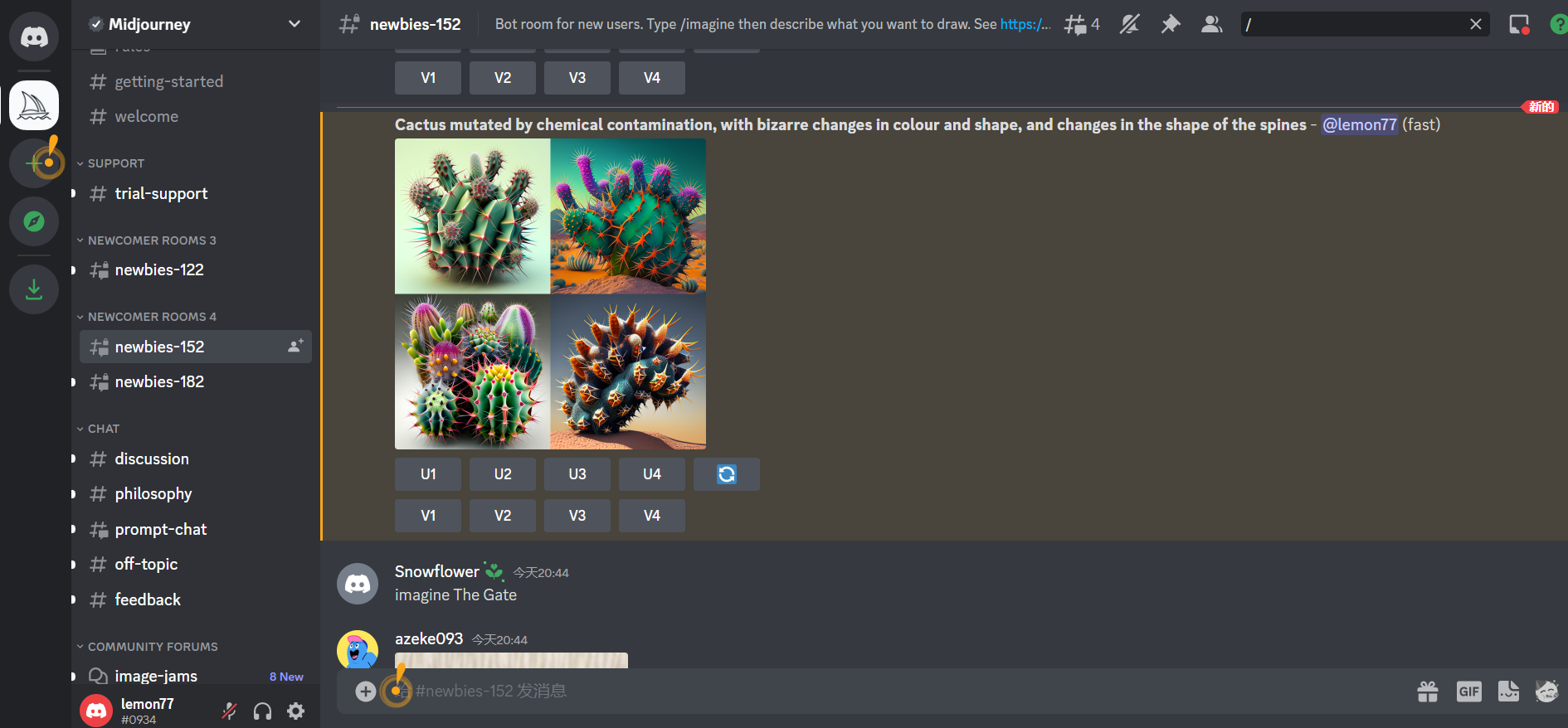

- Midjourney

Direct image generation from a text description

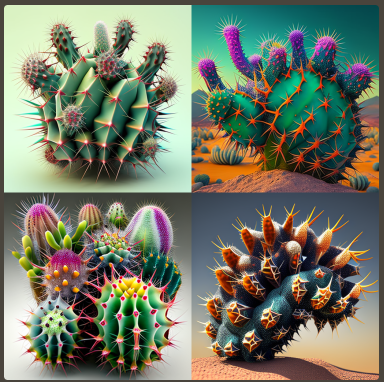

- Disco Diffusion

Images generated by running code.

- DALL-E

I also tried to use Processing and Runway to generate an animation of the cactus mutation process.

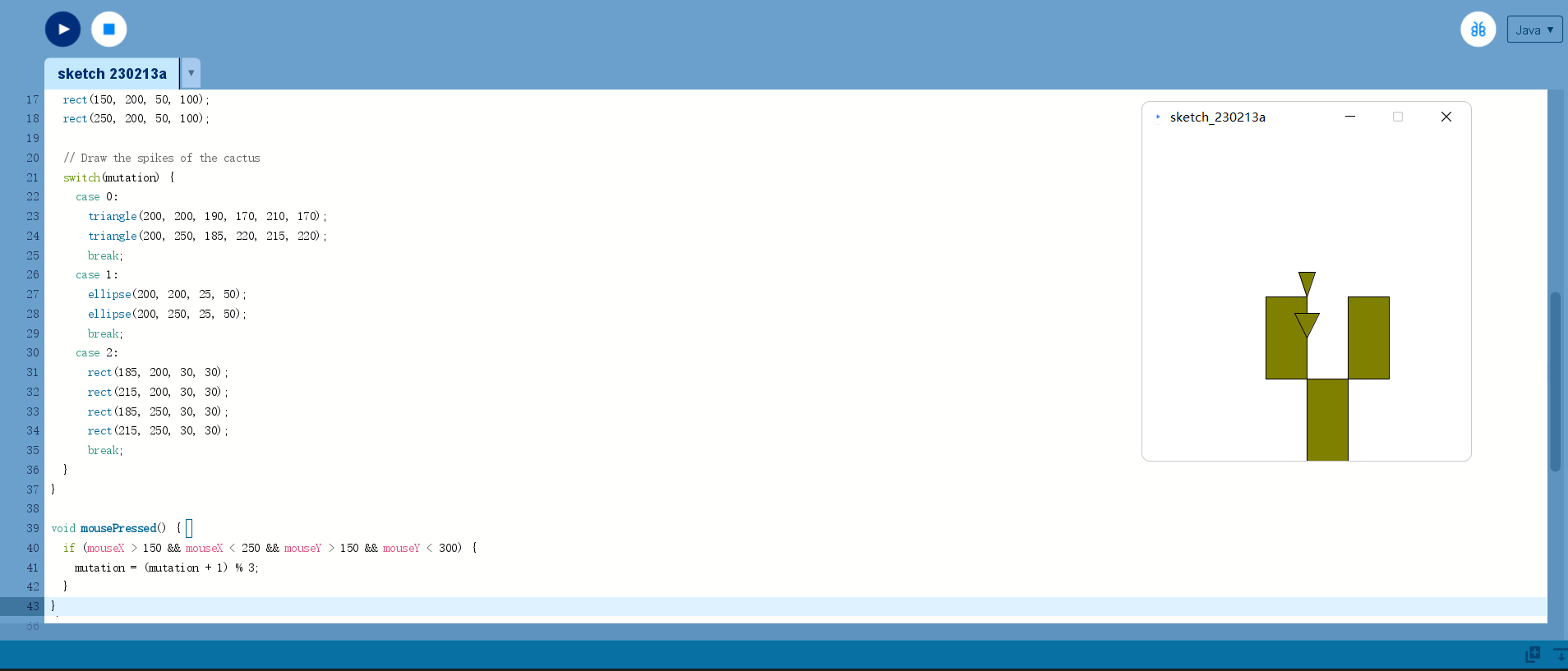

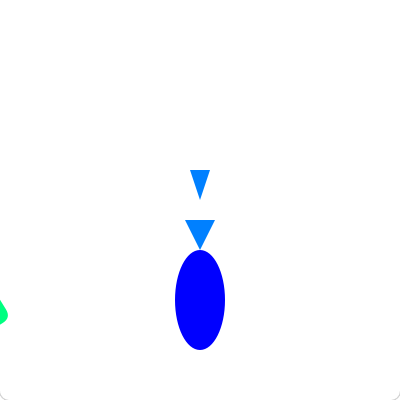

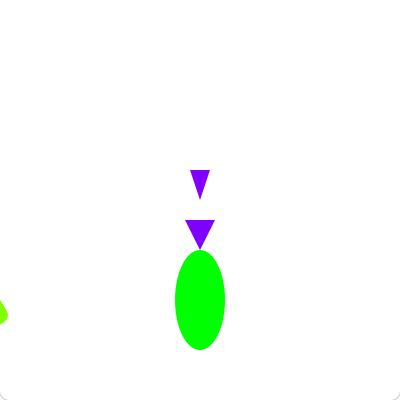

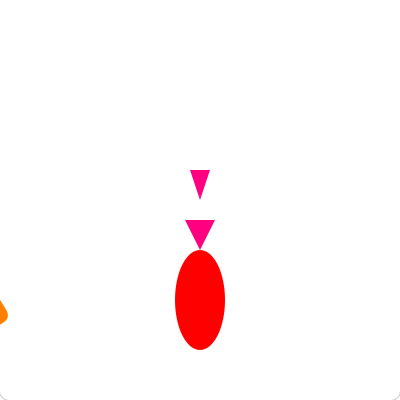

- Processing

I wrote an interactive cactus model in Processing in which the cactus undergoes different mutations when the user clicks.

Click to change the cactus into different forms.
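The click-to-mutate behaviour can be kept separate from the drawing code. The sketch below models only the state, in Python, with hypothetical form names; the original Processing sketch is not reproduced here. In Processing, the body of `on_click` would sit in the `mousePressed()` callback and `draw()` would render `current`.

```python
from typing import List


class CactusModel:
    """State for a click-driven cactus: each click advances to the next mutation."""

    def __init__(self, forms: List[str]) -> None:
        if not forms:
            raise ValueError("need at least one form")
        self.forms = forms
        self.index = 0

    @property
    def current(self) -> str:
        return self.forms[self.index]

    def on_click(self) -> str:
        """Advance to the next form, wrapping back to the first, and return it."""
        self.index = (self.index + 1) % len(self.forms)
        return self.current
```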


Audio:

Yingxin: “I used AI to create a series of audio clips, applied some machine learning to them and made some post-production changes to fit our style. But we felt that using only AI would not fully convey our creativity and ideas, so we will reflect our creativity throughout the final presentation, from the sound material to the finished product. First, the music style must fit the picture. Second, I want the interactivity of the music to come from the audience’s choices influencing its direction. For example, we could provide options in the video, such as choosing whether or not to cut down a tree. If the audience chooses to cut the tree, the plant’s form will be different, perhaps degraded, and the music may become gloomier or lower. Otherwise, the music may be livelier and more cheerful.

This is just a style demo.”
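One way to let the audience’s choice steer the music is to map each outcome to a set of musical parameters and crossfade between them. A minimal sketch: the parameter names and preset values are hypothetical, chosen only to express “gloomier, lower” versus “livelier, more cheerful”.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MusicStyle:
    tempo_bpm: int
    mode: str          # "major" tends to sound brighter, "minor" gloomier
    lowpass_hz: float  # lower cutoff = darker, more muffled timbre


# Hypothetical presets for the two outcomes of the "cut the tree?" choice.
STYLES = {
    "cut": MusicStyle(tempo_bpm=70, mode="minor", lowpass_hz=800.0),
    "keep": MusicStyle(tempo_bpm=110, mode="major", lowpass_hz=8000.0),
}


def blend(a: MusicStyle, b: MusicStyle, t: float) -> MusicStyle:
    """Crossfade from style a (t=0) to style b (t=1) during a transition.

    Tempo moves linearly; the filter cutoff moves geometrically, which
    sounds more even; the mode switches halfway.
    """
    t = min(1.0, max(0.0, t))
    return MusicStyle(
        tempo_bpm=round(a.tempo_bpm + (b.tempo_bpm - a.tempo_bpm) * t),
        mode=a.mode if t < 0.5 else b.mode,
        lowpass_hz=a.lowpass_hz * (b.lowpass_hz / a.lowpass_hz) ** t,
    )
```

The resulting values could then be sent to the audio engine (for example Max or Pure Data) to set tempo, key and filter.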

We will record lots of sounds and add effects.

This is a recording log:

https://docs.google.com/spreadsheets/d/1wbFCR_z72PRr57pVdQH0de06Fj1HYXHEKiSr81UX4EM/edit?usp=share_link

Audio Reactive:

Background:

- Interpolation or morph animation

Exploring the latent space.

With an interpolation algorithm, programs and AI can generate the transition frames between two pictures, which could replace manual keyframing.
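Latent-space interpolation can be sketched in a few lines. Assumption: each picture is represented by a latent vector (for example the input to a StyleGAN generator); producing images from the vectors with the network is not shown, and `lerp`, `slerp` and `transition` are our own illustrative names. Spherical interpolation (slerp) is a common choice for Gaussian latents because it keeps in-between vectors at a typical length, whereas straight-line interpolation shrinks them toward the centre.

```python
import math
from typing import List, Sequence

Vector = List[float]


def lerp(a: Sequence[float], b: Sequence[float], t: float) -> Vector:
    """Straight-line interpolation between two latent vectors."""
    return [(1 - t) * x + t * y for x, y in zip(a, b)]


def slerp(a: Sequence[float], b: Sequence[float], t: float) -> Vector:
    """Spherical interpolation along the arc between a and b.

    Falls back to lerp when the vectors are (anti)parallel, where the
    arc is undefined or numerically unstable.
    """
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    cos_omega = sum(x * y for x, y in zip(a, b)) / (norm_a * norm_b)
    omega = math.acos(max(-1.0, min(1.0, cos_omega)))
    s = math.sin(omega)
    if s < 1e-6:
        return lerp(a, b, t)
    wa = math.sin((1 - t) * omega) / s
    wb = math.sin(t * omega) / s
    return [wa * x + wb * y for x, y in zip(a, b)]


def transition(a: Sequence[float], b: Sequence[float], frames: int) -> List[Vector]:
    """Latent vectors for `frames` images from a to b, endpoints included."""
    if frames < 2:
        raise ValueError("need at least two frames")
    return [slerp(a, b, i / (frames - 1)) for i in range(frames)]
```

Each vector from `transition` would be passed through the generator to render one frame of the morph.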

Maybe we can try:

- Off-the-shelf neural networks from NVIDIA: StyleGAN has gone through three generations in the past two or three years, released faster than we can learn them.

https://github.com/NVlabs/stylegan

https://github.com/NVlabs/stylegan2-ada-pytorch

https://github.com/NVlabs/stylegan3

- There are a large number of pre-trained models: https://github.com/justinpinkney/awesome-pretrained-stylegan

- AI development environments:

- RunwayML

- Google Colab

- Related cloud services

Process:

Add audio-visual interaction in the generation process

- Basic ideas

The original version was not real-time.

- Read the audio file first, and extract the frequency, amplitude and other data of the sound using algorithms such as the FFT (fast Fourier transform).

- Feed these data as parameters into the process that generates the interpolation animation, adding to, subtracting from, multiplying or dividing the latent vectors, the truncation value and other values.

- Synthesize the generated animation (modulated by audio) with the audio file.

- Google Colab can be used.
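The offline steps above might look like this in Python, which could also run in Google Colab. A minimal, standard-library-only sketch under our own assumptions: the function names and the loudness mappings are hypothetical; the naive DFT stands in for an FFT (`np.abs(np.fft.rfft(frame))` in NumPy gives the same magnitudes much faster); and `truncate` follows the usual StyleGAN truncation trick without calling StyleGAN’s own code. Reading the audio file and rendering images from the latents are left out.

```python
import math
from typing import List, Sequence, Tuple


def magnitude_spectrum(frame: Sequence[float]) -> List[float]:
    """Naive DFT magnitudes for bins 0..N/2 (an FFT computes the same, faster)."""
    n = len(frame)
    mags = []
    for k in range(n // 2 + 1):
        re = sum(x * math.cos(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        im = -sum(x * math.sin(2 * math.pi * k * i / n) for i, x in enumerate(frame))
        mags.append(math.hypot(re, im))
    return mags


def frame_features(samples: Sequence[float], sample_rate: int, fps: int) -> List[Tuple[float, float]]:
    """(RMS amplitude, dominant frequency in Hz) for each video frame of audio."""
    hop = sample_rate // fps  # audio samples per video frame
    features = []
    for start in range(0, len(samples) - hop + 1, hop):
        frame = samples[start:start + hop]
        rms = math.sqrt(sum(x * x for x in frame) / hop)
        mags = magnitude_spectrum(frame)
        peak_bin = max(range(1, len(mags)), key=mags.__getitem__)  # skip the DC bin
        features.append((rms, peak_bin * sample_rate / hop))
    return features


def psi_from_loudness(rms_values: Sequence[float], psi_min: float = 0.5, psi_max: float = 1.0) -> List[float]:
    """Map loudness to a truncation value: silence -> psi_min, loudest -> psi_max."""
    loudest = max(rms_values) or 1.0
    return [psi_min + (psi_max - psi_min) * r / loudest for r in rms_values]


def truncate(w: Sequence[float], w_avg: Sequence[float], psi: float) -> List[float]:
    """Truncation trick: psi < 1 pulls latent w toward the average latent."""
    return [a + psi * (x - a) for x, a in zip(w, w_avg)]


def interpolation_progress(rms_values: Sequence[float]) -> List[float]:
    """Positions in [0, 1] along a morph that advance faster when the music is louder."""
    total = sum(rms_values) or 1.0
    progress, running = [], 0.0
    for r in rms_values:
        running += r
        progress.append(running / total)
    return progress
```

The positions from `interpolation_progress` can be fed to any latent interpolation function, so loud passages morph the plant quickly and quiet ones hold it almost still; the synthesized frames are then combined with the original audio file.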

At first, I used p5.js to interact with Runway (an AI machine learning tool) and coded an algorithm that automatically fills in frames based on the volume of the music.

Then I tried to move away from Runway, because we create the plants ourselves. I soon realized that p5.js had to rely on machine learning tools for this. I haven’t found an alternative yet; I have tried Max and believe it can do this. I wanted to use Google Colab tools at first, but I don’t know Python. I want to make an interactive video that offers the viewer a choice.

p5.js code: https://editor.p5js.org/ShinWu/sketches/EhbdxwUup

Max Project: https://drive.google.com/file/d/1lnRkFSIrbSu4f4HVRWL02StEU7DSXbgO/view?usp=share_link

[Real-time] generation + audio and video interaction

This needs a more powerful computer (mainly the graphics card).

Using OSC (Open Sound Control), audio data is sent from other software to the AI module to modulate image generation in real time. The AI side uses a Python OSC library. (We haven’t tried this yet!)
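Before choosing an OSC library, the message format itself is simple enough to sketch with the standard library. This hypothetical sender encodes a single-float OSC message following the OSC 1.0 layout (null-padded address string, type-tag string, big-endian float32) and sends it over UDP; the address `/audio/rms` and port 9000 are placeholders. For production use, the python-osc package provides a ready-made client and server.

```python
import socket
import struct


def osc_string(s: str) -> bytes:
    """OSC string: ASCII bytes, at least one null, padded to a multiple of 4."""
    data = s.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, value: float) -> bytes:
    """Encode an OSC message carrying one float32 argument."""
    return osc_string(address) + osc_string(",f") + struct.pack(">f", value)


def send_level(value: float, host: str = "127.0.0.1", port: int = 9000) -> None:
    """Send an audio level to the image-generation process over UDP."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(osc_message("/audio/rms", value), (host, port))
```

On the receiving side, the image-generation loop would read each message and use the value, for example, as the truncation or interpolation parameter for the next frame.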

– https://prezi.com/view/qvtX0GwjkgblZsC7aMt6/