While researching how to create morphological variation between our plants’ concept images, I found the Deforum plugin, which might achieve the desired effect of bringing the mutated plants to life. It lets us morph between text prompts and images using Stable Diffusion.

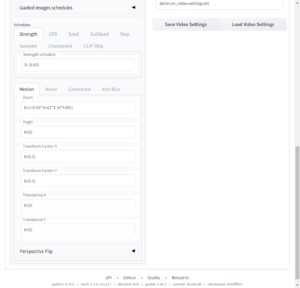

Deforum Plugin in the process of video generation

There are many parameters in Deforum.

I tried several approaches while making our plant mutation footage. At first, I used only text prompts. The result suggests that prompts alone do not reliably keep the plants in the picture: at some points in the video where they should appear, they are drawn incorrectly or disappear altogether. Both demos also use a camera zoom setting. These attempts showed the limits of using prompts alone to generate animation, and how hard it is to control the outcome of Stable Diffusion’s video generation.

More details in the process

In Stable Diffusion, the CFG (classifier-free guidance) scale parameter controls how closely the image generation process follows the text prompt (sometimes it creates unwanted elements from the prompt). The same setting applies in Deforum’s video generation workflow. In addition, there are Init, Guided Images and Hybrid Video settings that I needed to figure out in order to morph between images and prompts.
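As a rough illustration of what the CFG scale does, the sketch below applies the usual classifier-free guidance formula to toy numbers. The function name `apply_cfg` and the two short lists are made up for this example; they stand in for the model's noise predictions and are not taken from Stable Diffusion's or Deforum's actual code.

```python
def apply_cfg(uncond, cond, scale):
    """Blend the unconditional and prompt-conditioned predictions.

    scale = 0 ignores the prompt, scale = 1 uses the conditioned
    prediction as-is, and larger values push further toward the prompt.
    """
    return [u + scale * (c - u) for u, c in zip(uncond, cond)]


# Toy values standing in for the model's two noise predictions (assumed).
uncond = [0.0, 1.0]
cond = [1.0, 3.0]

for scale in (0.0, 1.0, 7.0):
    print(scale, apply_cfg(uncond, cond, scale))
```

The higher the scale, the further the result moves away from the unconditional prediction, which is one way to picture why high values can exaggerate prompt elements.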

Under the ‘Motion’ tab, there are settings for camera movement. I used them in many of my attempts to generate videos, but in the end I didn’t use them in our final video.

An early version of the video, generated from text prompts only

Example of incorrect settings that resulted in tiled pictures

In this sample, the output is slightly better because both prompts and guided images are used as keyframes. The process has managed to draw the plant right in the centre of the picture. However, there are still some misrepresentations in the generated video. For example, the morphing between the stages of the plant’s transformation is not smooth: the video switches from one picture to the next like a slideshow. Moreover, when I typed phrases such as “razor-sharp leaves” and “glowing branches” into the prompts, they were interpreted too literally; for example, artificial razor blades appear on the plant’s leaves.

The Zoom parameter is specified in the form `0:(x)`, where the number before the colon is the frame and x is the zoom value. If x is equal to `1`, the camera stays still. The further x is from 1, the faster the camera moves. When x is greater than 1, the camera zooms in, and when x is less than 1, the camera zooms out.

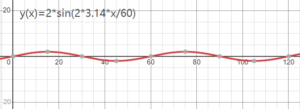

The Zoom setting here is `0: (1+2*sin(2*3.14*t/60))`. The effect in the output video is that the camera zooms in from frame 0 to frame 30 and zooms out from frame 30 to frame 60 (the camera movement speed drops to 0 at frame 30 and frame 60), and this movement repeats every 60 frames. The sample video below uses the same kind of function, but its movement is: zoom in, stop, zoom in again, stop again.
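To check a zoom expression before rendering, it can help to evaluate it at a few frames. The sketch below is plain Python, not Deforum code: `zoom` copies the expression above, and `direction` is a hypothetical helper that applies the rule described earlier (greater than 1 zooms in, less than 1 zooms out), with a small tolerance because 3.14 only approximates pi.

```python
import math


def zoom(t):
    # Same expression as the Zoom setting: 1 + 2*sin(2*3.14*t/60)
    return 1 + 2 * math.sin(2 * 3.14 * t / 60)


def direction(value, tol=0.01):
    # Hypothetical helper: classify a zoom value relative to 1.
    if value > 1 + tol:
        return "zoom in"
    if value < 1 - tol:
        return "zoom out"
    return "still"


for t in (0, 15, 30, 45, 60):
    print(t, round(zoom(t), 2), direction(zoom(t)))
```

Changing the `60` in the expression changes how many frames one full zoom-in and zoom-out cycle takes.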

Changing from one subject to another in the video

To get the intended effect of morphing between two subjects, I used another type of function. Below is the prompt I entered in the settings.

{

"0": "(cat:`where(cos(6.28*t/60)>0, 1.8*cos(6.28*t/60), 0.001)`), (dog:`where(cos(6.28*t/60)<0, -1.8*cos(6.28*t/60), 0.001)`) --neg (cat:`where(cos(6.28*t/60)<0, 1.8*cos(6.28*t/60), 0.001)`), (dog:`where(cos(6.28*t/60)>0, -1.8*cos(6.28*t/60), 0.001)`)"

}

The prompt function is intended to show a cat at the beginning of the video. As the video plays, the cat gradually morphs into something else. Since `cos(6.28*t/60)` repeats every 60 frames, the dog weight is highest around frame 30, where the cat has become a dog, and by frame 60 it has changed back to a cat.
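The weights in the positive part of the prompt (before `--neg`) can be checked in the same way. This is a minimal sketch in plain Python: `where`, `cat_weight` and `dog_weight` are helpers written for this example that mimic the expressions in the prompt; they are not part of Deforum.

```python
import math


def where(condition, if_true, if_false):
    # Stand-in for the where(...) used in the prompt expressions.
    return if_true if condition else if_false


def cat_weight(t):
    c = math.cos(6.28 * t / 60)
    return where(c > 0, 1.8 * c, 0.001)


def dog_weight(t):
    c = math.cos(6.28 * t / 60)
    return where(c < 0, -1.8 * c, 0.001)


for t in (0, 15, 30, 45, 60):
    print(t, round(cat_weight(t), 3), round(dog_weight(t), 3))
```

Printing the weights like this makes it easy to see at which frames each subject dominates before spending time on a render.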

Example of prompt and guided image input in a later video generation

The keyframes for the guided images and for the prompts should be evenly spaced, so that each keyframe is the same number of frames away from the previous and the next one, e.g.:

{

"0": "prompt A, prompt B, prompt C",

"30": "prompt D, prompt E, prompt F",

"60": "prompt G, prompt H, prompt I"

}
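Evenly spaced keyframes can also be generated rather than typed by hand. The sketch below assumes the prompts are pasted into Deforum as JSON in the format shown above; `build_schedule` is a hypothetical helper name, and the prompt strings are the same placeholders.

```python
import json


def build_schedule(prompts, spacing):
    """Place each prompt `spacing` frames after the previous one."""
    return {str(i * spacing): prompt for i, prompt in enumerate(prompts)}


prompts = [
    "prompt A, prompt B, prompt C",
    "prompt D, prompt E, prompt F",
    "prompt G, prompt H, prompt I",
]

schedule = build_schedule(prompts, spacing=30)
print(json.dumps(schedule, indent=4))
```

Using the same `spacing` value for the guided images keeps the image keyframes and the prompt keyframes lined up.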

References:

https://github.com/nateraw/stable-diffusion-videos

https://github.com/deforum-art/deforum-for-automatic1111-webui