Week 11 – Preparation for presentation!!

Troubleshooting Unity:

Just one day before the presentation, we had a very intense debugging session in Studio 4. Originally, I was scheduled to finalize the Wwise mix with Evan, but instead, we had to reconfigure the entire monitor setup for quad output and, most importantly, investigate why Unity was not allowing the sound to play in the required format.

We were all thinking very hard about the problem:

Eventually, Jules and Leo discovered that the cause was a known bug that has affected all Mac computers for over ten years. The final solution was to create a Windows build and run it from Leo’s laptop. The test we ran before the presentation was successful, confirming that all technical components were ready for use.

Final Wwise modifications:

Up until Week 9, our plan included buttons and other sensors triggering one-shot sound effects. However, after shifting to four reactive ambiences instead, many fully prepared sound effect containers in Wwise remained unused. Personally, I did not want to lose the time and effort invested in creating them, and I believed the final soundscape would be much richer if these sounds were included. Therefore, I decided to integrate them into the scenes where they were originally intended to be played.

All sound effect containers—whether random, sequence, or blend—were set to continuous loop playback rather than step trigger, allowing them to play consistently throughout each scene.

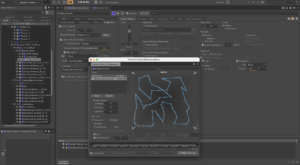

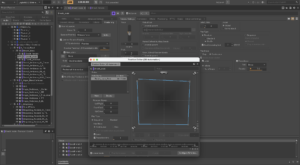

For example, the breath random containers (Female, Male, Reversed) were assigned different transition delay settings (with slight randomization), so that each container would trigger at a distinct periodic rate. Additionally, I implemented complex panning automation to move the sounds across all speaker directions, using varied transition timings and timeline configurations.

The human voice sounds in Depression, on the other hand, received a larger number of shorter panning paths with longer timelines:

The first technique works better for large-amplitude, fast-changing movements, while the second gave me more control over the panning direction.

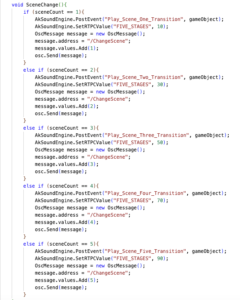
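The staggered timing of the breath containers described above can be illustrated outside Wwise with a small Python sketch. This is not how Wwise implements transition delays; it only mimics the idea of a base delay plus a small random offset. The function name `trigger_times` and all delay values are hypothetical, chosen purely for illustration.

```python
import random

def trigger_times(base_delay, jitter, duration, seed=None):
    """Return retrigger times (seconds) for one looping container.

    base_delay and jitter are hypothetical stand-ins for a transition
    delay and its randomization range; they are not values from the project.
    """
    rng = random.Random(seed)
    times = []
    t = 0.0
    while t < duration:
        times.append(t)
        # Next gap = base delay plus or minus a random offset
        t += base_delay + rng.uniform(-jitter, jitter)
    return times

# Assumed example settings: (base delay, jitter) per breath container
containers = {
    "Female": (4.0, 0.5),
    "Male": (5.5, 0.5),
    "Reversed": (7.0, 1.0),
}

schedule = {
    name: trigger_times(base, jitter, duration=30.0, seed=index)
    for index, (name, (base, jitter)) in enumerate(containers.items())
}

for name, times in schedule.items():
    print(name, [round(t, 2) for t in times])
```

Because each container has a different base delay, the three breath layers drift in and out of phase instead of repeating as one recognisable pattern.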

I also added five one-shot transition events, to be called from Unity whenever the scene changes:

We had enough time to test all components prior to the presentation, and I was also able to make last-minute mix adjustments in the Atrium, which greatly contributed to the overall balance of the soundscape.

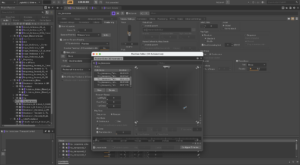

The RTPC values controlling the distance–loudness relationship for the ambience proximity sensors were also modified to fade out at approximately 65 (on a scale of 0–100), instead of the previously used value of 30. This change allowed for a smoother fade over a longer distance range.
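To show why moving the fade-out point from 30 to 65 gives a smoother result, here is a minimal Python sketch. It assumes a simple linear curve from full level at 0 to silence at the fade-out point; the actual RTPC curve in the Wwise project may have a different shape and works in dB, so the numbers are only indicative. The function name `ambience_gain` is hypothetical.

```python
def ambience_gain(distance, fade_end):
    """Linear gain (1.0 = full level, 0.0 = silent) for a proximity value.

    distance is the sensor value on the 0-100 scale; fade_end is the
    value at which the ambience has fully faded out. A linear curve is
    an assumption made for this sketch.
    """
    if fade_end <= 0:
        raise ValueError("fade_end must be positive")
    clamped = min(max(distance, 0.0), 100.0)
    return max(0.0, 1.0 - clamped / fade_end)

# Compare the old fade-out point (30) with the new one (65)
for distance in (0, 15, 30, 45, 65):
    old = ambience_gain(distance, fade_end=30)
    new = ambience_gain(distance, fade_end=65)
    print(f"distance {distance:>3}: old {old:.2f}  new {new:.2f}")
```

Under this assumption, the old setting is already silent at a value of 30, while the new setting still plays at a little over half level there and only reaches silence at 65.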

Reflection on the presentation:

I felt incredibly proud and happy when the lights, sound, and visuals finally came together. With more people on the sound team than on visuals, I felt a certain pressure for the audio to stand out. Even just hours before the presentation, I worried it wouldn’t feel rich enough or convey the intended emotions. But when everything was played together, I thought it turned out pretty amazing!! I’m quite pleased with the final mix, and adding those last-minute sound effects was definitely worth it – they added so much more meaning to the scenes. I particularly appreciated the use of ‘human’ sounds, like footsteps and breaths. Given more time, I would have expanded on those elements further.

Personally, one area I would still refine is the harmony between visuals and sound. We received the initial visual samples before beginning the audio design, but some were later changed, which led to slight mismatches – particularly noticeable in the third stage, Bargaining.

It was wonderful to see so many people attend and interact with the installation. Understandably, everyone I spoke to needed a brief introduction to the theme, as our interpretation of grief was quite abstract. Still, the feedback was overwhelmingly positive, and many were impressed by the interactive relationship between audio and visuals.

I learned a lot about teamwork during these months, especially how crucial it is to find everyone’s personal strengths. Despite a somewhat slow start in assigning roles, I think that by the last 2-3 weeks we all felt focused on what we needed to do and how to contribute as efficiently as possible – and this clearly showed in the success of the presentation day :))

I am also grateful to have learned so much about Unity and Wwise. In particular, I found it fascinating to explore Wwise in a context so different from its typical use in game sound design!! Furthermore, seeing Kyra and Isha’s incredible work in TouchDesigner definitely inspired me to learn the software myself so that I can create audio-visual content in the future.