When we first arrived at the paper mill, we were immediately struck by the vastness of the space. Seeing so many pieces of equipment arranged throughout the site, all covered in layers of dust, left a powerful impression on us. It made us start thinking: could we use TouchDesigner to recreate and convey that initial feeling of awe we experienced upon entering the site?

Now Factory

So from the moment we started learning TouchDesigner, we had a clear goal: to integrate visuals and sound in the software so that together they express the thoughts and emotions we wanted to convey. To work efficiently, we carried out a field survey of the paper mill and settled on the direction we wanted to pursue. Drawing on the imagery captured with a 3D scanner, we ultimately decided to structure the project around three themes: the past, the present, and the future.

Subject: After establishing the three themes of past, present, and future, we began brainstorming and creating freely, starting from the current state of the paper mill. The mill today is a place that has gradually been overlooked as the times have moved on. For the past theme, we wanted the visual content to convey the mill's former prosperity, so I think the key word of this theme is prosperity, and the interaction is carried out through relatively bright visual effects.

Interact with max’s voice

Image

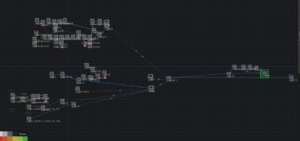

Screenshot of the complete project

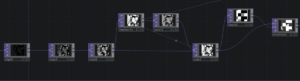

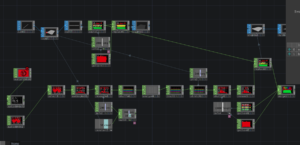

In the “Now” section of the project, audio data drives a geometric array in real time. Several TouchDesigner operators make this possible: line, copy, replace, and chopto. Together they translate sound frequencies into visual distortions, so the geometry reacts to the characteristics of the audio. The audio enters the network through the audiofilein node, from which a specific channel is selected for analysis. The audiospectrum node then extracts the spectral data, which is resampled so that the visual output stays smooth and follows the rhythm of the sound.
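The chain described above (audio in, spectrum, resample, geometry) can be sketched in plain Python to show the idea outside TouchDesigner. This is only an illustration under assumptions, not the network we built: the function names, the test tone, and the point count are all hypothetical, and a naive DFT stands in for the spectrum analysis.

```python
import cmath
import math

def magnitude_spectrum(samples):
    """Naive DFT magnitudes for the lower half of the bins (O(n^2), fine for small n)."""
    n = len(samples)
    mags = []
    for k in range(n // 2):
        acc = sum(samples[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        mags.append(abs(acc) / n)
    return mags

def resample(values, count):
    """Linearly interpolate `values` onto `count` evenly spaced positions."""
    if count == 1:
        return [values[0]]
    out = []
    for i in range(count):
        pos = i * (len(values) - 1) / (count - 1)
        lo = int(pos)
        hi = min(lo + 1, len(values) - 1)
        frac = pos - lo
        out.append(values[lo] * (1 - frac) + values[hi] * frac)
    return out

def displace_line(heights, gain=1.0):
    """Place one point per height along a unit-length line and lift it in y."""
    last = max(len(heights) - 1, 1)
    return [(i / last, gain * h) for i, h in enumerate(heights)]

# Hypothetical test signal: a pure tone with 4 cycles across 64 samples.
signal = [math.sin(2 * math.pi * 4 * t / 64) for t in range(64)]
spectrum = magnitude_spectrum(signal)   # 32 bins, energy concentrated in bin 4
heights = resample(spectrum, 16)        # one value per point on the line
points = displace_line(heights, gain=2.0)
```

Resampling to the number of points on the line is what lets each point read exactly one value, which is why the geometry moves coherently rather than jittering between neighbouring bins.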

Now

This section was developed through a creative collaboration between Li and TuTu. The background of the scene is particularly striking—it consists of a 3D scan of an abandoned paper mill, which adds a strong sense of place and texture. This scanned environment is rendered using the geo2 node along with a constant material, resulting in a visually immersive setting that enhances the overall depth and spatial atmosphere of the piece.

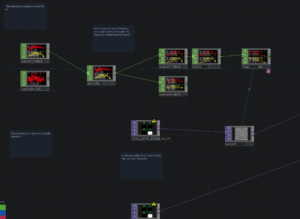

Future: Transitioning into the next section, the “Future” segment presents a different yet complementary visual approach, designed entirely by Shen. In this portion of the project, TouchDesigner is used to visualize audio input through a dynamically transforming 3D wireframe sphere. Real-time audio data is continually analyzed and mapped onto a sphere modulated by procedural noise, resulting in organic, flowing deformations that echo the intensity and tempo of the sound.
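As a rough illustration of a noise-modulated sphere driven by audio, the sketch below pushes each point of a sphere along its normal by a noise value scaled with the current audio level. It is a minimal sketch under assumptions: `pseudo_noise` is a simple sine-based stand-in, not TouchDesigner's noise, and the names, grid size, and `amount` parameter are hypothetical.

```python
import math

def sphere_points(rows, cols):
    """Points on a unit sphere on a latitude/longitude grid (poles excluded)."""
    pts = []
    for r in range(1, rows):
        theta = math.pi * r / rows
        for c in range(cols):
            phi = 2 * math.pi * c / cols
            pts.append((math.sin(theta) * math.cos(phi),
                        math.cos(theta),
                        math.sin(theta) * math.sin(phi)))
    return pts

def pseudo_noise(x, y, z, t):
    """Smooth stand-in for procedural noise; the result stays within [-1, 1]."""
    return (math.sin(3.1 * x + t)
            * math.sin(2.7 * y + 1.3 * t)
            * math.sin(3.7 * z + 0.7 * t))

def deform(points, audio_level, t, amount=0.5):
    """Scale each point along its normal by noise weighted with the audio level."""
    out = []
    for x, y, z in points:
        scale = 1.0 + amount * audio_level * pseudo_noise(x, y, z, t)
        out.append((x * scale, y * scale, z * scale))
    return out

sphere = sphere_points(8, 16)
quiet = deform(sphere, audio_level=0.0, t=1.0)  # silence leaves the sphere untouched
loud = deform(sphere, audio_level=1.0, t=1.0)   # louder input gives larger bulges
```

Because the audio level multiplies the noise rather than replacing it, the surface keeps its organic shape while its intensity follows the sound.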

To deepen the visual complexity and enhance motion continuity, a feedback loop is introduced, which creates delicate motion trails that follow the sphere’s surface dynamics. The final effect is a pulsating and reactive visual form that synchronizes with sound in real time, blending spatial geometry, kinetic movement, and rhythmic flow into a seamless, immersive audiovisual experience. This section not only showcases Shen’s technical skills in real-time processing, but also reflects a strong artistic sensitivity to the relationship between audio and visual expression.
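The trail effect of a feedback loop can be shown with a toy example: each new frame is combined with a faded copy of the previous output, so a moving bright spot leaves a decaying tail behind it. This is a sketch under assumptions, using a one-dimensional "frame" and a hypothetical `decay` value; the actual project works on rendered images.

```python
def feedback_step(previous, current, decay=0.5):
    """Keep the brighter of the new frame and the faded previous output."""
    return [max(c, p * decay) for c, p in zip(current, previous)]

width = 8
frame = [0.0] * width
for pos in range(4):
    # A single bright pixel moving one step to the right each frame.
    current = [1.0 if i == pos else 0.0 for i in range(width)]
    frame = feedback_step(frame, current, decay=0.5)

# frame is now [0.125, 0.25, 0.5, 1.0, 0.0, 0.0, 0.0, 0.0]
```

A decay closer to 1 gives longer trails, and a smaller value makes them fade quickly.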

Future