The first rehearsal was a crucial step in our stage performance project. It gave us the opportunity to test the venue setup and commission the equipment in a real-world environment.

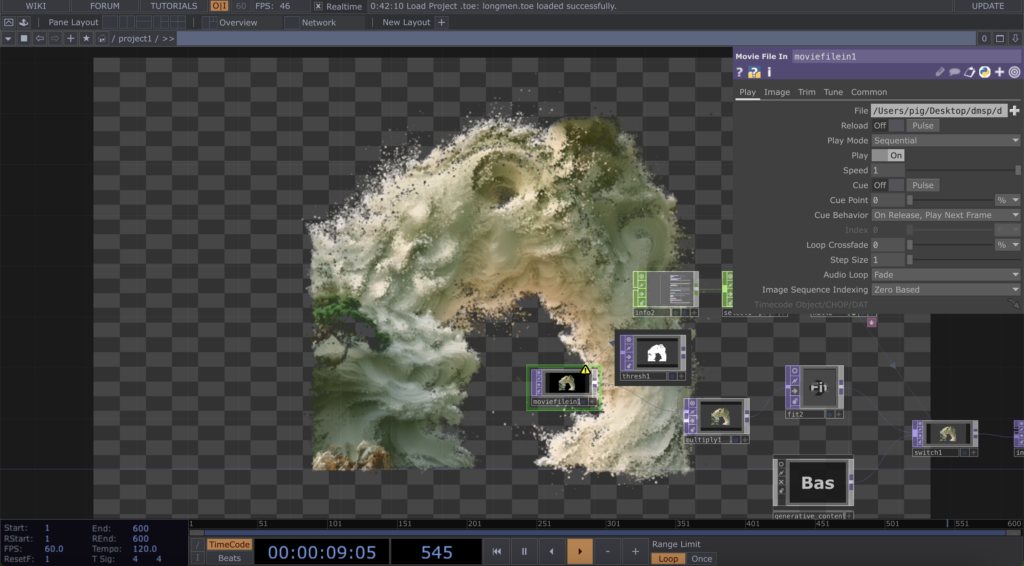

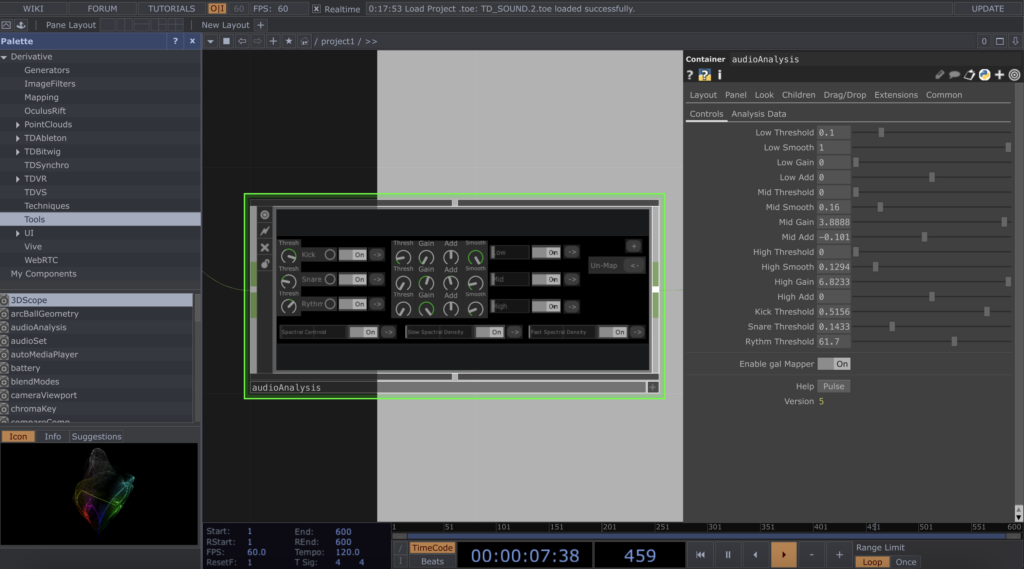

During the rehearsal, we ran several tests of the projector's image quality, the sensitivity of the sound-reactive light strips, and the lighting effect of the fish lights. We also explored different setup options, including wool wrapping, decorative lights, and foam paper.

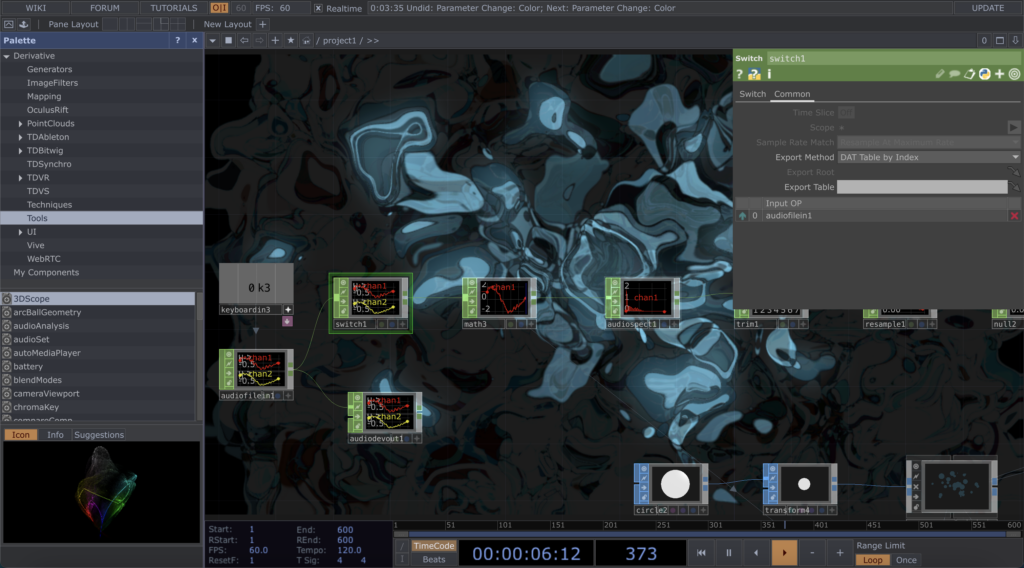

Equipment Commissioning

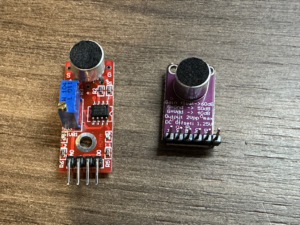

The projector was our main visual device, and we adjusted its angle and brightness during the rehearsal to get the best possible image. The sensitivity of the light strips, on the other hand, is crucial to keeping the light effects synchronised with the music. In testing, however, the strips had difficulty accurately picking up changes in sound at the venue.

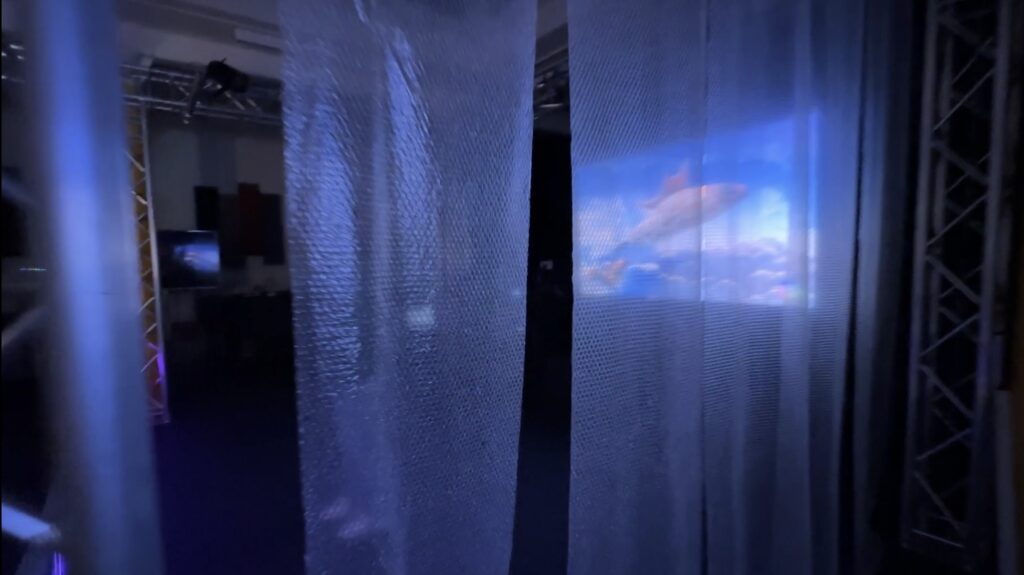

Venue Setup

For the venue setup, we first tried wrapping wool. The result did not meet our expectations: the combination of wool and lights looked unremarkable and did not create the visual effect we had hoped for. We also tried decorative lights, but again they did not achieve the desired effect.

Finally, we tried foam paper. The result was a pleasant surprise: it gave the venue a hazy feel, and we decided to keep this design. However, we found that the foam paper tends to end up crooked when we stick it on, and we plan to fix this before the official performance.