Processing

Background

In the second part of our performance, we aimed to portray the daughter's inner conflict and struggle to the audience. We therefore wanted to capture the actor's movements and project them into a 3D particle space that depicts the daughter's inner world. We eventually chose the Kinect V2 sensor for this purpose. Because the Kinect is an RGB-D (color plus depth) sensor, it can recognize a human body, calculate the motion data of each joint in real time based on the person's proportions, and capture the person's outline.

After selecting the sensor, we first tried to build the motion-particle presentation in TouchDesigner. However, because some team members had more experience with Processing, we decided to use Processing to present the Kinect motion data and achieve better visual effects.

Process

The Processing code has three main parts: particles generated from the Kinect capture, particle clustering and dispersion driven by changes in sound, and the movement of a red wireframe box.

First, the setup() function initializes the imported libraries and sets up the screen, the audio input, and the particle array.

1) Kinect

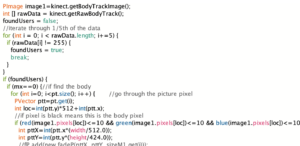

We used the KinectPV2 and PeasyCam (peasy) libraries to access the Kinect camera and to create an interactive camera controller. The Kinect data is accessed through the object created by “kinect = new KinectPV2(this)”. After initializing the Kinect sensor, the code creates arrays and ArrayLists to store particle coordinates, image data, and particle sizes.

An if statement then checks whether the Kinect detects any human body. If the actress is detected, the sketch iterates through the pixels of the body-tracking image and looks for pixels that belong to her body. For each pixel found, it creates a particle at the corresponding location and adds it to the ArrayList of particles. If no actress is detected, it generates new particles at random. Particle movement, dissipation, and iteration are also set up in this part of the code.
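The body-pixel scan described above can be sketched in plain Java (the language Processing is built on) so that it runs outside a sketch. This is an illustration under stated assumptions, not our original code: the body-tracking image is treated as an ARGB int array of width by height pixels, any pixel that differs from a hypothetical BACKGROUND color counts as part of a body, and only every step-th pixel is sampled. The class and method names (BodyParticles, fromBodyImage, random, forFrame) are hypothetical.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Random;

// Plain-Java sketch of the "body pixels -> particles" step (not the original
// Processing code). ASSUMPTIONS: the body-tracking image is an ARGB int[] of
// size width * height, every pixel that is not BACKGROUND belongs to a body,
// and only every `step`-th pixel in each direction is sampled.
final class BodyParticles {
    static final int BACKGROUND = 0xFFFFFFFF; // hypothetical background color (opaque white)

    /** One {x, y} particle position per sampled body pixel. */
    static List<float[]> fromBodyImage(int[] pixels, int width, int height, int step) {
        List<float[]> particles = new ArrayList<>();
        for (int y = 0; y < height; y += step) {
            for (int x = 0; x < width; x += step) {
                if (pixels[y * width + x] != BACKGROUND) {
                    particles.add(new float[] {x, y});
                }
            }
        }
        return particles;
    }

    /** Fallback when nobody is detected: n particles at random positions. */
    static List<float[]> random(int n, int width, int height, Random rng) {
        List<float[]> particles = new ArrayList<>();
        for (int i = 0; i < n; i++) {
            particles.add(new float[] {rng.nextFloat() * width, rng.nextFloat() * height});
        }
        return particles;
    }

    /** Mirrors the if statement: body pixels if a body is detected, random particles otherwise. */
    static List<float[]> forFrame(boolean bodyDetected, int[] pixels, int width, int height,
                                  int step, int n, Random rng) {
        return bodyDetected ? fromBodyImage(pixels, width, height, step)
                            : random(n, width, height, rng);
    }

    public static void main(String[] args) {
        int w = 4, h = 4;
        int[] img = new int[w * h];
        Arrays.fill(img, BACKGROUND);
        img[1 * w + 2] = 0xFF00FF00; // a single "body" pixel at (2, 1)
        List<float[]> ps = forFrame(true, img, w, h, 1, 10, new Random(1));
        System.out.println(ps.size() + " particle(s), first at (" + ps.get(0)[0] + ", " + ps.get(0)[1] + ")");
    }
}
```

In the usage example, a single marked pixel yields a single particle at (2, 1); with step = 2 the same pixel would be skipped, which is how a larger step trades particle density for less work per frame.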

2) Sound

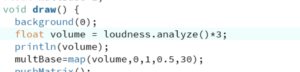

We also used the processing.sound library to capture audio input from the computer and extract its volume. The line “float volume = loudness.analyze()*5” scales the measured loudness by a factor of 5; by changing this multiplier, we can quickly adjust how strongly sound disperses the particles to suit the level of environmental noise during a live performance.
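The sound-driven clustering and dispersion can be illustrated with a similar plain-Java sketch. It assumes the loudness value is an RMS (root-mean-square) level, which is how processing.sound's Amplitude class describes its analysis, and it reuses the gain of 5 from the line above. The update rule itself (a hypothetical pull toward each particle's home position plus a random push scaled by volume and a hypothetical spread factor) is an assumption, not the rule in our sketch.

```java
import java.util.Arrays;
import java.util.Random;

// Plain-Java sketch of sound-driven clustering and dispersion (not the original
// sketch). ASSUMPTIONS: loudness is the RMS of an audio buffer with samples in
// [-1, 1]; GAIN = 5 mirrors the "*5" in the text; the pull/spread update rule
// and its parameter names are hypothetical.
final class SoundDispersion {
    static final float GAIN = 5f;

    /** Root-mean-square level of one buffer of audio samples. */
    static float rms(float[] samples) {
        if (samples.length == 0) return 0f;
        double sum = 0;
        for (float s : samples) sum += (double) s * s;
        return (float) Math.sqrt(sum / samples.length);
    }

    /**
     * One animation step for a particle, updating pos in place: ease toward its
     * home position by the fraction `pull` (clustering), then add a random
     * offset of at most volume * spread on each axis (dispersion).
     */
    static void step(float[] pos, float[] home, float volume, float pull, float spread, Random rng) {
        for (int i = 0; i < 2; i++) {
            pos[i] += (home[i] - pos[i]) * pull;
            pos[i] += (rng.nextFloat() * 2f - 1f) * volume * spread;
        }
    }

    public static void main(String[] args) {
        float[] loud = new float[256];
        Arrays.fill(loud, 0.2f);                       // constant test signal
        float volume = rms(loud) * GAIN;               // same role as loudness.analyze()*5
        float[] pos = {100f, 100f};
        float[] home = {100f, 100f};
        step(pos, home, volume, 0.1f, 20f, new Random(42));
        System.out.println("volume " + volume + ", particle moved to (" + pos[0] + ", " + pos[1] + ")");
    }
}
```

Raising GAIN has the same effect as raising the multiplier in our sketch: the same room noise produces a larger volume value and therefore a wider scatter.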

3) Red Box

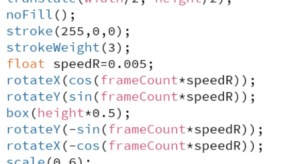

We used the P3D renderer to draw a rotating 3D cube in space. Its rotation is controlled by “rotateY(-sin(frameCount*speedR)); rotateX(-cos(frameCount*speedR));”, where speedR sets the rotation speed. The initial design used a white wireframe, but we later changed the stroke color to red. During live testing, we found that the wireframe was not clear enough, so we increased the strokeWeight() value to make the lines thicker and easier for the audience to see.
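A quick numeric check shows what the two rotation expressions do. Assuming the calls are made directly in draw(), Processing resets the transformation matrix at the start of each frame, so the angles are recomputed from frameCount every frame rather than accumulated: the cube swings back and forth within ±1 radian on each axis instead of spinning continuously, and speedR sets the tempo of that swing. The value speedR = 0.01 below is hypothetical.

```java
// Numeric check of the cube's rotation angles, assuming rotateY/rotateX are
// called directly in draw(), so the angles are recomputed from frameCount each
// frame rather than accumulated. speedR = 0.01 is a hypothetical value.
final class CubeRotation {
    /** Angle passed to rotateY(): -sin(frameCount * speedR), always within [-1, 1] rad. */
    static float angleY(int frameCount, float speedR) {
        return (float) -Math.sin(frameCount * speedR);
    }

    /** Angle passed to rotateX(): -cos(frameCount * speedR), always within [-1, 1] rad. */
    static float angleX(int frameCount, float speedR) {
        return (float) -Math.cos(frameCount * speedR);
    }

    public static void main(String[] args) {
        float speedR = 0.01f; // hypothetical; larger values make the cube swing faster
        for (int frame = 0; frame <= 600; frame += 150) {
            System.out.printf("frame %3d: rotateY %.3f rad, rotateX %.3f rad%n",
                    frame, angleY(frame, speedR), angleX(frame, speedR));
        }
    }
}
```

With speedR = 0.01, one full cycle takes 2π / 0.01 ≈ 628 frames, about 10.5 seconds at 60 frames per second.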

Max and Processing Connection

We intended to send the performer's sound data to Processing in real time via Max, so that it would drive the dispersion of the visual particle effect. The LAN was set up on the Arduino, and Max was connected through the same port.

Max acted as the sender: it sent the volume data, scaled to vary between 0 and 1, to the IP address of the computer running Processing.

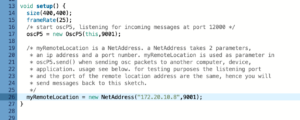

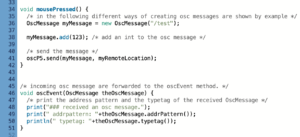
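Assuming the link used OSC (Open Sound Control) messages over UDP, as the OSC test code suggests, it may help to see what a single volume message looks like on the wire. The following is a minimal sketch of an OSC 1.0 message carrying one 32-bit float: a null-terminated address padded to a multiple of 4 bytes, the type tag string ",f" padded the same way, and the value in big-endian byte order. The address "/volume" and the class name OscFloat are hypothetical; the address our Max patch actually used may differ.

```java
import java.nio.ByteBuffer;
import java.nio.charset.StandardCharsets;

// Minimal OSC 1.0 encoder/decoder for a message with a single float32 argument,
// e.g. a volume level in [0, 1]. ASSUMPTIONS: ASCII addresses only, and only
// the ",f" type tag is handled; "/volume" is a hypothetical address.
final class OscFloat {
    /** OSC strings are null-terminated, then zero-padded to a multiple of 4 bytes. */
    private static int paddedLength(int rawLength) {
        return (rawLength + 4) & ~3; // +1 for the terminator, rounded up to 4
    }

    private static void putString(ByteBuffer buf, String s) {
        byte[] bytes = s.getBytes(StandardCharsets.US_ASCII);
        buf.put(bytes);
        for (int i = bytes.length; i < paddedLength(bytes.length); i++) buf.put((byte) 0);
    }

    private static String getString(ByteBuffer buf) {
        int start = buf.position();
        int end = start;
        while (buf.get(end) != 0) end++; // find the null terminator
        String s = new String(buf.array(), start, end - start, StandardCharsets.US_ASCII);
        buf.position(start + paddedLength(end - start)); // skip terminator and padding
        return s;
    }

    static byte[] encode(String address, float value) {
        int size = paddedLength(address.length()) + paddedLength(2) + 4;
        ByteBuffer buf = ByteBuffer.allocate(size); // big-endian by default, as OSC requires
        putString(buf, address);
        putString(buf, ",f");
        buf.putFloat(value);
        return buf.array();
    }

    /** Returns the float argument, or throws if the packet is not expectedAddress + ",f". */
    static float decode(byte[] packet, String expectedAddress) {
        ByteBuffer buf = ByteBuffer.wrap(packet);
        String address = getString(buf);
        String tags = getString(buf);
        if (!address.equals(expectedAddress) || !tags.equals(",f")) {
            throw new IllegalArgumentException("unexpected OSC message " + address + " " + tags);
        }
        return buf.getFloat();
    }

    public static void main(String[] args) {
        byte[] packet = encode("/volume", 0.75f);
        System.out.println(packet.length + " bytes, value " + decode(packet, "/volume"));
    }
}
```

Comparing bytes like these against what actually arrives (or does not arrive) at the receiving port is one way to tell an addressing mismatch from a network problem.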

On the Processing side, we tested data reception with example code from an OSC library, following relevant references we found online.

After changing the code to use our own IP address and receiving port, we ran sending and receiving at the same time.
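When a receiver gets nothing, one way to separate a code problem from a network problem is a loopback test on the receiving machine: bind a UDP socket, send a packet to it from the same machine, and wait with a timeout. The plain-Java sketch below does this. The class and method names are hypothetical, and binding port 0 simply asks the operating system for any free port; a real check would bind the port Processing listens on, while Processing itself is not holding that port.

```java
import java.io.IOException;
import java.io.UncheckedIOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;
import java.net.SocketException;
import java.net.SocketTimeoutException;
import java.nio.charset.StandardCharsets;
import java.util.Arrays;

// Loopback check for the receiving side (hypothetical helper, not our sketch):
// bind a UDP socket, send it a datagram from the same machine, and wait for it
// with a timeout. Port 0 asks the OS for any free port.
final class UdpLoopbackCheck {
    /** Binds a UDP socket on any free local port. */
    static DatagramSocket bindAnyPort() {
        try {
            return new DatagramSocket(0);
        } catch (SocketException e) {
            throw new UncheckedIOException(e);
        }
    }

    /** Waits up to timeoutMs for one datagram; returns its bytes, or null on timeout. */
    static byte[] receiveOnce(DatagramSocket socket, int timeoutMs) {
        byte[] buffer = new byte[1536];
        DatagramPacket packet = new DatagramPacket(buffer, buffer.length);
        try {
            socket.setSoTimeout(timeoutMs);
            socket.receive(packet);
        } catch (SocketTimeoutException e) {
            return null;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return Arrays.copyOf(packet.getData(), packet.getLength());
    }

    /** Sends payload to the loopback address on the given port from a temporary socket. */
    static void sendLocal(int port, byte[] payload) {
        try (DatagramSocket sender = new DatagramSocket()) {
            sender.send(new DatagramPacket(payload, payload.length,
                    InetAddress.getLoopbackAddress(), port));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }

    public static void main(String[] args) {
        try (DatagramSocket receiver = bindAnyPort()) {
            sendLocal(receiver.getLocalPort(), "123".getBytes(StandardCharsets.US_ASCII));
            byte[] got = receiveOnce(receiver, 1000);
            System.out.println(got == null ? "nothing received"
                    : "received " + new String(got, StandardCharsets.US_ASCII));
        }
    }
}
```

If a loopback test like this succeeds but packets from Max still never arrive, the sender's target IP address and port, or a firewall on the receiving computer, are reasonable things to check next.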

In the end, however, Processing did not receive any data from Max, although Max did receive the test message ‘123’ sent from Processing. We therefore abandoned this plan and continued to use environmental sound to affect the particles.