I anticipate a series of blog posts on this topic, as determining evaluation aims and KM goals takes time, reflection and discussion (e.g. What is feasible? What would be most useful to all interested parties? Who are the interested parties in the first place…?).

This blog post outlines the very first steps that we, as PHIRST Elevate, take to get the gist of what the evaluation aims and KM goals might be – even before formally meeting the evaluation commissioners in the local government!

The importance of the first few weeks

As part of PHIRST, we are conducting 10 evaluations of local government public health interventions over the next 5 years. In the first weeks of starting each evaluation, we aim to understand two main things:

- Details on the intervention: How does the intervention work (at least in theory)? What outcomes does the intervention hope to achieve? We use the information gathered here to develop a logic model.

- Details on the evaluation aims: What does the local government want to get out of the evaluation? Why do they need an evaluation now?

Sidenote: The phase of the evaluation in which we aim to answer these questions, and decide whether an evaluation is feasible and what it could look like, is called the Evaluability Assessment (EA) phase. For more information, and a comprehensive framework for EAs, you can look at this paper from one of the PHIRST co-applicants, Dr Larry Doi, and colleagues. It outlines how they planned an evaluation of Scotland’s Baby Box scheme. I found it very helpful when I was first getting my head around Evaluability Assessments. However, it is important to note that Evaluability Assessments can be adapted to match the budget and timeline of each evaluation project, and more often than not they will be much smaller in scale than the one discussed in the Baby Box paper.

Back to KM: For KM purposes, I am particularly interested in the answers from the second point (i.e. details on the evaluation aims). They will help me better understand the context and key stakeholders. Starting the KM process at the very start of the evaluation highlights an important point about KM: it is not an afterthought but integrated throughout the project. It is best practice to start thinking about KM from the outset of the evaluation.

So how do we start discussions around evaluation aims?

The PHIRST EA process takes several weeks, and involves meetings with local government leads and various sub-teams who may be involved in running or commissioning the intervention. For this blog post I will focus on a tiny sliver of the PHIRST EA process: how we prepare for the first meeting with the local government leads (when I say local government leads, I mean the people who have put forward the expression of interest for the evaluation).

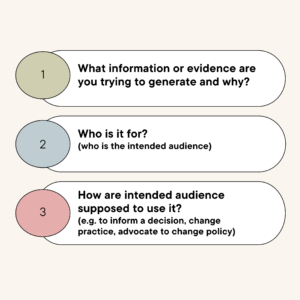

Even before we meet the local government for the official kick-off meeting, we send them three questions in advance. The “3 Questions” (developed by PHIRST Co-Principal Investigator Dr Paul Kelly as part of the Health and Well-being Pragmatic Evaluation Handbook) may seem straightforward at first, but they often lead to in-depth discussions between local government team members and intervention stakeholders, as everyone may have a slightly different idea of why an evaluation might be useful. Sending the questions in advance – hopefully – helps start these discussions early, giving enough time to – hopefully – reach a consensus by the end of the EA phase.

The 3 questions are:

So how do these 3 questions help set the scene for Knowledge Mobilisation?

Answers to these questions help get an idea of the following:

Right message: Knowing what information they might want to generate can be helpful in anticipating or planning for key messages. When the evaluation comes to a close, and we have lots of different findings, going back to the 3 Questions from the start will be helpful to extract key messages.

Right audience: The 3 Questions help us understand more about the audience. For instance, are they internal (within the organisation commissioning the evaluation) or external? What is their role, and what is their level of influence? The information from question 2 can help with some initial stakeholder mapping.

Right format: This can be inferred from understanding who the audience is (what is their level of expertise and involvement in the intervention) and their role and level of influence (how much time do they have to engage with the results of the evaluation). Knowing what the right format is for the findings will also depend a lot on the culture within the organisation commissioning the evaluation, so this will need to be explored further.

Right time: Is there a particular time when information is required? Should all information be presented at once, or should it be broken down into chunks across a certain timeframe? This will mainly depend on how the information is going to be used.

…which nicely links back to the different components of Knowledge Mobilisation:

Some final thoughts

We introduce these questions early, and we will revisit them throughout the EA phase and the rest of the evaluation. Doing so helps make sure we stay on the right track and that the local government is getting the information they need.

After getting an answer to these questions from the local government leads, we will test these questions, and some preliminary KM approaches, with a wider audience (e.g. partners, public health team, commissioners etc) in so-called EA workshops (more on this in the next blog post). I will also organise in-depth Knowledge Mobilisation sessions with key stakeholders commissioning the intervention, as well as with members of the public, to explore aspects around message/audience/format/timings. At this point, the answers to the 3 Questions will have already provided some important context to build on further.

As well as helping the KM side of things, the 3 Questions are also helpful for the wider evaluation team to start thinking about what type of evaluation might be most appropriate/feasible.