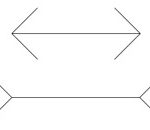

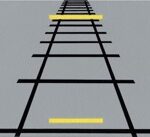

Several correspondences between the senses exist. For example, people can transfer information about shape between touch and vision, and associate the sound of spoken words with visual shapes (as in the Bouba/Kiki effect).

These correspondences are visible in crossmodal metaphors too, that is, when people use words and phrases related to one sense to describe an experience from another sense. For example, people label visual colours with words specific to the sense of hearing, calling them “loud” or “mute”, and describe a sound through the sense of touch, as with “a smooth voice”.

I have invited researchers connected with the Diverse-ability Interaction Lab to write this post on how people generate and interpret crossmodal metaphors. These researchers have identified seven association strategies. The Diverse-ability Interaction Lab aims to change the design of interactive technologies in ways that make them inclusive, both for people who are disabled and people who are non-disabled. This post is written by Tegan Roberts-Morgan, University of Bristol.

“Blue tastes like salt, it just does”. That is what one participant told me when I asked them what blue might taste like. We all make connections between our senses. A citrus smell may be sharp, someone may have a sweet voice, or red might remind you of anger. We call these cross-sensory metaphors, as they use words from one sense to describe something which is typically associated with another sense. As an HCI researcher in sensory technologies, I find this important: understanding how these metaphors are created can give us insight into the methods behind our sensory thinking, which we hope will help us design better sensory technologies.

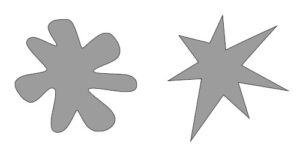

We use association strategies to represent the different methods people use to create connections between different senses. These strategies help us begin to understand why we make the cross-sensory metaphors that we do. If we can understand why the connections are made, then this can be leveraged in the design of technologies that support communication. To explore these strategies, we designed tasks that encourage participants to think in cross-sensory terms. For example, in Sense-O-Nary, participants are given an item related to a specific sense (e.g. the colour red, a pyramid, or a lemon scent) and asked to describe it using a sense that is not typically associated with it (e.g. what does red smell like, or what does a pyramid sound like?). They then share their cross-sensory metaphor with another team, who must guess which item is being described. This task, along with others we used, helped us to identify the seven different strategies people use when creating cross-sensory metaphors.

- Participants used personal stories and memories, and we labelled these as the personal connection strategy. Examples include a participant saying that the lemon scent reminded them of when they went “on holiday to the Mediterranean”, and responses such as “this reminds me of my friend”.

- Participants also created cross-sensory metaphors using the familiar experience strategy. This is when the metaphor uses a common object, emotion, texture, etc., for example “this smells like a banana smoothie”, “this reminds me of a marshmallow” and even “this tastes like soy sauce”.

- Some participants relied on basic primitives to make an association, which we labelled as the sensory features strategy. This includes words like “sharp”, “smooth”, “soft”, “bitter” and “sweet”.

- Participants also used the valence strategy, using negative or positive words in the description, for example “I like this”, “I love this” and “this would taste horrible”.

- Another approach was using vocalisation. This involved participants using a sound or noise as opposed to words to describe an item, like “this sounds like Krrrr and tssssss” or “boooom”, or when one child just screamed to describe what red may sound like.

- Some participants chose not to use the sense that we originally asked them to use; they would instead use words from a different sense. We called this grasping for another sense. In one study we asked participants to describe how red would taste and they said, “this tastes strong”.

- Finally, some participants did not only use their words to communicate their connection, but they also used their body. When they did this, they used the embodied action strategy. An example includes when one participant said green “feels like this” and then stroked the floor back and forth.

We believe that understanding and using these strategies can support designers, educators, and researchers in creating experiences that align with how people naturally relate the senses. For instance, we found that most adults used personal connections when describing how something would sound, so incorporating prompts or features that relate to a memory the person may have could support their communication.

We have found that age plays a vital role in which association strategies a person uses. Children tend to use familiar experiences the majority of the time, describing the item using something common. Young adults (18-25 years old) also used familiar experiences, but additionally used personal connections to create their metaphors. Finally, older adults (65-80 years old) used a much wider range of association strategies, with sensory features being used more often.

These association strategies can be applied in any context that involves multisensory interactions: educational devices that support children learning phonics by using shapes and audio, boards that help children explain how their pain feels by using scents, shapes, colours, etc., and accessible technology that supports communication between children who are sighted and children who are visually impaired. Ultimately, association strategies give us a window into how people construct meaning across their senses. By recognising and applying these strategies, we can potentially design experiences that resonate more deeply, communicate more clearly, and build richer, more inclusive multisensory worlds.

See our blog for Activities, especially 70-72.