Week 6 – Artificial Intelligence and Storytelling

This week we combined a discipline I have experience and interest in (storytelling/narrative) with our program’s overarching area of interest (A.I./future [digital] narratives). We’d already had an introduction to this course in the first semester, which built a lot of interest in the two-day intensives, and we’d had a sampling of the course the previous Friday, when we’d been invited to create a short comic alongside A.I. We were therefore all looking forward to working with the enigmatic Pavlos Andreadis.

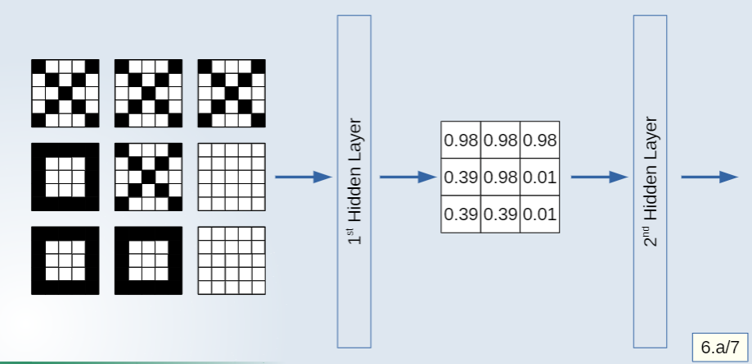

The intensives expanded on that introduction in detail. We began with a breakdown of some key characteristics of data gathering and ML training, which can be summarised as:

- The Importance of Data:

  - If it isn’t in the data, the model won’t learn it.

  - If it is in the data, the model might learn it.

  - You don’t know what’s in your data.

  - What the model learns is different from what you think it should learn or has learnt. Simply looking at its output can trick you into thinking otherwise (it’s not a person).

  - Your data isn’t an accurate reflection of the world:

    1. Data Distribution: e.g., photo angles or compositions that are not in the data.

    2. Data Representation: we need to choose how to represent the real-world thing, and that by definition excludes information.

- Typical Machine Learning Engineering Concepts:

  - Overtraining – Training too much/for too many epochs: our model gets better on the data it has seen at the expense of data it has not.

  - Overfitting – Trying to perfectly capture our data: our model starts learning things that are not there in order to explain small deviations in the data.

  - Overfitting and overtraining are often (mistakenly) treated as synonyms, since they usually happen together (or close enough).

  - Generalisation: How well does our model work on data it has never seen?

- In Conclusion:

  - What an A.I. model can do is heavily controlled by the decisions of the Engineer.

  - …and finding and selecting Data is crucial for M.L. (not in data = not in model).

  - The A.I. model does not understand the world, and it is not saying what it looks like it is saying: e.g., it is not saying ‘this apple is poisoned’; it is saying ‘if I had to say what this representation of an apple looked most similar to, I would say it looks like representations of apples that were labelled as “poisoned”.’

  - It’s not just what the model is; it’s also (mostly) about what we choose to do with it.
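To make the overfitting and generalisation points above more concrete for myself, here is a minimal sketch in Python. It is not from the intensives or the course notebooks: the toy dataset (noisy samples of a sine curve), the polynomial models and every name in it (`make_data`, `mse`, `results`) are my own assumptions, chosen only because they are small enough to run anywhere with NumPy.

```python
import numpy as np


def make_data(n, rng, noise=0.2):
    """Toy dataset (an assumption for illustration): noisy samples of sin(3x)."""
    x = rng.uniform(-1.0, 1.0, n)
    y = np.sin(3.0 * x) + rng.normal(0.0, noise, n)
    return x, y


def mse(coeffs, x, y):
    """Mean squared error of a fitted polynomial on the points (x, y)."""
    return float(np.mean((np.polyval(coeffs, x) - y) ** 2))


rng = np.random.default_rng(0)
x_train, y_train = make_data(15, rng)   # the data the model has seen
x_test, y_test = make_data(200, rng)    # data the model has never seen

results = {}
for degree in (1, 3, 12):
    # A higher degree gives the model more freedom to chase
    # small deviations (noise) in the training points.
    coeffs = np.polyfit(x_train, y_train, degree)
    results[degree] = (
        mse(coeffs, x_train, y_train),  # error on seen data
        mse(coeffs, x_test, y_test),    # error on unseen data (generalisation)
    )

for degree, (train_err, test_err) in results.items():
    print(f"degree {degree:2d}: train MSE {train_err:.4f}, test MSE {test_err:.4f}")
```

The training error can only go down as the polynomial degree goes up, because each more flexible model can reproduce everything the simpler one could. The error on unseen data has no such guarantee, and the gap between the two columns is one rough way of seeing how well (or badly) a model generalises.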

The intensives then led on to an individual project in which we had to come up with a storyline using A.I. This was centred on a prose narrative in the first intensive and a visual one in the second. Working in the notebooks, we fed prompts to the A.I. and created material in response to its output. This produced a general narrative outline, developed as a collaborative effort with the A.I.

We concluded both days by combining our respective narratives into one large, semi-cohesive story. My group used Dante’s circles of Hell as a principle to situate each of our six protagonists and their corresponding antagonists! It was a lot of fun working together to figure out a three-arc narrative in which each team member’s story could play a role. I also greatly enjoyed the image-generation element of the second day, as it created art from human-drawn images, something I’d only loosely dabbled in before. I ended up using an AI-generated image from the notebook as inspiration for another course on the program.

All in all, it was a very fast-paced but incredibly insightful two days! I am currently having fun with the individual narrative-generation exercise and hope to continue learning from it 🙂