Team members & Task allocation

- Xinyi Qian: Project Director

- Can Huang & Li Lyu: Interaction Designer, Vlog Maker

- Ashley Loera & Ruolin Liu: Sound Designer

- Hefan Zhang & Yixuan Zhang: Visual Designer

I. Project Brief

1. Theme: The Process of Breathing

This project aims to highlight the often-overlooked importance of breathing, transforming it into a sensory experience that fosters self-awareness, relaxation, and emotional balance. By visualizing and sonifying breath in real time, the installation encourages participants to reconnect with their own breathing process, promoting mindfulness and inner stability.

· Name: Inhale | Exhale

· Background & Purpose

In the wake of COVID-19, when the ability to breathe freely was challenged for many, this project serves as a gentle reminder of the value of breath, not just as a biological function but as a therapeutic tool for mental and physical well-being. The interactive nature of the installation offers a calm, immersive space where individuals can explore, observe, and regulate their breath in an intuitive and stress-free way.

By fostering a deeper connection between breath, body, and environment, this project contributes to mental health awareness, encourages stress reduction, and provides an innovative approach to self-regulation and relaxation through interactive art and sound.

2. Project Content: Interactive Breathing Installation

This interactive installation visualizes the breathing process by collecting real-time breathing data from visitors using sensors. The collected data generates dynamic visual and auditory effects, allowing visitors to perceive their breath in an immersive environment created through sound and projection.

- Breathing Guidance: Natural Approach

Participants enter the space and breathe naturally—no fixed rhythm or instructions to follow. The installation responds in real time, with visuals and sounds gently shifting based on breath patterns. Slow, deep breaths create softer lights and calming tones, while quicker breaths generate more dynamic effects. This intuitive feedback encourages relaxation and self-awareness without pressure, allowing visitors to explore their own breathing rhythm freely.

II. Concept Generation

1. Inspiration

The inspiration for this project comes from the concept of “Breath Observation”, a practice rooted in Eastern meditation traditions and widely adopted in modern psychological therapy. Breath observation is not just about noticing the rhythm of breathing; it is a method of enhancing awareness, concentration, and emotional balance by consciously engaging with one’s breath.

By focusing on natural breathing patterns, individuals can reduce stress, improve mindfulness, and cultivate a state of inner tranquillity. This principle aligns with cognitive behavioural therapy (CBT) techniques, which use breath regulation to promote emotional stability and relaxation.

In this project, breathing is transformed into a sensory experience, allowing participants to perceive, visualize, and interact with their breath through real-time audiovisual feedback.

Img1. Image source: sdominick/E+/Getty Images

2. Case Study

- Resonance Room: a project where the aperture changes in response to the audience’s breathing, offering an intuitive way to observe and interact with one’s breath.

- A live performance integrating human organs and plants: the interaction between the audience and the projected visuals, together with atmospheric sound design, creates a captivating and immersive experience.

To bring this concept to life, several key steps were outlined:

- Data Collection: Gathering breathing-related data, where variations in frequency and depth could represent different mental states.

- Data Processing: Using machine learning models to transform the collected data into visual representations.

- Possible Outputs: Various artistic expressions, including visual, auditory, and even tactile elements, could emerge from the processed breathing data.

- Exhibition Design: A structured exhibition space was envisioned, guiding audiences through different areas showcasing the outputs.

This initial case study laid the foundation for further exploration, with a focus on refining the technical approach.

3. Brainstorming

During our first meeting, we explored multiple ways to integrate breathing as an interactive medium, initially considering AI models, various outputs (TouchDesigner visuals, 3D printing, generative music), and a multi-zone exhibition layout.

Early Explorations:

- AI Integration → Initially considered for data processing and generative art, but later removed because its inclusion felt forced and unnecessary.

- Multi-Format Outputs → Explored real-time visualizations, physical forms (3D printing), and soundscapes as different expressions of breath.

- Exhibition Zoning → Proposed three separate exhibition zones, each highlighting a different sensory aspect (visual, auditory, and tactile).

After further discussion, we decided to:

- Remove AI and focus on a direct, real-time breath interaction for a more organic experience.

- Unify the exhibition instead of dividing it into separate zones, ensuring a seamless, multi-sensory experience.

- Evaluate Real-Time vs. Offline Rendering → Considered whether breath-driven visuals should be pre-rendered or generated in real-time; ultimately, we leaned toward real-time interaction for a more immersive and dynamic engagement.

These refinements allowed us to create a cohesive, sensory-rich installation, where visitors experience their breath through integrated visual, auditory, and tactile feedback rather than fragmented components.

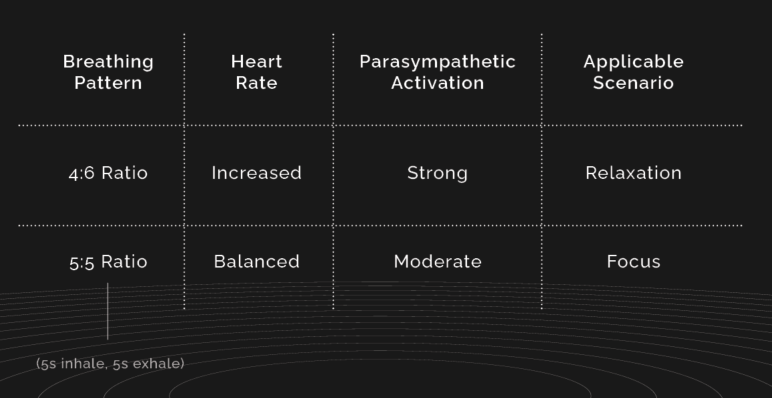

4. Literature Research

III. Prototype

1. Arduino & Sensor Test (Can & Li)

· Initial Idea

Detection and data collection of respiratory states through sensing technology

Interpretation of respiratory states through sensors

Scenario 1: Expression of respiratory rate and state (steady, fast, irregular, relaxed, tense) through acoustic frequency.

Scenario 2: Expression of respiratory rhythm through the recording of respiratory duration.

Scenario 3: Expression of inhalation and exhalation states by detecting the temperature difference between inhaled (cooler) and exhaled (warmer) air.

Visualisation: respiration in different states is presented by comparing the different values collected.

Therefore, we plan to use:

- Ultrasonic sensors

- Temperature and humidity sensors

- Infrared body sensors

These are our three initial test sensors. Later, if equipment allows, we will also use pressure sensors for further data testing and analysis.
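Scenario 1 could be prototyped by estimating breathing rate from the times at which breaths begin and mapping that rate onto an audible pitch. The Python sketch below assumes the sensor pipeline already yields breath-onset timestamps in seconds; the 6 to 30 breaths-per-minute input range and the 110 to 440 Hz output range are hypothetical values, not measurements from our tests.

```python
def breath_rate_bpm(onsets: list[float]) -> float:
    """Breaths per minute from breath-onset timestamps in seconds."""
    if len(onsets) < 2:
        raise ValueError("need at least two breath onsets")
    intervals = [b - a for a, b in zip(onsets, onsets[1:])]
    return 60.0 / (sum(intervals) / len(intervals))


def rate_to_frequency(bpm: float,
                      bpm_range: tuple[float, float] = (6.0, 30.0),
                      hz_range: tuple[float, float] = (110.0, 440.0)) -> float:
    """Map breathing rate to a pitch in Hz; both ranges are hypothetical.

    Rates outside bpm_range are clamped to its ends.
    """
    lo_bpm, hi_bpm = bpm_range
    lo_hz, hi_hz = hz_range
    t = (min(max(bpm, lo_bpm), hi_bpm) - lo_bpm) / (hi_bpm - lo_bpm)
    # Exponential interpolation: equal steps in rate give equal musical intervals.
    return lo_hz * (hi_hz / lo_hz) ** t


onsets = [0.0, 5.0, 10.0, 15.0]  # hypothetical: one breath every 5 s
bpm = breath_rate_bpm(onsets)
print(bpm, round(rate_to_frequency(bpm), 1))  # 12.0 155.6
```

An exponential rather than linear mapping is used because pitch is perceived logarithmically, so a calm, slow rate and a fast, tense rate end up a clear musical distance apart.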

· Practices

https://blogs.ed.ac.uk/dmsp-process25/2025/02/06/arduino-testing/
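For these tests, one simple arrangement is for the Arduino to print one comma-separated line per sample over serial, which a host script then parses before passing values on to Max or TouchDesigner. The line format `ms,distance_cm,temp_c,humidity_pct,pir` below is a hypothetical convention we have not fixed yet, and opening the serial port itself (for example with pySerial) is omitted so the sketch stays self-contained.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SensorSample:
    """One reading in the hypothetical Arduino line format."""
    t_ms: int            # Arduino millis() timestamp
    distance_cm: float   # ultrasonic sensor
    temp_c: float        # temperature and humidity sensor
    humidity_pct: float
    presence: bool       # infrared body (PIR) sensor, sent as 0 or 1


def parse_line(line: str) -> Optional[SensorSample]:
    """Parse 'ms,distance_cm,temp_c,humidity_pct,pir'; return None if malformed.

    Serial links often deliver partial or corrupted lines (e.g. right after
    the port opens), so bad input is skipped instead of raising an error.
    """
    parts = line.strip().split(",")
    if len(parts) != 5:
        return None
    try:
        return SensorSample(
            t_ms=int(parts[0]),
            distance_cm=float(parts[1]),
            temp_c=float(parts[2]),
            humidity_pct=float(parts[3]),
            presence=parts[4] == "1",
        )
    except ValueError:
        return None


print(parse_line("1520,12.4,31.2,68.0,1\r\n"))
print(parse_line("15,12.4,31"))  # truncated line -> None
```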

· Problems and Improvements

Temperature and Humidity Sensor DHT11: We plan to use the DHT11 temperature and humidity sensor to detect the temperature difference between inhaled (cooler) and exhaled (warmer) air. However, because of the DHT11’s limited accuracy and slow response time, this difference may not be detected clearly or stably.

Problems:

- Accuracy limitations:

The temperature accuracy of the DHT11 is only about ±1°C, so it cannot reliably detect the small temperature changes between inhaled and exhaled air.

- Slow response time:

The DHT11 updates its data only about every 1 to 2 seconds. Because the temperature of breath changes almost instantaneously, the DHT11 lags behind these changes or fails to register them at all.

- Influence of air movement:

The sensor measures the temperature of the surrounding air, and warm exhaled air disperses quickly, leading to unstable readings.

Improvements:

- The DHT22 (AM2302) may be a better choice for accuracy (±0.5°C), although it samples no faster than the DHT11 (at most once every 2 seconds). For a faster response to subtle temperature changes, NTC thermistors or MLX90614 infrared temperature sensors are worth testing.

- To reduce environmental disturbance, place the sensor in a small, confined space (e.g. inside a short duct) that limits air convection and channels exhaled air directly across the sensor.
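Whichever sensor we end up using, its readings still need to be turned into inhale and exhale phases. A minimal sketch, assuming a fast temperature sensor sampled at a fixed rate: each sample is compared with a slowly updating baseline, and two thresholds (hysteresis) keep small fluctuations from flipping the state. The 0.3°C and 0.1°C thresholds, the baseline rate `alpha`, and the 10 Hz sample rate are placeholder values to be tuned during testing.

```python
def classify_phases(temps: list[float], on_delta: float = 0.3,
                    off_delta: float = 0.1, alpha: float = 0.02) -> list[str]:
    """Label each temperature sample as 'inhale' or 'exhale'.

    A slow exponential moving average acts as the ambient baseline. Exhaled
    air is warmer, so the state switches to 'exhale' when a sample rises more
    than `on_delta` above the baseline, and back to 'inhale' only when it
    falls below baseline + `off_delta`. The gap between the two thresholds
    (hysteresis) stops sensor noise from flipping the state back and forth.
    """
    if not temps:
        return []
    labels: list[str] = []
    baseline = temps[0]
    state = "inhale"  # assumption: recording starts before the first exhale
    for t in temps:
        if state == "inhale" and t > baseline + on_delta:
            state = "exhale"
        elif state == "exhale" and t < baseline + off_delta:
            state = "inhale"
        labels.append(state)
        baseline += alpha * (t - baseline)  # update baseline after classifying
    return labels


def phase_durations(labels: list[str], sample_rate_hz: float) -> list[tuple[str, float]]:
    """Collapse consecutive identical labels into (phase, duration_in_seconds) runs."""
    runs: list[list] = []
    for label in labels:
        if runs and runs[-1][0] == label:
            runs[-1][1] += 1
        else:
            runs.append([label, 1])
    return [(label, count / sample_rate_hz) for label, count in runs]


# Hypothetical 10 Hz recording: 1 s ambient, 1 s warm exhale, 1 s ambient.
temps = [30.0] * 10 + [31.0] * 10 + [30.0] * 10
print(phase_durations(classify_phases(temps), sample_rate_hz=10.0))
# [('inhale', 1.0), ('exhale', 1.0), ('inhale', 1.0)]
```

Because the baseline keeps adapting, a very long exhale will slowly pull it upward; `alpha` should be small enough that the baseline follows room-temperature drift but not individual breaths.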

———————————————————

2. Sound Design (Ashley & Ruolin)

· Initial Idea

In our conceptualization of this installation, the sound design can be divided into three main components:

- Background Music: A stable soundscape, unaffected by participants’ breathing, that provides an immersive, meditative atmosphere. The approach may involve various arrangement techniques, including automated and randomized generation, a live performance, or a pre-recorded ensemble.

- Atmospheric Sound Effects Related to the Human Body and Meditation: Sounds will be pre-recorded or captured in real time through audio input. The audio input, collected by Ashley, will correspond to bodily states and breathing rhythms and will change dynamically in response to real-time audio data, mimicking and interpreting the physiological process of breathing. The atmospheric meditation music will be recorded, arranged, and interpreted by Ruolin.

- Sound Effects Synchronized with Visual Elements: These sounds are designed to complement the visual components of the installation and, like the atmospheric effects, will be influenced by participants’ breathing data, enhancing the interactive and immersive experience.

The installation may incorporate sensors such as Arduino-based temperature sensors, sound sensors, and various types of microphones to capture participants’ breathing data. These sensors will detect parameters such as:

– The duration of inhalation and exhalation, providing insight into breathing patterns and depth;

– The interval between breaths, capturing the natural flow of respiration;

– The temperature of exhaled air, offering individualized physiological data.

This data will be processed in real time within Max, dynamically shaping the sonic output of the installation. Elements such as reverb, frequency distribution, and timbral characteristics will be modulated based on participants’ breathing patterns, with these adjustments becoming perceptible after several breathing cycles. This approach aims to create a personalized and immersive auditory experience that responds organically to the presence and physiological state of each participant.
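One way to make the changes emerge only after several breathing cycles is to average each breath’s measurements over a sliding window before mapping them to effect parameters. The sketch below shows that logic in Python for clarity (in the installation it would live in Max); the window of five cycles, the parameter names `reverb_mix` and `lowpass_hz`, and all numeric ranges are hypothetical.

```python
from collections import deque
from dataclasses import dataclass


@dataclass
class BreathCycle:
    inhale_s: float   # duration of inhalation
    exhale_s: float   # duration of exhalation
    pause_s: float    # interval before the next breath


class CycleAveragedMapper:
    """Averages the last `window` breath cycles and maps them to sound parameters.

    Once the window is full, each new breath contributes only 1/window of the
    average, so the sound shifts gradually over several cycles.
    """

    def __init__(self, window: int = 5):
        self.cycles = deque(maxlen=window)  # oldest cycle drops out automatically

    def add(self, cycle: BreathCycle) -> dict[str, float]:
        self.cycles.append(cycle)
        mean_len = sum(c.inhale_s + c.exhale_s + c.pause_s for c in self.cycles) / len(self.cycles)
        # Hypothetical scale: 2 s cycles -> 0.0 (fast), 10 s cycles -> 1.0 (slow), clamped.
        slowness = min(max((mean_len - 2.0) / 8.0, 0.0), 1.0)
        return {
            "reverb_mix": 0.2 + 0.6 * slowness,        # slower breathing -> more reverb
            "lowpass_hz": 8000.0 - 6000.0 * slowness,  # slower breathing -> darker timbre
        }


mapper = CycleAveragedMapper(window=5)
print(mapper.add(BreathCycle(1.0, 1.0, 0.0)))  # {'reverb_mix': 0.2, 'lowpass_hz': 8000.0}
print(mapper.add(BreathCycle(4.0, 5.0, 1.0)))  # average now halfway: about 0.5 mix, 5000 Hz
```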

· Practices

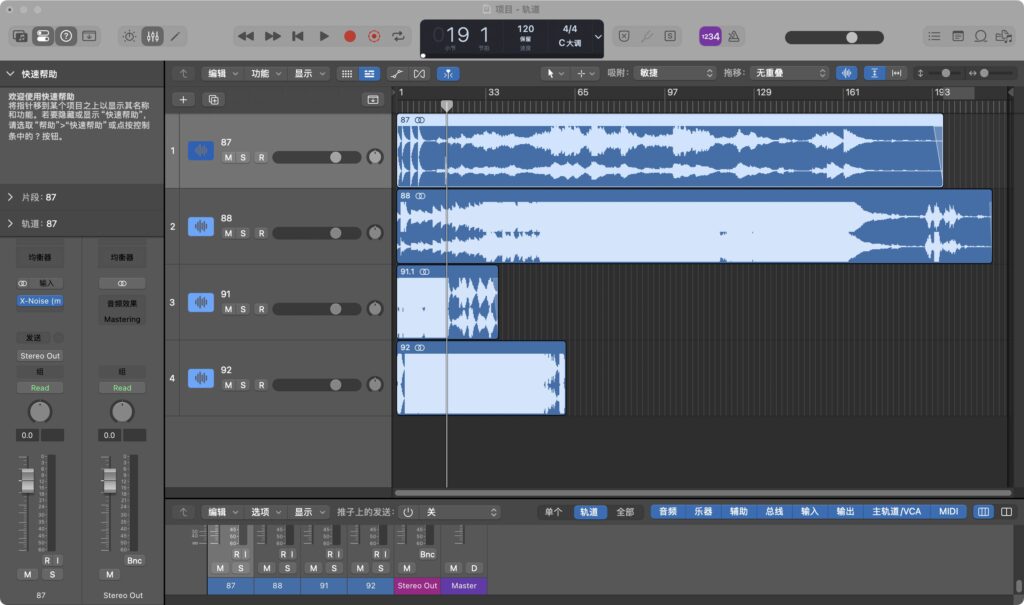

Director of Sound – Ruolin Liu: Based on our discussion, the goal of this installation is to guide participants to focus their attention on their breathing through interactive engagement. To support this objective, I have selected previously recorded meditation-related audio materials featuring instruments such as singing bowls, gongs, and shakers, which contribute to a stable and immersive soundscape. Here is the link to the samples:

Director of Sound – Ashley Loera: Based on our group discussions, part of this installation will involve real-time, interactive, abstract manipulation of sound that emulates the process of breathing. This abstract manipulation will be blended into the meditative music to bring the listener’s and viewer’s attention to the present moment. It will be achieved by processing the audio input in Max/MSP, alternating over time between various sound effects (including filter, reverb, delay, and frequency shift).

See the following blog post for an in-depth analysis of this approach and a list of references:

🎵https://blogs.ed.ac.uk/dmsp-process25/2025/02/11/sound-design-max-msp-approaches-ideas-and-sources-for-project-proposal-ashley-loera/🎵
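The idea of alternating between effects over time can be expressed as a schedule of wet levels: at any moment, one effect may be fading out while the next fades in. This Python sketch only computes those levels (in Max/MSP they would drive the wet/dry controls of each effect); the effect order, the 20-second segment length, and the 5-second fade are hypothetical, and the equal-power crossfade is one common choice rather than a settled design.

```python
import math

EFFECTS = ["filter", "reverb", "delay", "frequency_shift"]  # hypothetical order


def fx_levels(t: float, segment_s: float = 20.0, fade_s: float = 5.0) -> dict[str, float]:
    """Wet level (0..1) for each effect at time t seconds.

    Each effect is held for `segment_s` seconds; during the final `fade_s`
    seconds of its segment it crossfades into the next effect using an
    equal-power curve (cos/sin), so the squared levels always sum to 1 and
    perceived loudness stays roughly steady through the transition.
    """
    levels = {name: 0.0 for name in EFFECTS}
    index = int(t // segment_s) % len(EFFECTS)
    current, nxt = EFFECTS[index], EFFECTS[(index + 1) % len(EFFECTS)]
    pos = t % segment_s                 # position within the current segment
    fade_start = segment_s - fade_s
    if pos < fade_start:
        levels[current] = 1.0
    else:
        x = (pos - fade_start) / fade_s  # 0 -> 1 across the fade
        levels[current] = math.cos(x * math.pi / 2)
        levels[nxt] = math.sin(x * math.pi / 2)
    return levels


print(fx_levels(17.5))  # halfway through the filter -> reverb crossfade
```

After the last effect the schedule wraps around to the first, so the sound keeps cycling for as long as a visitor stays.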

Recorded Audio Materials

– Reference for Synchronization of Sound and Visual Elements: https://www.youtube.com/watch?v=T7F9RmOAno4

Audio System Requirements

- Data Collection: Microphones will be used to capture participants’ breathing sounds and extract relevant physiological data.

- Audio Playback: The system will employ multiple loudspeakers, initially configured for surround sound to enhance spatial immersion.

- Real-Time Audio Processing: Max will process the collected data in real time, enabling live audio looping and synchronization with visual elements to create a more interactive and immersive experience.


· Problems and Improvements

It remains uncertain whether the audio variations can be fully realized within the duration of participants’ breathing cycles and overall visit time. Further testing and optimization of the sound design are necessary to ensure that the auditory changes align with the pacing of the participants’ experience.

Additionally, a greater variety of audio materials that correspond with the visual elements is required to enhance the coherence between auditory and visual stimuli. This necessitates a careful selection and design of sounds that complement the visual components, creating a more unified and immersive sensory experience.

3. TD Visual Design (Hefan & Yixuan)

· Initial Idea

Our initial design idea revolves around creating an immersive, interactive experience that connects the audience’s breathing patterns to dynamic visual effects generated in TouchDesigner.

We plan to use Arduino to collect real-time data from the audience’s breathing, such as breath rate and depth. This data will then be transmitted to TouchDesigner, where it will drive the visual output. The key idea is to reflect the state of the audience’s breathing in the visuals:

When the breathing is uneven or erratic, the visuals will respond with intense fluctuations, distortion, and a lack of clarity. This could manifest as chaotic particle movements, blurred shapes, or rapidly shifting colors, symbolizing instability and tension.

As the breathing becomes steadier and more controlled, the visuals will gradually transition into a calm, clear, and harmonious state. The shapes will become more defined, the movements more rhythmic, and the colors more cohesive, representing balance and tranquility.
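To drive this transition from chaotic to calm visuals, the breathing data first needs to be reduced to a single irregularity value. A minimal sketch, assuming we already have the durations of recent breaths: the coefficient of variation (standard deviation divided by mean) is near 0 for steady breathing and grows as breathing becomes uneven. The `ceiling` of 0.5 used to normalise the result is a hypothetical value to tune against real data.

```python
import statistics


def irregularity(breath_durations: list[float], ceiling: float = 0.5) -> float:
    """Return 0.0 (steady) to 1.0 (erratic) from recent breath durations in seconds.

    Uses the coefficient of variation (population std / mean); values at or
    above `ceiling` count as fully erratic.
    """
    if len(breath_durations) < 2:
        return 0.0  # not enough data yet: treat as calm
    mean = statistics.fmean(breath_durations)
    if mean <= 0:
        return 0.0  # durations should be positive; guard against bad input
    cv = statistics.pstdev(breath_durations) / mean
    return min(cv / ceiling, 1.0)


print(irregularity([4.0, 4.0, 4.0, 4.0]))  # 0.0: perfectly steady
print(irregularity([2.0, 6.0, 2.0, 6.0]))  # 1.0: strongly uneven
```

In TouchDesigner, a value like this could then scale noise amplitude, particle turbulence, or blur, so that steadier breathing visibly settles the image.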

The goal is to create a direct connection between the audience’s physical state and the visual narrative, making the experience deeply personal and engaging. By interacting with the installation, the audience becomes an active participant in shaping the visual outcome, emphasizing the theme of “breathing” as both a physical and metaphorical journey.

Another concept introduced is this: Plants release oxygen through photosynthesis, while humans absorb oxygen through respiration, creating a natural energy exchange. By allowing “flowers” to bloom or wither in response to the audience’s breathing, the installation serves as a visual meditation aid. In psychology and meditation practices, breath regulation is commonly used to relax the nervous system. This artwork enables viewers to influence the form of digital flowers by adjusting their own breathing, thereby achieving a deeper state of relaxation.

· Practices

Yixuan:

Progress on Touch Designer Effects — Troubleshooting and New Discoveries

Hefan:

· Problems and Improvements

As we continue developing our interactive breathing visualization project, we’ve made some progress but also encountered a few challenges that need to be addressed.

Current Issues

- Uncertainty in Selecting TD Visual Subject and Material

While we’ve successfully created some dynamic visual effects in TouchDesigner, we’re still unsure about the choice of the main visual subject and its material. For example, should the visuals be abstract shapes, organic forms, or representational imagery like lungs or waves? Additionally, we need to decide on the texture and style of the visuals: should they be sleek and digital, or more organic and textured? This decision is crucial, as it will define the overall aesthetic and emotional impact of the project.

- Lack of Arduino Integration Testing

Another major challenge is that we haven’t yet tested the connection between Arduino and TouchDesigner. While we’ve conceptualized how the breathing data will drive the visuals, we haven’t verified whether the data transmission works reliably or how the visuals will respond in real time. This is a critical step for ensuring the interactivity of the installation.

- Visuals Not Aligning with Breathing Data

The visuals in TouchDesigner may not respond intuitively or dynamically to the breathing data, making the interaction feel disconnected or unnatural.

- Technical Limitations in TouchDesigner

Complex visual effects in TouchDesigner may cause performance issues, such as lag or crashes, especially when processing real-time data.

Planned Improvements

- To resolve the issue of selecting the visual subject and material, we plan to:

- Conduct a team brainstorming session to gather ideas and agree on the visual direction that best represents the “breathing” theme.

- Create multiple prototypes in TouchDesigner with different subjects (e.g., abstract particles, organic shapes, or symbolic imagery) and materials (e.g., smooth gradients, textured surfaces, or dynamic lighting).

- Test these prototypes with a small audience to gather feedback on which visuals resonate most effectively with the theme and evoke the desired emotional response.

- To address the lack of Arduino integration testing, we will:

- Collaborate with the Arduino team to set up a basic data transmission pipeline, ensuring that breathing data (e.g., breath rate, depth) can be sent to TouchDesigner in real time.

- Develop a simple test environment in TouchDesigner to visualize the incoming data and adjust parameters accordingly.

- Experiment with different mapping strategies to determine how variations in breathing data (e.g., erratic vs. steady) can best translate into visual changes (e.g., chaotic vs. calm visuals).

- To better align the visuals with the breathing data, we should:

- Map the breathing data to specific visual parameters (e.g., noise scale, particle speed, or color gradients) in a way that reflects the intensity and rhythm of the breath.

- Create multiple mapping prototypes and test them with real breathing data to find the most effective and engaging response.

- Use smoothing algorithms to ensure the visuals transition smoothly between states (e.g., erratic to calm).
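The mapping and smoothing steps above can be sketched as follows. This assumes breath depth and an irregularity measure both arrive normalised to the range 0 to 1; exponential smoothing is used as one simple smoothing algorithm, and the output names (`noise_scale`, `particle_speed`, `hue`) and their ranges are hypothetical placeholders for whatever parameters the final TouchDesigner network exposes.

```python
from dataclasses import dataclass


def lerp(a: float, b: float, t: float) -> float:
    """Linear interpolation from a (t = 0) to b (t = 1)."""
    return a + (b - a) * t


def clamp01(x: float) -> float:
    return min(max(x, 0.0), 1.0)


@dataclass
class SmoothedVisualMapper:
    """Maps breath depth and irregularity to visual parameters with exponential smoothing.

    alpha near 0 gives slow, gentle transitions; alpha near 1 responds immediately.
    """
    alpha: float = 0.1
    depth: float = 0.0
    chaos: float = 0.0

    def update(self, raw_depth: float, raw_chaos: float) -> dict[str, float]:
        # Exponential moving average: move a fraction `alpha` toward each new reading.
        self.depth += self.alpha * (clamp01(raw_depth) - self.depth)
        self.chaos += self.alpha * (clamp01(raw_chaos) - self.chaos)
        return {
            "noise_scale": lerp(0.5, 3.0, self.chaos),     # erratic -> more distortion
            "particle_speed": lerp(0.2, 2.0, self.chaos),  # erratic -> faster motion
            "hue": lerp(0.55, 0.65, self.depth),           # deeper breath -> colour shift
        }


mapper = SmoothedVisualMapper(alpha=0.5)
print(mapper.update(1.0, 1.0))  # values move only halfway toward the new reading
```

Smoothing the normalised inputs, rather than the outputs, keeps every visual parameter changing at the same pace, so a sudden gasp eases the whole image toward a more chaotic state instead of making it jump.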

4. Initial idea for Installation (Xinyi)

· Initial Idea

Inspired by works such as Vicious Circular Breathing and Last Breath, this installation explores breath visualization. It was initially designed as a tree-like structure of transparent tubes: participants would breathe through masks, sending air into a central lung- or heart-shaped container. The aim was to reflect on airflow, restriction, and preservation, especially in the context of the pandemic. After discussions with Philly, we made two key refinements: replacing the masks with a more open interaction method to improve comfort, and placing sensors closer to the breathing source to improve data accuracy, while keeping the visual representation of airflow.

Initial idea in detail: https://blogs.ed.ac.uk/dmsp-process25/2025/02/08/initial-inspiration-for-installation-with-fb/

· Practices

· Problems and Improvements

After receiving feedback from the tutor, I realized that the current height of the installation requires participants to bend down, which might cause discomfort and interfere with the intended meditative experience.

To improve this, I plan to lower the installation so that users can interact in a more relaxed, natural posture without needing to lean forward. Additionally, incorporating cushions or a seated area could encourage a more immersive engagement, allowing participants to fully focus on their breath and the interactive visuals. This adjustment not only enhances comfort but also aligns with the installation’s theme of breath awareness and mindfulness, creating a space where users can feel at ease both physically and mentally.

IV. Challenge

Potential Issues & Challenges (Li Lyu, Can Huang)

1. Data Latency: Is sensor data available quickly enough for real-time feedback? Does data transfer need to be optimised?

Optimisation: Use faster sensors and low-latency wireless communication (ESP32 / BLE).

2. Environmental Interference: Do external noise and air movement affect sensor readings?

Optimisation: Use more accurate sensors and optimise where the airflow sensors are placed within the installation.

3. Interaction Intuitiveness: Can the audience quickly understand the interaction? Do they need guidance?

Optimisation: Provide easy visual guidance, such as on-screen instructions or audio feedback.

4. Visual & Audio Synchronisation:

Is the data transfer fast enough to ensure synchronisation of visual & audio feedback?

Optimisation: Use TouchDesigner’s MIDI and OSC support for data mapping to improve responsiveness.

V. Other Preparations

· Timetable

VI. Appendix

· Web

- https://youtu.be/WS2Ww6zYgJw?si=WEciGTxVPYVflYVt

- https://youtu.be/qLebV9rjqb4?si=SHn13N4ogxACea1D

- https://youtu.be/NuIShUTg3nI?si=wpbzgs0xKmoLTals

- https://b23.tv/vSqo235

· Article

- Jerath, R., Crawford, M. W., Barnes, V. A., & Harden, K. (2015). Self-Regulation of Breathing as a Primary Treatment for Anxiety. Applied Psychophysiology and Biofeedback, 40, 107–115. DOI: 10.1007/s10484-015-9279-8

- Zaccaro, A., Piarulli, A., Laurino, M., Garbella, E., Menicucci, D., Neri, B., & Gemignani, A. (2018). How Breath-Control Can Change Your Life: A Systematic Review on Psycho-Physiological Correlates of Slow Breathing. Frontiers in Human Neuroscience, 12:353. DOI: 10.3389/fnhum.2018.00353

· Image

- Img1: https://www.mic.com/life/catch-the-cosmic-show-six-planets-in-a-parade (Accessed on 07 Feb 2025)

- Resonance Room: https://bcaf.org.cn/Studio-Nick-Verstand (Accessed on 07 Feb 2025)

- Live Performance: https://vimeo.com/893472100 (Accessed on 07 Feb 2025)

- https://pin.it/BUUEHwlKo (Accessed on 07 Feb 2025)

- https://pin.it/2dsUibXuJ (Accessed on 07 Feb 2025)