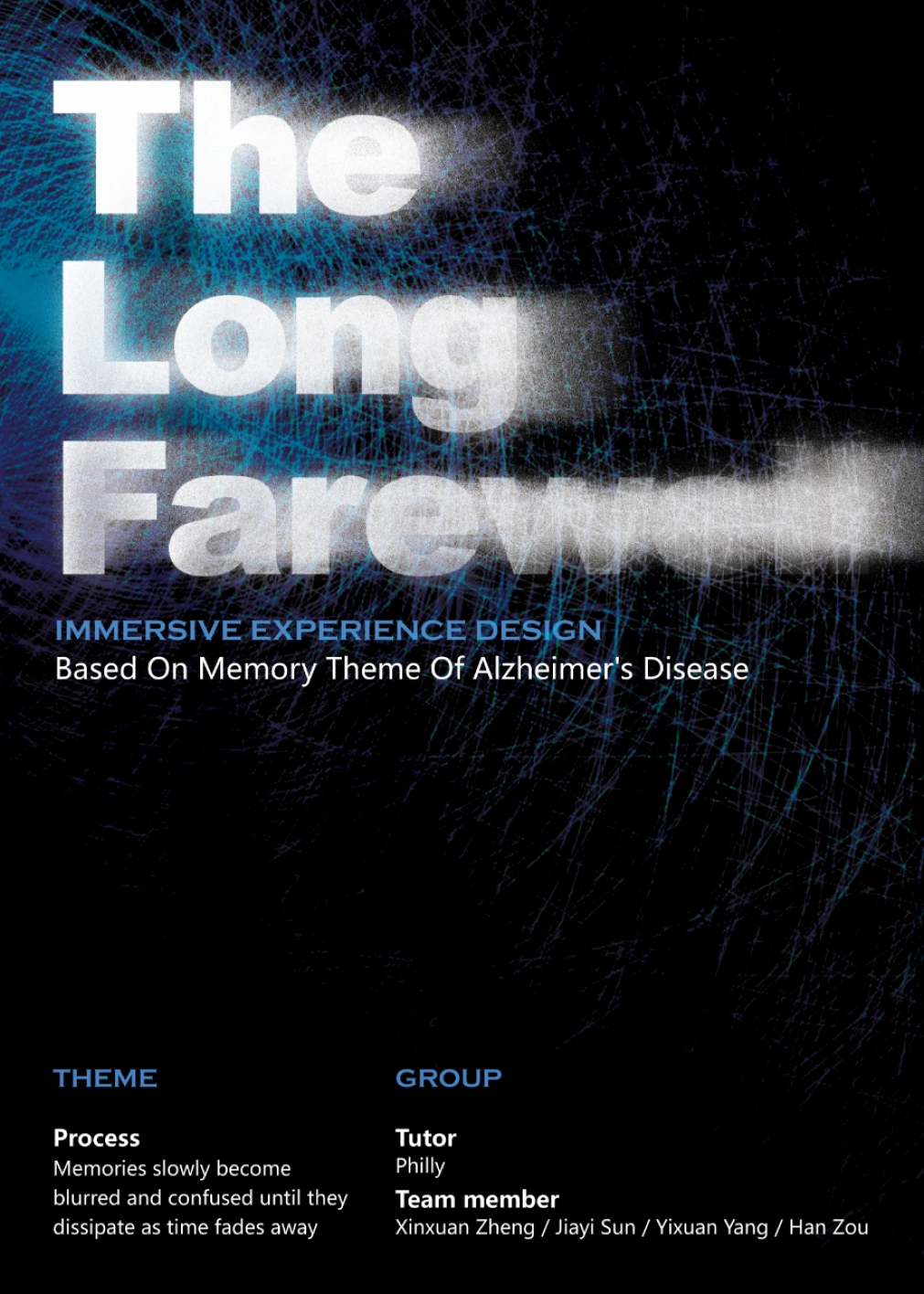

To echo the theme, the poster design primarily combines particles and text to further depict the process of memory fading away. In terms of color scheme, it predominantly features tranquil shades of blue and purple.

dmsp-process24

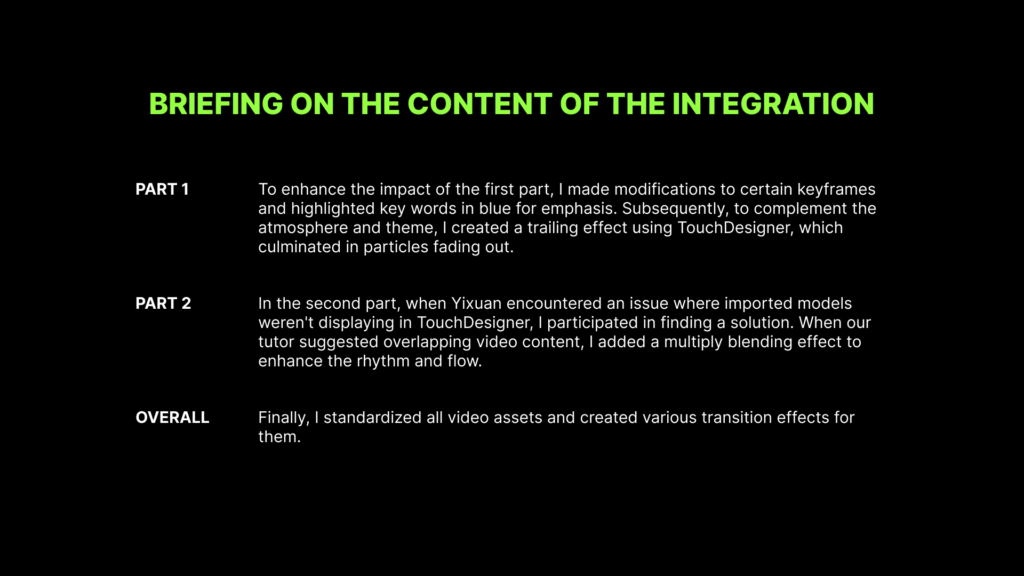

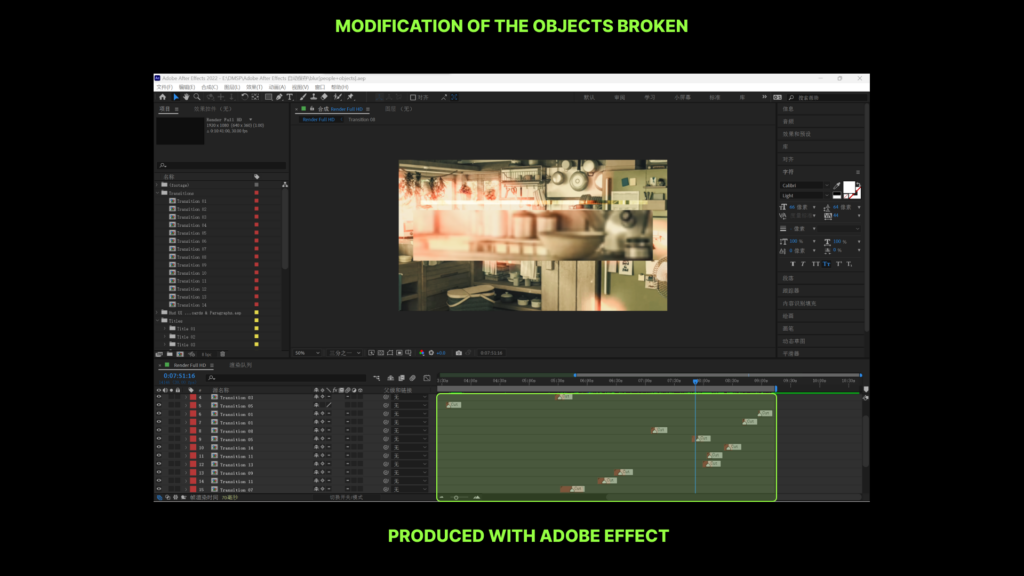

As collator of the final video assets, in addition to completing my own tasks, I actively helped teammates resolve technical issues and coordinated the visual assets to achieve better visual effects.

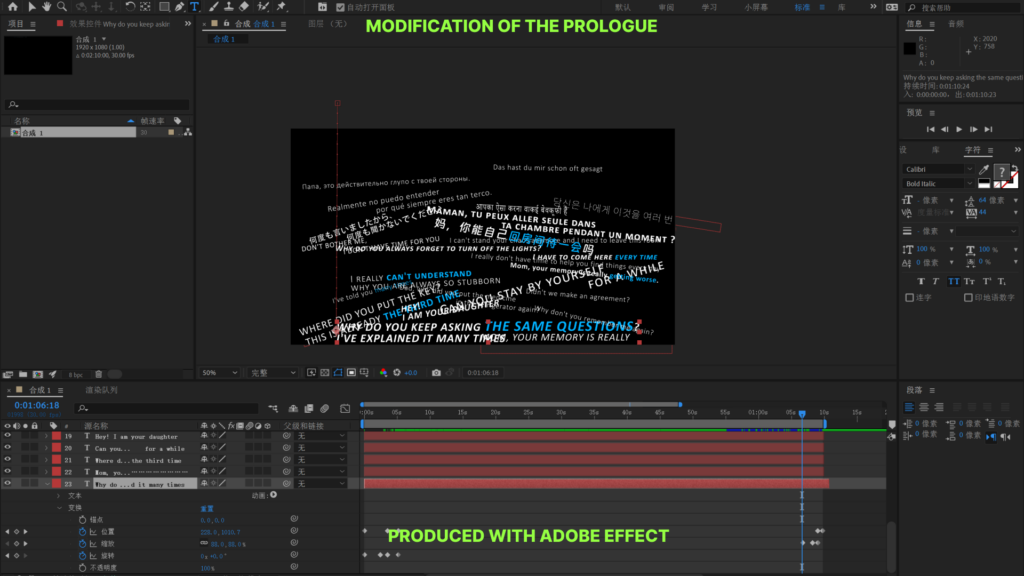

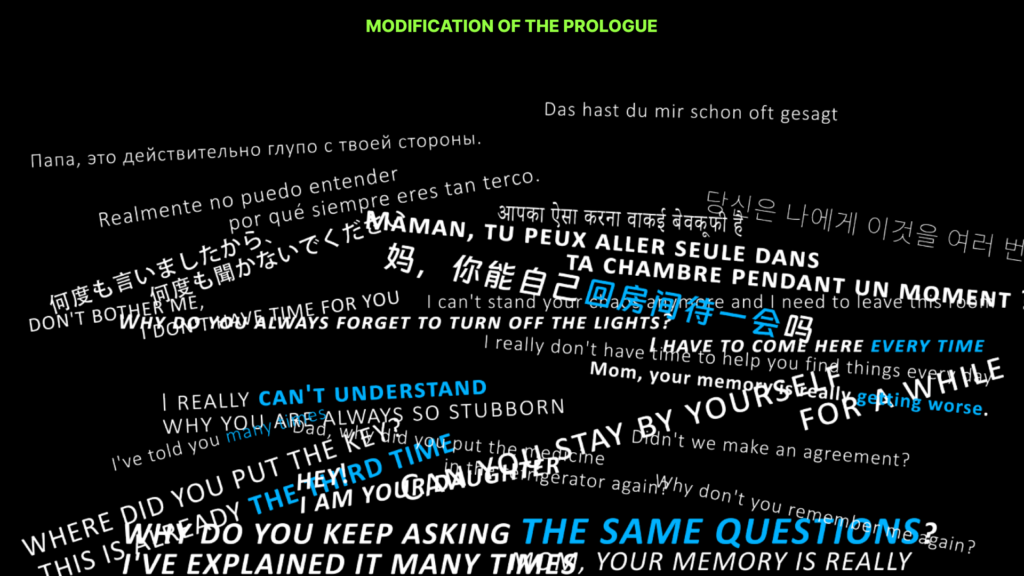

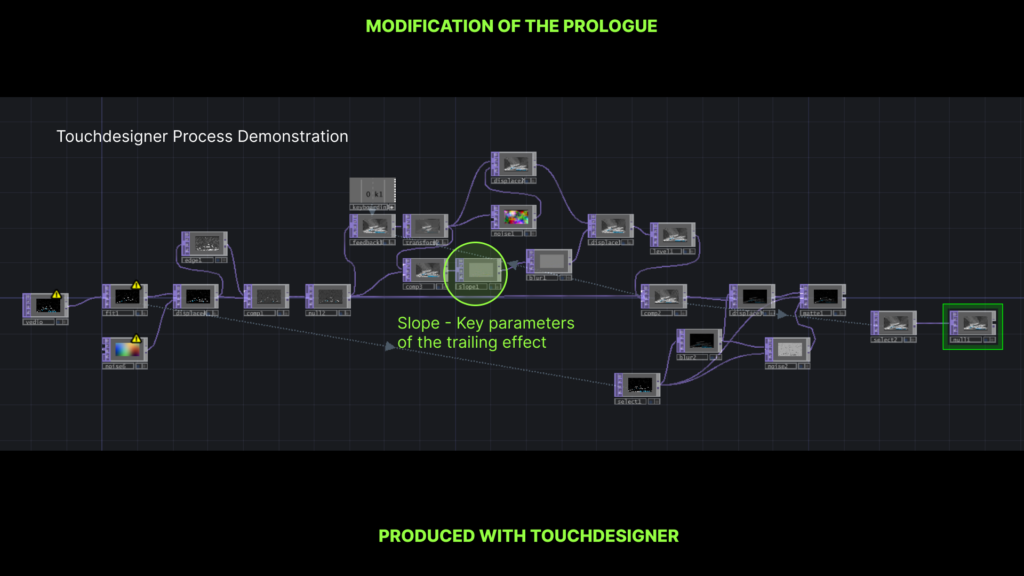

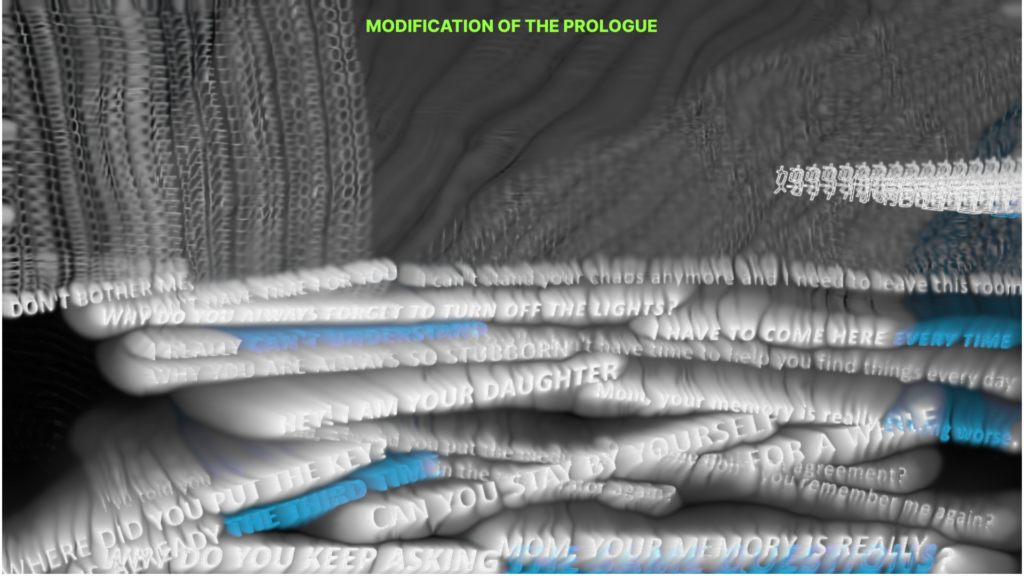

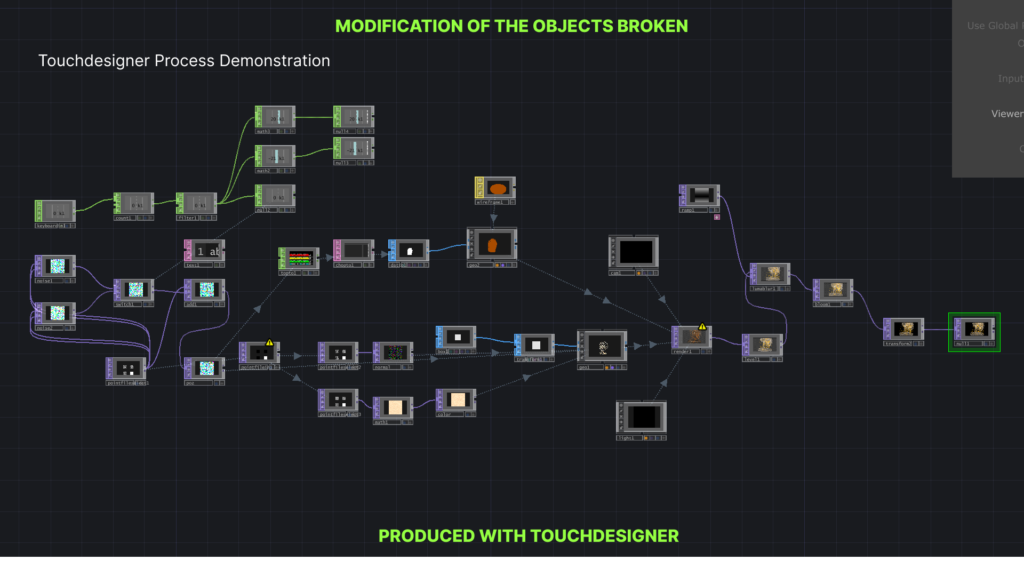

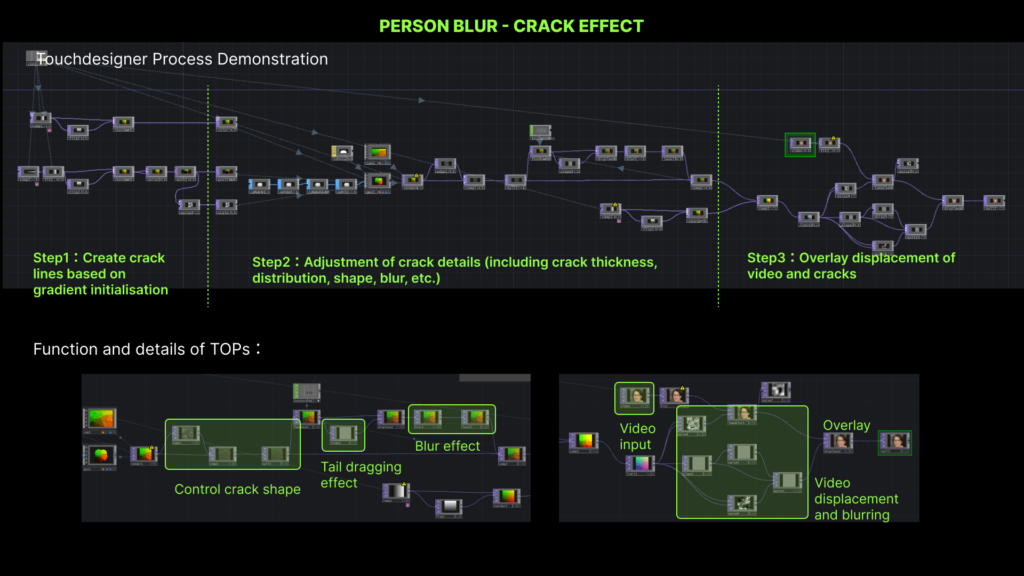

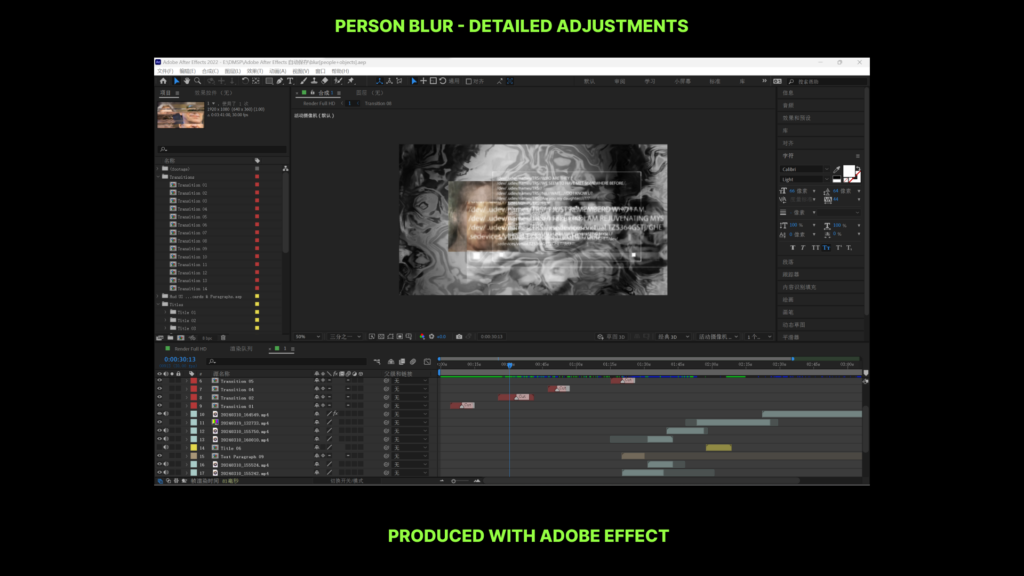

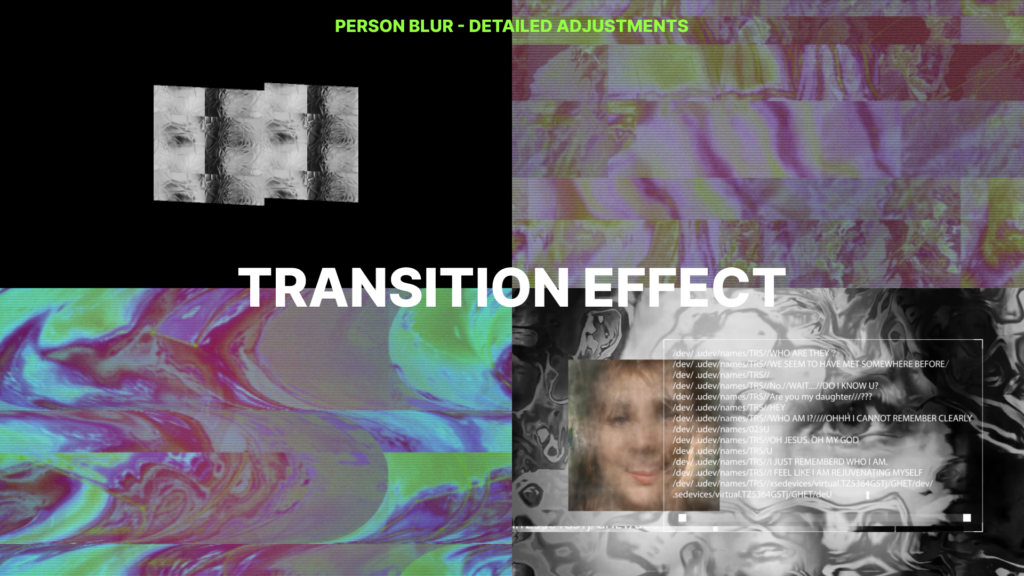

Adjustment of the detailing process:

Final integrated video assets (part 1 + part 2):

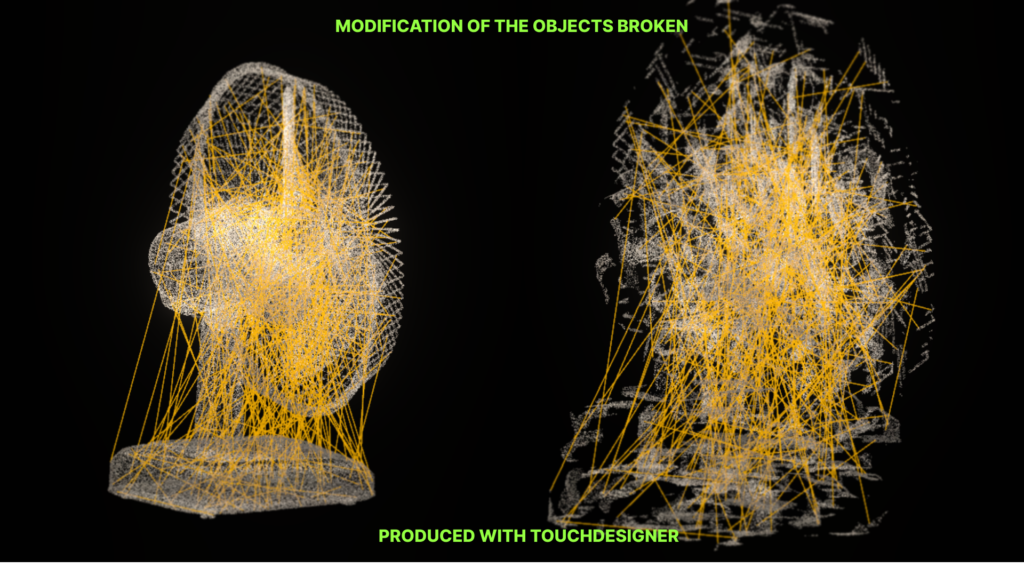

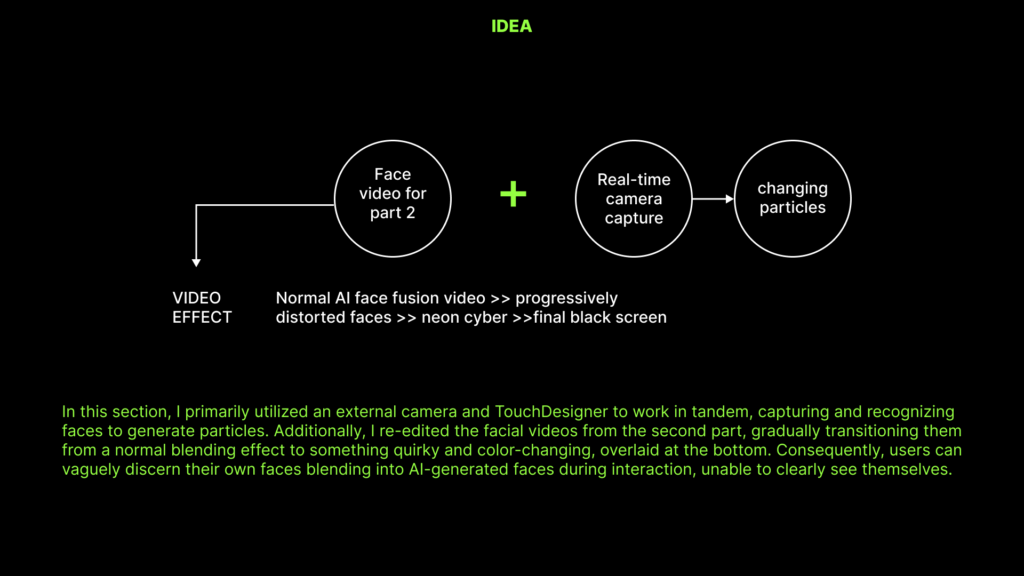

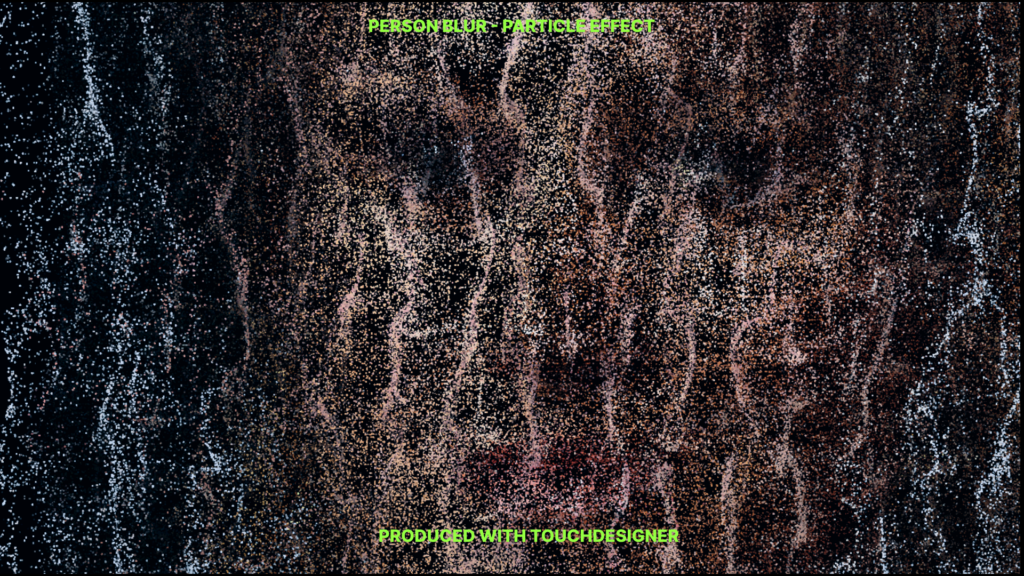

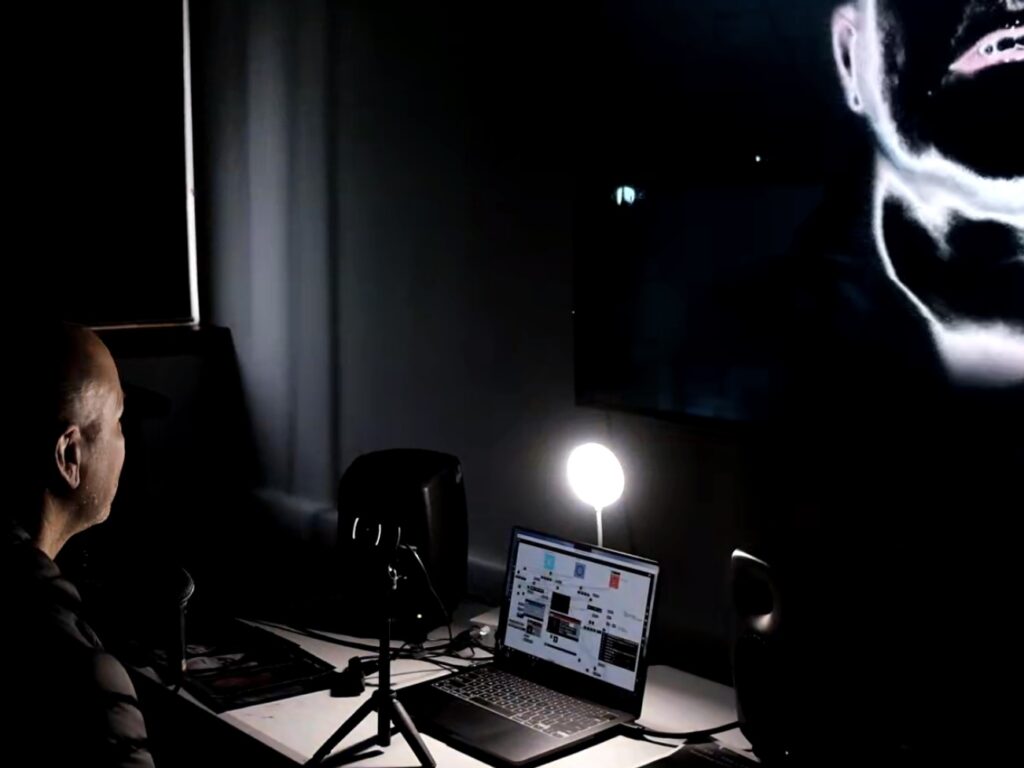

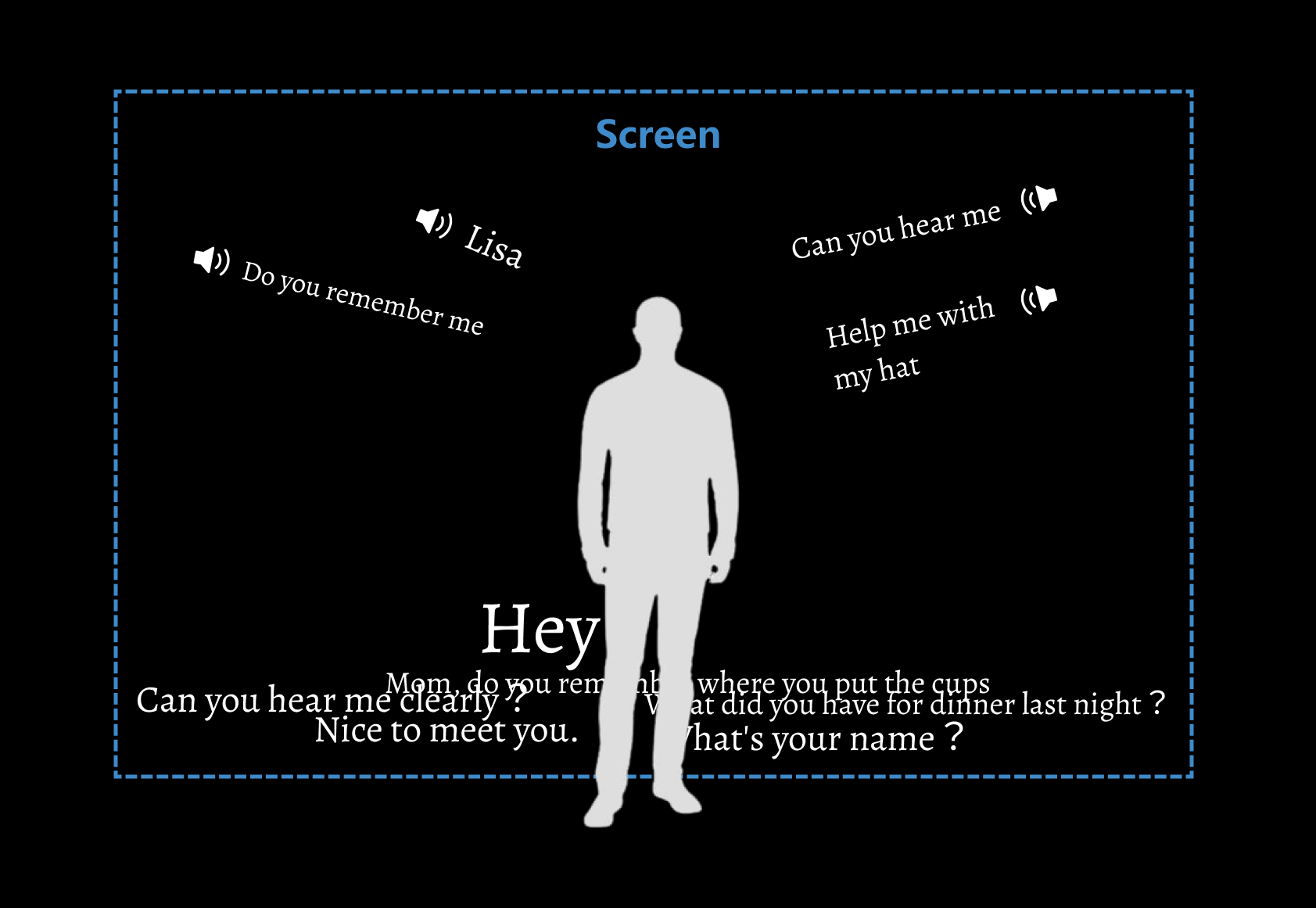

Goal: In the final stage, users have the opportunity to experience, from a first-person perspective, the dilemma of not being able to see their true selves. The blurry human-like figures composed of particles, along with the constantly changing facial videos in the background, symbolize the fading memories of “who am I,” implying that individual lives will eventually vanish like tiny particles disappearing into the darkness.

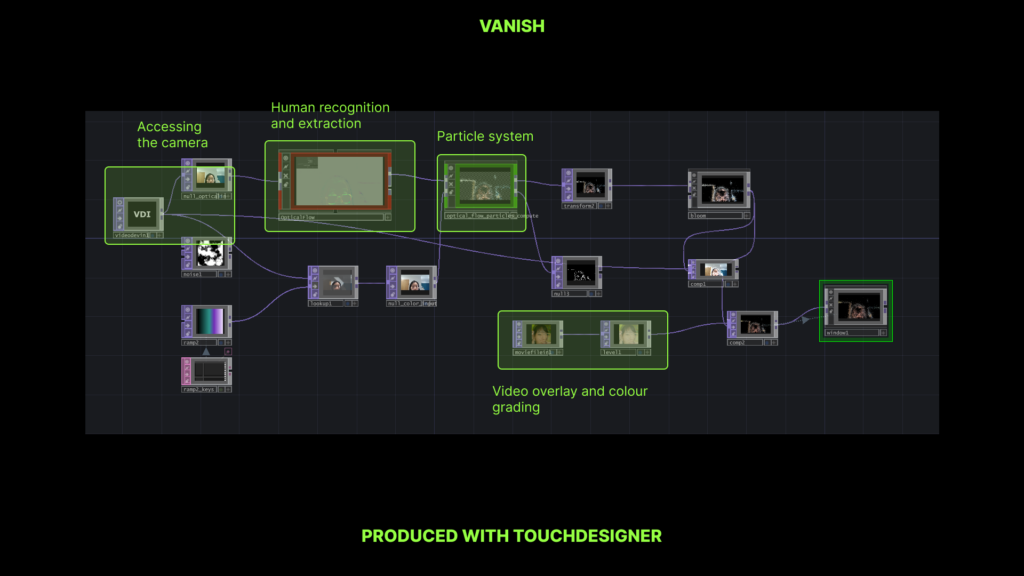

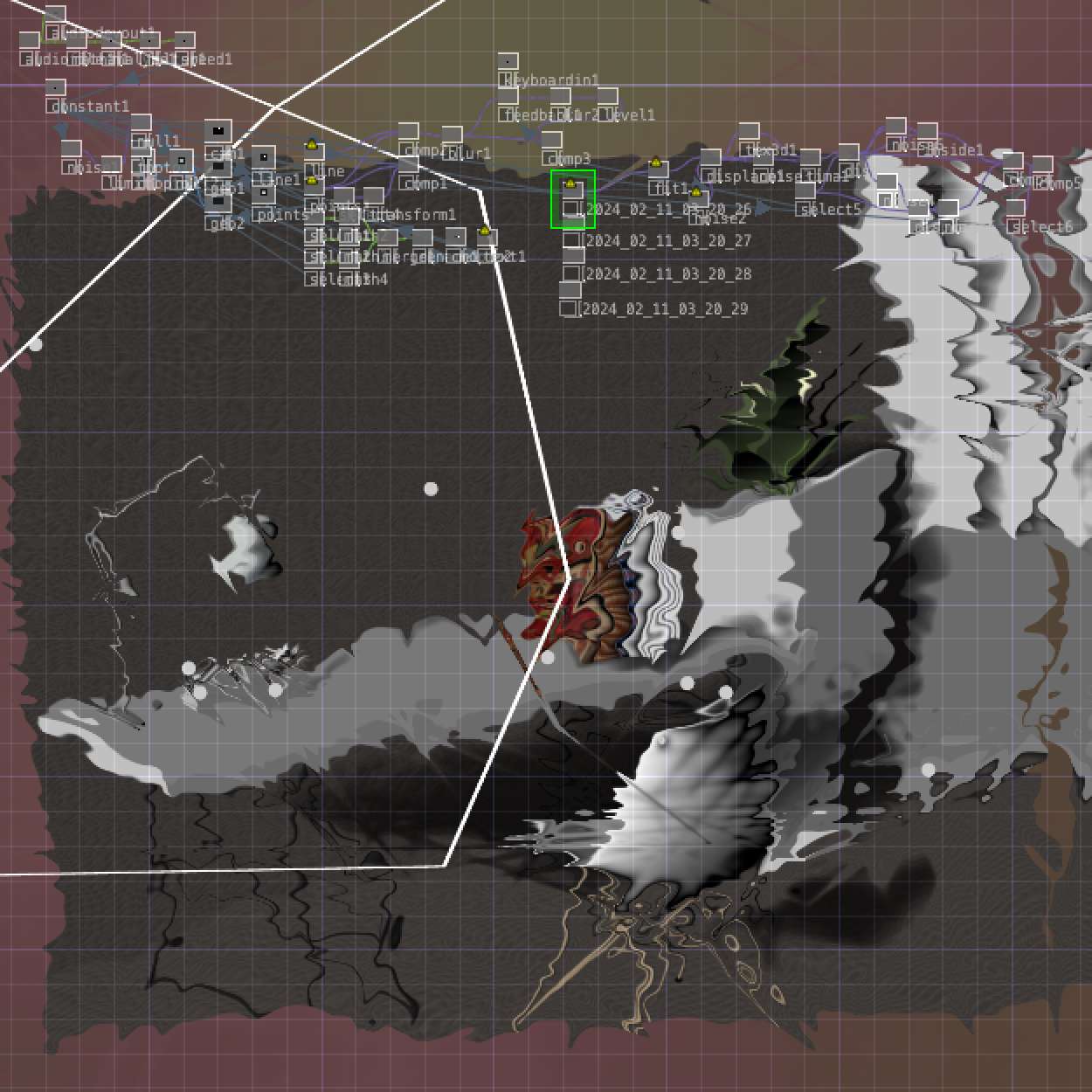

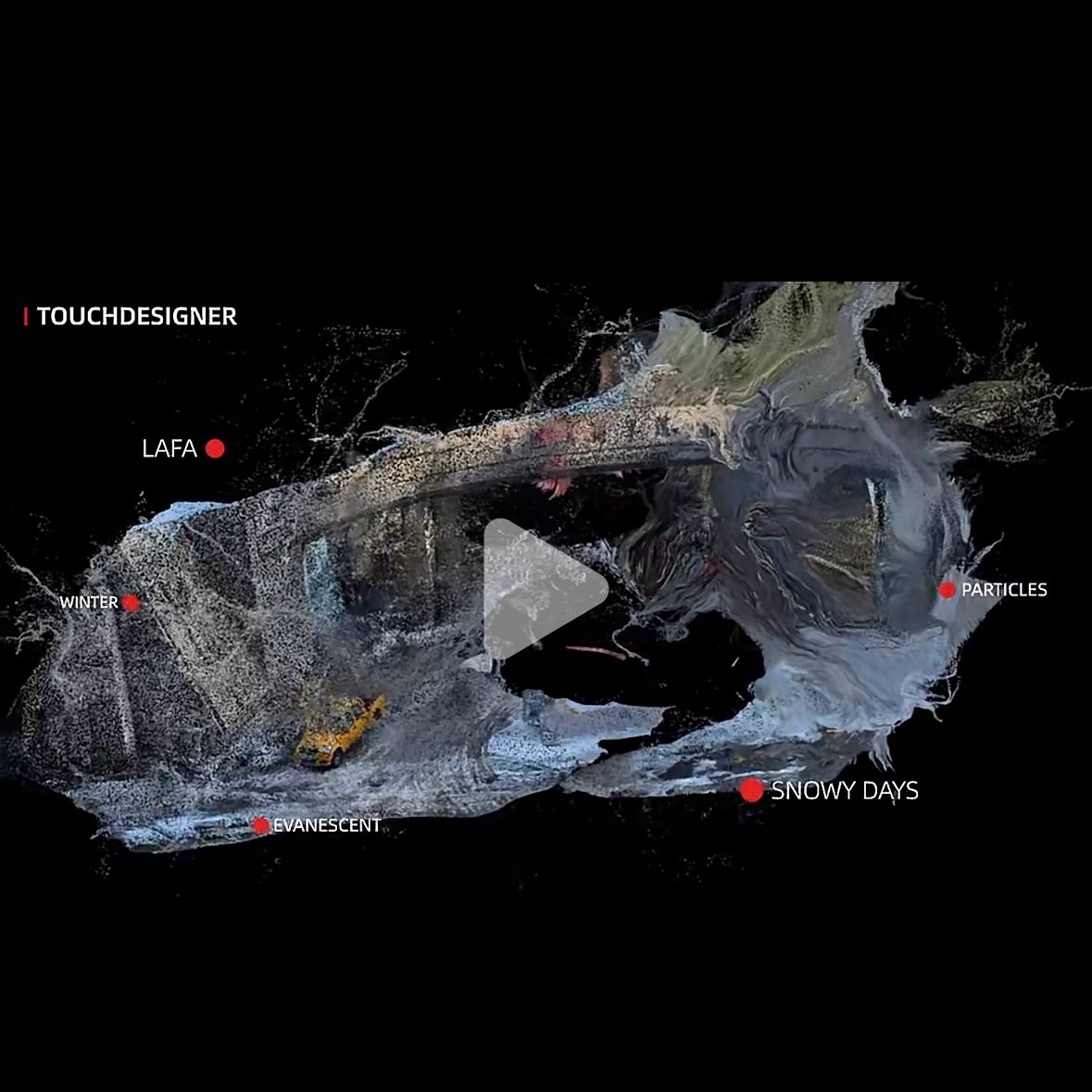

Idea: In this section, I primarily used an external camera together with TouchDesigner to capture and recognize faces and generate particles from them. I also re-edited the facial videos from the second part, gradually transitioning them from a normal blending effect to something quirky and color-changing, and overlaid them at the bottom. As a result, users can vaguely discern their own faces blending into AI-generated faces during interaction, without being able to see themselves clearly.

Technical details:

Overlay video:

The final interaction effect:

Previous attempts at this part (detailed in the mid-term assignment)

Final Process presentation

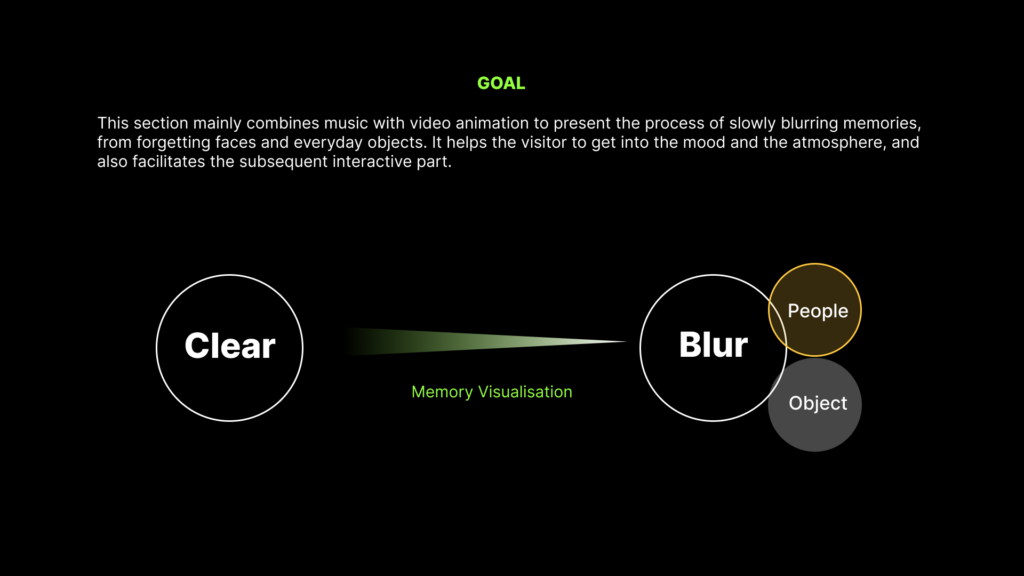

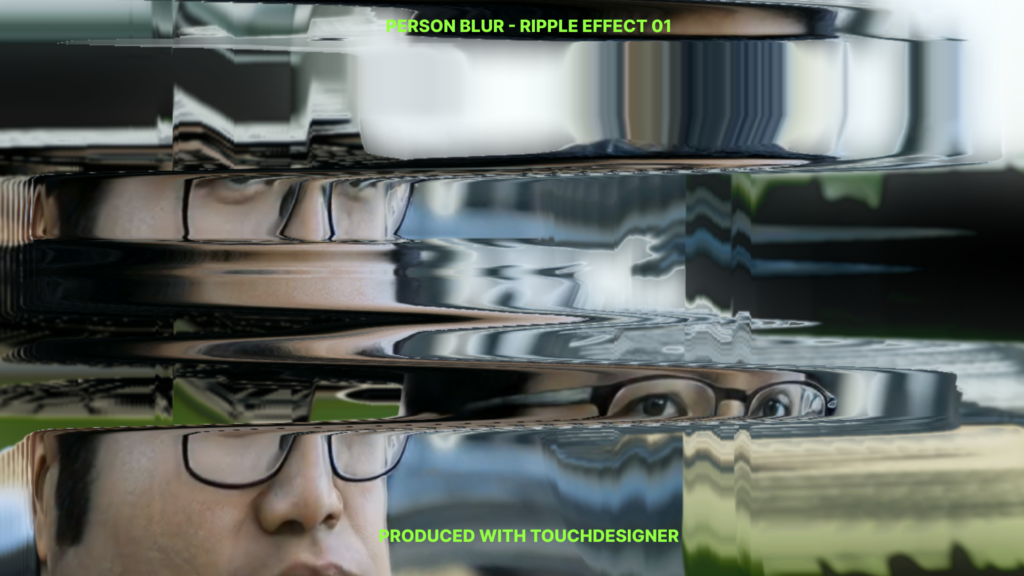

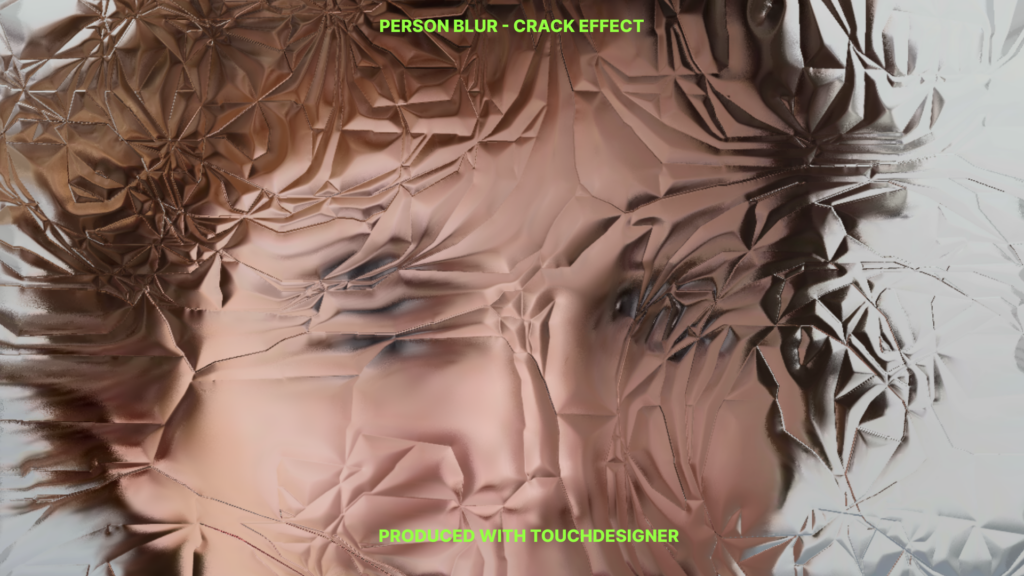

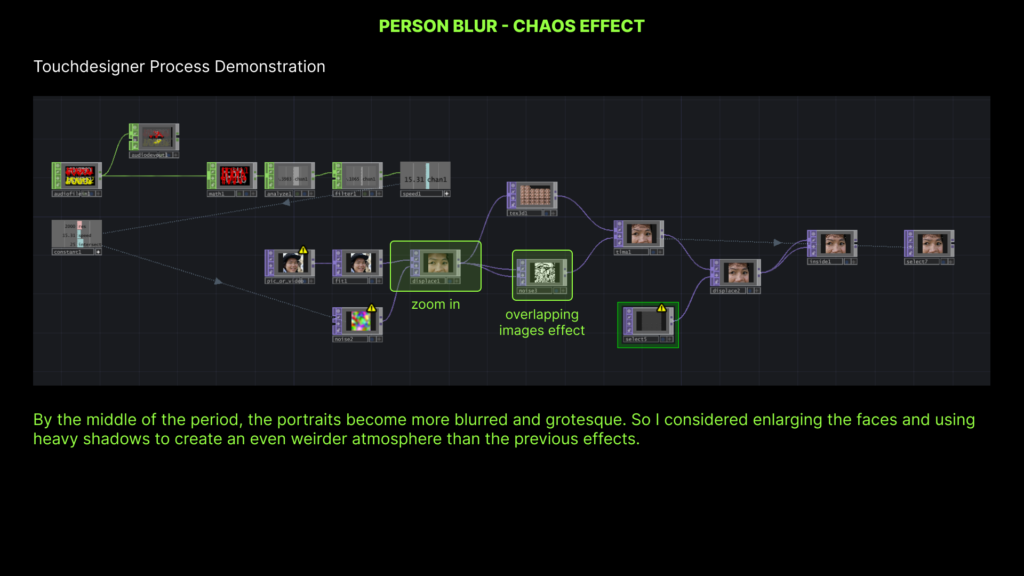

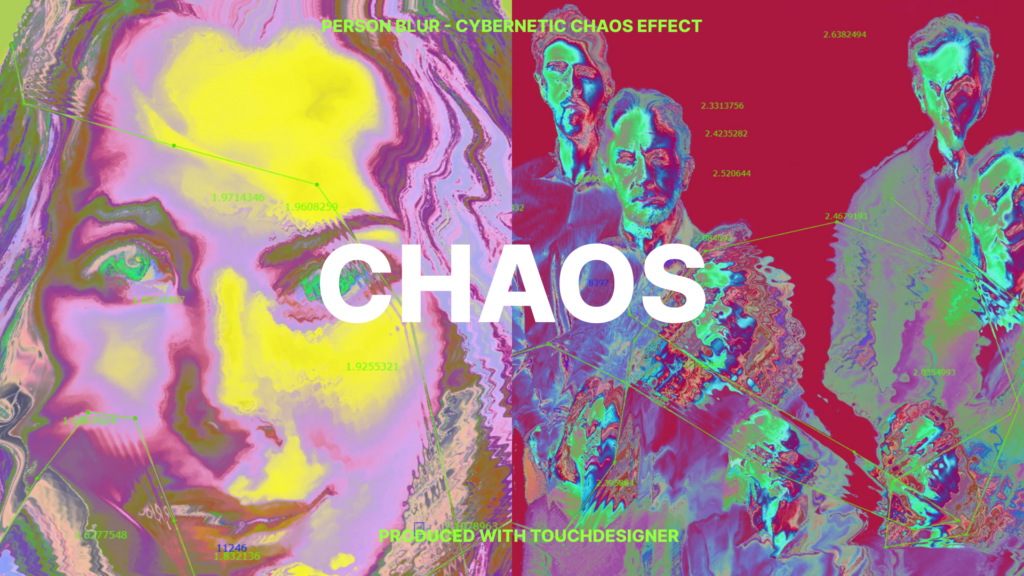

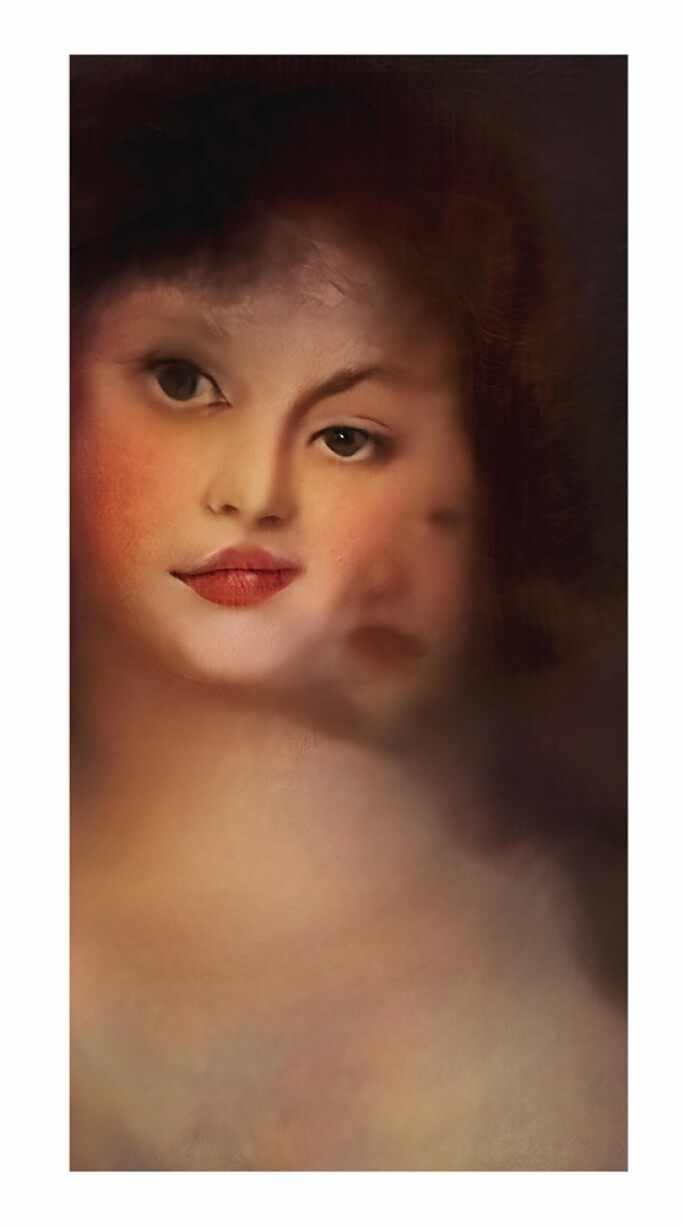

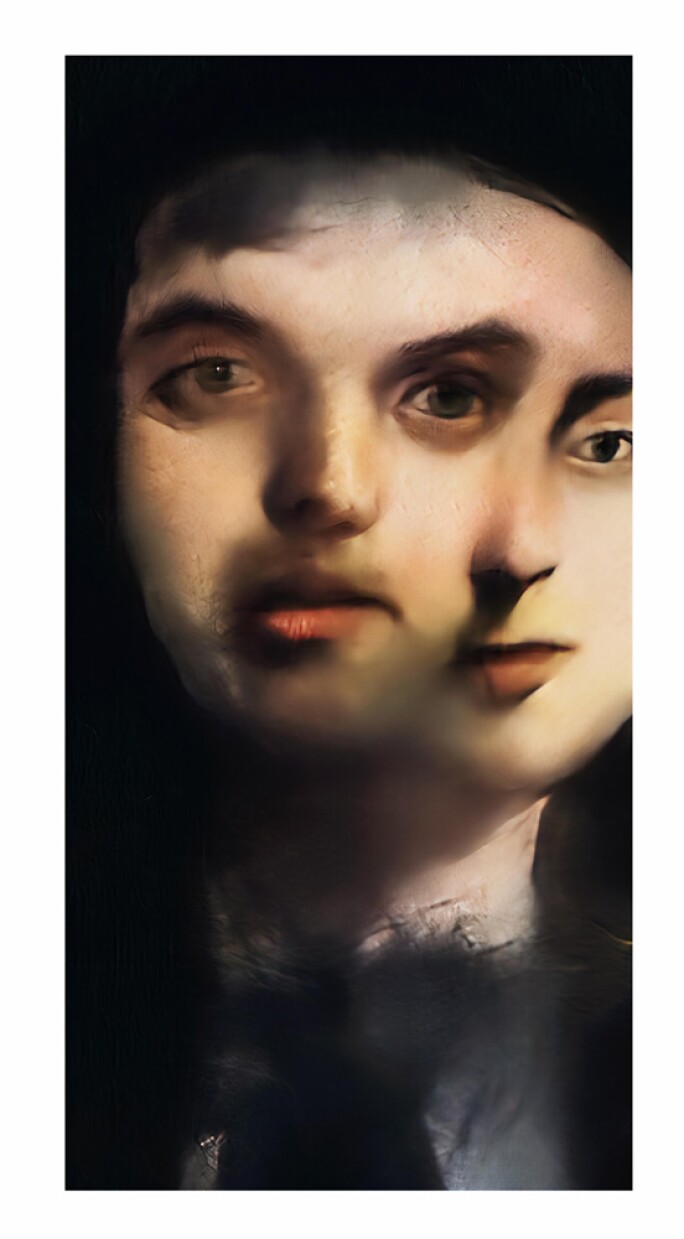

Idea: The long farewell in this section signifies the blurring of faces. As the memories of faces continue to blur and distort, the section arrives at the ultimate question of who they are and who I am.

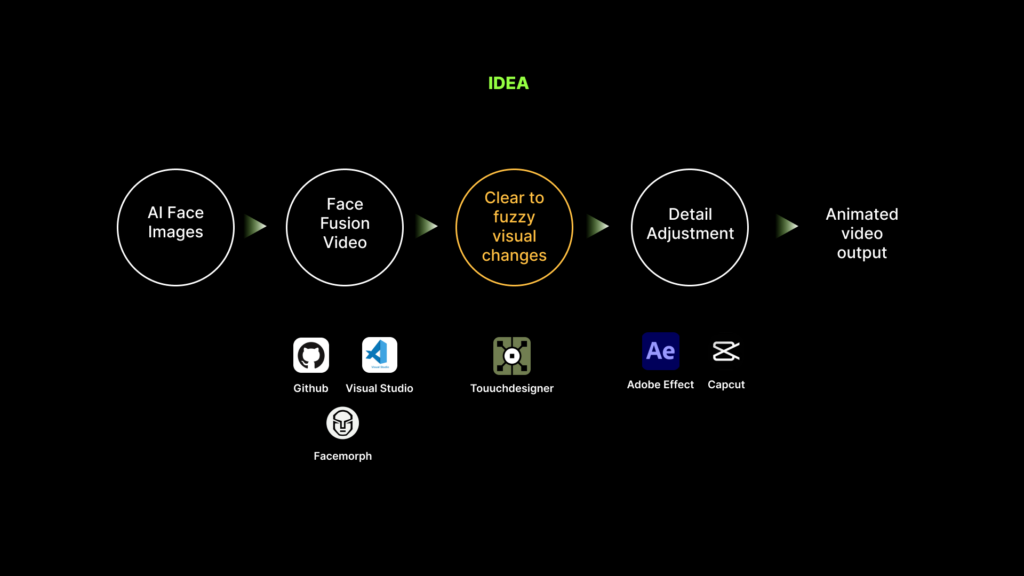

Design Steps: This section is divided into four main production steps:

Design process and technical details:

Step1-AI Face Images

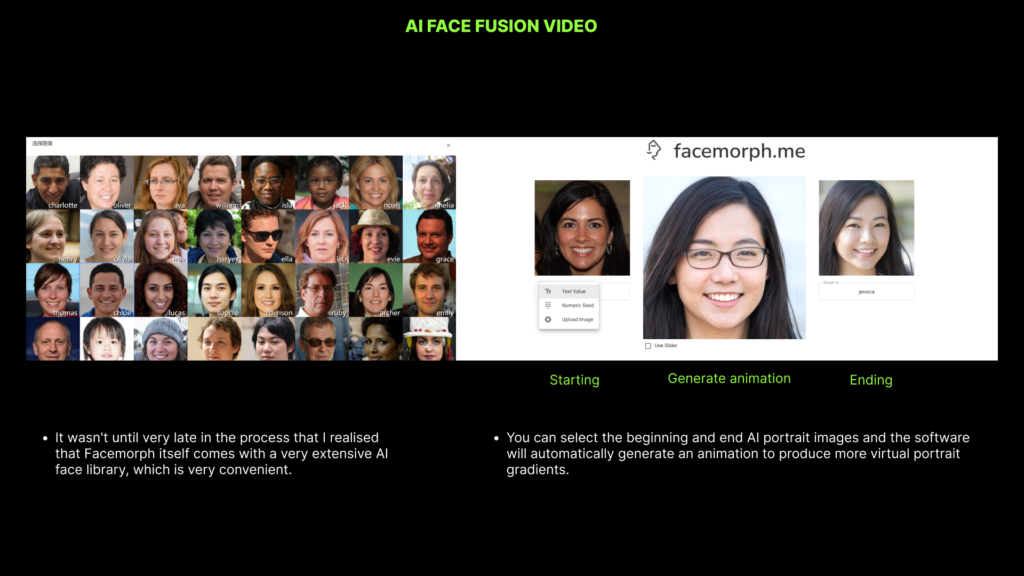

Step2-Face Fusion Video

Step3-Clear to fuzzy visual changes

Step4-Detail Adjustment

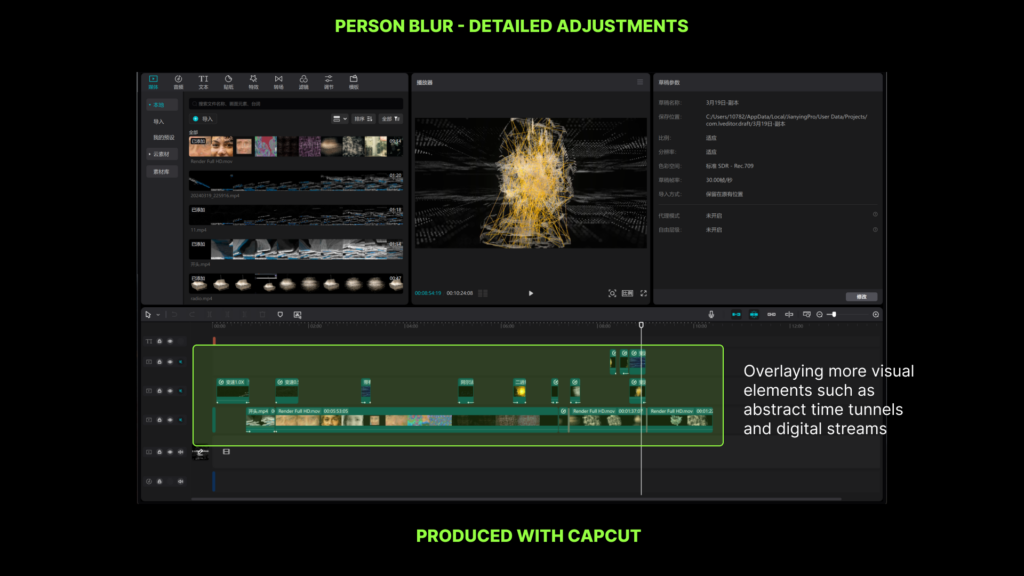

I added some jumping text and transitions, mostly in After Effects (AE). Then, based on our tutor’s advice in post, I used CapCut to overlay more abstract visual effects, such as digital streams and abstract graphics.

Final video (without sound):

Reference and project documentation link:

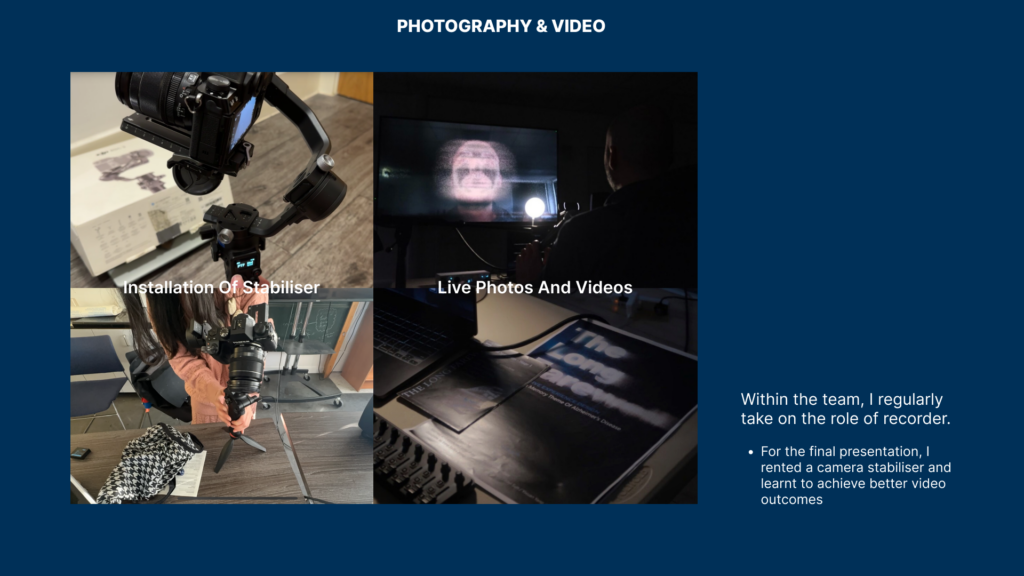

Camera stabiliser rental and test

Preview Tests

We ran two rehearsals in total, making some slight changes to the sound system as well as the environmental setup.

Thanks to Jules for lending us the black cloth for free :)

For looped playback:

Given the differences in workload allocation and expertise, we used different software for execution. After numerous attempts, we successfully consolidated everything in Max. Currently, the second part of the video plays randomly selected clips, while the first, second, and third parts loop seamlessly. Only the real-time particles in the fourth part require manual switching between TouchDesigner and Max, because of the signal transmission between the two programs. This sounds more like a software issue. As you suggested, recreating the same effect with components in TouchDesigner would allow fully automated playback, with seamless transitions and looping. However, it would also mean duplicating work, and team members' earlier efforts could end up being in vain.
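The playback order described above can be sketched in a few lines of Python. This is only an illustration of the sequencing logic, not our actual Max patch: the function name `playback_order`, the part labels, and the clip names are all hypothetical, and the fourth part is represented by a placeholder label because it is switched manually in practice.

```python
import random
from itertools import islice


def playback_order(part2_clips, seed=None):
    """Yield an endless playback sequence: part 1, a random part 2 clip,
    part 3, then a placeholder for the live particle part, and repeat.

    All labels are hypothetical stand-ins for the real video files.
    """
    rng = random.Random(seed)  # a seed makes the sequence repeatable for testing
    while True:
        yield "part1"
        yield rng.choice(part2_clips)  # part 2: one randomly selected clip
        yield "part3"
        yield "part4_live_particles"  # switched by hand in the installation


if __name__ == "__main__":
    # Print the first two full cycles of the loop.
    for item in islice(playback_order(["clip_a", "clip_b", "clip_c"]), 8):
        print(item)
```

Writing the loop as a generator keeps the sequence endless without storing it, which mirrors how the installation keeps cycling until someone stops it.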

In addition to the looping random background sounds, the prologue of Part 1 creates a scary atmosphere. The information in the second part is presented line by line in the form of film subtitles, accompanied by additional snippets of sound.

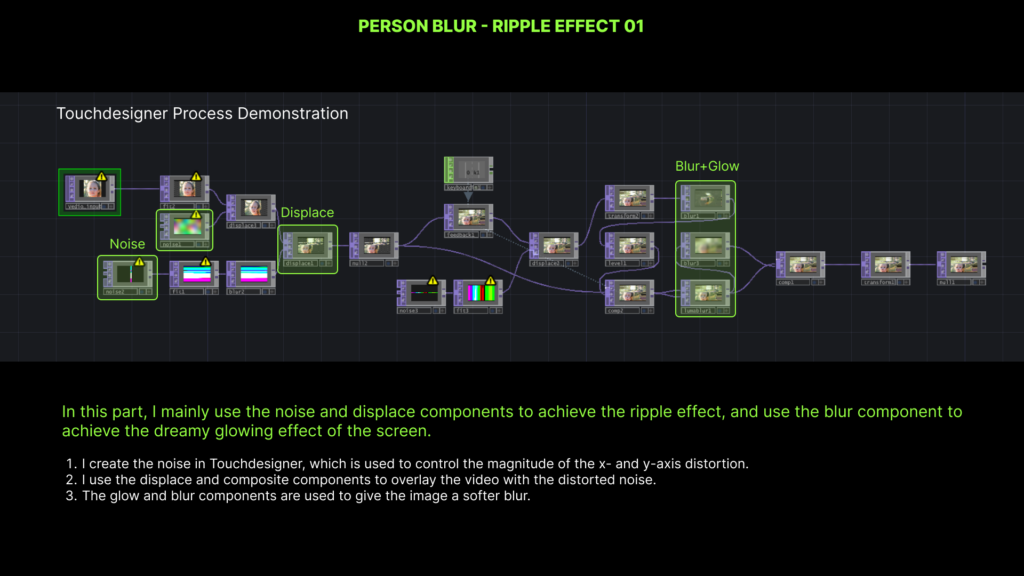

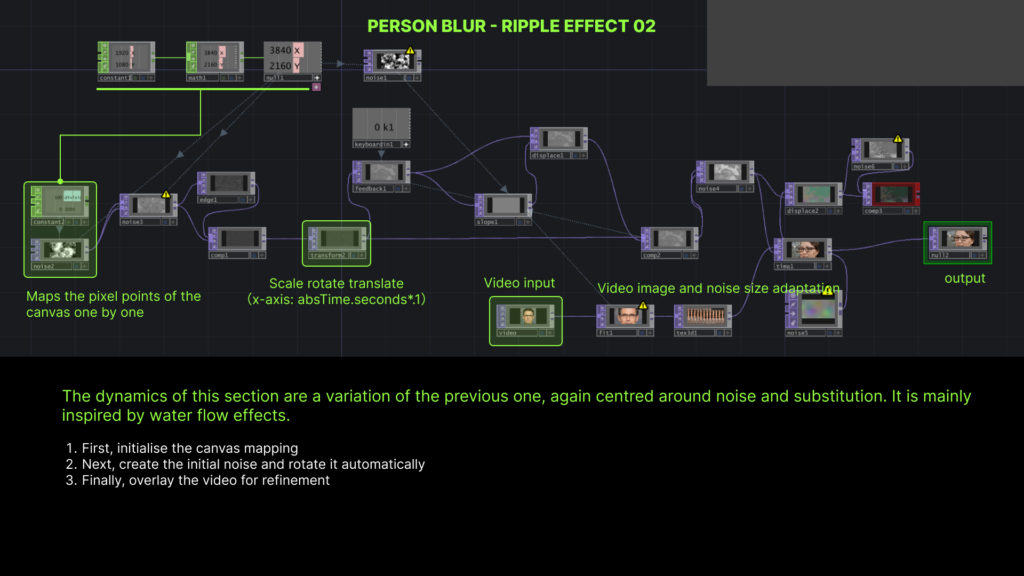

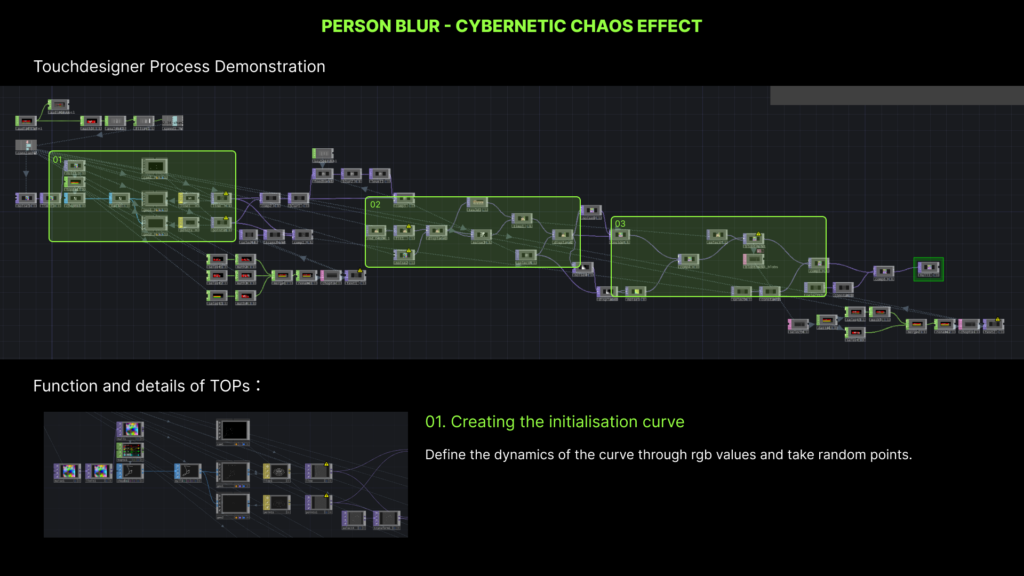

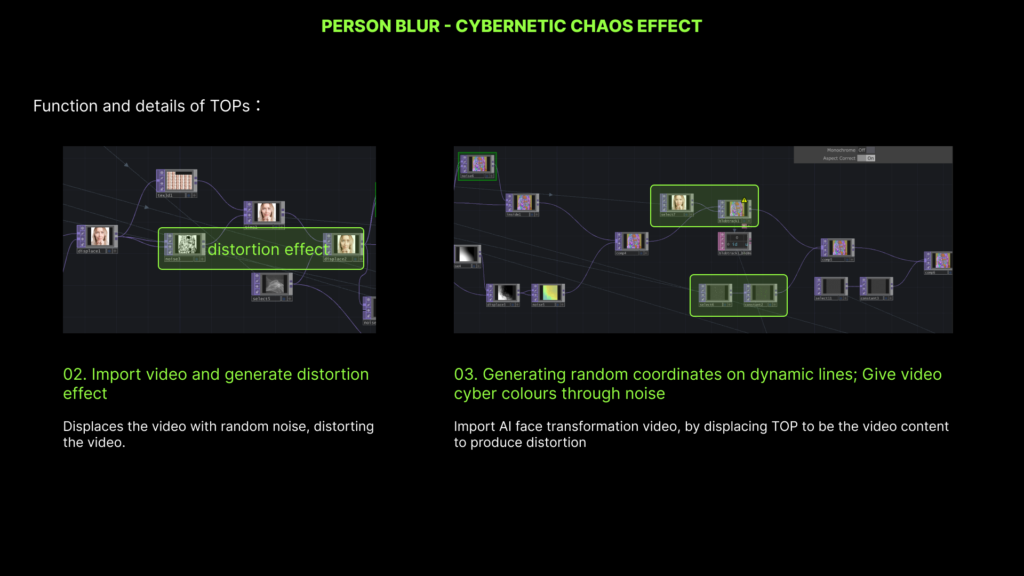

Progress: Based on last week’s discussion and the feedback from submission 1, this week, in part 2 (the blur), I used TouchDesigner to run a more random and bold experiment on visualising the memory of faces, using AI-generated face animations.

Issues:

1. For the video pacing, I’m not sure whether I need to slow it down a bit more.

2. Is the cyberpunk-like magical colouring that appears in the middle and later parts necessary?

3. How should the transition to the prologue be handled?

Progress: Based on last week’s feedback, I connected a live webcam for the interactive part of the installation. In this part of the project, I want the participant to remain standing in front of the screen while the camera in front of the screen rotates 360 degrees, recording the space the participant is in and displaying it on the screen in real time in the form of particles. When the camera has completed its rotation, the image disappears and the whole screen goes black.

Issue:

1. Is there any better suggestion for the interaction form or expression of this part?

Analysis: Memories of Passersby uses a complex neural network system to generate a never-ending stream of portraits: grotesque representations of male and female faces created by machines. Each portrait on display is unique and created in real time as the machine interprets its own output. For the viewer, the experience is akin to watching the endless imaginative acts taking place in the mind of the machine, which uses its “memory” of the parts of the face to generate new portraits, sometimes encountering difficulties in computational interpretation. The result is reminiscent of André Breton’s “convulsive beauty”: a shocking and disturbing aesthetic that mixes attraction and repulsion, and whose main function is to present surprising new perspectives.

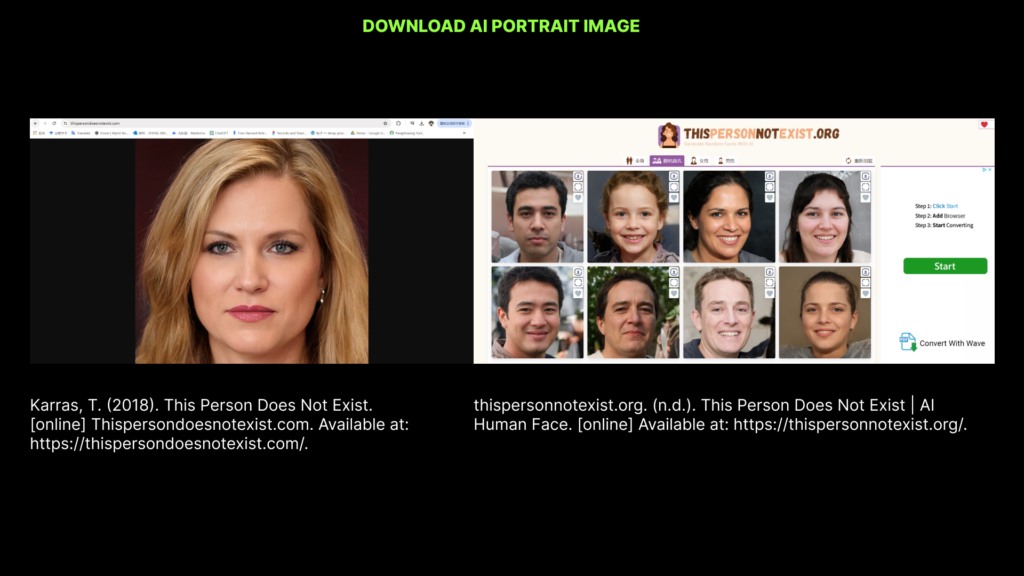

Created by Mario Klingemann

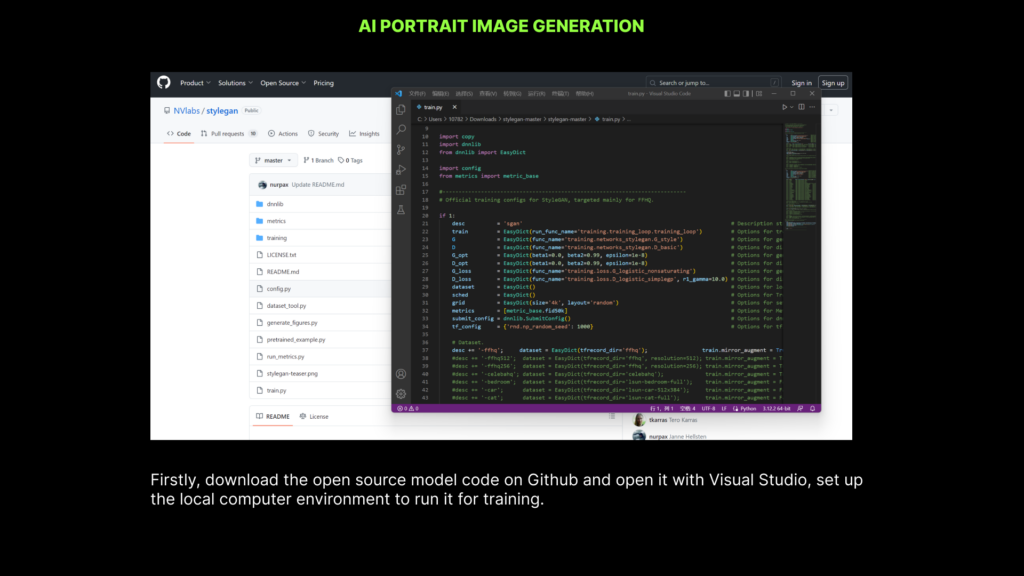

Reflection: Inspired by this example, I explored AI more deeply this week, mainly in the form of a series of videos in which virtual portraits are superimposed and fused together. I generated these by downloading portrait images from different countries from the thispersonnotexist website recommended by our teacher and training on them with the open-source StyleGAN2. To obtain a more abstract and exaggerated effect for the memory visualisation, I then processed the generated videos in TouchDesigner.
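A common way to get a face-fusion video out of a GAN is to interpolate between latent vectors and render one image per step. The sketch below shows only that interpolation step in plain Python, under the assumption that this is how the fusion was produced; it does not call StyleGAN2 itself. The function names are hypothetical, and 512 is used as the default vector size because that is the latent dimension of the standard StyleGAN2 models.

```python
import random


def random_latent(dim=512, rng=None):
    """Draw one latent vector from a standard normal distribution."""
    rng = rng or random.Random()
    return [rng.gauss(0.0, 1.0) for _ in range(dim)]


def interpolate_latents(z_start, z_end, n_frames):
    """Return n_frames latent vectors moving linearly from z_start to z_end.

    Feeding each vector to a generator (not included here) would give
    one video frame per vector, so one face slowly blends into the other.
    """
    if n_frames < 2:
        raise ValueError("n_frames must be at least 2")
    frames = []
    for i in range(n_frames):
        t = i / (n_frames - 1)  # 0.0 at the first frame, 1.0 at the last
        frames.append([(1 - t) * a + t * b for a, b in zip(z_start, z_end)])
    return frames


if __name__ == "__main__":
    rng = random.Random(0)
    face_a, face_b = random_latent(rng=rng), random_latent(rng=rng)
    morph = interpolate_latents(face_a, face_b, n_frames=60)
    print(len(morph), "latent vectors, each of length", len(morph[0]))
```

More frames between two faces give a slower, smoother blend, which is one way to control the pacing of the video.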

AI face fusion video

I tried two effects in total this week.

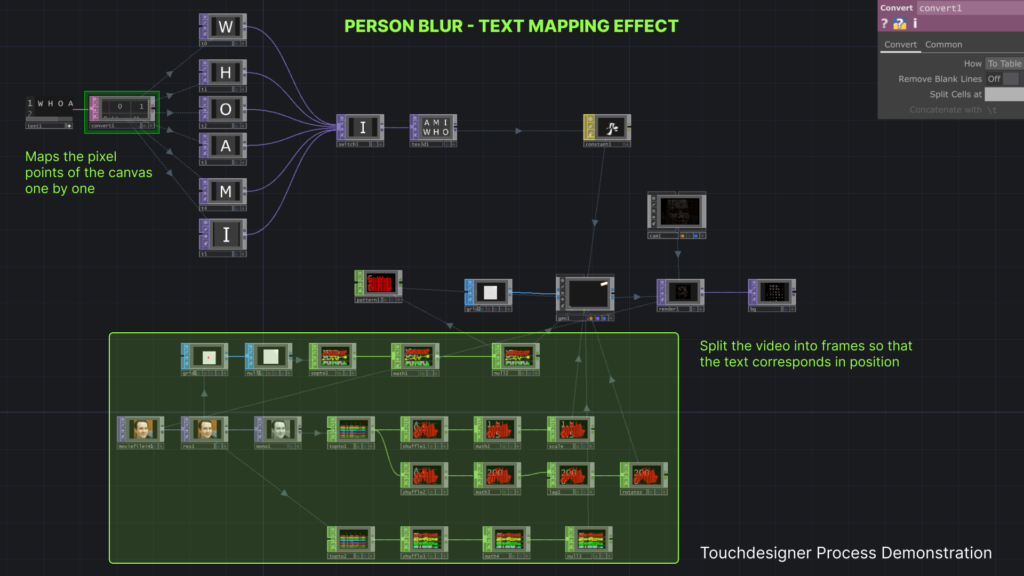

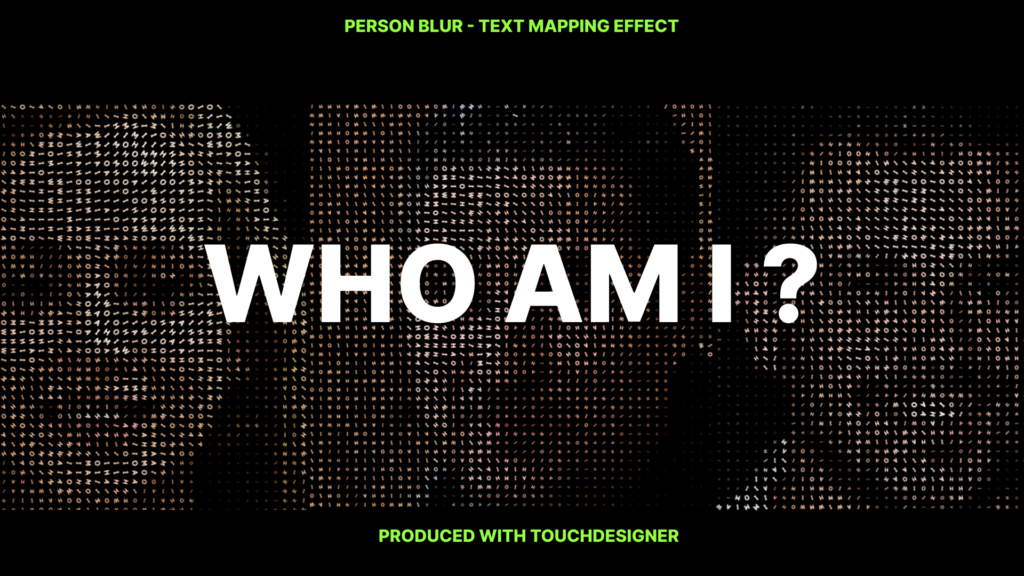

Text Combination: The first effect echoes the prologue of the immersive exhibition, so I also tried to incorporate text in the character section. I mainly mapped the text question “WHO AM I” onto the video, which makes the originally figurative AI face video more abstract and the theme clearer.

The prologue format

* Detail question about prologue part:

Text combination attempt

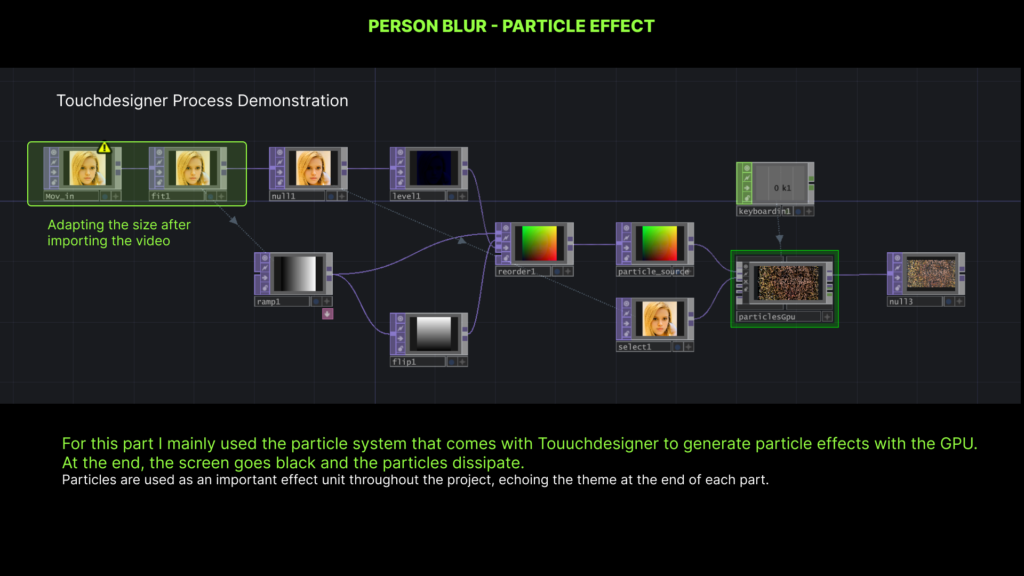

Particle Dissipation: Meanwhile, this week I also continued to delve deeper into particle-related techniques in TouchDesigner and experimented further with the music example from submission 1.

What I’d like to express with this effect is captured by this comment: their hazy output feels like revisiting a memory that can’t be remembered. At the same time, I question whether AI acts as a memory enhancer or as a memory reconstructor for people with Alzheimer’s.

Particle attempt

The vanish section immerses the audience in the experience of Alzheimer’s oblivion, and the form we discussed earlier still retains particle interaction. During the week, I tested capturing the computer camera feed in real time and producing the particle effect from it.

Real-time particles attempt from the computer camera
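To make the idea concrete outside TouchDesigner, here is a minimal plain-Python sketch of turning one captured frame into particles and letting them fade out. It is an illustration under simplifying assumptions, not the TouchDesigner network I built: the frame is a small grid of grayscale values, and the function names, the brightness threshold, and the fade and jitter amounts are all hypothetical.

```python
import random


def frame_to_particles(frame, threshold=128):
    """Create one particle for every pixel at or above the brightness threshold.

    `frame` is a list of rows, each row a list of grayscale values (0-255).
    """
    particles = []
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if value >= threshold:
                particles.append({"x": float(x), "y": float(y), "alpha": 1.0})
    return particles


def dissipate(particles, fade=0.1, jitter=1.0, rng=None):
    """Advance one step: drift each particle randomly, lower its opacity,
    and drop particles that have become fully transparent."""
    rng = rng or random.Random()
    survivors = []
    for p in particles:
        alpha = p["alpha"] - fade
        if alpha <= 0:
            continue  # the particle has vanished
        survivors.append({
            "x": p["x"] + rng.uniform(-jitter, jitter),
            "y": p["y"] + rng.uniform(-jitter, jitter),
            "alpha": alpha,
        })
    return survivors


if __name__ == "__main__":
    frame = [[0, 255, 255], [200, 10, 180]]  # stand-in for a camera frame
    particles = frame_to_particles(frame)
    steps = 0
    while particles:
        particles = dissipate(particles, fade=0.25)
        steps += 1
    print("all particles vanished after", steps, "steps")
```

Calling `frame_to_particles` on every new camera frame corresponds to the real-time version, while calling it once on a snapshot and then only running `dissipate` corresponds to the button-press version.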

* Question about vanish part: What needs to be discussed is whether we use real-time particle disappearance or have the user press a button to take a static picture and then disappear with the particles.

* Question about Object-blur part [Detail in Yixuan Yang’s blog]

* Question about fade part [Detail in Han Zou’s blog]

The solution I propose: in addition to interacting with the sound, the user presses a button to take a photo in real time, and the photo then fades. Real-time particles are then used for the disappearance during the vanish part.

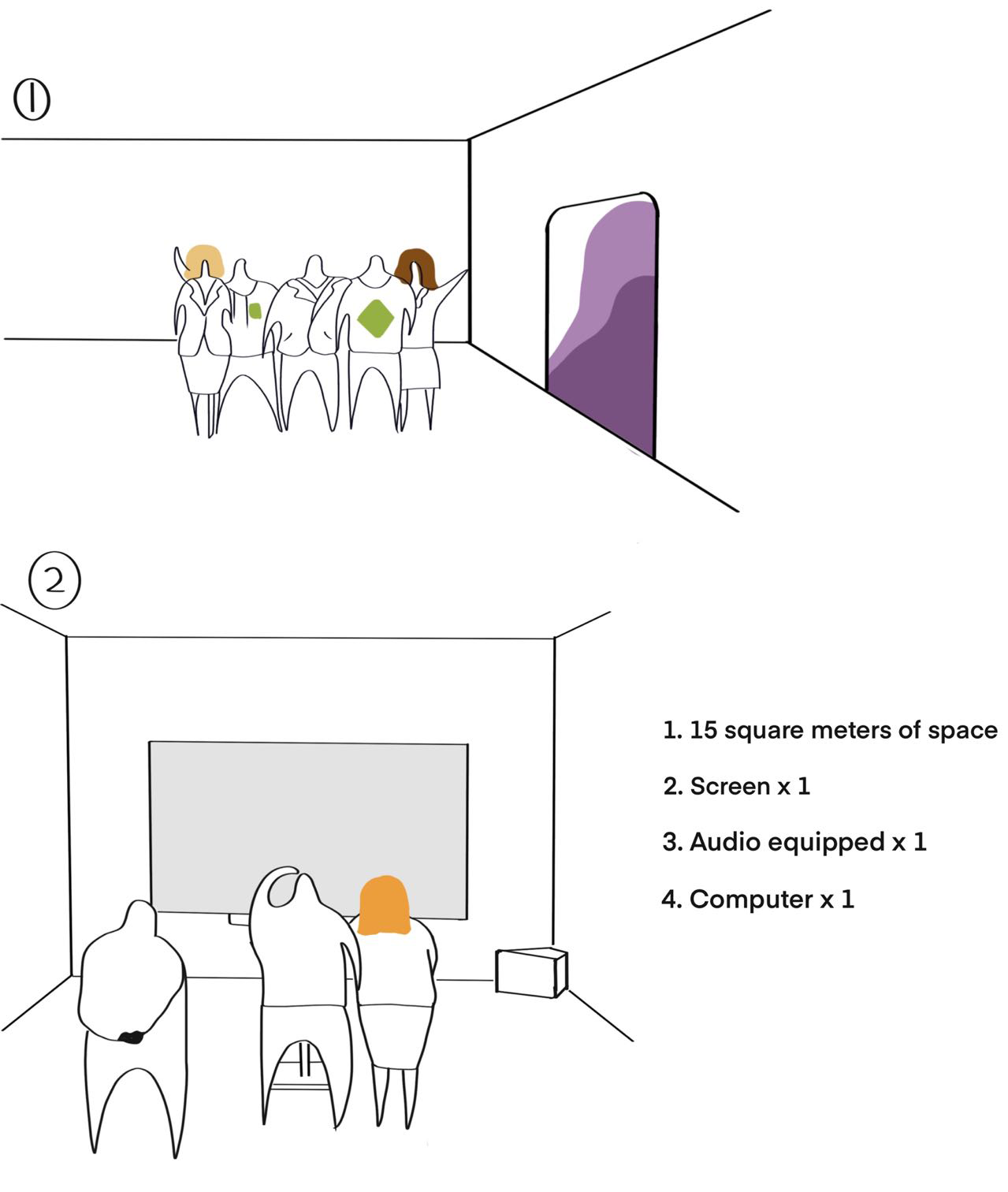

Where will it be shown/experienced: Q25 in ECA. For the exhibition, we will create a dark immersive space.

When: We expect to have all the music and visuals produced around the end of March and the exhibition around the beginning of April 🙂

What do you need:

Drawn by Jiayi Sun

Reference:

Artwork link: https://www.sothebys.com/en/articles/artificial-intelligence-and-the-art-of-mario-klingemann

StyleGAN2 Open Source Website: https://github.com/NVlabs/stylegan?tab=readme-ov-file

Process refers to the process of slowly forgetting as time passes.

Background: Alzheimer’s disease is a neurodegenerative disease that is common in the elderly, but most people do not have a deep understanding of it and even call it “dementia” and stay away from it. The memory of AD patients is as elusive as dust, so we could treat a patient’s memory as a speck of dust. We use music visualization to express the plight of Alzheimer’s patients at each stage of the disease, hoping to create empathy for people with Alzheimer’s disease.

Input: Interview several elderly people to learn their stories and the objects that are important to them. Using AI generation, unreal objects and scenes are slowly generated from real memories, hinting at the memory changes of Alzheimer’s patients. Users can watch the memory change through an interactive slider.

Output: An old object corresponds to a memory and a story, which slowly disappears under the influence of music.

Technology: TouchDesigner point cloud effect

Process refers to the traces that people leave behind when they travel to different places. Although people are barely aware of these traces, an attentive AI uses their mood and the scenery of the destination at the time to generate unique travel songs as surprises during the trip.

Background: When traveling, everyone wants to leave some special memories and traces, some record with images, some mark with feelings. If each person’s footprints can be recorded with the mood and scenery at that time, an M song or a rhythm belonging to travel can be generated. As the number of trips continues to increase, the user’s music library has more and more music and rhythms, which also represent different travel memories.

Input: Travel destination pictures, real-time mood (optional)

Output: Generate a rhythm (for a single destination) or a piece of music (if the destinations are very diverse) based on keywords such as destination and mood.

Process refers to the process of growth and activity.

Under the premise of the plant-dominated Cyberplanet hypothesis, Cyberbonsai are all self-aware and play different roles to maintain the operation of the Cyberplanet.

Background [Cyberplanet]: Many plants will be endangered in the future. Assuming the existence of a plant-dominated cyberworld, plants have their own division of labor (depending on sound) and color (depending on mood).

Input: Collect some endangered plant prototypes and model them, and finally use AI to simulate the growth process.

Output: The AI generates different kinds of cyberplants, which follow the music to generate self-awareness.

Process is when people are constantly browsing data and think they are getting closer to nature, but they are really just trapped in a data garden.

Background [Nature-deficit disorder]: Nature-deficit disorder is a phenomenon proposed by the American writer Richard Louv, namely the complete separation of modern urban children from nature. Some people have given their own explanation for it: “Some kind of desire for nature, or ignorance of nature, is caused by a lack of time to go outdoors, especially in the countryside.” In real life, the number of people with “nature-deficit disorder” has expanded from children to adults.

Input: Users browse or search image data for plants.

Output: Different plants composed of data